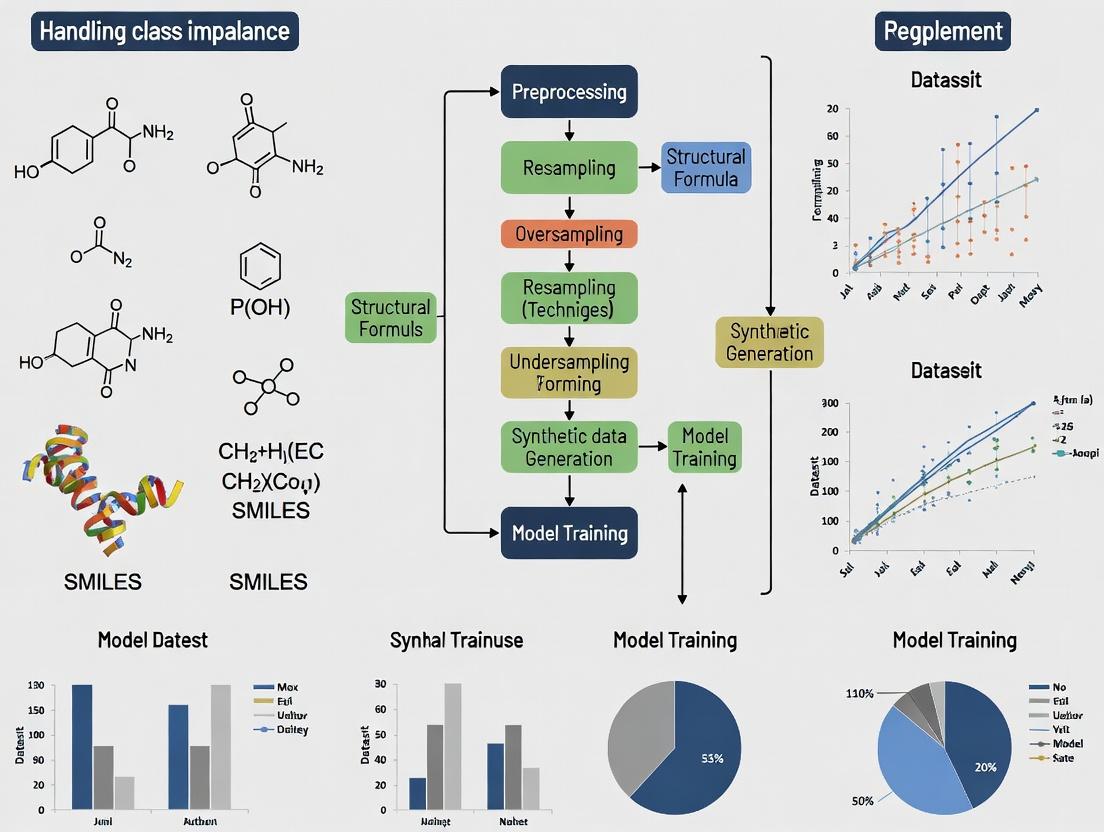

Beyond the Majority: Advanced Strategies for Handling Class Imbalance in Chemogenomic Drug Discovery Models

Abstract

This article provides a comprehensive guide for researchers and drug development professionals tackling the pervasive challenge of class imbalance in chemogenomic classification. We explore the fundamental causes and consequences of skewed datasets in drug-target interaction prediction. A detailed methodological review covers algorithmic, data-level, and cost-sensitive learning techniques tailored for biological data. The guide further addresses practical troubleshooting, performance metric selection, and model optimization. Finally, we present a framework for rigorous validation, benchmarking of state-of-the-art methods, and translating balanced model performance into credible preclinical insights, ultimately aiming to de-risk the early stages of drug discovery.

The Imbalance Problem in Chemogenomics: Why Your Drug-Target Data is Skewed and Why It Matters

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My chemogenomic model achieves >95% accuracy, but fails to predict any true positive interactions in validation. What is wrong? A: This is a classic symptom of extreme class imbalance where the model learns to always predict the majority class (non-interactions). Accuracy is a misleading metric here. Your dataset likely has a very low prevalence of positive interactions.

- Diagnostic Step: Check your class distribution. Calculate:

- Number of positive samples (e.g., known interactions).

- Number of negative samples (unknown or non-interacting pairs).

- Imbalance Ratio (IR) = (Number of Negative Samples) / (Number of Positive Samples).

- Solution: Switch to balanced evaluation metrics (see Q2), and counter the imbalance during training with resampling or cost-sensitive learning (see Q5) rather than relying on accuracy.
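The diagnostic step can be sketched as below. This assumes labels are stored as a 0/1 array (1 = known interaction). The function name `class_distribution` is illustrative only.

```python
import numpy as np

def class_distribution(labels):
    """Count positives/negatives and the Imbalance Ratio (IR = negatives / positives).

    Assumes binary labels: 1 = known interaction, 0 = unknown/non-interacting pair.
    """
    y = np.asarray(labels)
    n_pos = int((y == 1).sum())
    n_neg = int((y == 0).sum())
    ir = n_neg / n_pos if n_pos else float("inf")  # undefined IR if there are no positives
    return n_pos, n_neg, ir

# Toy example: 30 known interactions among 3,000 drug-target pairs
y = np.array([1] * 30 + [0] * 2970)
print(class_distribution(y))  # (30, 2970, 99.0)
```

An IR near 99 means a constant "no interaction" predictor already scores about 99% accuracy.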

Q2: What metrics should I use instead of accuracy to evaluate my imbalanced classification model? A: Use metrics that are robust to class imbalance. Report a suite of metrics from your confusion matrix (True Positives TP, False Positives FP, True Negatives TN, False Negatives FN).

| Metric | Formula | Focus | Ideal Value in Imbalance |
|---|---|---|---|
| Precision | TP / (TP + FP) | Reliability of positive predictions | High |
| Recall (Sensitivity) | TP / (TP + FN) | Coverage of actual positives | High |
| F1-Score | 2 * (Precision * Recall) / (Precision + Recall) | Harmonic mean of Precision & Recall | High |
| Matthews Correlation Coefficient (MCC) | (TP * TN - FP * FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN)) | Balanced measure for both classes | +1 |
| AUPRC | Area Under the Precision-Recall Curve | Precision-recall trade-off across probability thresholds | High; more informative than AUROC under imbalance |
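The formulas in this table can be checked directly from raw confusion-matrix counts. The sketch below uses toy counts that are assumptions, not data from any benchmark. Undefined ratios are set to 0.0 by convention.

```python
import math

def imbalance_metrics(tp, fp, tn, fn):
    """Precision, recall, F1 and MCC computed from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    # MCC uses all four cells, so a huge TN count cannot hide poor positive-class performance
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "mcc": mcc}

# Toy test set: 100 true interactions among 10,000 pairs
m = imbalance_metrics(tp=40, fp=60, tn=9840, fn=60)
accuracy = (40 + 9840) / 10_000
print(accuracy, {k: round(v, 3) for k, v in m.items()})
# 0.988 {'precision': 0.4, 'recall': 0.4, 'f1': 0.4, 'mcc': 0.394}
```

In this example, accuracy (98.8%) looks excellent while precision, recall, and MCC reveal mediocre performance on the interaction class.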

Q3: How prevalent is class imbalance in standard public DTI datasets? A: Extreme imbalance is the rule. Below is a summary of popular benchmark datasets.

| Dataset | Total Pairs | Positive Pairs | Negative Pairs | Imbalance Ratio (IR, Neg:Pos) | Key Characteristic |
|---|---|---|---|---|---|
| BindingDB (Curated) | ~40,000 | ~40,000 | 0 (requires generation) | Variable | Contains only positives. Negatives are "non-observed" and must be generated carefully. |
| BIOSNAP (ChChMiner) | 1,523,133 | 15,138 | 1,507,995 | ~100:1 | Non-interactions are random pairs, leading to severe artificial imbalance. |
| DrugBank Approved | 9,734 | 4,867 | 4,867 | 1:1 | Artificially balanced subset. Not representative of real-world prevalence. |
| Lenselink | 2,027,615 | 214,293 | 1,813,322 | ~8.5:1 | Comprehensive, but still exhibits significant imbalance. |

Q4: What is a standard protocol for generating a robust negative set for DTI data? A: Experimental Protocol 1: Generating "Putative Negatives" for DTI.

- Collect Known Positives: Gather confirmed interactions from credible sources (ChEMBL, BindingDB, IUPHAR).

- Define the Universe: List all unique drugs and targets present in your positive set.

- Generate All Possible Pairs: Create the Cartesian product (all possible combinations) of drugs and targets.

- Subtract Known Positives: Remove all known positive pairs from the universal set. The remainder are candidate negatives.

- Apply Biological Filtering (Critical): Remove pairs that are irrelevant or likely to be unobserved true interactions (false negatives):

- Remove drug-target pairs where the target is not in the relevant organism/proteome.

- Remove pairs where the drug's known therapeutic class is related to the target's pathway, since these are the pairs most likely to be real but untested interactions (requires manual curation or ontology matching).

- Finalize Set: The remaining pairs are "putative negatives." The IR is now defined and reflects a more realistic screening scenario.
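Protocol 1 can be sketched in plain Python as below. This is a minimal version under stated assumptions:

- Drug and target IDs are strings.
- `target_organism` is a hypothetical annotation mapping from target to organism.
- `exclude_pairs` stands in for whatever curation or ontology step flags likely false negatives.

```python
from itertools import product

def putative_negatives(positives, target_organism, keep_organism="Homo sapiens",
                       exclude_pairs=frozenset()):
    """Sketch of Protocol 1.

    positives: set of (drug_id, target_id) known interactions.
    target_organism: hypothetical dict target_id -> organism name.
    exclude_pairs: hypothetical set of pairs flagged by curation as likely false negatives.
    """
    drugs = sorted({d for d, _ in positives})    # define the universe from the positive set
    targets = sorted({t for _, t in positives})
    candidates = set(product(drugs, targets)) - set(positives)  # Cartesian product minus positives
    return {
        (d, t) for d, t in candidates
        if target_organism.get(t) == keep_organism  # organism/proteome filter
        and (d, t) not in exclude_pairs             # curated false-negative filter
    }

positives = {("D1", "T1"), ("D2", "T2"), ("D3", "T3")}
organism = {"T1": "Homo sapiens", "T2": "Homo sapiens", "T3": "Mus musculus"}
negs = putative_negatives(positives, organism, exclude_pairs={("D2", "T1")})
print(sorted(negs))  # [('D1', 'T2'), ('D3', 'T1'), ('D3', 'T2')]
```

For real drug and target universes, the Cartesian product can be very large. A streaming or database-side join may then be preferable to materializing the set in memory.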

Q5: In phenotypic screening, how does imbalance manifest and how can I address it? A: Phenotypic hits (e.g., active compounds in a cytotoxicity assay) are typically rare (often <1% hit rate). This creates extreme imbalance.

- Issue: A model predicting "inactive" for all compounds will be 99% accurate but useless.

- Solution:

- Use AUPRC as the primary metric.

- Apply strategic undersampling of the majority class during training to create mini-batches with a more manageable IR (e.g., 3:1 or 5:1). Never undersample your final test/validation set.

- Incorporate cost-sensitive learning where misclassifying a rare active compound is penalized more heavily than misclassifying an inactive.
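The strategic undersampling described above could look like the generator below. It is a sketch, assuming 0/1 labels and a NumPy random Generator that is reused across epochs. The name `undersampled_epoch` is hypothetical.

```python
import numpy as np

def undersampled_epoch(y, rng, neg_per_pos=3, batch_size=64):
    """Yield index batches for one training epoch with ~neg_per_pos negatives per positive.

    All positives are kept; a new random subset of negatives is drawn on every call,
    so different inactives are seen in different epochs instead of being discarded.
    Use on training data only; validation and test sets keep their natural imbalance.
    """
    y = np.asarray(y)
    pos = np.flatnonzero(y == 1)
    neg = np.flatnonzero(y == 0)
    n_neg = min(len(neg), neg_per_pos * len(pos))
    epoch_idx = np.concatenate([pos, rng.choice(neg, size=n_neg, replace=False)])
    rng.shuffle(epoch_idx)  # mix positives and negatives across mini-batches
    for start in range(0, len(epoch_idx), batch_size):
        yield epoch_idx[start:start + batch_size]

# Toy training labels with a 1% hit rate (assumption)
y_train = np.array([1] * 10 + [0] * 990)
rng = np.random.default_rng(0)
first_epoch = np.concatenate(list(undersampled_epoch(y_train, rng)))
print(len(first_epoch), int(y_train[first_epoch].sum()))  # 40 10
```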

Title: Workflow for Generating Putative Negative DTI Pairs

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Imbalance Research |
|---|---|
| Imbalanced-Learn (Python Library) | Provides implementations of SMOTE, ADASYN, Tomek links, and other resampling algorithms for strategic dataset balancing. |
| ChEMBL Database | A primary source for curated bioactivity data, used to build reliable positive interaction sets and understand assay background. |
| PubChem BioAssay | Source of phenotypic screening data; essential for understanding real-world hit rates and imbalance in activity datasets. |
| RDKit | Used to compute chemical descriptors/fingerprints; critical for ensuring chemical diversity when subsampling majority classes. |
| TensorFlow/PyTorch (with Weighted Loss) | Deep learning frameworks that allow implementation of weighted cross-entropy loss, a key cost-sensitive learning technique. |
| MCC (Metric Calculation Script) | A custom script to compute the Matthews Correlation Coefficient, as it is not always the default in ML libraries. |
| Custom Negative Set Generator | A tailored pipeline (as per Protocol 1) to create biologically relevant negative sets, moving beyond random pairing. |

Title: Choosing the Right Metrics for Imbalanced Model Evaluation

Technical Support Center: Troubleshooting Skewed Data in Chemogenomic Models

FAQs: Identifying and Addressing Data Skew

Q1: Our model shows high accuracy but fails to predict novel active compounds. What is the most likely root cause? A: This is a classic symptom of severe class imbalance where the model learns to always predict the majority class (inactives). Your model's "accuracy" is misleading. For a dataset with 99% inactives, a model that always predicts "inactive" will have 99% accuracy but 0% recall for actives. Prioritize metrics like Balanced Accuracy, Matthews Correlation Coefficient (MCC), or Area Under the Precision-Recall Curve (AUPRC) instead of raw accuracy.

Q2: Our high-throughput screening (HTS) yielded only 0.5% active compounds. How do we proceed without creating a biased model? A: A 0.5% hit rate is a common biological source of skew. Do not train a model on the raw dataset. Instead, implement strategic sampling during the training phase. The recommended protocol is to use Stratified Sampling for creating your test/hold-out set (to preserve the imbalance for realistic evaluation) and Combined Sampling (SMOTEENN) on the training set only to reduce imbalance for the model learner.

Q3: What are the critical experimental biases in biochemical assays that lead to skewed data? A: Key experimental biases include:

- Compound Interference: Compounds that fluoresce, quench fluorescence, or aggregate can create false negatives or positives.

- Edge Effects in Microplates: Systematic false readings from wells on plate edges.

- Concentration Range Bias: Testing only a narrow, non-physiological concentration range skews the "inactive" class.

- Target Bias: Over-representation of assays for well-studied protein families (e.g., kinases) vs. harder-to-drug targets.

Q4: How can we validate that our model has learned real structure-activity relationships and not just experimental noise? A: Implement a Cluster-Based Splitting protocol for validation. Instead of random splitting, split data so that structurally similar compounds are in the same set. This tests the model's ability to generalize to truly novel scaffolds. A model performing well on random splits but failing on cluster splits likely memorized assay artifacts.

Troubleshooting Guides

Issue: Model Performance Collapse on External Test Sets

| Symptom | Potential Root Cause | Diagnostic Check | Remedial Action |
|---|---|---|---|
| High AUROC, near-zero AUPRC | Extreme class imbalance | Plot Precision-Recall curve vs. ROC curve. | Use AUPRC as primary metric. Apply cost-sensitive learning or threshold moving. |
| Good recall, terrible precision | Artifacts in "active" class (e.g., aggregators) | Apply PAINS filters or perform promiscuity analysis. | Clean training data of nuisance compounds. Use experimental counterscreens. |
| Performance varies wildly by scaffold | Data skew across chemical space | Perform PCA/t-SNE; color by activity and assay batch. | Use cluster splitting for validation. Apply domain adaptation techniques. |
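Threshold moving, one of the remedies in the first row, can be sketched with scikit-learn's `precision_recall_curve`. The choice of maximizing F1 is an assumption; any objective that reflects your screening costs could be used. The threshold should be picked on a validation set, never on the final test set.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve

def best_f1_threshold(y_true, y_score):
    """Threshold moving: pick the probability cutoff that maximizes F1 on validation data."""
    precision, recall, thresholds = precision_recall_curve(y_true, y_score)
    # precision/recall have one more entry than thresholds (the final recall=0 point); drop it
    p, r = precision[:-1], recall[:-1]
    f1 = np.divide(2 * p * r, p + r, out=np.zeros_like(p), where=(p + r) > 0)
    i = int(np.argmax(f1))
    return float(thresholds[i]), float(f1[i])

# Toy validation scores: the default 0.5 cutoff would miss one of the two actives
y_val = [0, 0, 0, 0, 1, 1]
scores = [0.1, 0.2, 0.3, 0.35, 0.4, 0.8]
print(best_f1_threshold(y_val, scores))  # (0.4, 1.0)
```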

Issue: Biological Replicate Variability Causing Label Noise

| Metric | Replicate 1 vs. 2 | Replicate 1 vs. 3 | Action Threshold |
|---|---|---|---|
| Pearson Correlation | 0.85 | 0.78 | If < 0.7, investigate assay conditions. |
| Active Call Concordance | 92% | 88% | If < 85%, data is too noisy for reliable modeling. |
| Z'-Factor | 0.6 | 0.4 | If < 0.5, assay is not robust for screening. |
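The three checks in this table could be computed as sketched below. Z' is computed with the standard formula 1 - 3(σ_pos + σ_neg) / |μ_pos - μ_neg| from control wells. The function names and toy control values are assumptions.

```python
import numpy as np

def z_prime(pos_ctrl, neg_ctrl):
    """Z'-factor: 1 - 3 * (sd_pos + sd_neg) / |mean_pos - mean_neg| from control wells."""
    pos = np.asarray(pos_ctrl, dtype=float)
    neg = np.asarray(neg_ctrl, dtype=float)
    return 1.0 - 3.0 * (pos.std(ddof=1) + neg.std(ddof=1)) / abs(pos.mean() - neg.mean())

def replicate_pearson(values_a, values_b):
    """Pearson correlation of continuous readouts between two replicates."""
    return float(np.corrcoef(values_a, values_b)[0, 1])

def call_concordance(calls_a, calls_b):
    """Fraction of compounds given the same active/inactive call in both replicates."""
    a = np.asarray(calls_a, dtype=bool)
    b = np.asarray(calls_b, dtype=bool)
    return float((a == b).mean())

def qc_flags(pearson, concordance, zp):
    """Apply the action thresholds listed in the table above."""
    return {
        "investigate_assay": pearson < 0.7,
        "too_noisy_to_model": concordance < 0.85,
        "assay_not_robust": zp < 0.5,
    }

zp = z_prime([100, 102, 98, 100], [10, 12, 8, 10])  # toy control-well signals
print(round(zp, 3))                                  # 0.891
print(qc_flags(0.78, 0.88, 0.4))  # replicate 1 vs. 3 values: only the Z' check fails
```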

Protocol 1: Cluster-Based Data Splitting for Rigorous Validation

- Input: Standardized SMILES strings for all screened compounds.

- Generate Descriptors: Calculate ECFP4 fingerprints (2048 bits, radius 2).

- Cluster: Apply Butina clustering (using RDKit) with a Tanimoto similarity cutoff of 0.35. RDKit's Butina implementation expects the equivalent distance threshold, 1 - 0.35 = 0.65.

- Split: Assign all molecules within a single cluster to the same subset (train, validation, or test). Use a 60/20/20 ratio at the cluster level.

- Train/Validate: Train models on the training clusters. This ensures the model is tested on structurally distinct scaffolds.
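Protocol 1 can be sketched with RDKit as below, under three assumptions:

- ECFP4 is approximated by Morgan fingerprints with radius 2 from `rdFingerprintGenerator`.
- The input SMILES are already standardized.
- "60/20/20 at the cluster level" is read as moving whole clusters until each subset holds roughly 60/20/20% of the molecules.

The helper `split_by_cluster` is illustrative, not part of RDKit.

```python
from rdkit import Chem, DataStructs
from rdkit.Chem import rdFingerprintGenerator
from rdkit.ML.Cluster import Butina

def butina_clusters(smiles, sim_cutoff=0.35, radius=2, n_bits=2048):
    """Butina clustering on Morgan (ECFP4-like) fingerprints.

    Butina.ClusterData takes a distance threshold, so similarity 0.35 -> distance 0.65.
    """
    gen = rdFingerprintGenerator.GetMorganGenerator(radius=radius, fpSize=n_bits)
    fps = [gen.GetFingerprint(Chem.MolFromSmiles(s)) for s in smiles]
    dists = []  # lower-triangle distance matrix, row by row, as ClusterData expects
    for i in range(1, len(fps)):
        sims = DataStructs.BulkTanimotoSimilarity(fps[i], fps[:i])
        dists.extend(1.0 - s for s in sims)
    return Butina.ClusterData(dists, len(fps), 1.0 - sim_cutoff, isDistData=True)

def split_by_cluster(clusters, fractions=(0.6, 0.2, 0.2)):
    """Assign whole clusters to train/valid/test, largest clusters first."""
    n_total = sum(len(c) for c in clusters)
    targets = [f * n_total for f in fractions]
    splits = [[], [], []]
    for cluster in sorted(clusters, key=len, reverse=True):
        # place the cluster in the subset that is furthest below its target size
        k = max(range(3), key=lambda j: targets[j] - len(splits[j]))
        splits[k].extend(cluster)
    return splits  # molecule indices for train, valid, test

smiles = ["CCO", "CCCO", "CCCCO", "c1ccccc1", "c1ccccc1C",
          "c1ccccc1CC", "CC(=O)O", "CC(=O)OC", "N", "O"]
clusters = butina_clusters(smiles)
train_idx, valid_idx, test_idx = split_by_cluster(clusters)
```

No molecule index appears in more than one subset, so near-duplicate scaffolds cannot leak from training into validation or test.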

Protocol 2: Combined Sampling (SMOTEENN) for Training Set Rebalancing

Warning: Apply only to the training set after creating a hold-out test set.

- Input: Training set feature matrix (X_train) and labels (y_train).

- Synthetic Oversampling: Apply SMOTE (Synthetic Minority Over-sampling Technique) with the imbalanced-learn default k_neighbors=5. Each synthetic example is created at a random point on the line between a minority instance and one of its k nearest minority-class neighbors.

- Edited Undersampling: Apply ENN (Edited Nearest Neighbors). Remove any instance (majority or minority) whose class label differs from at least two of its three nearest neighbors.

- Output: A new, less-imbalanced training set (X_train_resampled, y_train_resampled) with cleaned decision boundaries.

Signaling Pathway Diagram: Assay Interference Leading to Skewed Labels

Title: How Compound Interference Creates Skewed Assay Data

Experimental Workflow Diagram: Mitigating Skew from HTS to Model

Title: Workflow to Manage Class Imbalance in Drug Discovery

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function | Role in Mitigating Skew |
|---|---|---|
| Triton X-100 | Non-ionic detergent. | Reduces false positives from compound aggregation by disrupting colloidal aggregates. |
| Split NanoLuc luciferase reporters (NanoBiT, HiBiT) | Enzyme fragment complementation reporters. | Provides a highly sensitive, low-background assay readout, reducing false negatives. |
| BSA (Fatty Acid-Free) | Protein stabilizer. | Minimizes non-specific compound binding, reducing false negatives for lipophilic compounds. |
| DTT/TCEP | Reducing agents. | Maintains target protein redox state, ensuring consistent activity and reducing assay noise. |
| Control Compound Plates (e.g., LOPAC) | Libraries of pharmacologically active compounds. | Used for per-plate QC (Z'-factor), identifying systematic positional bias. |
| qHTS Concentration Series | Testing compounds at multiple concentrations (e.g., 7 points). | Prevents concentration-range bias; generates rich dose-response data instead of binary labels. |

Technical Support Center: Troubleshooting Chemogenomic Models

Troubleshooting Guides & FAQs

Q1: My binary classifier for active vs. inactive compounds achieves 98% accuracy, but it fails to identify any true actives in new validation screens. What is wrong? A: This is a classic symptom of severe class imbalance. If your inactive class constitutes 98% of the data, a model can achieve 98% accuracy by simply predicting "inactive" for every sample. The metric is misleading.

- Solution: Immediately stop using accuracy. Switch to balanced metrics:

- Calculate Precision-Recall AUC or Average Precision (AP).

- Examine the Confusion Matrix directly.

- Use the Balanced Accuracy or Matthews Correlation Coefficient (MCC).

- Protocol - Diagnostic Confusion Matrix:

- On your held-out test set, generate prediction probabilities.

- Apply a threshold (start at 0.5).

- Tabulate: True Positives (TP), False Positives (FP), True Negatives (TN), False Negatives (FN).

- If TP is zero or very low (with FN correspondingly high), your model is not learning the minority class.
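The diagnostic protocol can be reproduced on a toy test set, assuming 2% actives to mirror the 98% example. The degenerate "model" that outputs a constant low probability is an assumption made to show the accuracy paradox.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

y_true = np.array([1] * 20 + [0] * 980)   # 2% actives
y_proba = np.full(len(y_true), 0.1)       # degenerate model: always a low activity score

y_pred = (y_proba >= 0.5).astype(int)     # start with a 0.5 threshold
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
print(accuracy_score(y_true, y_pred))     # 0.98
print(tp, fn, fp, tn)                     # 0 20 0 980
if tp == 0:
    print("TP = 0: the model is not learning the minority class")
```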

Q2: After applying SMOTE to balance my dataset, my model's cross-validation performance looks great, but it generalizes poorly to external test data. Why? A: Synthetic Minority Over-sampling Technique (SMOTE) can create unrealistic synthetic samples, especially in high-dimensional chemogenomic feature space, leading to overfitting and over-optimistic CV scores.

- Solution: Implement more rigorous validation and consider alternative methods.

- Use a Strict Train-Validation-Test Split: Ensure no data leakage. The test set must never be seen during SMOTE or training.

- Apply SMOTE only on the training fold within cross-validation, never on the entire dataset before splitting.

- Consider alternative techniques: Cost-sensitive learning, under-sampling the majority class (if data is sufficient), or using algorithms like XGBoost with the scale_pos_weight parameter.

Q3: I cannot reproduce a published model's performance on my own, imbalanced dataset. Where should I start debugging? A: Reproducibility failure often stems from unreported handling of class imbalance.

- Solution - Reproduction Protocol:

- Contact Authors: Request the exact, curated dataset and splitting strategy.

- Audit Metrics: Determine if the published metric (e.g., ROC-AUC) is robust to imbalance for that specific data distribution.

- Replicate Sampling: If they used a sampling technique (e.g., Random Under-Sampling), the random seed is critical. Attempt to match it.

- Hyperparameter Search: Many models have class-weight parameters (e.g., class_weight='balanced' in scikit-learn). These are often key but under-reported.
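When auditing a published model, it helps to know what class_weight='balanced' actually does. scikit-learn assigns each class the weight n_samples / (n_classes * n_samples_in_class). The sketch below uses a toy 9:1 label vector.

```python
import numpy as np
from sklearn.utils.class_weight import compute_class_weight

y = np.array([0] * 900 + [1] * 100)  # toy 9:1 imbalance
weights = compute_class_weight(class_weight="balanced", classes=np.array([0, 1]), y=y)
print(weights)  # approximately [0.556, 5.0]
```

The ratio of the two weights (9) equals the negative-to-positive ratio. That is the same quantity XGBoost's scale_pos_weight is usually set to.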

Q4: What is the best algorithm for imbalanced chemogenomic data? A: There is no single "best" algorithm. Performance depends on data size, dimensionality, and imbalance ratio. The key is to choose algorithms amenable to imbalance correction.

| Algorithm Class | Pros for Imbalance | Cons / Considerations | Typical Use Case |
|---|---|---|---|
| Tree-Based (RF, XGBoost) | Native cost-setting, handles non-linear data well. | Can still be biased if not weighted; prone to overfitting on noise. | Medium to large datasets, high-dimensional fingerprints. |
| Deep Neural Networks | Flexible with custom loss functions. | Requires very large data; hyperparameter tuning is complex. | Massive datasets (e.g., full molecular graphs). |
| Support Vector Machines | Effective in high-dim spaces with class weights. | Computationally heavy for very large datasets. | Smaller, high-dimensional genomic feature sets. |
| Logistic Regression | Simple, interpretable, easy to apply class weights. | Limited to linear decision boundaries unless kernelized. | Baseline model, lower-dimensional descriptors. |

- Protocol - Implementing Cost-Sensitive XGBoost:

- Compute the imbalance ratio: scale_pos_weight = number_of_negative_samples / number_of_positive_samples.

- Set this parameter in your XGBoost classifier.

- Use eval_metric='aucpr' (Area Under Precision-Recall Curve) for early stopping.

- Perform hyperparameter tuning (e.g., max_depth, learning_rate) using RandomizedSearchCV with stratification.

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Imbalance Research |
|---|---|
| Imbalanced-Learn (Python library) | Provides implementations of SMOTE, ADASYN, RandomUnderSampler, and ensemble samplers for systematic resampling experiments. |
| XGBoost / LightGBM | Gradient boosting frameworks with built-in parameters (scale_pos_weight, is_unbalance) to directly adjust for class imbalance during training. |
| Scikit-learn | Offers the class_weight parameter for many models and essential metrics like average_precision_score and balanced_accuracy_score, plus PrecisionRecallDisplay (which replaced the older plot_precision_recall_curve). |
| DeepChem | Provides tools for handling molecular datasets and can integrate with PyTorch/TensorFlow for custom weighted loss functions in deep learning models. |
| MCCV (Monte Carlo CV) | A validation strategy that repeats many random (ideally stratified) train/test splits; under severe imbalance it can estimate performance variance better than a single k-fold run. |
| PubChem BioAssay | A critical source for publicly available screening data where imbalance is the norm; used for benchmarking model robustness. |

Visualizations

Title: Robust Validation Workflow for Imbalanced Data

Title: Consequences of Ignoring Data Imbalance

Welcome to the Technical Support Center for Handling Class Imbalance in Chemogenomic Classification Models. This guide addresses common questions and troubleshooting issues related to evaluating model performance beyond simple accuracy.

Troubleshooting Guides & FAQs

Q1: My chemogenomic model for predicting compound-protein interactions has a 95% accuracy, but upon manual verification, it seems to be missing most of the true active interactions. What is happening?

A: This is a classic symptom of class imbalance, where one class (e.g., non-interacting pairs) vastly outnumbers the other (interacting pairs). A model can achieve high accuracy by simply predicting the majority class for all samples. You must use metrics that are sensitive to the performance on the minority class.

- Diagnosis: Relying solely on Accuracy.

- Solution: Calculate Recall (Sensitivity) for the "interaction" class. A low recall confirms the model is missing true positives. Immediately evaluate Precision, F1-Score, and AUPRC.

Q2: When evaluating my imbalanced kinase inhibitor screening model, Precision and Recall give me two very different stories. Which one should I prioritize for my drug discovery pipeline?

A: The priority depends on the cost of false positives vs. false negatives in your research phase.

- Early Screening (High-Throughput): Prioritize High Recall. The goal is to cast a wide net and not miss potential active compounds (minimize false negatives). Subsequent assays will filter out false positives.

- Lead Optimization/Candidate Selection: Prioritize High Precision. The cost of experimental validation is high, so you need high confidence that your predicted actives are real (minimize false positives).

Q3: I've implemented the F1-Score, but it still seems to give an overly optimistic view of my severely imbalanced toxicology prediction model. Is there a more robust metric?

A: Yes. The F1-Score is the harmonic mean of Precision and Recall, but it ignores true negatives entirely and changes depending on which class is labeled positive, so it can hide poor performance on the negative class. For a comprehensive single-value metric, use the Matthews Correlation Coefficient (MCC). It considers all four quadrants of the confusion matrix (TP, TN, FP, FN) and remains informative even with severe imbalance. An MCC value close to +1 indicates near-perfect prediction.

Q4: The AUROC (Area Under the ROC Curve) for my model is high (~0.85), but the precision-recall curve looks poor. Which one should I trust?

A: For imbalanced datasets common in chemogenomics (e.g., active vs. inactive compounds), trust the AUPRC (Area Under the Precision-Recall Curve). AUROC can be overly optimistic because the large number of true negatives inflates the score. AUPRC focuses solely on the performance regarding the positive (minority) class, making it a more informative metric for your use case.

Comparative Metrics Table

The following table summarizes key metrics beyond accuracy for imbalanced classification in chemogenomics.

| Metric | Formula | Focus | Ideal Value for Imbalance | Interpretation in Chemogenomics |
|---|---|---|---|---|
| Precision | TP / (TP + FP) | False Positives | Context-Dependent | Of all compounds predicted to bind a target, how many actually do? High precision means fewer wasted lab resources on false leads. |
| Recall (Sensitivity) | TP / (TP + FN) | False Negatives | High (if missing actives is costly) | Of all true binding compounds, how many did the model find? High recall means you're unlikely to miss a potential drug candidate. |
| F1-Score | 2 * (Precision * Recall) / (Precision + Recall) | Balance of P & R | > 0.7 (Contextual) | A single score balancing precision and recall. Useful for a quick, combined assessment when class balance is moderately skewed. |
| MCC | (TP * TN - FP * FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)) | All Confusion Matrix Cells | Close to +1 | A robust, correlation-based metric. Values between -1 and +1, where +1 is perfect prediction, 0 is random, and -1 is inverse prediction. Highly recommended for severe imbalance. |
| AUPRC | Area under the Precision-Recall curve | Positive Class Performance | Close to 1 | A widely recommended metric for imbalanced data. A value significantly higher than the baseline (fraction of positives) indicates a useful model. |

Experimental Protocol: Calculating Metrics for an Imbalanced Dataset

Objective: To rigorously evaluate a binary classifier predicting compound-protein interaction using a dataset where only 5% of pairs are known interactors (positive class).

Materials: A trained model, a held-out test set with known labels, a computing environment (e.g., Python with scikit-learn).

Procedure:

- Generate Predictions: Use the trained model to predict probabilities (y_pred_proba) and binary labels (y_pred) for the test set.

- Create Confusion Matrix: Tabulate True Positives (TP), True Negatives (TN), False Positives (FP), False Negatives (FN).

- Calculate Metrics:

- Precision, Recall, F1: Use sklearn.metrics.precision_score, recall_score, f1_score.

- MCC: Use sklearn.metrics.matthews_corrcoef.

- Generate Curves & Areas:

- ROC & AUROC: Calculate False Positive Rate (FPR) and True Positive Rate (TPR) across thresholds. Compute area using sklearn.metrics.roc_auc_score.

- Precision-Recall & AUPRC: Calculate precision and recall across thresholds. Compute area using sklearn.metrics.average_precision_score, or sklearn.metrics.auc applied to the output of precision_recall_curve.

- Visualize: Plot both ROC and Precision-Recall curves on the same page for comparative assessment.
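The procedure might be run end to end as sketched below. A random forest on synthetic data with about 5% positives stands in for "a trained model". Both are assumptions made only so the example runs. The curves can be drawn with RocCurveDisplay.from_predictions and PrecisionRecallDisplay.from_predictions (not shown).

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import (average_precision_score, confusion_matrix, f1_score,
                             matthews_corrcoef, precision_score, recall_score,
                             roc_auc_score)
from sklearn.model_selection import train_test_split

# Synthetic stand-in: ~5% interacting pairs, as in the protocol's objective
X, y = make_classification(n_samples=4000, n_features=40, weights=[0.95], random_state=2)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, stratify=y, random_state=2)
model = RandomForestClassifier(n_estimators=200, class_weight="balanced",
                               random_state=2).fit(X_tr, y_tr)

y_pred_proba = model.predict_proba(X_te)[:, 1]   # step 1: probabilities...
y_pred = (y_pred_proba >= 0.5).astype(int)       # ...and binary labels
tn, fp, fn, tp = confusion_matrix(y_te, y_pred, labels=[0, 1]).ravel()  # step 2

report = {                                        # steps 3-4
    "precision": precision_score(y_te, y_pred, zero_division=0),
    "recall": recall_score(y_te, y_pred),
    "f1": f1_score(y_te, y_pred, zero_division=0),
    "mcc": matthews_corrcoef(y_te, y_pred),
    "auroc": roc_auc_score(y_te, y_pred_proba),
    "auprc": average_precision_score(y_te, y_pred_proba),
    "auprc_baseline": y_te.mean(),  # a random ranking scores about the positive fraction
}
for name, value in report.items():
    print(f"{name:>15}: {value:.3f}")
```

Comparing auprc with auprc_baseline, rather than with 0.5, is what makes the AUPRC interpretable under imbalance.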

Metric Selection Decision Pathway

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Imbalance Research |
|---|---|
| scikit-learn library | Primary Python toolkit for computing all metrics (precision_recall_curve, classification_report, matthews_corrcoef). |
| imbalanced-learn library | Provides advanced resampling techniques (SMOTE, Tomek Links) to rebalance training sets before modeling. |
| Precision-Recall Curve Plot | Critical visualization to diagnose performance on the minority class and compare models. AUROC should not be the sole curve. |
| Cost-Sensitive Learning | A modeling approach (e.g., class_weight in sklearn) that assigns a higher penalty to misclassifying the minority class during training. |
| Stratified Sampling | A data splitting method (e.g., StratifiedKFold) that preserves the class imbalance ratio in training/validation/test sets, ensuring representative evaluation. |
| MCC Calculator | A dedicated function or online calculator to verify the Matthews Correlation Coefficient, ensuring correct interpretation of model quality. |

Troubleshooting Guides & FAQs

Q1: I've extracted a target dataset from ChEMBL (e.g., Kinases). My model performs with 99% accuracy but fails completely on external validation. What is the most likely cause? A: This is a classic symptom of severe class imbalance and dataset bias. Public repositories often have thousands of confirmed active compounds (positive class) for popular targets like kinases, but very few confirmed inactives (negative class). Models may learn to predict "active" for everything, exploiting the imbalance. The high accuracy is misleading. Solution: Implement rigorous negative sampling strategies, such as using assumed inactives from unrelated targets or applying cheminformatic filters to generate putative negatives, followed by careful external benchmarking.

Q2: When querying BindingDB for a specific protein, I get hundreds of active compounds with Ki values, but how do I construct a reliable negative set for a balanced classification task? A: Reliable negative set construction is a central challenge. Do not use random compounds from other targets, as they may be unknown actives. Recommended protocol:

- Collect Actives: Retrieve all compounds with binding measurements (Ki, IC50 ≤ 10 µM) for your target.

- Generate Candidate Negatives: Use a set of compounds tested against distantly related targets (e.g., GPCRs if your target is a protease) from the same repository. Ensure no overlap with your actives.

- Apply Similarity Filter: Remove any candidate negative whose molecular fingerprint (e.g., ECFP4) Tanimoto similarity exceeds 0.4-0.5 to any known active. This reduces the risk of latent actives.

- Validate Chemospace: Use PCA or t-SNE to visually confirm separation between active and negative sets.
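The similarity filter in step 3 can be sketched in plain Python below. It assumes fingerprints are already computed (e.g., ECFP4 with RDKit) and stored as sets of on-bit indices keyed by compound ID. That data layout, the 0.45 default, and the helper names are assumptions. For large libraries, RDKit's DataStructs.BulkTanimotoSimilarity would be much faster.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    if not a and not b:
        return 0.0
    inter = len(a & b)
    return inter / (len(a) + len(b) - inter)

def filter_candidate_negatives(candidates, actives, max_sim=0.45):
    """Keep candidates whose similarity to every known active is <= max_sim.

    candidates, actives: dict compound_id -> set of fingerprint on-bits (assumed layout).
    """
    active_fps = list(actives.values())
    return {
        cid: fp for cid, fp in candidates.items()
        if all(tanimoto(fp, afp) <= max_sim for afp in active_fps)
    }

actives = {"A1": {1, 2, 3, 4}}
candidates = {"C1": {1, 2, 3, 5}, "C2": {7, 8, 9}}
print(sorted(filter_candidate_negatives(candidates, actives)))  # ['C2']
```

In this toy case, C1 shares 3 of 5 distinct bits with A1 (similarity 0.6), so it is treated as a possible latent active and removed.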

Q3: My dataset from a public repository is imbalanced (10:1 active:inactive ratio). What algorithmic techniques should I prioritize to mitigate this? A: A combination of data-level and algorithm-level techniques is best. Start with:

- Data-Level: Undersample the majority class (actives) cautiously, or use synthetic oversampling (SMOTE) in the chemical descriptor space, though the latter can lead to overgeneralization.

- Algorithm-Level: Use tree-based models (e.g., Random Forest, XGBoost) that can handle imbalance better via class weighting. Set class_weight='balanced' in scikit-learn or scale_pos_weight in XGBoost.

- Evaluation: Immediately stop using accuracy. Use metrics robust to imbalance: Precision-Recall AUC (PR-AUC), Matthews Correlation Coefficient (MCC), and Balanced Accuracy. Generate confusion matrices for all validation steps.

Q4: Are there specific target classes in ChEMBL/BindingDB known to have extreme imbalance that I should be aware of? A: Yes. Analysis reveals consistent patterns. The table below summarizes imbalance ratios for common target classes.

Table 1: Class Imbalance Ratios in Public Repositories (Illustrative Example)

| Target Class (ChEMBL) | Approx. Active Compounds | Reported Inactive/Decoy Compounds | Estimated Imbalance Ratio (Active:Inactive) | Primary Risk |
|---|---|---|---|---|
| Kinases | ~500,000 | ~50,000 (curated) | 10:1 | High false positive rate in screening. |
| GPCRs (Class A) | ~350,000 | ~30,000 | >10:1 | Model learns family-specific features, not binding. |
| Nuclear Receptors | ~80,000 | < 5,000 | >15:1 | Extreme overfitting to limited chemotypes. |
| Ion Channels | ~120,000 | ~15,000 | 8:1 | Difficulty generalizing to novel scaffolds. |

Note: These figures are illustrative based on common extraction queries. Actual ratios depend on specific filtering criteria.

Q5: What is a detailed experimental protocol for creating a balanced chemogenomic dataset from ChEMBL for a kinase inhibition model? A: Protocol: Curating a Balanced Kinase Inhibitor Dataset

1. Data Retrieval (ChEMBL via API or web):

- Actives: Query target_chembl_id for a specific kinase (e.g., CHEMBL203 for EGFR). Retrieve compounds with standard_type = 'IC50' or 'Ki', standard_relation = '=', and standard_value ≤ 10000 nM. Apply a threshold (e.g., ≤ 100 nM) to define 'Active'.

- Putative Inactives: Query a structurally distant kinase (e.g., a MAP kinase; confirm its target_chembl_id in ChEMBL before querying). Collect compounds tested with standard_value ≥ 10000 nM OR activity_comment = 'Inactive'. This forms your candidate negative pool.

2. Data Curation & Deduplication:

- Standardize molecules (RDKit: Remove salts, neutralize, generate canonical SMILES).

- Remove duplicates by InChIKey.

- Apply Lipinski's Rule of Five filters to remain in drug-like space.

3. Negative Set Refinement (Critical Step):

- Compute ECFP4 fingerprints for all actives and candidate inactives.

- Calculate pairwise Tanimoto similarity matrix.

- Filter out any candidate inactive that is >0.45 similar to any active. This yields your final "Clean Negative" set.

4. Final Dataset Assembly:

- Randomly sample from the larger class (usually actives) to match the size of the smaller class.

- Split into Train/Validation/Test sets using Stratified Splitting to preserve ratio.
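Labeling (step 1) and final assembly (step 4) might be written with pandas as below. This assumes the activity records are already in DataFrames that use the ChEMBL field names from step 1 (standard_type, standard_relation, standard_value in nM). It also assumes an inchikey column produced during curation. The helper names and the toy records are hypothetical.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

def select_actives(df, cutoff_nm=100.0):
    """Step 1: exact IC50/Ki measurements at or below cutoff_nm (subsumes the 10 µM filter)."""
    exact = df["standard_type"].isin(["IC50", "Ki"]) & (df["standard_relation"] == "=")
    return df[exact & (df["standard_value"] <= cutoff_nm)].drop_duplicates("inchikey")

def assemble_balanced(actives, negatives, seed=0):
    """Step 4: downsample the larger class to the smaller one, then stratified 60/20/20 split."""
    negatives = negatives[~negatives["inchikey"].isin(actives["inchikey"])]  # no overlap
    n = min(len(actives), len(negatives))
    data = pd.concat([
        actives.sample(n=n, random_state=seed).assign(label=1),
        negatives.sample(n=n, random_state=seed).assign(label=0),
    ], ignore_index=True)
    train, test = train_test_split(data, test_size=0.2, stratify=data["label"], random_state=seed)
    train, valid = train_test_split(train, test_size=0.25, stratify=train["label"], random_state=seed)
    return train, valid, test

# Toy records (hypothetical): 30 exact IC50s from 10 to 300 nM plus one censored value
raw = pd.DataFrame({
    "standard_type": ["IC50"] * 31,
    "standard_relation": ["="] * 30 + [">"],
    "standard_value": [10.0 * i for i in range(1, 31)] + [50.0],
    "inchikey": [f"A{i}" for i in range(31)],
})
clean_negatives = pd.DataFrame({"inchikey": [f"N{i}" for i in range(50)]})
train, valid, test = assemble_balanced(select_actives(raw), clean_negatives)
print(len(train), len(valid), len(test))  # 12 4 4
```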

Visualization: Workflow for Balanced Dataset Creation

Diagram Title: Balanced Chemogenomic Dataset Creation Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Tools for Handling Repository Imbalance

| Item / Tool | Function in Imbalance Research | Example / Note |
|---|---|---|
| ChEMBL Web API / RDKit | Programmatic data retrieval and molecular standardization. | Enables reproducible, large-scale dataset construction. |
| ECFP4 / Morgan Fingerprints | Molecular representation for similarity filtering. | Critical for removing latent actives from the negative set. |
| scikit-learn / imbalanced-learn | Implements SMOTE, ADASYN, and various undersamplers. | Use cautiously; synthetic data may not reflect chemical reality. |
| XGBoost / LightGBM | Gradient boosting frameworks with native class weighting. | scale_pos_weight parameter is key for imbalanced data. |
| Precision-Recall (PR) Curves | Evaluation metric robust to class imbalance. | More informative than ROC curves when classes are skewed. |
| Matthews Correlation Coefficient (MCC) | Single score summarizing confusion matrix for imbalance. | Ranges from -1 to +1; +1 is perfect prediction. |
| Chemical Clustering (Butina) | To ensure diversity when subsampling the majority class. | Prevents model from learning only the most common scaffold. |

Visualization: Model Evaluation Pathway for Imbalanced Data

Diagram Title: Evaluation Metrics for Imbalanced Models

A Toolkit for Balance: Data, Algorithm, and Hybrid Techniques for Chemogenomic Models

FAQ & Troubleshooting Guide

Q1: After applying SMOTE to my high-dimensional molecular feature set (e.g., 1024-bit Morgan fingerprints), my model's performance on the test set worsened significantly. What went wrong? A: This is a classic symptom of overfitting due to the "curse of dimensionality" and synthetic sample generation in irrelevant regions of the feature space. SMOTE generates samples along the line between minority class neighbors, but in high-dimensional space, distance metrics become less meaningful, and all points are nearly equidistant. This leads to the creation of unrealistic, noisy synthetic samples.

- Solution: Apply dimensionality reduction (e.g., UMAP, PCA) before oversampling, or use feature selection to identify the most informative descriptors. Alternatively, consider SMOTE-ENN (SMOTE followed by Edited Nearest Neighbours), which cleans the resampled dataset by removing any sample whose class differs from the majority of its three nearest neighbors (imbalanced-learn's default, `kind_sel="all"`, is stricter and removes a sample if any of its neighbors disagrees). This can remove both synthetic and original noisy samples.

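Q2: My molecular dataset is extremely imbalanced (1:100). ADASYN seems to create an excessive number of synthetic samples for certain subclusters, leading to poor model generalization. How can I control this? A: ADASYN allocates synthetic samples to each minority example in proportion to the fraction of majority-class examples among its k nearest neighbors, so the hardest-to-learn actives receive the most synthetic neighbors. In molecular data, small groups of actives embedded among inactives (e.g., around activity cliffs or in sparsely sampled chemotypes) can therefore be heavily overpopulated, and outliers are amplified as well.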

- Solution: Tune the `n_neighbors` parameter in ADASYN. A higher value considers a broader neighborhood, smoothing the per-sample difficulty estimates. You can also cap the generation ratio: instead of targeting a 1:1 balance, aim for a less aggressive ratio (e.g., 1:10) and combine it with a cost-sensitive learning algorithm.

Q3: When using random undersampling on my chemogenomic dataset, I am concerned about losing critical SAR (Structure-Activity Relationship) information from the majority class. Are there smarter undersampling techniques? A: Yes. Random removal is rarely optimal. Use NearMiss or Cluster Centroids.

- NearMiss-1 selects majority samples whose average distance to the three closest minority samples is the smallest, preserving the boundary.

- Cluster Centroids uses K-Means on the majority class and retains only the centroids, preserving the overall data distribution shape. This is particularly useful for compressing large, redundant libraries of inactive compounds.

Q4: For my assay data, which is best: SMOTE, ADASYN, or undersampling? A: The choice is data-dependent. See the comparative table below for a guideline.

Comparative Performance Table: Data-Level Methods on Molecular Datasets

| Method | Core Principle | Best For (Molecular Context) | Key Risk / Consideration | Typical Impact on Model |
|---|---|---|---|---|
| Random Undersampling | Randomly remove majority class samples. | Very large datasets where computational cost is the primary concern. Can be used in an ensemble (e.g., EasyEnsemble). | Loss of potentially useful SAR information; can remove critical inactive examples. | May increase recall, but often at a significant cost to precision. |
| Cluster Centroids | Undersample by retaining K-Means cluster centroids of the majority class. | Large, redundant compound libraries (e.g., vendor libraries) where the diversity of inactives should be preserved. | Computationally intensive; cluster quality depends on the distance metric and K. | Generally improves precision over random undersampling by keeping the distribution shape. |
| SMOTE | Generates synthetic minority samples via linear interpolation between k-nearest neighbors. | Moderately imbalanced datasets where the minority class forms coherent clusters in descriptor space. | Generation of noisy, unrealistic feature vectors in high-dimensional space; can cause overfitting. | Often improves recall; can degrade precision if noise is introduced. |
| ADASYN | Like SMOTE, but generates more samples where minority density is low (harder-to-learn areas). | Datasets where the decision boundary is highly complex and minority clusters are sparse. | Can over-amplify outliers and generate samples in ambiguous/overlapping regions. | Can improve recall for borderline/minority subclusters more than SMOTE. |
| SMOTE-ENN | Applies SMOTE, then cleans data using Edited Nearest Neighbours. | Noisy molecular datasets or those with significant class overlap. | Increases computational time; cleaning can sometimes be too aggressive. | Often improves both precision and recall compared with plain SMOTE. |

Experimental Protocol: Evaluating Sampling Strategies in a Chemogenomic Pipeline

Objective: To empirically determine the optimal data-level resampling strategy for building a classifier to predict compound activity against a target protein.

Materials & Reagents (The Scientist's Toolkit):

| Item | Function in Experiment |
|---|---|
| Molecular Dataset (e.g., from ChEMBL) | Contains SMILES strings and binary activity labels (Active/Inactive) for a specific target. The imbalance should be at least 1:10 (active:inactive). |
| RDKit (Python) | Used to compute molecular descriptors (e.g., Morgan fingerprints) from SMILES strings. |
| imbalanced-learn (Python library) | Provides implementations of SMOTE, ADASYN, NearMiss, ClusterCentroids, and SMOTE-ENN. |
| Scikit-learn | For train-test splitting, model building (e.g., Random Forest, XGBoost), and performance metrics. |
| UMAP | Optional dimensionality reduction tool for visualizing and potentially preprocessing high-dimensional fingerprint data before sampling. |
| Cross-Validation Scheme (Stratified K-Fold) | Ensures each fold maintains the original class distribution, critical for unbiased evaluation. |

Methodology:

- Data Preparation: Standardize the dataset. Convert SMILES to 2048-bit Morgan fingerprints (radius=2).

- Baseline Establishment: Split data into 80% train and 20% hold-out test set using stratified sampling. Train a model (e.g., Random Forest) on the unmodified training set. Evaluate on the test set using AUC-ROC and AUC-PR (primary metric for imbalance).

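- Resampling Trials: On the training set only, apply five different resampling techniques, each targeting a 1:2 (minority:majority) ratio (`sampling_strategy=0.5` in imbalanced-learn):

- Random Undersampling

- Cluster Centroids (at a 1:2 ratio, the number of retained majority centroids is 2 × the number of minority samples)

- SMOTE (`k_neighbors=5`)

- ADASYN (`n_neighbors=5`)

- SMOTE-ENN (SMOTE `k_neighbors=5`, ENN `n_neighbors=3`; the ENN cleaning step shifts the final ratio slightly)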

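- Model Training & Validation: For each resampling technique, train an identical Random Forest model. Use 5-fold Stratified Cross-Validation on the training set for hyperparameter tuning, fitting the resampler inside each fold on the training folds only, so that the validation folds contain only original, unresampled samples. Evaluate each final model on the original, untouched test set.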

- Analysis: Compare the AUC-PR and F1 scores across all methods. The method yielding the highest AUC-PR on the hold-out test set, without a severe drop in precision, is optimal for this specific target.

Diagram: Experimental Workflow for Sampling Strategy Evaluation

Troubleshooting Guides & FAQs

FAQ 1: My imbalanced chemogenomic dataset has >99% negative compounds. Which tree ensemble method should I start with, and why?

- Answer: For extreme imbalance (>99:1), start with BalancedRandomForest (`BalancedRandomForestClassifier` in imbalanced-learn). It is a variant of Random Forest in which each bootstrap sample is randomly undersampled to balance the class distribution. This prevents the model from being overwhelmed by the majority class (e.g., non-binders) during tree construction. Gradient Boosting Machines (GBM) like XGBoost, while powerful, can be more sensitive to hyperparameters under extreme imbalance and may require more careful tuning of the `scale_pos_weight` parameter.

FAQ 2: I've implemented a Cost-Sensitive Learning framework, but my model's recall for the active class is still unacceptably low. What are the key parameters to check?

- Answer: Low recall for the minority (active) class indicates that the cost of false negatives is still not high enough. First, verify your cost matrix: the penalty for misclassifying a minority instance as majority should be significantly larger than the reverse. For algorithms like XGBoost, ensure the `scale_pos_weight` parameter is set appropriately (e.g., `num_negative_samples / num_positive_samples`). For scikit-learn's `RandomForestClassifier`, use the `class_weight='balanced_subsample'` parameter. Recalibrate these weights incrementally.
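The weight arithmetic is easy to check by hand. This sketch (scikit-learn only, toy labels) shows that XGBoost's `scale_pos_weight = n_neg / n_pos` and scikit-learn's "balanced" weights encode the same relative penalty, and builds a few candidate cost settings for incremental recalibration; the multipliers are hypothetical values to scan, not recommendations.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.utils.class_weight import compute_class_weight

y = np.array([0] * 950 + [1] * 50)  # toy 19:1 label vector (1 = active)
n_neg, n_pos = np.bincount(y)

scale_pos_weight = n_neg / n_pos  # value to pass to XGBoost/LightGBM
print(scale_pos_weight)  # 19.0

# scikit-learn "balanced": n_samples / (n_classes * count_c) -> approx. 0.526 and 10.0
w = compute_class_weight(class_weight="balanced", classes=np.array([0, 1]), y=y)

# Incremental recalibration: scale the false-negative penalty up or down
multipliers = (0.5, 1.0, 2.0, 4.0)  # hypothetical grid
candidates = [
    RandomForestClassifier(n_estimators=200, random_state=0,
                           class_weight={0: 1.0, 1: m * scale_pos_weight})
    for m in multipliers
]
```

Each candidate would then be compared on validation recall and PR-AUC for the active class.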

FAQ 3: My ensemble model performs well on validation but fails on external test sets. Could this be a data leakage issue from the sampling method?

- Answer: Yes, this is a common pitfall. Never apply sampling techniques (like SMOTE or Random Undersampling) before splitting your data. This allows information from the "future" test set to leak into the training process, creating over-optimistic validation scores.

- Correct Protocol: Always split your data into training and hold-out test sets first. Apply any sampling or cost-sensitive adjustments only on the training fold during cross-validation. The final model evaluation must be performed on the pristine, unsampled test set.

FAQ 4: How do I choose between Synthetic Oversampling (e.g., SMOTE) and adjusting class weights in tree-based models?

- Answer: The choice depends on computational resources and data characteristics. See the comparison table below.

| Feature | Synthetic Oversampling (SMOTE + RF/XGBoost) | Class Weight / Cost-Sensitive Learning (RF/XGBoost) |
|---|---|---|
| Core Approach | Generates synthetic minority samples to balance dataset before training. | Increases the penalty for misclassifying minority samples during training. |
| Training Time | Higher (due to larger dataset). | Lower (original dataset size). |
| Risk of Overfitting | Moderate (if SMOTE generates unrealistic samples in high dimensions). | Lower (works on the original data distribution). |
| Best for | Smaller datasets where the absolute number of minority samples is very low. | Larger datasets or when computational efficiency is key. |
| Key Parameter | SMOTE `k_neighbors`, sampling strategy. | `class_weight`, `scale_pos_weight`, custom cost matrix. |

FAQ 5: Can you provide a standard experimental protocol for benchmarking these solutions?

- Answer: Yes. Follow this workflow for reproducible chemogenomic classification benchmarking.

Experimental Protocol: Benchmarking Imbalance Solutions

- Data Partitioning: Split the full chemogenomic dataset (e.g., compounds vs. protein targets) into 80% training and 20% held-out test set using stratified splitting to preserve the imbalance ratio.

- Baseline Training: On the unsampled training set, train three baseline models: a) Standard Random Forest (RF), b) Standard XGBoost, c) Logistic Regression. Use cross-validation.

- Intervention Training: Create four modified training sets/strategies from the original training data:

- SMOTE-RF: Apply SMOTE only to the training fold.

- BalancedRandomForest: Use the `BalancedRandomForestClassifier` algorithm.

- XGBoost-Cost: Train XGBoost with `scale_pos_weight`.

- RF-Weighted: Train RF with `class_weight='balanced_subsample'`.

- Validation: Evaluate all models using Stratified 5-Fold Cross-Validation on the training set. Use metrics: AUC-ROC, Average Precision (PR-AUC), F1-Score, and specifically Recall (Sensitivity) for the active/binding class.

- Final Evaluation: Retrain the best configuration from each method on the entire training set. Perform final evaluation on the untouched, unsampled held-out test set. Report all metrics.
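The validation step's metric set can be collected in one pass with scikit-learn's multi-metric `cross_validate`. This sketch covers only the scikit-learn models on placeholder data; the SMOTE-RF, BalancedRandomForest, and XGBoost-Cost entries would be added to the same `models` dictionary (SMOTE-RF as an imbalanced-learn pipeline so resampling stays inside each fold).

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate

X, y = make_classification(n_samples=2000, n_features=30, n_informative=8,
                           weights=[0.95], random_state=0)

scoring = {
    "roc_auc": "roc_auc",
    "pr_auc": "average_precision",
    "f1": "f1",
    "recall_active": "recall",  # recall of label 1, the active/binding class
}
models = {
    "LogReg": LogisticRegression(max_iter=2000),
    "RF": RandomForestClassifier(n_estimators=200, random_state=0, n_jobs=-1),
    "RF-Weighted": RandomForestClassifier(n_estimators=200, class_weight="balanced_subsample",
                                          random_state=0, n_jobs=-1),
}
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

summary = {}
for name, model in models.items():
    scores = cross_validate(model, X, y, cv=cv, scoring=scoring)
    summary[name] = {m: scores[f"test_{m}"].mean() for m in scoring}

for name, metrics in summary.items():
    print(name, {m: round(v, 3) for m, v in metrics.items()})
```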

Research Reagent Solutions: Key Computational Tools

| Item / Software | Function in Experiment |
|---|---|
| imbalanced-learn (scikit-learn-contrib) | Provides SMOTE, BalancedRandomForest, and other advanced resampling algorithms. |
| XGBoost or LightGBM | Efficient gradient boosting frameworks with built-in cost-sensitive parameters (scale_pos_weight). |
| scikit-learn | Core library for data splitting, standard models, metrics, and basic ensemble methods. |
| Bayesian Optimization (e.g., scikit-optimize) | For efficient hyperparameter tuning of complex ensembles, crucial for maximizing performance on imbalanced data. |
| Molecule Featurization Library (e.g., RDKit) | Converts chemical structures into numerical descriptors (ECFP, molecular weight) for model input. |

Visualization: Experimental Workflow for Imbalanced Chemogenomic Modeling

Visualization: Decision Logic for Choosing an Imbalance Solution

Technical Support & Troubleshooting Center

This support center is designed to assist researchers in implementing synthetic data generation techniques to address class imbalance in chemogenomic classification models. The FAQs and guides below address common technical hurdles.

Frequently Asked Questions (FAQs)

Q1: When using a GPT-based molecular language model for data augmentation, my generated SMILES strings are often invalid. What are the primary causes and fixes?

A: Invalid SMILES typically stem from the model's inability to learn fundamental chemical grammar. Troubleshooting steps:

- Pre-train on a Large, Canonical Dataset: Ensure your base model is pre-trained on a large corpus (e.g., 10M+ molecules from PubChem) using canonical SMILES representations.

- Fine-tune with a Constrained Vocabulary: Use a tokenizer restricted to common chemical symbols and parentheses. Implement a SMILES syntax checker as a post-generation filter.

- Adjust Sampling Temperature: A very high temperature (>1.2) increases randomness and invalid structures. Start with a lower temperature (0.7-0.9) for more conservative, rule-following generation.
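Temperature scaling divides the logits by T before the softmax, so T < 1 sharpens the next-token distribution toward the most likely (usually grammatical) tokens. A minimal NumPy sketch; the vocabulary and logit values are hypothetical stand-ins for one decoding step of a SMILES language model.

```python
import numpy as np

def sample_with_temperature(logits, temperature, rng):
    """Sample one token index after temperature scaling; also return the probabilities."""
    scaled = np.asarray(logits, dtype=float) / temperature
    scaled -= scaled.max()  # numerical stability before exponentiating
    probs = np.exp(scaled) / np.exp(scaled).sum()
    return int(rng.choice(len(probs), p=probs)), probs

VOCAB = ["C", "c", "(", ")", "1", "=", "O", "N"]  # hypothetical toy vocabulary
logits = np.array([2.0, 1.5, 0.5, 0.5, 0.3, 0.2, 1.0, 0.8])
rng = np.random.default_rng(0)

tok_cool, probs_cool = sample_with_temperature(logits, 0.8, rng)  # conservative
tok_hot, probs_hot = sample_with_temperature(logits, 1.5, rng)    # more random
print(VOCAB[tok_cool], probs_cool.max().round(3), probs_hot.max().round(3))
```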

Q2: My conditional VAE generates synthetic compounds for a rare target class, but they lack diversity (high similarity to each other). How can I improve diversity?

A: This is a classic mode collapse issue in generative models.

- Check the Latent Space: Ensure the Kullback–Leibler (KL) divergence loss weight in your VAE is not too high. A large weight pulls every approximate posterior toward the prior and can make the decoder ignore the latent code (posterior collapse), so different latent vectors decode to near-identical molecules. Gradually reduce the `beta` parameter (from 1.0 to ~0.01) to encourage a more spread-out latent space.

- Augment the Conditioning Input: Instead of using a simple one-hot vector for the rare class, condition the model on a combination of target fingerprint and a randomly sampled "intent" vector to promote variation.

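- Incorporate a Diversity Loss: Compute a pairwise distance (e.g., Tanimoto distance on molecular fingerprints) across each batch of generated molecules and maximize it during training. Because decoding to discrete molecules and fingerprinting are not differentiable, such a term is usually applied to latent vectors or used as a reward signal rather than backpropagated directly.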
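For reference, the role of `beta` is visible in the standard β-VAE objective written for a conditional model, where x is a molecule, c the class condition, and z the latent code. β = 1 recovers the usual conditional VAE loss; lowering β weakens the KL term that pulls each posterior toward the prior p(z):

$$
\mathcal{L}(\theta,\phi;x,c) = -\,\mathbb{E}_{q_\phi(z\mid x,c)}\big[\log p_\theta(x\mid z,c)\big] + \beta\, D_{\mathrm{KL}}\big(q_\phi(z\mid x,c)\,\|\,p(z)\big)
$$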

Q3: After augmenting my imbalanced dataset with synthetic samples, my model's performance on the validation set improved, but external test set performance dropped. What happened?

A: This indicates potential overfitting to the biases in your synthetic data generation process.

- Assess Synthetic Data Quality: Calculate key metrics (see Table 1) for your synthetic set versus the original rare class. A significant drift in properties suggests the generator is off-distribution.

- Implement a Filtering Pipeline: Use a rule-based or predictive filter (e.g., for drug-likeness, synthetic accessibility) before adding synthetic data to the training pool.

- Use a Staged Training Protocol: First, pre-train your classifier on the original, real data only (no synthetic samples). Then, fine-tune it on the augmented dataset for a limited number of epochs to prevent catastrophic forgetting of general features.

Q4: How do I quantitatively evaluate the quality and utility of synthetic molecular data before using it for model training?

A: Employ a multi-faceted evaluation framework. Key metrics to compute are summarized below:

Table 1: Quantitative Metrics for Synthetic Molecular Data Evaluation

| Metric Category | Specific Metric | Ideal Target Range | Calculation/Description |
|---|---|---|---|
| Validity | SMILES Validity Rate | >98% | Percentage of generated strings that parse into valid molecules (RDKit/Chemaxon). |
| Uniqueness | Unique Rate | >90% | Percentage of valid molecules that are not duplicates within the generated set. |
| Novelty | Novelty Rate | Context-dependent | Percentage of unique molecules not found in the reference training set. Can be 100% for pure generation. |
| Fidelity | Fréchet ChemNet Distance (FCD) | Lower is better | Measures distribution similarity between real and synthetic molecules using a pre-trained ChemNet. |
| Diversity | Internal Pairwise Similarity (Avg) | Lower is better (<0.5) | Mean Tanimoto similarity (using ECFP4) between all pairs in the synthetic set. |
| Property Match | Property Distribution (e.g., MW, LogP) p-value | >0.05 (Not Sig.) | Kolmogorov-Smirnov test p-value comparing distributions of key properties between real and synthetic sets. |
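Several of these metrics need only NumPy and SciPy once molecules are parsed. A minimal sketch, assuming the SMILES have already been validated and canonicalized (e.g., with RDKit) and fingerprints are 0/1 arrays; validity and FCD are not covered, and all inputs below are toy placeholders.

```python
import numpy as np
from scipy.stats import ks_2samp

def uniqueness(valid_smiles):
    """Fraction of valid (canonical) SMILES that are distinct within the generated set."""
    return len(set(valid_smiles)) / len(valid_smiles)

def novelty(valid_smiles, training_smiles):
    """Fraction of unique generated SMILES absent from the training set."""
    unique = set(valid_smiles)
    return len(unique - set(training_smiles)) / len(unique)

def mean_internal_similarity(fps):
    """Mean pairwise Tanimoto similarity of a 0/1 fingerprint matrix (n x n_bits)."""
    fps = np.asarray(fps, dtype=float)
    inter = fps @ fps.T
    counts = fps.sum(axis=1)
    union = counts[:, None] + counts[None, :] - inter
    sim = np.divide(inter, union, out=np.zeros_like(inter), where=union > 0)
    iu = np.triu_indices(len(sim), k=1)  # each unordered pair once, no self-pairs
    return sim[iu].mean()

generated = ["CCO", "CCO", "c1ccccc1", "CCN"]  # toy canonical SMILES
training = ["CCO"]
print(uniqueness(generated))         # 0.75
print(novelty(generated, training))  # 2/3
fps = np.array([[1, 1, 0, 0], [1, 0, 1, 0], [1, 1, 0, 0]])
print(mean_internal_similarity(fps))  # approx. 0.556

rng = np.random.default_rng(0)
real_mw, synth_mw = rng.normal(350, 60, 200), rng.normal(360, 70, 5000)  # placeholder MWs
p_value = ks_2samp(real_mw, synth_mw).pvalue
```

Note that a non-significant KS test does not prove the distributions match, and with thousands of synthetic molecules even small shifts become significant.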

Q5: My molecular language model generates molecules, but their predicted activity for the target is poor. How can I better guide generation toward active compounds?

A: You need to integrate activity prediction into the generation loop.

- Reinforcement Learning (RL) Fine-tuning: After initial training, fine-tune your generator using a policy gradient method (e.g., PPO) where the reward is the predicted pIC50 or pKi from a pre-trained activity predictor (oracle).

- Bayesian Optimization in Latent Space: For a VAE, use a Bayesian optimizer to search the latent space for points that maximize the predicted activity, then decode them.

- Transfer Learning with Conditional Generation: Re-train your generator's output layer conditioned on continuous activity scores or activity class labels (active/inactive) derived from your predictor.

Experimental Protocols

Protocol 1: Standardized Workflow for Augmenting a Rare Class using a Fine-Tuned Molecular Transformer

Objective: To generate 5,000 novel, valid, and diverse synthetic molecules for an under-represented kinase inhibitor class.

Materials (Research Reagent Solutions): Table 2: Essential Toolkit for Synthetic Data Generation Experiment

| Item | Function | Example/Supplier |
|---|---|---|
| Curated Dataset | Foundation for training and evaluation. Must include canonical SMILES and target labels. | ChEMBL, BindingDB |
| Pre-trained Model | Base generative model with learned chemical grammar. | MolGPT, Chemformer (Hugging Face) |
| Cheminformatics Toolkit | For processing, standardizing, and analyzing molecules. | RDKit (Open Source) |
| GPU Computing Resource | For efficient model training and inference. | NVIDIA V100/A100, Google Colab Pro |
| Activity Prediction Oracle | Pre-trained QSAR model to score generated molecules. | In-house Random Forest/CNN model |
| Evaluation Scripts | Custom Python scripts to compute metrics in Table 1. | Custom, using RDKit & NumPy |

Methodology:

- Data Preparation: Isolate all SMILES strings for the rare target class (e.g., Class Y, n=200). Canonicalize and remove duplicates. Split the remaining majority-class data: 80% for pre-training, 20% for validation.

- Model Fine-tuning: Load a pre-trained autoregressive molecular language model (e.g., MolGPT). Continue training it on the rare-class SMILES only for 10-20 epochs using its next-token (causal language modeling) objective, which is what allows token-by-token generation in the next step. Use a low learning rate (e.g., 1e-5) to avoid catastrophic forgetting.

- Conditional Generation: Use the fine-tuned model for inference. Prompt the model with a "[BOS]" token and generate molecules via nucleus sampling (top-p=0.9) until an "[EOS]" token is produced. Generate 50,000 SMILES strings.

- Post-processing & Filtering: Parse all outputs with RDKit. Filter for valid molecules. Remove any duplicates within the synthetic set and against the original 200 real molecules. Apply basic property filters (150 < MW < 600, LogP < 5).

- Evaluation & Selection: From the filtered pool, calculate metrics from Table 1 against the original 200 molecules. Use the FCD score and property p-values to ensure distributional match. Randomly select 5,000 molecules from the pool that passes these checks.

- Augmentation: Combine the original 200 real molecules with the 5,000 high-quality synthetic molecules to form the augmented rare class dataset for downstream classifier training.
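The nucleus (top-p = 0.9) sampling used in the generation step keeps only the smallest set of most-probable tokens whose cumulative probability reaches p, renormalizes, and samples from that set. A minimal NumPy sketch of one decoding step; the probability vector is a toy placeholder for the model's softmax output.

```python
import numpy as np

def top_p_filter(probs, top_p=0.9):
    """Zero out all but the smallest high-probability set reaching top_p, then renormalize."""
    probs = np.asarray(probs, dtype=float)
    order = np.argsort(probs)[::-1]                 # most probable first
    cumulative = np.cumsum(probs[order])
    n_keep = np.searchsorted(cumulative, top_p) + 1  # include the token that crosses top_p
    keep = order[:n_keep]
    filtered = np.zeros_like(probs)
    filtered[keep] = probs[keep]
    return filtered / filtered.sum()

probs = np.array([0.5, 0.3, 0.15, 0.05])  # toy next-token distribution
nucleus = top_p_filter(probs, top_p=0.9)  # the 0.05 tail token is dropped
rng = np.random.default_rng(0)
next_token = int(rng.choice(len(nucleus), p=nucleus))
```

Generation repeats this step, appending `next_token` to the sequence, until the end-of-sequence token is sampled.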

Protocol 2: Active Learning Loop with VAE and Bayesian Optimization

Objective: Iteratively generate and select synthetic molecules predicted to be highly active for a specific target.

Methodology:

- Initialization: Train a VAE (e.g., JT-VAE) on a broad drug-like chemical library. Train a separate random forest activity predictor on the available (imbalanced) labeled data.

- Latent Space Sampling: Encode the molecules from the rare active class into the VAE's latent space.

- Bayesian Optimization: Fit a Gaussian Process (GP) model to the latent space points, using their predicted activity as the target value.

- Generation: Use the GP to propose a new latent point `z*` that maximizes the expected improvement (EI) in predicted activity. Decode `z*` using the VAE decoder to produce a new molecule.

- Validation & Iteration: Validate the new molecule's properties. Add it to a candidate pool. Every N iterations (e.g., N=100), retrain the activity predictor with the expanded candidate pool (pseudo-labeled) and repeat from the Latent Space Sampling step.
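The Bayesian optimization step can be sketched with scikit-learn's Gaussian process and the closed-form expected improvement. This is a minimal illustration under stated assumptions: the latent vectors and "predicted activity" are synthetic placeholders, and `z*` is chosen from random candidate points rather than by optimizing the acquisition function directly. Decoding `z*` with the VAE is not shown.

```python
import numpy as np
from scipy.stats import norm
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern, WhiteKernel

def expected_improvement(mu, sigma, best, xi=0.01):
    """EI for maximization: E[max(f - best - xi, 0)] under a Gaussian posterior."""
    sigma = np.maximum(sigma, 1e-12)
    improvement = mu - best - xi
    z = improvement / sigma
    return improvement * norm.cdf(z) + sigma * norm.pdf(z)

rng = np.random.default_rng(0)
Z = rng.normal(size=(40, 8))                   # placeholder encoded actives (latent dim 8)
activity = -np.sum((Z - 0.5) ** 2, axis=1)     # placeholder predicted activity

gp = GaussianProcessRegressor(kernel=Matern(nu=2.5) + WhiteKernel(),
                              normalize_y=True, random_state=0)
gp.fit(Z, activity)

candidates = rng.normal(size=(2000, 8))        # random proposals in latent space
mu, sd = gp.predict(candidates, return_std=True)
ei = expected_improvement(mu, sd, best=activity.max())
z_star = candidates[np.argmax(ei)]             # point to decode into a new molecule
```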

Workflow & Pathway Visualizations

Title: Synthetic Data Augmentation Workflow for Class Imbalance

Title: RL Fine-Tuning for Activity-Guided Generation

Implementing Weighted Loss Functions in Deep Learning Architectures for Molecular Representation

Troubleshooting Guides & FAQs

Q1: I've implemented a weighted binary cross-entropy loss for my imbalanced chemogenomic dataset, but my model's predictions are skewed heavily towards the minority class. What could be wrong?

A: This is often due to incorrect weight calculation or application. The loss weight for a class is typically inversely proportional to its frequency. For binary classification, the weight for class i is often computed as total_samples / (num_classes * count_of_class_i). Ensure you are applying the weight tensor correctly to the loss function. In PyTorch, using pos_weight in nn.BCEWithLogitsLoss requires a weight for the positive class only, not a tensor for both classes. For a multi-class scenario, use weight in nn.CrossEntropyLoss. Verify your class counts with a simple histogram before calculating weights.
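The two PyTorch options differ in shape: `pos_weight` is a single factor for the positive class, while `weight` in `CrossEntropyLoss` holds one value per class. A minimal sketch with toy labels, assuming PyTorch is installed; with all logits at 0 the weighted BCE works out to (8 ln 2 + 2 · 4 ln 2) / 10 = 1.6 ln 2.

```python
import torch
import torch.nn as nn

labels = torch.tensor([0., 0., 0., 0., 0., 0., 0., 0., 1., 1.])  # 8 negatives, 2 positives

# Binary case: one weight for the positive class only
n_pos = labels.sum()
n_neg = labels.numel() - n_pos
pos_weight = (n_neg / n_pos).reshape(1)  # tensor([4.])
logits = torch.zeros(10)                 # dummy outputs (p = 0.5 everywhere)
loss = nn.BCEWithLogitsLoss(pos_weight=pos_weight)(logits, labels)
print(loss.item())  # approx. 1.109 (= 1.6 * ln 2)

# Multi-class style: one weight per class, total / (num_classes * count_of_class_i)
counts = torch.bincount(labels.long())  # tensor([8, 2])
class_weights = labels.numel() / (len(counts) * counts.float())  # tensor([0.625, 2.5])
ce = nn.CrossEntropyLoss(weight=class_weights)
```

If the data loader already oversamples actives, `pos_weight` should be reduced accordingly; otherwise the correction is applied twice and predictions drift toward the minority class.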

Q2: During training with a weighted loss, my loss value is significantly higher than with a standard loss. Is this normal, and how do I interpret validation metrics?

A: Yes, this is normal. The weighted loss amplifies the contribution of errors on minority class samples, leading to a larger numerical value. Do not compare loss values directly between weighted and unweighted training runs. Instead, focus on imbalance-aware metrics for validation, such as Balanced Accuracy, Matthews Correlation Coefficient (MCC), or the F1-score of the minority class or its macro-average. Avoid micro-averaged F1: for single-label classification it equals accuracy. Tracking loss on a held-out validation set for early stopping remains valid, as you are comparing relative decreases within the same weighted run.
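A degenerate "always inactive" classifier on a 95:5 toy set shows which metrics expose the problem. This scikit-learn sketch uses made-up labels; note how micro-F1 simply reproduces accuracy.

```python
import numpy as np
from sklearn.metrics import (accuracy_score, balanced_accuracy_score, f1_score,
                             matthews_corrcoef)

y_true = np.array([0] * 95 + [1] * 5)  # 95 inactives, 5 actives
y_pred = np.zeros(100, dtype=int)      # predicts "inactive" for everything

acc = accuracy_score(y_true, y_pred)                                # 0.95
f1_micro = f1_score(y_true, y_pred, average="micro")                # 0.95, same as accuracy
bal_acc = balanced_accuracy_score(y_true, y_pred)                   # 0.5
mcc = matthews_corrcoef(y_true, y_pred)                             # 0.0
f1_active = f1_score(y_true, y_pred, zero_division=0)               # 0.0
f1_macro = f1_score(y_true, y_pred, average="macro", zero_division=0)
print(acc, f1_micro, bal_acc, mcc, f1_active, round(f1_macro, 3))
```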

Q3: How do I choose between class-weighted loss, oversampling (e.g., SMOTE), and two-stage training for handling imbalance in molecular property prediction?

A: The choice is empirical, but a common strategy is:

- Start with class-weighted loss, as it is the simplest to implement and modifies only the optimization objective.

- If performance is poor, try combining weighted loss with moderate oversampling (like SMOTE on learned molecular fingerprints, not raw SMILES) to provide more minority examples.

- Two-stage training (pretraining on a large, balanced dataset, then fine-tuning with weights) is powerful but resource-intensive. It is highly recommended if you have access to a large source dataset like ChEMBL. For a systematic comparison, see the experimental protocol below.

Q4: I calculate class weights automatically (e.g., with scikit-learn's compute_class_weight, passed to Keras through the class_weight argument of model.fit). Are there scenarios where I should manually define them?

A: Yes. Automatic calculation assumes a linear inverse frequency relationship. You may need to manually adjust weights ("weight tuning") if:

- The cost of misclassifying a specific class (e.g., an active compound) is much higher. You can apply a multiplicative factor.

- The imbalance is extreme (e.g., 1:1000). Pure inverse frequency can lead to numerical instability; applying a square root or logarithmic smoothing to the weights (`weight = sqrt(total_samples / count_of_class_i)`) can help.

- You are using a loss function like Focal Loss, which has its own modulating parameters (`alpha`, `gamma`) that need to be tuned alongside class weights.
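The effect of smoothing is clearest in the ratio between the active and inactive weights. A NumPy sketch for a 1:1000 toy split: inverse frequency gives a 1000x ratio, the square-root rule gives about 31.6x, and a logarithmic variant (here `1 + log(total / count)`, one hypothetical choice among several in use) gives about 7.9x. The extra cost factor for actives is likewise a hypothetical value.

```python
import numpy as np

counts = np.array([100_000, 100])  # inactives, actives (1:1000)
total = counts.sum()

inverse = total / (len(counts) * counts)  # "balanced" inverse frequency
sqrt_w = np.sqrt(total / counts)          # square-root smoothing
log_w = 1.0 + np.log(total / counts)      # hypothetical logarithmic smoothing

for name, w in [("inverse", inverse), ("sqrt", sqrt_w), ("log", log_w)]:
    print(f"{name:8s} active/inactive weight ratio = {w[1] / w[0]:.1f}")

cost_factor = 2.0                 # hypothetical extra penalty for missing an active
weights = sqrt_w.copy()
weights[1] *= cost_factor
```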

Q5: I'm using a Graph Neural Network (GNN) for molecular graphs. Where in the architecture should the class weighting be applied?

A: The weighting is applied only in the loss function, not within the GNN layers. The architecture (message passing, readout) remains unchanged. Ensure your batch sampler or data loader does not use implicit weighting (like weighted random sampling) unless you account for it in the loss function, as this would double-weight the samples.

Experimental Protocols & Data

Protocol 1: Benchmarking Imbalance Handling Strategies

Objective: Compare the efficacy of Weighted Cross-Entropy, Focal Loss, and Oversampling on a benchmark chemogenomic dataset.

- Dataset Preparation: Use a publicly available dataset like the Tox21 challenge dataset. Select a specific assay (e.g., NR-AR) to create a binary classification task with a pronounced class imbalance (~95:5 ratio). Perform an 80/10/10 stratified split for train/validation/test sets.

- Model Architecture: Implement a standard Directed Message Passing Neural Network (D-MPNN) with hidden size 300 and 3 message passing steps. Use a global mean pooling readout.

- Training Configurations:

- Baseline: Standard Binary Cross-Entropy (BCE) loss.

- Weighted BCE: `pos_weight = num_negatives / num_positives`.

- Focal Loss: Use `alpha=0.25`, `gamma=2.0` as starting points, with `alpha` potentially set from the class frequencies (e.g., `alpha` equal to the fraction of negatives, so that the rare positive class receives the larger weight).

- Oversampling: Randomly duplicate minority class samples in each epoch to achieve a 1:1 ratio, using standard BCE.

- Combined: Weighted BCE + moderate oversampling (to a 1:3 ratio).

- Training: Train all models for 100 epochs with the Adam optimizer (lr=0.001), batch size=64, and early stopping on validation ROC-AUC with patience=20.

- Evaluation: Report ROC-AUC, PR-AUC (critical for imbalance), Balanced Accuracy, and F1-score on the held-out test set.
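The Oversampling (1:1) and Combined (1:3) configurations can be implemented at the data-loader level with PyTorch's `WeightedRandomSampler`, which draws indices with replacement according to per-sample weights. A minimal sketch, assuming PyTorch; `make_sampler` is a hypothetical helper, the features are placeholders for featurized molecules, and the sampler approximates duplication in expectation (majority samples are also drawn with replacement). For the Combined setting, `pos_weight` would then be derived from the sampled ratio (about 3) rather than the raw dataset ratio, to avoid double-weighting.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, WeightedRandomSampler

labels = torch.tensor([0] * 950 + [1] * 50)
features = torch.randn(len(labels), 16)  # placeholder molecular features
dataset = TensorDataset(features, labels)

def make_sampler(labels, target_pos_fraction):
    """Per-sample weights so that, in expectation, target_pos_fraction of draws are actives."""
    n_pos = int((labels == 1).sum())
    n_neg = len(labels) - n_pos
    w_pos = target_pos_fraction / n_pos
    w_neg = (1.0 - target_pos_fraction) / n_neg
    weights = torch.where(labels == 1, torch.tensor(w_pos), torch.tensor(w_neg)).double()
    return WeightedRandomSampler(weights, num_samples=len(labels), replacement=True)

torch.manual_seed(0)
sampler_1to1 = make_sampler(labels, 0.5)   # "Oversampling (1:1)"
sampler_1to3 = make_sampler(labels, 0.25)  # "Combined" (1:3), paired with a reduced pos_weight
loader = DataLoader(dataset, batch_size=64, sampler=sampler_1to1)

idx = list(sampler_1to1)
frac_pos = labels[idx].float().mean().item()
idx3 = list(sampler_1to3)
frac_pos3 = labels[idx3].float().mean().item()
```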

Table 1: Comparative Performance on Tox21 NR-AR Assay (Simulated Results)

| Strategy | Test ROC-AUC | Test PR-AUC | Balanced Accuracy | F1-Score |
|---|---|---|---|---|
| Baseline (Standard BCE) | 0.72 | 0.25 | 0.55 | 0.28 |
| Weighted BCE | 0.81 | 0.45 | 0.73 | 0.52 |
| Focal Loss (α=0.75, γ=2.0) | 0.83 | 0.48 | 0.75 | 0.55 |
| Oversampling (1:1) | 0.79 | 0.41 | 0.70 | 0.49 |
| Combined (Weighted + 1:3 OS) | 0.85 | 0.53 | 0.78 | 0.58 |

Protocol 2: Implementing & Tuning Focal Loss for Molecular Graphs

Objective: Provide a step-by-step guide to implement and tune Focal Loss in a PyTorch GNN project.

Implementation:
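A minimal PyTorch sketch of a binary focal loss, assuming the model emits one logit per molecule and targets are 0/1. The class name `BinaryFocalLoss` is hypothetical; the attribute and variable names (`self.alpha`, `self.gamma`, `pt`, `bce_loss`) follow the expression used in step c of the tuning workflow. Here `alpha` is applied as alpha_t: `alpha` for actives and `1 - alpha` for inactives.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class BinaryFocalLoss(nn.Module):
    """Focal loss on logits: alpha_t * (1 - pt)**gamma * BCE."""

    def __init__(self, alpha=0.25, gamma=2.0, reduction="mean"):
        super().__init__()
        self.alpha = alpha
        self.gamma = gamma
        self.reduction = reduction

    def forward(self, logits, targets):
        targets = targets.float()
        bce_loss = F.binary_cross_entropy_with_logits(logits, targets, reduction="none")
        pt = torch.exp(-bce_loss)  # model probability assigned to the true class
        alpha_t = self.alpha * targets + (1 - self.alpha) * (1 - targets)
        loss = alpha_t * (1 - pt) ** self.gamma * bce_loss  # down-weights easy examples
        if self.reduction == "mean":
            return loss.mean()
        if self.reduction == "sum":
            return loss.sum()
        return loss

# Usage inside a GNN training step: loss = BinaryFocalLoss(alpha=0.75)(model(batch), batch.y)
```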

Tuning Workflow:

a. Fix `gamma=2.0` (default). Perform a coarse grid search for `alpha` over `[0.1, 0.25, 0.5, 0.75, 0.9]`.

b. Select the best `alpha` based on validation PR-AUC. Then, perform a fine search for `gamma` over `[0.5, 1.0, 2.0, 3.0]`.

c. For extreme imbalance, consider adding a class weight to the Focal Loss: `focal_loss = weight * self.alpha * (1 - pt) ** self.gamma * bce_loss`.
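Steps a and b amount to a two-stage coordinate search. In this sketch, `evaluate(alpha, gamma)` is a hypothetical callback that trains the GNN with those focal-loss settings and returns validation PR-AUC; a toy function stands in for it in the usage example. For joint searches over many hyperparameters, a library such as Optuna is the more scalable route.

```python
def staged_focal_search(evaluate,
                        alphas=(0.1, 0.25, 0.5, 0.75, 0.9),
                        gammas=(0.5, 1.0, 2.0, 3.0)):
    """Coarse alpha search at gamma=2.0, then a gamma search at the best alpha."""
    best_alpha = max(alphas, key=lambda a: evaluate(a, 2.0))
    best_gamma = max(gammas, key=lambda g: evaluate(best_alpha, g))
    return best_alpha, best_gamma

# Toy stand-in for "train and return validation PR-AUC"
def toy_evaluate(alpha, gamma):
    return -(alpha - 0.75) ** 2 - (gamma - 1.0) ** 2

best = staged_focal_search(toy_evaluate)
print(best)  # (0.75, 1.0)
```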

Visualizations

Title: Workflow for Training with Weighted Loss

Title: Taxonomy of Loss Functions for Class Imbalance

The Scientist's Toolkit: Research Reagent Solutions

| Item / Solution | Function & Application |
|---|---|
| RDKit | Open-source cheminformatics toolkit. Used for converting SMILES to molecular graphs, calculating descriptors, and scaffold splitting. Essential for dataset preparation and analysis. |
| PyTorch Geometric (PyG) / DGL-LifeSci | Libraries for building Graph Neural Networks (GNNs). Provide pre-built modules for message passing, graph pooling, and commonly used molecular GNN architectures (e.g., AttentiveFP, GIN). |
| Imbalanced-learn (imblearn) | Provides algorithms for oversampling (SMOTE, ADASYN) and undersampling. Use with caution on molecular data; prefer to apply to learned representations rather than raw input. |
| Focal Loss Implementation | A custom PyTorch/TF module (see Protocol 2). Critical for down-weighting easy, majority class examples and focusing training on hard, minority class examples. |
| Class Weight Calculator | A simple utility function to compute inverse frequency or "balanced" class weights from dataset labels. Integrates with torch.utils.data.WeightedRandomSampler if needed. |
| Molecular Scaffold Splitter | Ensures that structurally similar molecules are not spread across train/val/test sets, preventing data leakage and providing a more realistic performance estimate. |
| Hyperparameter Optimization Library (Optuna, Ray Tune) | Crucial for systematically tuning loss function parameters (like alpha, gamma in Focal Loss) alongside model hyperparameters. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My model's recall for the minority class (e.g., 'active compound-target pair') is still very low after applying SMOTE. What could be wrong? A: This is often a data-level issue. SMOTE generates synthetic samples in feature space, which can be problematic in high-dimensional chemogenomic data (e.g., 1024-bit molecular fingerprints + target protein descriptors). Check for:

- Feature Sparsity: High-dimensional, sparse features lead to poor interpolation. Synthetic samples may be created in nonsensical regions of the feature space.

- Class Overlap: The intrinsic separation between majority and minority classes may be low.

- Protocol Error: You may be applying resampling before splitting data into training and validation sets, causing data leakage and over-optimistic performance.

Protocol: Corrected Resampling Workflow

- Split your dataset into Training and Hold-out Test sets. The Hold-out Test set must remain untouched and imbalanced to reflect real-world distribution.

- Perform k-fold cross-validation only on the Training set. Within each fold:

- Split the training portion into train and validation folds.

- Apply the chosen imbalance technique (e.g., SMOTE, ADASYN, RandomUnderSampler) only to the train fold of that iteration.

- Train the model on the resampled train fold.

- Validate on the untouched validation fold (which preserves the original imbalance).

- After cross-validation, train the final model on the entire Training set, applying the chosen resampling technique.

- Evaluate the final model's performance only once on the untouched Hold-out Test set.

Q2: When using ensemble methods like Balanced Random Forest, my model becomes computationally expensive and hard to interpret for feature importance. How can I mitigate this? A: This is a common trade-off. For interpretability in chemogenomic models, consider a hybrid approach:

- Two-Stage Interpretation: First, use a powerful, non-linear ensemble (e.g., XGBoost with the `scale_pos_weight` parameter) to identify top-performing models. Second, for the final interpretable model, use a cost-sensitive logistic regression or SVM with class weighting, trained on the most important features identified by the ensemble model (e.g., top 50 molecular descriptors and protein features).

- Parameter Tuning: For Balanced Random Forest, reduce `n_estimators` and use `max_samples` to control bootstrap sample size. Use permutation importance or SHAP values on a subset of the ensemble to approximate global feature importance.

Protocol: Hybrid Interpretation Pipeline

- Train an XGBoost classifier with `scale_pos_weight = (number of majority samples / number of minority samples)`.

- Extract the top N features using gain-based or SHAP feature importance.

- Subset your original dataset to these top N features.

- Train a cost-sensitive Logistic Regression model (`class_weight='balanced'`) on this feature subset, standardizing the features first so that coefficient magnitudes are comparable.

- Interpret the model using the sign and magnitude of the regression coefficients.
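A scikit-learn-only sketch of the hybrid pipeline on placeholder data. As an assumption to avoid an XGBoost dependency, a class-weighted Random Forest stands in for the stage-one ensemble; an `XGBClassifier(scale_pos_weight=...)` exposes `feature_importances_` in the same way and could be swapped in. In practice, both stages should be fitted on the training split only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=3000, n_features=100, n_informative=10,
                           weights=[0.95], random_state=0)

# Stage 1: imbalance-aware ensemble to rank features
ensemble = RandomForestClassifier(n_estimators=300, class_weight="balanced_subsample",
                                  random_state=0, n_jobs=-1).fit(X, y)
top_n = 15
top_idx = np.argsort(ensemble.feature_importances_)[::-1][:top_n]

# Stage 2: standardized, class-weighted logistic regression on the top features
lr = make_pipeline(StandardScaler(),
                   LogisticRegression(class_weight="balanced", max_iter=1000))
lr.fit(X[:, top_idx], y)
coefs = lr[-1].coef_.ravel()

for idx, c in sorted(zip(top_idx, coefs), key=lambda t: -abs(t[1]))[:5]:
    print(f"feature {idx}: {c:+.3f}")  # sign = direction of effect on P(active)
```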

Q3: After implementing threshold-moving for my trained classifier, performance metrics become inconsistent. Why? A: Threshold-moving optimizes for a specific metric (e.g., F1-score for the minority class). Inconsistency arises because different metrics respond differently to threshold shifts. You must define a single, primary evaluation metric aligned with your research goal before tuning.

Protocol: Metric-Guided Threshold Tuning

- After model training, obtain predicted probabilities for the validation set.

- Define your primary metric (e.g., F2-score to emphasize recall, or Geometric Mean).

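- Use a systematic search (e.g., scanning every candidate threshold along the Precision-Recall curve) to find the threshold that maximizes your primary metric on the validation set. If the primary metric is sensitivity + specificity - 1, the optimum is Youden's J statistic, read from the ROC curve.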

- Apply this new threshold to the hold-out test set probabilities and report metrics.
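A minimal sketch of this protocol follows, assuming synthetic data from `make_classification`, a Random Forest as a placeholder for your trained classifier, and F2 as the pre-declared primary metric. The key constraint is that the threshold is chosen on the validation set only and then frozen before the test set is touched.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import fbeta_score, precision_score, recall_score
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=3000, n_features=30, weights=[0.95], random_state=1)
# Stratified train / validation / test split (60 / 20 / 20).
X_train, X_tmp, y_train, y_tmp = train_test_split(X, y, test_size=0.4, stratify=y, random_state=1)
X_val, X_test, y_val, y_test = train_test_split(X_tmp, y_tmp, test_size=0.5, stratify=y_tmp, random_state=1)

model = RandomForestClassifier(n_estimators=200, random_state=1).fit(X_train, y_train)

# 1) Predicted minority-class probabilities on the validation set.
p_val = model.predict_proba(X_val)[:, 1]


# 2) Primary metric, fixed in advance: F2 (beta=2 weights recall over precision).
def primary_metric(y_true, y_pred):
    return fbeta_score(y_true, y_pred, beta=2, zero_division=0)


# 3) Systematic sweep over candidate thresholds, validation set only.
thresholds = np.linspace(0.05, 0.95, 91)
scores = [primary_metric(y_val, (p_val >= t).astype(int)) for t in thresholds]
best_t = float(thresholds[int(np.argmax(scores))])

# 4) Apply the frozen threshold to the held-out test set and report.
y_test_pred = (model.predict_proba(X_test)[:, 1] >= best_t).astype(int)
print(f"threshold={best_t:.2f}",
      f"F2={primary_metric(y_test, y_test_pred):.3f}",
      f"precision={precision_score(y_test, y_test_pred, zero_division=0):.3f}",
      f"recall={recall_score(y_test, y_test_pred, zero_division=0):.3f}")
```

Swapping `primary_metric` for another function (e.g., a geometric mean) changes the selected threshold, which is exactly the inconsistency described in Q3.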

Table 1: Comparison of Imbalance Technique Performance on a Chemogenomic Dataset (Sample Experiment)

Dataset: BindingDB subset (target: kinase, imbalance ratio ~1:20). Model: Gradient Boosting. Metrics: averaged over 5-fold CV, computed on the original (imbalanced) validation folds.

| Technique | Precision (Minority) | Recall (Minority) | F1-Score (Minority) | Geometric Mean | Training Time (Relative) |
|---|---|---|---|---|---|
| Baseline (No Correction) | 0.45 | 0.18 | 0.26 | 0.42 | 1.0x |
| Random Undersampling | 0.23 | 0.65 | 0.34 | 0.58 | 0.7x |
| SMOTE | 0.32 | 0.61 | 0.42 | 0.65 | 1.8x |
| SMOTE + Tomek Links | 0.35 | 0.70 | 0.47 | 0.71 | 2.1x |
| Cost-Sensitive Learning | 0.41 | 0.55 | 0.47 | 0.68 | 1.1x |
| Ensemble (Balanced RF) | 0.38 | 0.63 | 0.47 | 0.69 | 3.5x |
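The table does not define its Geometric Mean column; the sketch below assumes the conventional definition, the square root of minority-class recall (sensitivity) times majority-class recall (specificity). The toy labels are hypothetical. The second call shows why this metric exposes the accuracy paradox: an all-inactive predictor reaches 90% accuracy here but scores zero.

```python
import numpy as np
from sklearn.metrics import confusion_matrix


def geometric_mean(y_true, y_pred):
    """G-mean = sqrt(sensitivity * specificity) for a binary 0/1 task."""
    tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0  # minority-class recall
    specificity = tn / (tn + fp) if (tn + fp) else 0.0  # majority-class recall
    return float(np.sqrt(sensitivity * specificity))


# Toy check: 10 actives, 90 inactives.
y_true = np.array([1] * 10 + [0] * 90)
y_pred = np.array([1] * 6 + [0] * 4 + [1] * 9 + [0] * 81)
print(round(geometric_mean(y_true, y_pred), 3))         # → 0.735
print(geometric_mean(y_true, np.zeros_like(y_true)))    # → 0.0
```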

Diagram 1: Corrected ML pipeline for imbalance handling.

Diagram 2: Workflow for performance metric-guided threshold moving.

The Scientist's Toolkit: Research Reagent Solutions for Imbalance Experiments

| Item/Reagent | Function in the Imbalance Workflow |
|---|---|
| Imbalanced-learn (imblearn) Python Library | Provides standardized implementations of oversampling (SMOTE, ADASYN), undersampling, and combination techniques for reliable experiments. |
| XGBoost / LightGBM | Gradient boosting libraries with a built-in `scale_pos_weight` hyperparameter for easy and effective cost-sensitive learning. |
| SHAP (SHapley Additive exPlanations) | Explains model predictions and calculates consistent, global feature importance, crucial for interpreting models trained on imbalanced data. |
| Scikit-learn's `classification_report` & `precision_recall_curve` | Essential functions for generating detailed per-class metrics and plotting curves to guide threshold-moving decisions. |
| Molecular Descriptor/Fingerprint Kit (e.g., RDKit, Mordred) | Generates numerical feature representations (e.g., ECFP4 fingerprints) from chemical structures, forming the basis for chemogenomic data. |
| Protein Descriptor Library (e.g., ProtDCal, iFeature) | Generates numerical feature representations from protein sequences, enabling the creation of a unified compound-target feature vector. |
| Custom Cost-Benefit Matrix | A researcher-defined table quantifying the real-world "cost" of false negatives vs. false positives, used to guide metric selection and threshold tuning. |

Diagnosing and Fixing Your Model: Practical Troubleshooting for Imbalanced Chemogenomic Datasets

Troubleshooting Guides & FAQs

Q1: My model's overall accuracy is high (>95%), but it fails to predict any active compounds for the minority class. What is the primary diagnostic? A1: The primary diagnostic is to examine class-wise precision and recall. High recall for the majority class (inactive compounds) combined with near-zero recall for the minority class (active compounds), despite high overall accuracy, is a clear sign that the model has overfit to the majority class. Generate a Precision-Recall curve for each class separately.
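A minimal sketch of this diagnostic, assuming synthetic data with about 3% actives. A `DummyClassifier` that always predicts the majority class reproduces the symptom (high accuracy, zero active recall in `classification_report`). A logistic regression, used here only as a placeholder model, then shows how to build one PR curve per class by scoring each class against its own predicted probability.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import (accuracy_score, average_precision_score,
                             classification_report, precision_recall_curve)
from sklearn.model_selection import train_test_split

# Synthetic stand-in: about 3% actives (class 1).
X, y = make_classification(n_samples=4000, n_features=20, weights=[0.97],
                           flip_y=0, random_state=2)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, stratify=y, random_state=2)

# A majority-class predictor reproduces the symptom: high accuracy, zero active recall.
dummy = DummyClassifier(strategy="most_frequent").fit(X_tr, y_tr)
y_pred = dummy.predict(X_te)
print(f"accuracy = {accuracy_score(y_te, y_pred):.3f}")
print(classification_report(y_te, y_pred, target_names=["Inactive", "Active"], zero_division=0))

# Per-class PR curves for a real model: each class is scored against its own probability.
model = LogisticRegression(max_iter=1000).fit(X_tr, y_tr)
proba = model.predict_proba(X_te)
pr_curves = {}
for c, name in enumerate(["Inactive", "Active"]):
    y_c = (y_te == c).astype(int)
    precision, recall, _ = precision_recall_curve(y_c, proba[:, c])
    pr_curves[name] = (precision, recall)
    print(f"{name}: average precision = {average_precision_score(y_c, proba[:, c]):.3f}")
```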

Q2: How should I structure my validation splits to reliably detect this overfitting during model training? A2: You must use a Stratified K-Fold Cross-Validation split that preserves the class imbalance percentage in each fold. Do not use a single random train/test split. A minimum of 5 folds is recommended. Monitor performance metrics per fold for each class independently.
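A quick way to confirm that the folds preserve the class imbalance is to print the active fraction of every validation fold. This sketch uses hypothetical labels (50 actives among 1,000 compounds); the features are irrelevant to the split, so a placeholder matrix is passed.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Hypothetical labels: 50 actives (1) among 1000 compounds, i.e. 5% prevalence.
y = np.array([1] * 50 + [0] * 950)
X = np.zeros((len(y), 1))  # placeholder features; stratification only uses y

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
fold_prevalence = []
for fold, (train_idx, val_idx) in enumerate(skf.split(X, y), start=1):
    prevalence = y[val_idx].mean()
    fold_prevalence.append(prevalence)
    print(f"fold {fold}: {y[val_idx].sum()} actives / {len(val_idx)} compounds ({prevalence:.1%})")
```

With a plain random split, a small minority class can leave some folds with very few (or no) actives, which makes per-fold minority metrics unstable or undefined.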

Q3: What quantitative metrics from the validation splits should I track in a table? A3: Summarize the following metrics per fold and averaged for both classes:

Table 1: Key Validation Metrics per Class for Imbalanced Chemogenomic Data

| Fold | Class | Precision | Recall (Sensitivity) | Specificity | F1-Score | MCC |
|---|---|---|---|---|---|---|
| 1 | Active (Minority) | | | | | |
| 1 | Inactive (Majority) | | | | | |
| 2 | Active (Minority) | | | | | |
| ... | ... | | | | | |
| Mean | Active (Minority) | | | | | |
| Std. Dev. | Active (Minority) | | | | | |
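The rows of this table can be filled programmatically. In the sketch below, `per_class_rows` and `summarize` are hypothetical helper names, and the two folds of predictions are toy data. Two details are easy to get wrong. First, the specificity of one class equals the recall of the other class. Second, MCC is a single value for a binary task, so it is repeated on both rows.

```python
import numpy as np
from sklearn.metrics import f1_score, matthews_corrcoef, precision_score, recall_score

CLASS_NAMES = ("Inactive (Majority)", "Active (Minority)")  # label 0, label 1
METRICS = ("Precision", "Recall", "Specificity", "F1", "MCC")


def per_class_rows(fold, y_true, y_pred):
    """One table row per class for a single validation fold."""
    mcc = matthews_corrcoef(y_true, y_pred)  # one value per binary task
    rows = []
    for c, name in enumerate(CLASS_NAMES):
        rows.append({
            "Fold": fold,
            "Class": name,
            "Precision": precision_score(y_true, y_pred, pos_label=c, zero_division=0),
            "Recall": recall_score(y_true, y_pred, pos_label=c, zero_division=0),
            # Specificity of class c is the recall of the other class.
            "Specificity": recall_score(y_true, y_pred, pos_label=1 - c, zero_division=0),
            "F1": f1_score(y_true, y_pred, pos_label=c, zero_division=0),
            "MCC": mcc,
        })
    return rows


def summarize(rows, cls="Active (Minority)"):
    """Mean and sample standard deviation across folds for one class."""
    sel = [r for r in rows if r["Class"] == cls]
    return {m: (float(np.mean([r[m] for r in sel])), float(np.std([r[m] for r in sel], ddof=1)))
            for m in METRICS}


# Toy usage with two hypothetical folds (10 actives, 90 inactives each).
y_true = np.array([1] * 10 + [0] * 90)
fold_preds = [
    np.array([1] * 6 + [0] * 4 + [1] * 9 + [0] * 81),
    np.array([1] * 4 + [0] * 6 + [1] * 3 + [0] * 87),
]
rows = [r for k, yp in enumerate(fold_preds, start=1) for r in per_class_rows(k, y_true, yp)]
print(summarize(rows))
```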

Q4: I've confirmed overfitting to the majority class. What are the first three protocol steps to address it? A4:

- Resampling Validation: Implement a combined approach: oversample the minority class (e.g., SMOTE) only on the training fold and optionally undersample the majority class. Crucially, leave the validation fold untouched with its original distribution to get a realistic performance estimate.

- Algorithm Tuning: Adjust the decision threshold of your classifier (e.g., via the precision-recall curve) or use algorithms with built-in class weight adjustment (e.g., `class_weight='balanced'` in sklearn).

- Cost-Sensitive Learning: Explicitly assign a higher misclassification cost to the minority class during model training.

Q5: Are there specific diagnostic curves beyond Precision-Recall that are useful? A5: Yes. Generate and compare:

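- Receiver Operating Characteristic (ROC) Curve: Can be overly optimistic under severe imbalance. In a binary task the ROC AUC is the same whichever class is treated as positive, so compare it with the minority-class area under the Precision-Recall curve (average precision) rather than computing it per class.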

- Calibration Curve: Determines if the predicted probabilities are reliable. A model overfit to the majority class will typically show poor calibration for the minority class.
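Both diagnostics can be computed in a few lines, as in the sketch below. It assumes synthetic data with about 3% actives and a Random Forest as a placeholder model. The sketch checks numerically that ROC AUC does not change when the class roles are swapped, while average precision differs sharply between the classes. It then prints a reliability table from `calibration_curve` (observed active fraction vs. mean predicted probability per bin).

```python
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import average_precision_score, roc_auc_score
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=5000, n_features=20, weights=[0.97], random_state=3)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=3)
model = RandomForestClassifier(n_estimators=200, random_state=3).fit(X_tr, y_tr)
p_active = model.predict_proba(X_te)[:, 1]

# ROC AUC is the same whichever class is labelled positive in a binary task...
auc_active = roc_auc_score(y_te, p_active)
auc_inactive = roc_auc_score(1 - y_te, 1 - p_active)
# ...whereas average precision (PR AUC) differs sharply between the classes.
ap_active = average_precision_score(y_te, p_active)
ap_inactive = average_precision_score(1 - y_te, 1 - p_active)
print(f"ROC AUC  active={auc_active:.3f}  inactive={auc_inactive:.3f}")
print(f"PR AP    active={ap_active:.3f}  inactive={ap_inactive:.3f}  (prevalence {y_te.mean():.3f})")

# Reliability of minority-class probabilities, bin by bin.
frac_pos, mean_pred = calibration_curve(y_te, p_active, n_bins=10)
for mp, fp in zip(mean_pred, frac_pos):
    print(f"mean predicted {mp:.3f} -> observed active fraction {fp:.3f}")
```

If the observed fractions sit well below the mean predictions in the upper bins (or the reverse), wrapping the model in `CalibratedClassifierCV` before threshold tuning is a reasonable next step.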

Experimental Protocol: Stratified Cross-Validation with Resampling

Objective: To train and evaluate a chemogenomic classifier while reliably diagnosing overfitting to the majority class.

- Data Preparation: Label compounds as 'Active' (minority) or 'Inactive' (majority) based on a bioactivity threshold (e.g., IC50 < 10 μM).

- Stratified Splitting: Use `StratifiedKFold(n_splits=5, shuffle=True, random_state=42)` to create 5 folds.

- Per-Fold Training Loop:

  - For each fold, apply SMOTE only to the training set.

  - Train the model (e.g., Random Forest with `class_weight='balanced_subsample'`).

  - Predict on the unmodified validation fold.

  - Calculate and store the metrics from Table 1 for both classes.

- Diagnostic Plotting: Generate per-class Precision-Recall and ROC curves for each fold, then compute the average curve.
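The per-fold loop can be sketched as follows. Assumptions: synthetic data stands in for compound features, and plotting is omitted. To keep the sketch dependent on scikit-learn only, `smote_like` is a hypothetical, minimal SMOTE-style oversampler (interpolation between a minority sample and one of its k nearest minority neighbours). In practice, imbalanced-learn's `SMOTE` would take its place. The essential point is that synthetic samples are generated from, and added to, the training fold only.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import matthews_corrcoef, precision_score, recall_score
from sklearn.model_selection import StratifiedKFold
from sklearn.neighbors import NearestNeighbors


def smote_like(X_min, n_new, k=5, seed=None):
    """Hypothetical minimal SMOTE-style oversampler (stand-in for imblearn's SMOTE):
    each synthetic point lies on the segment between a random minority sample
    and one of its k nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    nn = NearestNeighbors(n_neighbors=k + 1).fit(X_min)
    _, idx = nn.kneighbors(X_min)  # column 0 is the sample itself
    base = rng.integers(0, len(X_min), size=n_new)
    neigh = idx[base, rng.integers(1, k + 1, size=n_new)]
    gap = rng.random((n_new, 1))
    return X_min[base] + gap * (X_min[neigh] - X_min[base])


# Synthetic stand-in for compound features; label 1 = Active (minority).
X, y = make_classification(n_samples=2000, n_features=30, weights=[0.95], random_state=4)
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

fold_metrics = []
for fold, (tr, va) in enumerate(skf.split(X, y), start=1):
    X_tr, y_tr = X[tr], y[tr]
    # Oversample the minority class in the TRAINING fold only, up to parity.
    n_new = int((y_tr == 0).sum() - (y_tr == 1).sum())
    X_syn = smote_like(X_tr[y_tr == 1], n_new, seed=fold)
    X_fit = np.vstack([X_tr, X_syn])
    y_fit = np.concatenate([y_tr, np.ones(n_new, dtype=y_tr.dtype)])

    model = RandomForestClassifier(n_estimators=100, class_weight="balanced_subsample", random_state=0)
    model.fit(X_fit, y_fit)
    y_pred = model.predict(X[va])  # validation fold keeps its original distribution

    fold_metrics.append({
        "fold": fold,
        "active_precision": precision_score(y[va], y_pred, zero_division=0),
        "active_recall": recall_score(y[va], y_pred),
        "inactive_recall": recall_score(y[va], y_pred, pos_label=0),
        "mcc": matthews_corrcoef(y[va], y_pred),
    })
    print(fold_metrics[-1])
```

Because the training folds are already balanced by oversampling, `class_weight='balanced_subsample'` has little extra effect here; it mainly matters if you oversample only part of the way to parity.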

Visualization: Diagnostic Workflow for Detecting Majority Class Overfitting

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Imbalanced Chemogenomic Model Development

| Item | Function in Context |
|---|---|
| Scikit-learn | Python library providing `StratifiedKFold` and classification metrics; SMOTE itself is provided by the companion imbalanced-learn library. |
| Imbalanced-learn | Python library dedicated to resampling techniques (SMOTE, ADASYN, Tomek links). |
| RDKit or ChemPy | For handling chemical structure data and generating molecular descriptors/fingerprints. |
| MCC (Matthews Correlation Coefficient) | A single, informative metric that is robust to class imbalance for model evaluation. |
| Class Weight Parameter | Built-in parameter in many classifiers (e.g., `class_weight` in sklearn) to penalize mistakes on the minority class. |
| Probability Calibration Tools (`CalibratedClassifierCV`) | Adjusts model output probabilities to better match true likelihoods, improving threshold selection. |

Troubleshooting Guides & FAQs

Q1: After applying class weights to my chemogenomic model, validation loss decreased but the precision for the minority class (active compounds) collapsed. What went wrong?

A: This is often due to excessive weight scaling. The model may become overly penalized for missing minority class instances, causing it to over-predict that class and introduce many false positives. Troubleshooting Steps:

- Verify your weight calculation. In sklearn, `class_weight='balanced'` sets each class's weight to `n_samples / (n_classes * np.bincount(y))`; check that any manually computed weights match this formula.

- Implement a grid search over a weight multiplier. Instead of using the calculated weights directly, search over `calculated_weight * C` for C in `[0.5, 1, 2, 3, 5]`.

- Monitor per-class metrics (Precision, Recall, F1) on a validation set during training, not just the overall loss.
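These steps can be sketched as follows, assuming synthetic data and a logistic regression as a placeholder for your model. Only the minority weight is multiplied by the multiplier C from the text. The loop variable is named `mult` to avoid confusion with `LogisticRegression`'s own regularization parameter `C`. Watching minority precision alongside recall as `mult` grows makes the over-weighting failure visible.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.utils.class_weight import compute_class_weight

X, y = make_classification(n_samples=4000, n_features=25, weights=[0.95], random_state=5)
X_tr, X_val, y_tr, y_val = train_test_split(X, y, test_size=0.25, stratify=y, random_state=5)

# Step 1: verify the 'balanced' formula n_samples / (n_classes * np.bincount(y)).
classes = np.array([0, 1])
manual = len(y_tr) / (len(classes) * np.bincount(y_tr))
library = compute_class_weight(class_weight="balanced", classes=classes, y=y_tr)
assert np.allclose(manual, library)

# Steps 2-3: scale only the minority weight; monitor per-class validation metrics.
results = []
for mult in [0.5, 1, 2, 3, 5]:  # the multiplier C from the text
    weights = {0: manual[0], 1: manual[1] * mult}
    clf = LogisticRegression(class_weight=weights, max_iter=2000).fit(X_tr, y_tr)
    y_pred = clf.predict(X_val)
    row = (mult,
           precision_score(y_val, y_pred, zero_division=0),
           recall_score(y_val, y_pred),
           f1_score(y_val, y_pred, zero_division=0))
    results.append(row)
    print("C={:<4} precision={:.3f} recall={:.3f} F1={:.3f}".format(*row))
```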

Q2: When using SMOTE to balance my dataset of molecular fingerprints, the model's cross-validation performance looks great, but it fails completely on the held-out test set. Why?

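A: This typically indicates data leakage: if SMOTE is applied before splitting, synthetic samples interpolated from validation-fold compounds end up in the training folds (and vice versa), so cross-validation scores are inflated. Troubleshooting Steps: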

- Isolate the sampling: Apply SMOTE only to the training fold within each CV loop and to the final training set. The validation/test folds must contain only original, non-synthetic data.

- Check molecular similarity: In chemogenomics, synthetic samples created in high-dimensional fingerprint space may be unrealistic; interpolating between two binary fingerprints yields fractional bit values that do not correspond to any actual molecule. Use tools like the "Enhancing The Effectiveness (ETE)" fingerprint or "Matched Molecular Pairs" analysis to check whether the synthetic samples remain chemically plausible.

- Consider alternatives: Use Random Under-Sampling (RUS) of the majority class instead, or try the SMOTEENN variant (SMOTE + Edited Nearest Neighbors), which cleans the resampled data.
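The fingerprint problem can be seen directly, as in the sketch below. It uses two hypothetical random 2048-bit fingerprints and a fixed interpolation factor instead of SMOTE's random one. SMOTE's core step places a synthetic point on the line between two minority samples. Every bit where the two parents differ therefore becomes a fractional value, so the result is not a valid fingerprint of any molecule.

```python
import numpy as np

rng = np.random.default_rng(0)
# Two hypothetical 2048-bit fingerprints of active (minority-class) compounds.
fp_a = (rng.random(2048) < 0.05).astype(float)
fp_b = (rng.random(2048) < 0.05).astype(float)

# SMOTE's core step: a point on the segment between two minority samples.
gap = 0.37  # fixed here for illustration; SMOTE draws it uniformly from [0, 1)
synthetic = fp_a + gap * (fp_b - fp_a)

differing = fp_a != fp_b
print("bits differing between parents:", int(differing.sum()))
print("distinct values in synthetic vector:", np.unique(synthetic))
```

Rounding the synthetic vector back to 0/1 at 0.5 simply copies whichever parent is nearer on every differing bit, so it does not produce new chemistry either.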

Q3: I tuned the decision threshold to optimize F1-score, but the resulting model has unacceptable false negative rates for early-stage lead identification. How should I approach this?

A: The F1-score (harmonic mean of precision and recall) may not align with your drug discovery utility function. Troubleshooting Steps:

- Define a business-aware metric: Assign costs (e.g., cost of a missed lead vs. cost of a false positive assay). Optimize threshold for minimum cost or maximum Net Benefit (Decision Curve Analysis).

- Use Precision-Recall Curve (PRC): For high imbalance, PRC is more informative than ROC. Choose a threshold that meets your minimum recall requirement for the active class, then maximize precision at that point.

- Implement a two-threshold system: Create an "uncertainty zone" between thresholds. Predictions in this zone are flagged for expert review, reducing high-stakes errors.
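The three strategies can be sketched as small functions: a minimum-cost threshold, a threshold that meets a minimum recall and then maximizes precision, and a two-threshold triage. The function names, the cost values (a missed lead costed at 20 false-positive assays) and the triage cut-offs are hypothetical placeholders. The scores are toy data, not model output. Decision Curve Analysis is not implemented here.

```python
import numpy as np
from sklearn.metrics import precision_recall_curve


def min_cost_threshold(y_true, p, cost_fn, cost_fp, grid=None):
    """Threshold minimizing total cost = cost_fn * FN + cost_fp * FP."""
    grid = np.linspace(0.01, 0.99, 99) if grid is None else np.asarray(grid)
    costs = [cost_fn * np.sum((p < t) & (y_true == 1)) + cost_fp * np.sum((p >= t) & (y_true == 0))
             for t in grid]
    return float(grid[int(np.argmin(costs))])


def threshold_for_min_recall(y_true, p, min_recall):
    """Highest-precision threshold whose active-class recall is >= min_recall."""
    precision, recall, thresholds = precision_recall_curve(y_true, p)
    # precision/recall carry one extra final point (recall 0) with no threshold.
    ok = recall[:-1] >= min_recall
    if not ok.any():
        raise ValueError("min_recall is not attainable")
    best = int(np.argmax(np.where(ok, precision[:-1], -1.0)))
    return float(thresholds[best])


def triage(p, low, high):
    """Two-threshold system: scores in [low, high) are flagged for expert review."""
    return np.where(p >= high, "active", np.where(p < low, "inactive", "review"))


# Toy scores: about 5% actives, shifted upward relative to inactives.
rng = np.random.default_rng(7)
y_true = (rng.random(2000) < 0.05).astype(int)
p = np.clip(rng.normal(0.2, 0.12, 2000) + 0.35 * y_true, 0.0, 1.0)

t_cost = min_cost_threshold(y_true, p, cost_fn=20.0, cost_fp=1.0)  # hypothetical costs
t_rec = threshold_for_min_recall(y_true, p, min_recall=0.90)
labels = triage(p, low=0.30, high=0.50)                            # hypothetical cut-offs
print(f"min-cost threshold {t_cost:.2f}; recall>=0.90 threshold {t_rec:.3f}")
print({str(k): int(v) for k, v in zip(*np.unique(labels, return_counts=True))})
```

As with any threshold, these should be selected on validation data and then applied unchanged to the test set.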

Q4: My hyperparameter tuning for a neural network on bioactivity data is unstable—each run gives a different "optimal" set of class weights, threshold, and learning rate. How can I stabilize this?

A: This is common with imbalanced, high-variance data. Troubleshooting Steps:

- Use a more sample-efficient search: Bayesian Optimization (e.g., HyperOpt, Optuna) typically needs fewer evaluations than grid or random search to locate a robust optimum.

- Fix the seeds: Ensure reproducibility by setting random seeds for `numpy`, `tensorflow`/`pytorch`, and the data splitting library.

- Use nested cross-validation: Perform hyperparameter tuning in an inner CV loop on the training set, and evaluate the final chosen model on a completely held-out outer CV test fold. This gives an unbiased performance estimate.

- Prioritize parameters: Tune in this order: 1) Learning Rate & Network Architecture, 2) Class Weight / Loss Function, 3) Decision Threshold (post-training).
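Seeding and nested cross-validation can be sketched as below. Several stand-ins are assumptions made to keep the sketch small and dependency-free: a logistic regression replaces the neural network, `GridSearchCV` replaces a Bayesian optimizer (an Optuna or Hyperopt search could occupy the same inner-loop slot), and the data are synthetic. Each outer fold scores a model whose class weight and regularization were chosen without ever seeing that fold.

```python
import random

import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score

SEED = 0
random.seed(SEED)
np.random.seed(SEED)
# With a deep-learning framework, also seed it, e.g. torch.manual_seed(SEED)
# or tf.random.set_seed(SEED).

X, y = make_classification(n_samples=2000, n_features=20, weights=[0.95], random_state=SEED)

# Inner loop: hyperparameter search on each outer training split only.
inner = StratifiedKFold(n_splits=3, shuffle=True, random_state=SEED)
search = GridSearchCV(
    LogisticRegression(max_iter=2000),
    param_grid={"C": [0.01, 0.1, 1.0], "class_weight": [None, "balanced", {0: 1, 1: 5}]},
    scoring="average_precision",
    cv=inner,
)

# Outer loop: each held-out fold evaluates a model it never helped tune.
outer = StratifiedKFold(n_splits=5, shuffle=True, random_state=SEED)
outer_scores = cross_val_score(search, X, y, scoring="average_precision", cv=outer)
print(f"nested-CV average precision: {outer_scores.mean():.3f} +/- {outer_scores.std():.3f}")
```

The spread of `outer_scores` is itself a useful stability check: if it is large, the "optimal" hyperparameters will keep changing between runs regardless of the search method.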

Experimental Protocols for Key Cited Studies

Protocol 1: Systematic Evaluation of Class Weight and Threshold Tuning

Objective: To determine the optimal combination of class weight scaling and post-training threshold adjustment for a Random Forest classifier on a chemogenomic bioactivity dataset.

Materials: PubChem BioAssay dataset (AID: 1851), RDKit (for fingerprint generation), scikit-learn.

Method:

- Data Preparation: Convert SMILES strings to 2048-bit Morgan fingerprints (radius=2). Split data into 70%/30% train/test, stratified by activity class. Hold out the test set.

- Class Weight Grid:

  - Calculate the per-class base weights `w_base = n_samples / (n_classes * np.bincount(y_train))`.

  - Define scaling factors `S = [0.25, 0.5, 1, 2, 4]`.

  - Create the weight set `W = {minority: w_base[minority] * s, majority: 1.0}` for each `s` in `S`.

- Model Training & Validation: Using 5-fold stratified CV on the training set:

  - Train a Random Forest (100 trees) for each weight in `W`.

  - For each model, obtain predicted probabilities for the minority class on the CV validation folds.

- Threshold Optimization: For each weight's set of validation probabilities:

  - Vary the decision threshold from 0.1 to 0.9 in steps of 0.05.

  - At each threshold, compute the F2-score (beta=2, emphasizing recall).

  - Record the threshold `t_opt` that maximizes the F2-score.

- Final Evaluation: Retrain a model on the entire training set using the weight `W_opt` that yielded the highest CV F2-score at its `t_opt`. Evaluate this final model on the held-out test set using the optimized threshold `t_opt`. Report precision, recall, F1, F2, and MCC.
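The protocol's grid and threshold search can be sketched as below. This is not a reproduction of the study. RDKit and the PubChem AID 1851 data are not used: `make_classification` with 32 features stands in for the Morgan fingerprints, and class 1 is assumed to be the minority (active) class. Following the protocol, the majority weight is fixed at 1.0 while the minority weight is `w_base[1] * s`. Out-of-fold probabilities from `cross_val_predict` serve as the CV validation probabilities, and `make_weights` is a hypothetical helper.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score, fbeta_score, matthews_corrcoef, precision_score, recall_score
from sklearn.model_selection import StratifiedKFold, cross_val_predict, train_test_split

# Synthetic stand-in for Morgan fingerprints (no RDKit / PubChem here).
X, y = make_classification(n_samples=2000, n_features=32, weights=[0.9], random_state=6)
# Step 1: stratified 70/30 split; the test set stays untouched until Step 5.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=6)

# Step 2: per-class base weights and the scaled minority-weight grid.
w_base = len(y_train) / (2 * np.bincount(y_train))  # n_classes = 2
S = [0.25, 0.5, 1, 2, 4]


def make_weights(s):
    """Hypothetical helper: minority (class 1) = w_base[1] * s, majority fixed at 1.0."""
    return {0: 1.0, 1: w_base[1] * s}


cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=6)
thresholds = np.round(np.linspace(0.10, 0.90, 17), 2)  # steps of 0.05

best = None  # (cv_f2, s, t_opt)
for s in S:
    rf = RandomForestClassifier(n_estimators=100, class_weight=make_weights(s), random_state=6)
    # Step 3: out-of-fold minority-class probabilities from 5-fold stratified CV.
    p_oof = cross_val_predict(rf, X_train, y_train, cv=cv, method="predict_proba")[:, 1]
    # Step 4: F2 at each threshold; keep this weight's best threshold.
    f2 = [fbeta_score(y_train, (p_oof >= t).astype(int), beta=2, zero_division=0)
          for t in thresholds]
    i = int(np.argmax(f2))
    print(f"s={s:<5} t_opt={thresholds[i]:.2f} CV F2={f2[i]:.3f}")
    if best is None or f2[i] > best[0]:
        best = (f2[i], s, float(thresholds[i]))

# Step 5: refit on the full training set with W_opt, apply t_opt to the test set.
_, s_opt, t_opt = best
final = RandomForestClassifier(n_estimators=100, class_weight=make_weights(s_opt), random_state=6)
final.fit(X_train, y_train)
y_pred = (final.predict_proba(X_test)[:, 1] >= t_opt).astype(int)
report = {
    "precision": precision_score(y_test, y_pred, zero_division=0),
    "recall": recall_score(y_test, y_pred),
    "F1": f1_score(y_test, y_pred, zero_division=0),
    "F2": fbeta_score(y_test, y_pred, beta=2, zero_division=0),
    "MCC": matthews_corrcoef(y_test, y_pred),
}
print(f"s_opt={s_opt}, t_opt={t_opt:.2f}", {k: round(float(v), 3) for k, v in report.items()})
```

Selecting the weight and threshold jointly on out-of-fold probabilities, and touching the test set only once at the end, keeps the reported test metrics free of tuning bias.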

Protocol 2: Comparative Analysis of Sampling Ratios in Deep Learning Models