Automating High-Throughput NGS for Chemogenomics: Strategies for Scalable Drug Discovery

This article provides a comprehensive guide for researchers and drug development professionals on implementing automated Next-Generation Sequencing (NGS) workflows for high-throughput chemogenomics.

Automating High-Throughput NGS for Chemogenomics: Strategies for Scalable Drug Discovery

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on implementing automated Next-Generation Sequencing (NGS) workflows for high-throughput chemogenomics. It explores the foundational drivers of automation, including the need for scalability and reproducibility in large-scale drug screening. The scope covers practical methodologies for integrating liquid handling systems and end-to-end platforms, strategies for overcoming common bottlenecks in data analysis and library preparation, and frameworks for the rigorous validation required in regulated research environments. By synthesizing current technological advancements with practical application, this resource aims to equip scientists with the knowledge to accelerate target identification and therapeutic discovery.

The Rise of Automation in NGS: Fueling the Next Generation of Chemogenomics

The global landscape of genomic analysis is undergoing a rapid transformation, driven by an unprecedented convergence of technological advancement, declining costs, and expanding applications across biomedical research and clinical diagnostics. The genome reconstruction tools market, a specialized segment of the bioinformatics industry, is projected to grow from USD 182.6 million in 2025 to USD 387 million by 2035, reflecting a compound annual growth rate (CAGR) of 7.8% [1]. This growth is fundamentally fueled by the critical need for scalable analytical solutions that can manage the enormous data volumes generated by modern sequencing technologies. Concurrently, the broader genome testing market demonstrates even more accelerated expansion, expected to rise from USD 22.45 billion in 2025 to USD 55.23 billion by 2032, at a CAGR of 13.7% [2]. These markets are being reshaped by the pervasive adoption of cloud-based bioinformatics platforms across biotechnology and pharmaceutical sectors, alongside a pronounced shift toward precision medicine tools in both research and clinical applications [1].

For researchers and drug development professionals, this expansion creates both opportunities and challenges. The ability to process and interpret massive genomic datasets efficiently has become a critical bottleneck in high-throughput chemogenomics research, where the rapid profiling of chemical-genetic interactions is essential for target identification and validation. This application note examines the key drivers behind this growing demand for scalability and provides detailed protocols for implementing automated, high-throughput genomic workflows that address these challenges directly.

Table 1: Key Market Growth Indicators for Genomic Analysis Technologies

| Market Segment | 2025 Projected Value | 2035/2032 Projected Value | CAGR | Primary Growth Drivers |

|---|---|---|---|---|

| Genome Reconstruction Tools | USD 182.6 million [1] | USD 387 million [1] | 7.8% [1] | Cloud/SaaS adoption, pharmaceutical R&D, microorganism analysis [1] |

| Whole Genome Sequencing | USD 3 billion [3] | USD 6.1 billion (2030) [3] | 15.1% [3] | Cancer genomics, rare disease research, personalized medicine [3] |

| Functional Genomics | USD 11.34 billion [4] | USD 28.55 billion (2032) [4] | 14.1% [4] | NGS technology advances, drug discovery applications [4] |

| Genome Testing | USD 22.45 billion [2] | USD 55.23 billion (2032) [2] | 13.7% [2] | Clinical diagnostics, direct-to-consumer testing, pharmacogenomics [2] |

Key Market Drivers for Scalable Genomic Analysis

Technological Advancements and Cost Reduction

The dramatic reduction in sequencing costs has been the most fundamental driver accelerating demand for scalable genomic analysis. Since the completion of the Human Genome Project, the cost of sequencing a full human genome has decreased by approximately 96% [5], making large-scale genomic studies economically feasible for more laboratories. This cost reduction has been coupled with substantial improvements in sequencing throughput and capabilities. Modern NGS technologies can simultaneously sequence millions to billions of DNA fragments in a massively parallel fashion, enabling researchers to expand the scale and discovery power of their genomic studies far beyond what was possible with traditional techniques [5].

Leading instrument companies are continuously pushing the boundaries of sequencing performance. For instance, Ultima Genomics' UG 100 Solaris system, launched in 2025, offers a >50% increase in output to 10-12 billion reads per wafer while reducing the price to $0.24 per million reads, potentially enabling the $80 genome [6]. Similarly, Roche's introduction of Sequencing by Expansion (SBX) technology represents a significant innovation that uses biochemical conversion to encode DNA into surrogate Xpandomer molecules, enabling highly accurate single-molecule nanopore sequencing [6]. These technological advancements are critically important for chemogenomics research, where profiling thousands of chemical compounds across multiple cell lines requires unprecedented sequencing scale and cost-efficiency.

Expanding Applications in Biomedical Research and Clinical Diagnostics

The applications driving demand for scalable genomic analysis span virtually all areas of biomedical research and are increasingly penetrating clinical diagnostics. In cancer genomics and rare inherited diseases, whole genome sequencing has become indispensable for identifying genetic mutations and enabling faster, more accurate diagnoses [3]. The growing focus on targeted therapies and personalized medicine further fuels this demand, as WGS supports treatment personalization by revealing genetic profiles that guide therapeutic decisions [3].

The functional genomics market, where NGS commands a dominant 32.5% technology share [4], exemplifies the broadening applications. Transcriptomics alone accounts for 23.4% of the functional genomics application segment [4], driven by expanding research on gene expression dynamics under different biological conditions. The microorganisms segment represents another substantial application area, accounting for 27.8% of genome reconstruction tools demand [1], with growing importance in microbiome research, infectious disease monitoring, and industrial biotechnology.

For drug development professionals, the integration of multi-omics approaches represents a particularly significant trend. The combination of genomic, proteomic, and metabolomic data provides unprecedented insights into disease mechanisms and therapeutic responses [2]. Additionally, pharmacogenomic testing services are expanding rapidly, enabling personalized medication management based on individual genetic profiles [2].

Adoption of Cloud Computing and Artificial Intelligence

The massive data volumes generated by modern genomic technologies have necessitated a fundamental shift in computational strategies. Cloud/SaaS subscription models have emerged as the leading service model in the genome reconstruction tools market, accounting for 32.8% market share [1]. These platforms provide the essential scalability and accessibility required for managing and analyzing large genomic datasets without substantial local computational infrastructure.

The integration of artificial intelligence and machine learning represents another transformative driver for scalable genomic analysis. As noted in the market research, "Efforts in standardization, artificial intelligence, and machine learning drive improvements in diagnostic reliability and processing speed, delivering value for both clinical and biopharmaceutical users" [2]. The development of sophisticated AI models specifically for genomic applications is accelerating, exemplified by initiatives such as the "Genos" AI model launched by BGI-Research and Zhejiang Lab in 2025 – the world's first deployable genomic foundation model with 10 billion parameters designed to analyze up to one million base pairs at single-base resolution [4].

Table 2: Key Technology Adoption Trends in Genomic Analysis

| Technology Trend | Market Impact | Application in Scalable Genomics |

|---|---|---|

| Cloud/SaaS Platforms | 32.8% market share in genome reconstruction tools [1] | Enables scalable data storage, computation, and collaboration for large-scale genomic studies |

| AI/ML Integration | Improving variant interpretation accuracy and processing speed [2] | Accelerates analysis of massive genomic datasets; enables pattern recognition in chemogenomic screens |

| Automation & High-throughput Workflows | Enables access to optimization space not possible using traditional laboratory work [7] | Increases sample processing capacity; reduces manual errors in library preparation |

| Multi-omics Approaches | Development of testing panels combining genome, proteome, and metabolome data [2] | Provides comprehensive view of biological systems for drug discovery and biomarker identification |

| NGS Technology | 32.5% share of functional genomics technology segment [4] | Foundation for high-throughput genomic analysis across diverse applications |

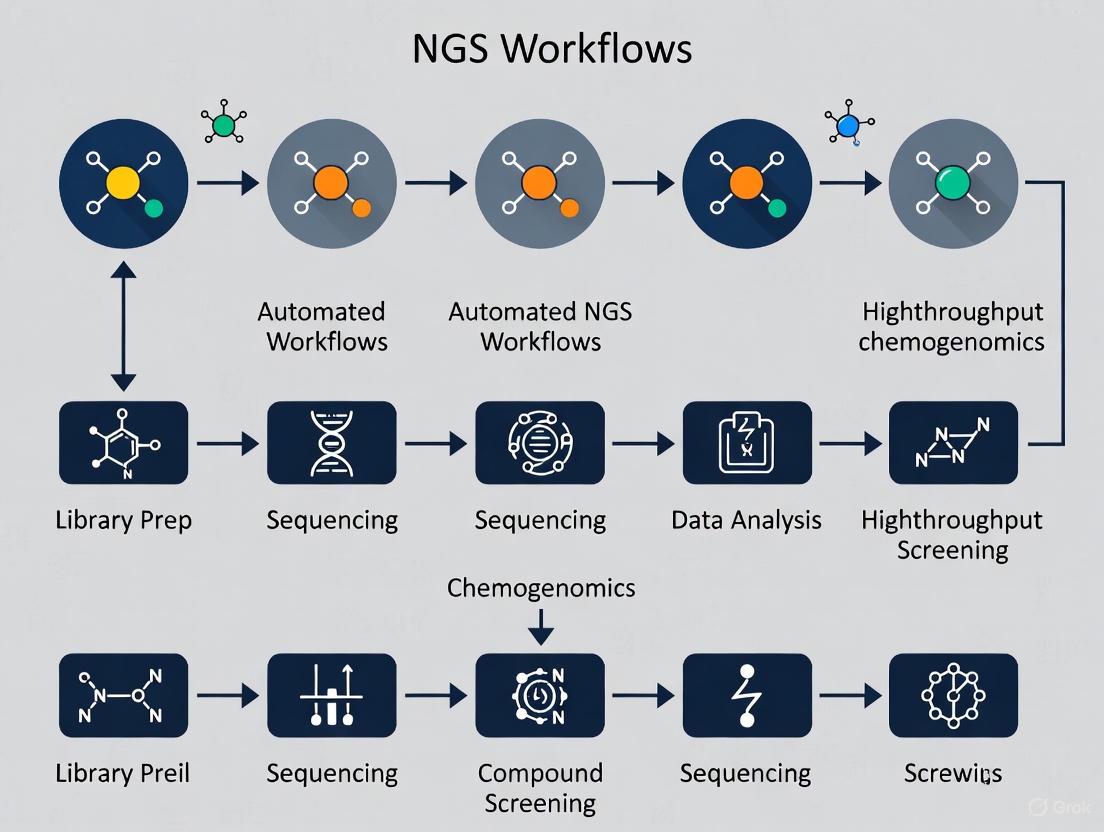

Automated NGS Workflow for High-Throughput Chemogenomics

The implementation of automated, high-throughput NGS workflows is particularly critical for chemogenomics research, which involves systematically profiling the interactions between chemical compounds and genomic elements. The optimization space for maximizing microbial conversions in biomanufacturing alone is vast, and "automation and rapid workflows can enable access to optimization space not possible using the throughput allowed by traditional laboratory work" [7]. For drug development professionals, this capability translates directly to accelerated target identification and validation cycles.

The fundamental NGS workflow comprises four key steps: nucleic acid extraction, library preparation, sequencing, and data analysis [5] [8]. In high-throughput chemogenomics applications, each of these steps presents specific scalability challenges that can be addressed through strategic automation and process optimization. The workflow detailed in this section has been specifically adapted for large-scale chemogenomic profiling, where processing hundreds to thousands of samples in parallel is essential for statistical power and discovery.

Protocol 1: Automated Nucleic Acid Extraction and Quality Control

Experimental Principle

The initial extraction phase is critical for generating high-quality sequencing data, particularly in chemogenomics applications where compound treatments may introduce inhibitors or affect nucleic acid integrity. Automated nucleic acid extraction ensures consistency across thousands of samples while minimizing cross-contamination risks – essential factors for reliable compound-genotype interaction studies [9].

Materials and Equipment

- Automated liquid handling system (e.g., Agilent Magnis NGS Prep System, Revvity chemagic 360)

- High-throughput nucleic acid extraction kits (compatible with automation)

- Cell lysis modules appropriate for your cell types

- Magnetic stand or plate handler for bead-based purification

- Real-time PCR system for quantification

- Fluorometric quantitation system (e.g., Qubit)

- Microfluidic electrophoresis system (e.g., Bioanalyzer, TapeStation)

Step-by-Step Procedure

Sample Plate Preparation: Arrange cell lysates from compound-treated samples in 96- or 384-well plates compatible with your automated liquid handling system. Include appropriate controls (untreated, vehicle controls, positive controls).

Automated Extraction Program:

- Program the liquid handling system to transfer 200μl of each lysate to a fresh processing plate.

- Add 20μl magnetic beads and 200μl binding buffer to each sample using automated pipetting.

- Incubate for 5 minutes at room temperature with periodic mixing.

- Engage magnetic stand for 2 minutes to separate beads from solution.

- Program aspiration of supernatant without disturbing bead pellets.

Wash Steps:

- Add 200μl wash buffer 1 to each well, resuspend beads, and incubate for 30 seconds.

- Engage magnetic stand, then aspirate supernatant.

- Repeat with wash buffer 2.

- Perform final wash with 80% ethanol.

Elution:

- Air-dry beads for 5-10 minutes to evaporate residual ethanol.

- Add 50μl elution buffer (10mM Tris-HCl, pH 8.5) to each well.

- Resuspend beads thoroughly and incubate for 2 minutes at room temperature.

- Engage magnetic stand and transfer 45μl of eluate to a fresh output plate.

Quality Control Assessment:

- Quantitate DNA/RNA yield using fluorometric methods (e.g., Qubit) with automated plate reading.

- Assess integrity via microfluidic electrophoresis (RIN >8.0 for RNA; distinct high molecular weight band for DNA).

- Verify purity through spectrophotometric ratios (A260/280: 1.8-2.0; A260/230: >2.0).

Critical Protocol Parameters

- Input Requirements: 10-1000ng DNA or 10-500ng RNA per sample

- Throughput: 96 samples in approximately 2 hours; 384 samples in 4 hours

- Success Indicators: Yield sufficient for library prep (>5ng/μl), high purity, minimal degradation

Protocol 2: Automated Library Preparation and Normalization

Experimental Principle

Automated library preparation standardizes the fragmentation, adapter ligation, and amplification steps required for NGS, eliminating the variability introduced by manual pipetting [9]. For chemogenomics applications, maintaining consistency across all samples is particularly crucial when comparing gene expression or mutation profiles across hundreds of compound treatments.

Materials and Equipment

- Automated library preparation kit (e.g., Illumina DNA Prep, KAPA HyperPrep)

- Dual-indexed adapter plates for sample multiplexing

- Automated liquid handling workstation with thermal cycling capability

- Magnetic separation module

- Real-time PCR quantification system

- Normalization and pooling automation

Step-by-Step Procedure

Fragmentation and End Repair:

- Program liquid handler to transfer 50-100ng of input DNA in 50μl volume to a PCR plate.

- Add 20μl fragmentation/end repair mix to each well.

- Seal plate and transfer to integrated thermal cycler: 5 minutes at 55°C, then hold at 4°C.

Adapter Ligation:

- Add 30μl ligation mix containing uniquely dual-indexed adapters to each well.

- Program addition of 15μl ligation enhancer and thorough mixing.

- Incubate for 15 minutes at 20°C using integrated temperature control.

Cleanup and Size Selection:

- Add 87μl bead-based cleanup solution to each well.

- Incubate 5 minutes at room temperature, engage magnets, and remove supernatant.

- Wash twice with 80% ethanol using automated dispensing and aspiration.

- Elute in 22μl resuspension buffer.

Library Amplification:

- Add 28μl PCR master mix to each well.

- Transfer to thermal cycler: 98°C for 45 seconds; [98°C for 15 seconds, 60°C for 30 seconds] × 8-12 cycles; 72°C for 1 minute.

- Perform final cleanup with 45μl beads, eluting in 25μl buffer.

Quality Control and Normalization:

- Quantify libraries using fluorometric methods with automated plate reading.

- Assess library size distribution via microfluidic electrophoresis (expected peak: 300-500bp).

- Program liquid handler to normalize all libraries to 4nM concentration based on quantification data.

- Combine equal volumes of normalized libraries into a sequencing pool.

Critical Protocol Parameters

- Input Requirements: 10-1000ng DNA or 10-500ng RNA per sample

- Throughput: 96 libraries in approximately 6 hours; 384 libraries in 9 hours

- Success Indicators: Appropriate library size distribution, concentration >2nM, minimal adapter dimer

Protocol 3: Automated Data Analysis Pipeline for Chemogenomics

Experimental Principle

The massive datasets generated in high-throughput chemogenomics require automated bioinformatic processing to extract meaningful biological insights. The analysis workflow progresses through three key stages: primary analysis (base calling, demultiplexing), secondary analysis (alignment, variant calling), and tertiary analysis (chemogenomic interpretation) [8]. Automation ensures consistency and enables the processing of hundreds of samples in parallel.

- High-performance computing cluster or cloud computing environment

- Workflow management system (e.g., Nextflow, Snakemake)

- Containerization platform (e.g., Docker, Singularity)

- Genomic analysis toolkit (e.g., GATK, Bioconductor)

- Custom scripts for chemogenomic profiling

Step-by-Step Procedure

Primary Analysis Setup:

- Configure workflow manager to process sequencing output from the instrument.

- Implement base calling and demultiplexing using bcl2fastq or similar tools.

- Program quality control checks (FastQC) with automated reporting.

Secondary Analysis Automation:

- Implement alignment to reference genome using BWA-MEM or STAR (for RNA-Seq).

- Program duplicate marking to remove PCR artifacts.

- Execute variant calling (HaplotypeCaller for DNA-Seq) or expression quantification (featureCounts for RNA-Seq).

Tertiary Analysis for Chemogenomics:

- Normalize expression counts or variant frequencies across all samples.

- Implement differential expression/analysis comparing each compound treatment to controls.

- Perform pathway enrichment analysis (GO, KEGG) for each compound.

- Generate compound-gene interaction networks.

Quality Monitoring and Reporting:

- Integrate automated quality metrics (alignment rate, duplication rate, coverage uniformity).

- Implement multiQC reporting for overall project assessment.

- Generate automated summary reports highlighting top compound-gene interactions.

Critical Protocol Parameters

- Computing Requirements: 32+ cores, 64GB+ RAM for typical datasets

- Processing Time: 4-24 hours depending on sample number and sequencing depth

- Success Indicators: High alignment rates (>90%), expected number of variants/expressed genes, identification of compound-specific signatures

Essential Research Reagent Solutions

The successful implementation of automated, high-throughput genomic workflows requires careful selection of reagents and materials specifically designed for automation compatibility and consistency. The following table details essential solutions for scalable chemogenomics research:

Table 3: Essential Research Reagent Solutions for Automated Genomic Workflows

| Reagent Category | Specific Product Examples | Function in Workflow | Automation Compatibility Features |

|---|---|---|---|

| Nucleic Acid Extraction Kits | Magnetic bead-based purification kits | Isolation of high-quality DNA/RNA from cell lysates | Pre-filled deep well plates, reduced incubation times, room temperature processing |

| Library Preparation Kits | Illumina DNA Prep, KAPA HyperPrep, NEBNext Ultra II | Fragmentation, adapter ligation, and amplification of libraries | Reduced hands-on time, pre-mixed reagents, stable at room temperature |

| Automated Liquid Handling Consumables | Low-retention tips, pre-filled reagent plates, magnetic beads | Precise liquid transfers and purification steps | Compatibility with automated systems, reduced bubble formation, minimal retention |

| Quality Control Reagents | Qubit assay kits, Bioanalyzer reagents, qPCR quantification mixes | Assessment of nucleic acid quality, quantity, and library integrity | Pre-diluted standards, reduced pipetting steps, multi-plate compatibility |

| Normalization and Pooling Buffers | TE buffer, resuspension buffers, hybridization buffers | Standardization of library concentrations and preparation for sequencing | Chemical stability, viscosity optimization for automated pipetting |

| Sequencing Reagents | Illumina SBS chemistry, NovaSeq XP kits | Cluster generation and sequencing-by-synthesis | Enhanced stability, reduced volume requirements, increased output |

The growing demand for scalable genomic analysis represents a fundamental shift in biomedical research, particularly in high-throughput chemogenomics where the systematic profiling of compound-genome interactions drives therapeutic discovery. The market drivers analyzed in this application note – including technological advancements, expanding applications, and the adoption of cloud computing and AI – collectively underscore the critical importance of implementing automated, robust workflows for genomic analysis.

The protocols detailed herein provide a framework for laboratories seeking to enhance their throughput and reproducibility in chemogenomic studies. As the field continues to evolve, several emerging trends warrant particular attention. The integration of artificial intelligence into genomic analysis pipelines is accelerating, with models specifically designed for genomic data showing promise in predicting compound-gene interactions and optimizing experimental design [4]. Additionally, the continued expansion of multi-omics approaches will likely further drive demand for scalable solutions that can integrate genomic, transcriptomic, proteomic, and metabolomic data into a unified analytical framework [2].

For research organizations and drug development companies, strategic investment in automated NGS workflows represents not merely a tactical improvement but a fundamental capability that will determine competitive advantage in the evolving landscape of precision medicine and chemogenomic discovery.

High-Throughput Screening (HTS) is a foundational technology in modern drug discovery and chemogenomics research, enabling the rapid testing of thousands to millions of chemical compounds against biological targets. However, traditional manual approaches to screening create significant bottlenecks that limit throughput, introduce error, and constrain the scale of research. Manual processes are notoriously labor-intensive, requiring precise pipetting, repeated wash steps, and time-sensitive manipulations that are difficult to maintain across large-scale experiments [10]. These limitations become particularly problematic in the context of Next-Generation Sequencing (NGS) workflows, where the complexity of sample preparation can undermine the revolutionary throughput of the sequencing technology itself.

The integration of automation addresses these fundamental constraints by transforming HTS from a resource-intensive process to an efficient, reproducible, and scalable research platform. Automated systems streamline experimental workflows, minimize human error, and maximize throughput and efficiency through sophisticated liquid handling robots, integrated robotic arms, and advanced data analysis software [11]. This paradigm shift is especially critical for quantitative HTS (qHTS) paradigms, where compounds are tested at multiple concentrations to generate comprehensive concentration-response curves, requiring maximal efficiency and miniaturization [12]. By overcoming manual limitations, automation enables researchers to focus on experimental design and data interpretation rather than repetitive manual tasks, accelerating the entire drug discovery pipeline.

Key Bottlenecks in Manual HTS Workflows

Labor Intensity and Human Error

Manual HTS processes require extensive hands-on time for precise pipetting, repeated wash steps, and time-sensitive manipulations. In NGS workflows, for example, manual sample preparation necessitates numerous pipetting steps that create opportunities for human error [10]. These errors can be amplified during subsequent PCR stages, potentially ruining entire experiments and wasting significant time and resources. The consistency of manual pipetting varies between researchers, leading to batch effects where technical factors rather than biological variables influence results [10]. Such batch effects can mask true biological differences and lead to incorrect conclusions, particularly problematic in large-scale chemogenomics studies where reproducibility is essential.

Time Constraints and Throughput Limitations

The high-throughput potential of modern screening and sequencing technologies is fundamentally limited by manual sample preparation speeds. Manual processes create a significant bottleneck as researchers must spend vast amounts of time on preparatory work rather than innovative research [10]. This limitation restricts laboratory capabilities for large-scale experiments and reduces the time available for experimental design and data analysis. In conventional screening operations, the decoupling of screening and drug development has created unique challenges that demand efficient, unattended screening capabilities, particularly in academic settings where resources may be limited [12].

Contamination Risks and Consistency Issues

Cross-contamination presents a substantial risk during manual HTS and NGS sample preparation, potentially leading to inaccurate results and data misinterpretation [10]. The risk is particularly high when processing multiple samples simultaneously, as improper handling can compromise entire experimental batches. Additionally, maintaining consistency across manual processes is challenging, especially when scaling experiments for large studies or clinical applications. Researcher-to-researcher variations in technique introduce variability that can affect data quality and reproducibility [10].

Table 1: Primary Bottlenecks in Manual HTS and NGS Workflows

| Bottleneck Category | Specific Challenges | Impact on Research |

|---|---|---|

| Labor Intensity | Precise pipetting, repeated wash steps, time-sensitive manipulations | High error rates, increased hands-on time, reduced productivity |

| Time Constraints | Limited processing speed, extensive hands-on requirements | Restricted throughput, delayed experiments, reduced scalability |

| Contamination Risks | Cross-contamination between samples, environmental exposure | Inaccurate results, data misinterpretation, failed experiments |

| Consistency Issues | Researcher-to-researcher variation, batch effects | Reduced reproducibility, compromised data quality, invalid conclusions |

Automation Solutions for HTS Bottlenecks

Integrated Robotic Screening Systems

Modern automated screening systems incorporate multiple components into unified platforms capable of storing compound collections, performing assay steps, and measuring various outputs without human intervention. These systems typically include peripheral units such as assay and compound plate carousels, liquid dispensers, plate centrifuges, and plate readers, all serviced by high-precision robotic arms [12]. For example, the system implemented at the NIH's Chemical Genomics Center (NCGC) features random-access online compound library storage carousels with a capacity of over 2.2 million samples, extremely reliable plate handling, innovative lidding systems, multifunctional reagent dispensers, and anthropomorphic arms for plate transport and delidding [12]. Such integration enables fully automated unattended screening in the 1,536-well plate format, dramatically increasing efficiency while reducing reagent use and human error.

Liquid Handling and Process Automation

Liquid handling robots serve as the workhorses of automated HTS, accurately transferring samples and compounds into assay plates with precision and efficiency unmatched by manual pipetting [11]. These robotic systems employ advanced technology to manipulate small liquid volumes across hundreds or thousands of wells simultaneously, ensuring reproducibility across experiments and minimizing inter-sample variability. In modern HTS laboratories, these robots are seamlessly integrated with other automated systems including plate readers, imaging devices, and data analysis software, creating a cohesive workflow where each component communicates and coordinates with others [11]. This integration minimizes downtime between assay steps and maximizes throughput, enabling researchers to screen large compound libraries more rapidly and with greater consistency.

Automated Data Management and Analysis

Advanced software platforms form a critical component of automated HTS, tracking experimental parameters, documenting results, and analyzing the extensive datasets generated during screening campaigns [11]. These platforms automate essential data processing tasks including signal quantification, dose-response curve fitting, and hit identification, enabling researchers to derive meaningful insights more rapidly. The integration of artificial intelligence and machine learning (AI/ML) technologies further enhances data analysis capabilities, with algorithms trained on screening data to identify additional hits and prioritize compounds for further validation based on predicted activity, off-target effects, and drug-likeness [13]. Automated data FAIRification (Findability, Accessibility, Interoperability, and Reuse) protocols, such as those implemented in tools like ToxFAIRy, convert high-throughput data into machine-readable formats that support reuse and meta-analysis [14].

Diagram 1: HTS Automation Overcoming Manual Limitations. This workflow illustrates how automated solutions address specific bottlenecks in manual HTS processes.

Application Notes: Implementing Automated HTS in Chemogenomics

Quantitative HTS (qHTS) for Concentration-Response Profiling

The quantitative HTS (qHTS) paradigm represents a significant advancement made possible through automation, wherein each library compound is tested at multiple concentrations to construct concentration-response curves (CRCs) and generate comprehensive datasets for each assay [12]. This approach mitigates the high false-positive and false-negative rates associated with conventional single-concentration screening by testing compounds across a approximately four-log range of concentrations in an efficient, automated manner. At the NCGC, implementation of qHTS on an integrated robotic system has enabled the generation of over 6 million CRCs from more than 120 assays within three years [12]. The practical implementation of qHTS for cell-based and biochemical assays across libraries of >100,000 compounds requires maximal efficiency and miniaturization, as well as the ability to easily accommodate different assay formats and screening protocols – all capabilities provided by advanced automation systems.

High-Content Screening with Transcriptomic Readouts

Automation has enabled the evolution from simple HTS to high-content screening (HCS) that incorporates multiparameter analysis, including transcriptomic readouts. HCS platforms utilize automated imaging systems and advanced image analysis algorithms to gather quantitative data from complex cellular images, analyzing thousands of cells per well to provide detailed information on cellular morphology, protein localization, and signaling pathway activity [11]. Recent developments in high-throughput RNA-seq technology have further enhanced these capabilities by adding transcriptome-wide information to screening outputs. Methods like Discovery-seq provide a cost-effective way to obtain high-quality transcriptomics data during compound screens, offering comprehensive analysis of genes and pathways affected by chemical treatments [11]. This integrated approach provides a much deeper layer of information that researchers can gather from their HTS campaigns, enabling more sophisticated assessment of mechanisms of action, toxicity, and off-target effects much earlier in the drug development pipeline.

Toxicity Screening and Profiling

Automated HTS approaches have been successfully implemented for broad toxic mode-of-action-based hazard assessment through integrated testing protocols. These systems combine the analysis of multiple assays into comprehensive hazard values, such as the Tox5-score, which integrates dose-response parameters from different endpoints and conditions into a final toxicity score [14]. Automated platforms can simultaneously assess multiple toxicity endpoints including cell viability, DNA damage, oxidative stress, and apoptosis across several time points and cell models. The resulting data supports clustering and read-across based on endpoint, timepoint, and cell line specific toxicity scores, enabling bioactivity-based grouping of chemicals and nanomaterials [14]. This automated, multi-parametric approach to toxicity screening provides a more nuanced and informative alternative to traditional single-endpoint testing, facilitating better safety assessment of new chemical entities.

Table 2: Automated HTS Applications in Chemogenomics Research

| Application Area | Automated Approach | Key Benefits |

|---|---|---|

| Quantitative HTS (qHTS) | Testing each compound at multiple concentrations using automated dilution series | Generates comprehensive concentration-response data, reduces false positives/negatives |

| High-Content Screening | Automated imaging systems with advanced image analysis algorithms | Multiparameter cellular analysis, detailed morphological and functional data |

| Transcriptomic Profiling | High-throughput RNA-seq integrated with compound screening | Pathway-level understanding of compound effects, earlier mechanism of action data |

| Toxicity Screening | Automated multi-endpoint testing across time points and cell models | Comprehensive hazard assessment, supports bioactivity-based grouping |

Protocols for Automated HTS Implementation

Protocol: Quantitative HTS (qHTS) Implementation

Objective: To implement a quantitative high-throughput screening approach that tests each compound at multiple concentrations for robust concentration-response profiling [12].

Materials:

- Integrated robotic screening system with compound storage carousels

- 1,536-well assay plates

- Liquid handling robots with solenoid valve dispensers

- Plate readers compatible with various detection technologies

- Compound library formatted as concentration series

Procedure:

- System Configuration: Ensure the automated system includes random-access compound storage with capacity for concentration-series plates. The NCGC system configuration provides 1,458 positions dedicated to compound storage and 1,107 positions for assay plates [12].

- Assay Plate Preparation: Program liquid handlers to dispense reagents and cells into 1,536-well plates. Miniaturization to this format is essential for efficiency and reagent conservation.

- Compound Transfer: Utilize a 1,536-pin array for rapid compound transfer from source plates to assay plates across the concentration series.

- Incubation Management: Coordinate plate movement between multiple incubators capable of controlling temperature, humidity, and CO₂ to maintain optimal assay conditions.

- Detection and Reading: Automate plate transfer to appropriate detectors (e.g., ViewLux, EnVision) based on assay readout requirements (fluorescence, luminescence, absorbance, etc.).

- Data Processing: Implement automated data capture and concentration-response curve fitting using specialized software algorithms.

Validation: Include control compounds with known activity on each plate to monitor assay performance and system operation. The qHTS approach should generate between 700,000 and 2,000,000 data points per full-library screen [12].

Protocol: Automated High-Content Toxicity Screening

Objective: To perform automated multi-parameter toxicity assessment using five complementary endpoints for comprehensive hazard evaluation [14].

Materials:

- Automated plate replicators, fillers, and readers

- Cell culture systems (e.g., BEAS-2B cells)

- Assay reagents: CellTiter-Glo (viability), DAPI (cell number), gammaH2AX (DNA damage), 8OHG (oxidative stress), Caspase-Glo 3/7 (apoptosis)

- Test compounds and reference chemicals

- Automated imaging and analysis systems

Procedure:

- Cell Seeding: Automate cell dispensing into assay plates using liquid handlers. Maintain consistency across replicates.

- Compound Exposure: Program robotic systems to apply test materials at 12 concentration points across 4 biological replicates.

- Time Point Management: Schedule automated processing at multiple time points (e.g., 0, 6, 24, 72 hours) to capture kinetic responses.

- Endpoint Measurement: Coordinate sequential measurement of five toxicity endpoints:

- Luminescence measurement for cell viability via ATP content

- Fluorescence imaging for cell number using DAPI staining

- Fluorescence detection for caspase-3 activation (apoptosis)

- Oxidative stress assessment via 8OHG staining

- DNA double-strand break quantification through γH2AX staining

- Data Integration: Automate data collection and processing through the ToxFAIRy Python module or similar tools to calculate Tox5-scores [14].

- FAIRification: Convert experimental data and metadata into standardized, machine-readable formats using automated workflows.

Validation: Include reference chemicals and nanomaterial controls in each screening batch. The protocol should generate approximately 58,368 data points per screening campaign [14].

Diagram 2: Automated HTS Protocol Workflow. This diagram outlines the key steps in a generalized automated HTS protocol, highlighting quality control checkpoints.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Research Reagent Solutions for Automated HTS

| Reagent/Technology | Function | Application Notes |

|---|---|---|

| CellTiter-Glo Assay | Luminescent measurement of ATP content for viability assessment | Compatible with automation, provides reproducible viability data [14] |

| Caspase-Glo 3/7 Assay | Luminescent measurement of caspase activation for apoptosis detection | Suitable for automated screening platforms, kinetic measurements possible [14] |

| DAPI Staining | Fluorescent DNA staining for cell enumeration | Requires automated imaging systems, provides cell count data [14] |

| gammaH2AX Staining | Immunofluorescent detection of DNA double-strand breaks | Essential for genotoxicity assessment, compatible with automated HCS [14] |

| 8OHG Staining | Detection of nucleic acid oxidative damage | Marker of oxidative stress, requires automated imaging [14] |

| Unique Dual Index (UDI) Adapters | Barcode samples for multiplexed NGS | Critical for color-balanced sequencing, reduces index hopping [15] |

| Toehold Probes | Double-stranded molecular probes for variant detection | Enables color-mixing strategies for multiplex variant detection [16] |

| iTaq Universal Probes Supermix | PCR reaction mixture for probe-based detection | Compatible with automated liquid handling, consistent performance [16] |

Automation technologies have fundamentally transformed high-throughput screening by systematically addressing the critical bottlenecks associated with manual approaches. Through integrated robotic systems, precision liquid handling, and sophisticated data analysis tools, automation enables researchers to overcome limitations in throughput, reproducibility, and scalability that previously constrained chemogenomics research. The implementation of automated qHTS paradigms, high-content screening with transcriptomic readouts, and multi-parameter toxicity profiling demonstrates how these technologies enhance both the efficiency and quality of screening data. As automation continues to evolve with advancements in AI-driven data analysis and increasingly sophisticated robotic systems, its role in enabling robust, reproducible, and scalable high-throughput screening will only expand, further accelerating drug discovery and chemogenomics research.

The shift from manual procedures to automated systems in next-generation sequencing (NGS) is a pivotal transformation in modern genomics, particularly for high-throughput chemogenomics research. Automated liquid handlers and integrated workstations minimize hands-on time, reduce user-to-user variability, and enhance reproducibility, which is critical for generating robust, high-quality data in drug discovery pipelines [17]. These technologies enable researchers to standardize complex, multi-step NGS library preparation workflows, thereby accelerating the transition from genomic data to actionable therapeutic insights.

This application note details the key technologies, protocols, and practical considerations for implementing automated NGS solutions. It provides a framework for selecting the appropriate automation level—from modular liquid handlers to fully integrated workstations—to meet specific research throughput, budget, and application requirements.

The Scientist's Toolkit: Automated NGS Platforms and Reagents

Successful implementation of an automated NGS workflow requires a combination of specialized hardware and optimized reagent kits. The table below catalogues essential research reagent solutions and their functions within the automated workflow.

Table 1: Essential Research Reagent Solutions for Automated NGS Workflows

| Item | Function | Example Kits & Notes |

|---|---|---|

| Library Prep Kits | Fragments DNA/RNA and attaches sequencing adapters. | Illumina DNA Prep [18]; KAPA Library Prep kits [17]. Designed with overage for automated dead volumes. |

| Enrichment Panels | Selectively captures genomic regions of interest. | Used in Illumina DNA Prep with Enrichment [18]; crucial for targeted sequencing in chemogenomics. |

| Barcoding/Indexing Oligos | Uniquely tags individual samples for multiplexing. | Enables pooling of hundreds of samples [19]; critical for deconvolution in high-throughput screens. |

| Bead-Based Cleanup Reagents | Purifies nucleic acids between reaction steps. | G.PURE NGS Clean-Up Device [19]; automates removal of enzymes, primers, and adapter dimers. |

| Quantification Kits | Measures library concentration and quality. | Used pre-sequencing to ensure optimal loading [17]; can be integrated on-deck in some workstations. |

Commercially Available Automation Platforms

The market offers a spectrum of automation solutions, from flexible liquid handlers that can be incorporated into existing workflows to fully integrated, application-specific workstations. The choice depends on required throughput, level of walk-away automation, and budget.

Table 2: Comparison of Automated Liquid Handling Systems and Integrated Workstations

| Platform Type | Example Systems | Key Features | Throughput & Applications |

|---|---|---|---|

| Modular Liquid Handlers | Hamilton NGS STAR [18], Beckman Biomek i7 [18], Agilent Bravo NGS [20] | Flexible, open systems. Bravo NGS offers a compact design with optional on-deck thermal cycler (ODTC) [20]. | DNA Prep (96 libraries) [18]; RNA Prep (48 libraries) [18]. Ideal for labs with variable protocols. |

| Integrated Workstations | Aurora VERSA NGLP [21], Revvity explorer G3 [22], Roche AVENIO Edge [17] | Walk-away, end-to-end solutions. VERSA NGLP automates extraction, library prep, and PCR setup [21]. AVENIO Edge requires minimal setup time [17]. | Full workflow from nucleic acid extraction to ready-to-sequence libraries [21]. Best for standardized, high-volume labs. |

| Specialized & Low-Volume Systems | DISPENDIX I.DOT [19] | Non-contact dispenser for miniaturization. Capable of normalizing and pooling samples with a 1 µL dead volume [19]. | Dispenses nanoliter volumes; enables reaction miniaturization to 1/10th of standard volumes [19]. |

Detailed Experimental Protocols for Automated NGS

Protocol: Automated Library Preparation for Whole Genome Sequencing

Application Note: This protocol describes the automation of Illumina DNA Prep on a Hamilton Microlab NGS STAR or Beckman Biomek i7 liquid handler, enabling the preparation of 96 DNA libraries with over 65% less hands-on time compared to manual methods [18].

Materials:

- Input: 100 ng of high-quality genomic DNA per sample.

- Consumables: Illumina DNA Prep Kit [18], 96-well reaction plates, recommended tips.

- Equipment: Hamilton Microlab NGS STAR or Beckman Biomek i7 automated liquid handling system.

Methodology:

- Tagmentation: The automated system dispenses genomic DNA and tagmentation mix into a 96-well plate. The plate is transferred to an on-deck or external thermal cycler for a 10-minute incubation at 55°C to fragment the DNA.

- Stop Tagmentation and Amplification Prep: The system adds a neutralizing reagent to stop the tagmentation reaction, followed by the addition of a unique combination of Illumina CD Indexes to each sample well for sample multiplexing [18].

- PCR Amplification: The plate undergoes PCR cycling (program: 68°C for 1 minute; 98°C for 45 seconds, then cycle between 98°C for 15 seconds, 60°C for 30 seconds, and 68°C for 30 seconds for a total of 6-13 cycles; final extension at 68°C for 1 minute).

- Bead-Based Cleanup: The protocol integrates multiple bead-based cleanups using reagents like the G.PURE NGS Clean-Up Device to purify the DNA after tagmentation and PCR, removing enzymes, salts, and unwanted fragments [19].

- Final Library Elution: The purified DNA libraries are eluted in a resuspension buffer. The automated system can then proceed to normalization and pooling, or the libraries can be stored at -20°C.

Quality Control:

- Quantify final libraries using a fluorometric method (e.g., Qubit).

- Assess library size distribution using an instrument such as the Fragment Analyzer system [18].

Protocol: Automated Normalization and Pooling of NGS Libraries

Application Note: This protocol utilizes the DISPENDIX I.DOT Liquid Handler to normalize and pool up to 96 finished NGS libraries in a single, rapid step, minimizing dead volume and cross-contamination risk [19] [23].

Materials:

- Input: Quantified NGS libraries in a 96-well plate.

- Consumables: Fresh, clean source plate for the pooling destination.

- Equipment: DISPENDIX I.DOT Liquid Handler.

Methodology:

- Data Input: The concentration of each library, as determined by quality control, is input into the I.DOT software.

- Automated Normalization: The I.DOT calculates the volume required from each library to achieve an equimolar concentration. It then aspirates the libraries from the source plate.

- Simultaneous Pooling: The system dispenses the calculated, variable volumes of each normalized library into a single well of the destination plate. This combines the normalization and pooling steps into one efficient process [19].

- Verification: The I.DOT's software provides a log file confirming the volumes dispensed from each well, offering a record that the correct libraries were pooled as intended [19].

Technical Considerations for Implementation

Implementing an automated NGS workflow requires careful planning beyond selecting a platform. Key technical considerations ensure operational success and a strong return on investment.

- Throughput and Scalability: The system must align with your lab's current and projected sample volume. Integrated workstations like the Revvity explorer G3 are designed for high-throughput environments, whereas modular systems offer more flexibility for evolving needs [22].

- Liquid Handling Precision: For reaction miniaturization and costly clinical samples, precision at the nanoliter scale is paramount. Systems like the I.DOT with DropDetection technology verify dispensing volumes to ensure accuracy and result reliability [23].

- Software and Integration: The platform's software should be intuitive and compatible with Laboratory Information Management Systems (LIMS) for seamless sample tracking [23]. Evaluate the level of vendor support for protocol setup and troubleshooting, which is a key offering from providers like Illumina and Roche [18] [17].

- Cost and Return on Investment (ROI): While the initial investment is significant, automation provides long-term savings through reduced reagent consumption (via miniaturization), increased personnel efficiency, and higher data quality [23].

Workflow Diagram of an Automated NGS Pipeline

The following diagram illustrates the core stages of a fully automated NGS workflow, from sample input to sequencing-ready pools, and highlights the technologies involved at each step.

Diagram 1: Automated NGS workflow from sample to sequence-ready pool.

Automated liquid handlers and integrated workstations are no longer luxuries but core technologies for efficient, reproducible, and high-throughput NGS in chemogenomics research. The landscape of solutions is diverse, ranging from flexible platforms that automate specific protocol steps to walk-away systems that manage the entire workflow from sample extraction to sequencing-ready pools.

Selecting the right system requires a careful assessment of throughput needs, precision requirements, and the balance between initial investment and long-term efficiency gains. By leveraging validated protocols and partnering with vendors that offer robust application support, research teams can successfully deploy these key technologies to accelerate drug discovery and the development of novel therapeutics.

The escalating demand for reproducible, high-throughput data in chemogenomics and biomarker discovery is fundamentally reshaping next-generation sequencing (NGS) workflows. No single company possesses the complete suite of technologies required to seamlessly bridge the gap from biological sample to analyzable genomic data. This necessity has catalyzed a series of strategic partnerships between leading reagent providers and automation specialists. These collaborations are engineered to create integrated, end-to-end solutions that mitigate manual processing errors, enhance experimental reproducibility, and accelerate the pace of genomic discovery. This application note details specific partnerships and their resulting automated protocols, providing a framework for their implementation in high-throughput chemogenomics research.

The following table summarizes recent strategic partnerships that are defining the landscape of automated NGS workflows. These collaborations pair best-in-class assay chemistry with precision automation to address critical bottlenecks in sample preparation.

Table 1: Strategic Partnerships in Automated NGS Workflows

| Reagent Company | Automation Company | Collaborative Focus & Integrated Products | Key Benefits for Research | Status/Date |

|---|---|---|---|---|

| Integrated DNA Technologies (IDT) [24] [25] | Beckman Coulter Life Sciences [24] | Automation of IDT's Archer FUSIONPlex, VARIANTPlex, and xGen Hybrid Capture workflows on the Biomek i3 Benchtop Liquid Handler [24]. | Compact footprint; on-deck thermocycling; reduced hands-on time for lower-throughput sample volumes [24]. | In development (Nov 2025) [24]. |

| Integrated DNA Technologies (IDT) [25] | Hamilton [25] | Automation scripts for IDT's xGen and Archer NGS products on Hamilton's Microlab STAR and NIMBUS platforms [25]. | Scalability, consistency, and efficiency for comprehensive genomic profiling (CGP) in solid tumor and heme research [25]. | Global agreement (Oct 2025) [25]. |

| New England Biolabs (NEB) [26] | Volta Labs [26] | Integration of NEBNext reagents, starting with the Ultra II FS DNA Library Prep Kit, onto the Callisto Sample Preparation Platform [26]. | Fully automated, walk-away library prep; "Any Sequencer, Any Chemistry" flexibility (Illumina, Oxford Nanopore, PacBio) [26]. | Co-development partnership (Nov 2025) [26]. |

| HP [27] | Tecan [27] | Development of the Duo Digital Dispenser, combining single-cell and reagent dispensing using HP's inkjet technology [27]. | 40x faster drug discovery dosing; single-cell isolation in <5 minutes; surfactant-free reagent dispensing [27]. | Launched (May 2025) [27]. |

Detailed Experimental Protocols

Protocol: Automated Library Prep using IDT xGen Workflow on Hamilton NIMBUS Platform

This protocol outlines the procedure for automated library preparation for whole-genome sequencing using IDT's xGen hybridization capture reagents on a Hamilton NIMBUS system, derived from the stated partnership objectives [25].

The Scientist's Toolkit: Essential Materials

Table 2: Key Reagents and Consumables

| Item | Function / Description |

|---|---|

| IDT xGen Hybridization Capture Reagents [25] | A suite of probes designed for targeted sequencing, enabling the enrichment of specific genomic regions of interest. |

| Hamilton NIMBUS Liquid Handling Platform [25] | A precision automated workstation capable of performing complex liquid handling steps for NGS library construction. |

| NEBNext Ultra II FS DNA Library Prep Kit [26] | Provides enzymes and buffers for DNA fragmentation, end-prep, adapter ligation, and PCR amplification. |

| Microplates (96- or 384-well) | Reaction vessels compatible with the NIMBUS deck layout. |

| Magnetic Beads | For post-reaction clean-up and size selection steps. |

Methodology:

System Setup and Pre-Run Checklist:

- Ensure the Hamilton NIMBUS platform is calibrated. Load the validated method script developed through the IDT-Hamilton partnership [25].

- Position labware on the deck: source plates containing purified genomic DNA, reagent troughs with IDT xGen and NEB library prep reagents [25] [26], tip boxes, and microplate(s) for reactions.

- Pre-cool the on-deck thermocycler (if available).

Automated Fragmentation and End-Repair:

- The NIMBUS transfers a defined volume of genomic DNA (e.g., 100 ng in 50 µL) to the reaction plate.

- Using the integrated method, the system adds fragmentation mix from the NEBNext kit. The plate is transferred to the on-deck thermocycler for a programmed incubation to achieve desired fragment sizes (e.g., 300-400 bp) [26].

- Following fragmentation, the system adds end-repair enzyme mix to generate blunt-ended DNA fragments.

Adapter Ligation and Clean-Up:

- The workstation dispenses unique dual-indexed adapters and ligation master mix to each sample.

- After ligation, the protocol engages magnetic beads for a double-sided size selection clean-up. The NIMBUS performs all aspiration and dispensing steps to isolate the ligated product.

Hybridization Capture with IDT xGen Probes:

- The automated system transfers the purified library into a fresh plate and adds IDT xGen Hybridization Buffer and Blocking Oligos.

- The system then adds the specific xGen Probe Panels (e.g., for a cancer gene panel). The entire plate is sealed and transferred to the thermocycler for a prolonged hybridization incubation (e.g., 4-16 hours at 65°C) [25].

Post-Capture Amplification and Final Clean-Up:

- After hybridization, the system performs a series of stringent washes to remove non-specifically bound DNA.

- A post-capture PCR mix is added to amplify the enriched libraries.

- A final magnetic bead clean-up is performed. The NIMBUS elutes the final prepared library in elution buffer, ready for quantification and sequencing.

Workflow Visualization: Automated NGS from Sample to Sequencer

The following diagram illustrates the integrated, automated workflow described in the protocol, highlighting the roles of the respective partners' technologies.

Impact on Chemogenomics Research

The synergy from these partnerships delivers tangible benefits that directly address the core demands of high-throughput chemogenomics. Automated workflows ensure that the processing of hundreds of cell lines or compound-treated samples is consistent from batch to batch, a critical factor for generating robust, reproducible data for structure-activity relationship analysis [24] [25]. Furthermore, the significant reduction in hands-on time—a key benefit highlighted across all partnerships—frees highly skilled researchers to focus on experimental design and data interpretation rather than manual pipetting [24] [26]. Finally, the modular and scalable nature of these solutions, such as the "Any Sequencer, Any Chemistry" approach from Volta and NEB, provides the flexibility required to adapt to evolving research questions and sequencing technologies without overhauling core laboratory infrastructure [26].

Strategic collaborations between reagent and automation companies are more than a trend; they are a fundamental driver of innovation in modern genomics. By providing integrated, validated, and automation-ready workflows, these partnerships are directly empowering researchers to overcome traditional limitations of throughput, reproducibility, and scalability. As exemplified by the specific protocols and partnerships detailed herein, this collaborative model is proving indispensable for accelerating the pace of discovery in chemogenomics and the broader pursuit of precision medicine.

Building Your Automated NGS Workflow: From Library Prep to Data Generation

Automated liquid handling (ALH) systems and dedicated library preparation instruments are foundational to establishing robust, high-throughput Next-Generation Sequencing (NGS) workflows for chemogenomics research [28]. These technologies are critical for screening vast compound libraries against genomic targets, a process that demands exceptional precision, reproducibility, and scalability. The global NGS library preparation market, projected to grow from USD 2.07 billion in 2025 to USD 6.44 billion by 2034, reflects a significant shift towards automated and standardized workflows, with the automated preparation segment being the fastest-growing [29]. This application note provides a structured framework for selecting and implementing these core components to accelerate drug discovery.

Core Component Selection Criteria

Automated Liquid Handling Systems

ALH systems eliminate manual pipetting errors, reduce contamination risks, and standardize reagent dispensing, which is paramount for generating reliable, high-quality sequencing data in large-scale chemogenomics projects [28] [9]. When selecting a system, key features to consider include multi-channel pipetting, precision dispensing for sub-microliter volumes, and integration capabilities with Laboratory Information Management Systems (LIMS) [28].

Table 1: Key Considerations for Selecting an Automated Liquid Handler

| Consideration | Description & Relevance to Chemogenomics |

|---|---|

| Throughput Requirements | Dictates the scale of simultaneous processing. High-throughput systems are essential for screening large compound and genomic libraries [23]. |

| Precision and Accuracy | Critical for detecting single-nucleotide variants and ensuring data integrity in dose-response studies and genomic analysis [23]. |

| Sample Volume Ranges | The ability to accurately handle nanoliter volumes conserves precious clinical samples and high-value chemical compounds [23]. |

| Contamination Prevention | Features like disposable tips and acoustic liquid handling (non-contact) prevent cross-contamination between assay plates, ensuring result purity [23] [30]. |

| Integration with LIMS | Ensures full sample traceability from compound addition to sequencing data output, a key requirement for regulated research environments [28] [9]. |

Several types of liquid handling systems are available, each suited to different applications:

- Automated Liquid Handlers: Standard workhorses for most NGS library preparation steps, offering a balance of speed, accuracy, and flexibility [23].

- Integrated Workstations: Combine liquid handling with other instruments (e.g., plate sealers, washers) to create a fully automated walk-away solution for the entire library prep workflow [23].

- Acoustic Liquid Handlers: Use sound energy to transfer nanoliter-volume droplets without physical contact, ideal for miniaturized assays, PCR setup, and transferring precious samples in high-density plates [30].

Library Preparation Instruments

The selection of a library preparation platform directly impacts sequencing success. Automation in this stage standardizes processes, increases throughput, and enhances reproducibility by eliminating batch-to-batch variations inherent in manual protocols [9].

Table 2: NGS Library Preparation Market Overview & Trends (Data sourced from [29])

| Parameter | Market Data and Trends |

|---|---|

| Market Size (2025) | USD 2.07 Billion |

| Projected Market Size (2034) | USD 6.44 Billion |

| CAGR (2025-2034) | 13.47% |

| Dominating Region (2024) | North America (44% share) |

| Fastest Growing Region | Asia Pacific (CAGR of 15%) |

| Largest Product Segment | Library Preparation Kits (50% share in 2024) |

| Fastest-Growing Prep Type | Automated/High-Throughput Preparation (CAGR of 14%) |

Key technological shifts influencing instrument selection include the automation of workflows for higher efficiency and reproducibility, the integration of microfluidics for precise microscale control and reagent conservation, and advancements in single-cell and low-input kits that expand applications in oncology and personalized medicine [29].

Experimental Protocols for Automated NGS Workflows

Automated NGS Library Preparation Protocol

This protocol is designed for an integrated ALH system or workstation to process 96 samples for Illumina short-read sequencing.

Reagent Solutions:

- Fragmentation Mix: Enzymes or reagents for shearing DNA into desired fragment sizes.

- End-Repair & A-Tailing Mix: Enzymes to create blunt-ended, 5'-phosphorylated fragments with a single A-overhang for adapter ligation [31].

- Ligation Mix: Contains T4 DNA Ligase and indexing adapters with T-overhangs for ligation to A-tailed fragments [31].

- PCR Master Mix: Contains DNA polymerase, dNTPs, and primers for amplifying the final library.

- SPB Beads: Solid-phase reversible immobilization (SPRI) beads for post-reaction clean-up and size selection.

Procedure:

- DNA Normalization & Fragmentation:

- The ALH system transfers a calculated volume of each 50-200 ng DNA sample from a source plate to a 96-well PCR plate.

- The system then dispenses the Fragmentation Mix to each well.

- The plate is sealed, briefly centrifuged, and incubated on a thermal cycler as per the kit protocol to achieve the desired fragment size (e.g., 300-500 bp).

End-Repair & A-Tailing:

- The ALH system adds the End-Repair & A-Tailing Mix directly to the fragmented DNA.

- The plate is mixed, sealed, and incubated on a thermal cycler to create library-ready fragments.

Adapter Ligation:

- The ALH system dispenses a unique Ligation Mix containing a barcoded adapter into each well.

- The plate is incubated to allow adapters to ligate to the A-tailed fragments. This step enables sample multiplexing.

Post-Ligation Clean-Up:

- The ALH system adds a calibrated volume of SPB Beads to bind DNA.

- After incubation, the plate is placed on a magnetic stand. The ALH system, synchronized with the magnet, aspirates and discards the supernatant.

- Ethanol wash buffer is added and aspirated while the DNA-bound beads remain immobilized.

- Elution buffer is added, and the DNA is resuspended and eluted from the beads.

Library Amplification (PCR):

- The ALH system transfers the cleaned-up ligation product to a fresh PCR plate.

- A PCR Master Mix is dispensed into each well.

- The plate undergoes thermal cycling for a limited number of cycles to enrich for adapter-ligated fragments.

Final Library Clean-Up & Normalization:

- A final bead-based clean-up is performed using SPB Beads as in Step 4 to remove PCR reagents and primers.

- The final library is eluted in elution buffer or nuclease-free water.

- The ALH system can pool (multiplex) the libraries by transferring equal volumes based on prior quantification.

Quality Control:

- Quantify the final library using a fluorometric method (e.g., Qubit).

- Assess library size distribution using an instrument like the Agilent Bioanalyzer or TapeStation.

Diagram 1: Automated NGS Library Prep Workflow.

Protocol for Quantitative PCR Setup using Acoustic Liquid Handling

This protocol utilizes an acoustic liquid handler (e.g., Labcyte Echo) to miniaturize qPCR reactions for library quantification, significantly reducing reagent costs.

Reagent Solutions:

- qPCR Master Mix: Contains SYBR Green or TaqMan chemistry, DNA polymerase, dNTPs, and primers.

- Library Standards: A serially diluted library of known concentration to generate a standard curve.

- Nuclease-free Water: For diluting libraries and master mix.

Procedure:

- Plate Setup:

- Load a source plate containing the qPCR Master Mix onto the Echo.

- Load a separate plate with diluted Library Standards and experimental libraries.

- A low-volume destination qPCR plate (e.g., 384-well) is placed on the deck.

Reagent Transfer:

- The Echo uses sound energy to transfer precisely 2 µL of the qPCR Master Mix from the source plate to each well of the destination plate.

- Subsequently, it transfers 3 nL of each library (standard and unknown) from the sample plate into the corresponding wells containing the master mix. This non-contact transfer eliminates tip-based contamination and waste [30].

Sealing and Centrifugation:

- The destination plate is sealed with an optical film and briefly centrifuged to mix the contents and collect liquid at the bottom of the wells.

qPCR Run:

- The plate is transferred to a real-time PCR instrument and run according to the standard qPCR cycling protocol.

Data Analysis:

- The instrument software generates a standard curve from the known standards, which is used to calculate the concentration of each experimental library.

Implementation Strategy

Integration with Chemogenomics Workflow

For high-throughput chemogenomics, automated NGS components must function as part of a larger, integrated system. The library preparation process is a key step between target identification/compound treatment and bioinformatic analysis.

Diagram 2: NGS in High-Throughput Chemogenomics.

Quality Control and Compliance

Implementing real-time quality control is essential. Automated systems can be integrated with QC software (e.g., omnomicsQ) to monitor sample quality against pre-set thresholds, flagging failures before sequencing [9]. For drug development, adherence to regulatory standards like ISO 13485 and IVDR is critical. Automated systems support this compliance by ensuring standardized, documented, and reproducible workflows, facilitating participation in External Quality Assessment (EQA) programs [9].

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Automated NGS

| Item | Function in the Workflow |

|---|---|

| Library Preparation Kits | Pre-formulated reagent sets optimized for specific applications (e.g., exome, RNA-seq, single-cell). They ensure protocol consistency and high performance [29]. |

| Enzymes (Polymerases, Ligases) | Catalyze key reactions like PCR amplification and adapter ligation. Their quality and activity are critical for library yield and accuracy [31]. |

| Barcoded Adapters | Short DNA sequences ligated to fragments that enable sample multiplexing (pooling) on the sequencer and platform binding [31]. |

| Solid-Phase Reversible Immobilization (SPRI) Beads | Magnetic beads used for automated post-reaction clean-up, size selection, and buffer exchange throughout the library prep process. |

| Lyophilized Reagents | Stable, room-temperature reagents that remove cold-chain shipping and storage constraints, improving workflow flexibility and sustainability [29]. |

Next-generation sequencing (NGS) has revolutionized genomics, oncology, and infectious disease research, providing unprecedented insights into human health and disease [32]. However, manual NGS sample preparation presents significant challenges, including labor-intensive pipetting, sample variability, and reagent waste, creating critical bottlenecks in high-throughput chemogenomics research and modern laboratories [32]. The rising demand for NGS in clinical diagnostic settings, particularly for identifying genetic variations, diagnosing infectious diseases, and characterizing cancer mutations, necessitates solutions that ensure reproducible, reliable, and cost-effective results [32].

End-to-end automation addresses these challenges by transforming NGS workflows into streamlined, walk-away operations. Automated sample-to-sequencing platforms enhance data quality, improve operational efficiency, and enable scalable throughput while maintaining regulatory compliance [9] [33]. This application note details the implementation of fully automated NGS workflows within the context of high-throughput chemogenomics research, providing detailed protocols, performance metrics, and practical considerations for researchers and drug development professionals seeking to establish robust, hands-free sequencing operations.

The Case for Automation in NGS Workflows

Limitations of Manual NGS Processing

Manual NGS library preparation introduces multiple variables that compromise data quality and operational efficiency. Inconsistent pipetting techniques, sample tracking errors, and contamination risks during manual handling directly impact sequencing results [9]. These inconsistencies lead to poor quality outcomes that often require repetition, consuming additional time, financial resources, and precious samples [32]. Manual protocols also create substantial personnel burdens, with the Illumina DNA Prep protocol requiring approximately 3 hours of hands-on time per 8 samples processed [33].

The inherent variability of manual techniques poses particular challenges for chemogenomics research, where reproducible compound screening across large sample sets is essential for identifying therapeutic candidates. Batch-to-batch variation and differences in sample handling among staff members further complicate data interpretation and cross-study comparisons [34].

Advantages of Automated Platforms

Implementing end-to-end automation generates significant benefits across multiple dimensions of NGS operations:

Enhanced Data Quality and Reproducibility: Automated platforms perform precise liquid handling with minimal variability, producing consistent high-quality libraries [33]. Studies demonstrate that automation improves key NGS metrics including percentage of aligned reads, tumor mutational burden scoring, and median exon coverage [35]. This consistency is crucial for regulatory compliance in diagnostic applications and reliable compound screening in chemogenomics.

Substantial Time Savings: Automation dramatically reduces hands-on time while maintaining similar overall processing time. For example, automating the TruSight Oncology 500 assay reduced manual labor from approximately 23 hours to just 6 hours per run – a nearly four-fold decrease [35]. This efficiency gain allows researchers to focus on data analysis and experimental design rather than repetitive manual tasks.

Increased Throughput and Scalability: Automated systems can process 4 to 384 samples per run depending on the platform configuration, enabling laboratories to scale their sequencing operations without proportional increases in staffing [33]. This scalability is essential for chemogenomics applications that require screening large compound libraries across multiple cellular models.

Cost Optimization: While initial instrumentation investment is required (ranging from $45,000 to $300,000 depending on the system) [33], automation reduces long-term costs by minimizing reagent waste through precise nanoliter-range dispensing and decreasing failed runs due to human error [32] [34]. One study demonstrated that automated workflows can process thousands of samples weekly at less than $15 per sample [32].

Table 1: Quantitative Benefits of NGS Workflow Automation

| Performance Metric | Manual Process | Automated Process | Improvement |

|---|---|---|---|

| Hands-on time (TruSight Oncology 500) | ~23 hours/run | ~6 hours/run | 74% reduction [35] |

| Total process time | 42.5 hours | 24 hours | 44% reduction [35] |

| Aligned reads | ~85% | ~90% | ~5% increase [35] |

| Sample processing cost | Variable | <$15/sample | Significant cost reduction [32] |

| PCR hands-on time reduction | 3 hours | <15 minutes | >75% reduction [32] |

Integrated Automated NGS Platform Components

System Architecture for Walk-Away Operation

A fully automated NGS workstation integrates specialized instruments that perform complementary functions within the sequencing workflow. The G.STATION NGS Workstation exemplifies this integrated approach, incorporating the I.DOT Liquid Handler for non-contact reagent dispensing and the G.PURE NGS Clean-Up Device for magnetic bead-based purification [32]. This configuration enables complete walk-away operation for DNA-seq, RNA-seq, and targeted sequencing workflows.

Liquid handling systems form the core of automated NGS platforms, with major vendors including Hamilton, Beckman Coulter, Eppendorf, Tecan, and Revvity offering Illumina-compatible systems [18]. These systems provide precise fluid transfer across 96-, 384-, and 1536-well plate formats, enabling assay miniaturization that preserves precious reagents and samples [32]. The I.DOT Liquid Handler specifically dispenses in the nanoliter range, significantly reducing reagent consumption while maintaining assay integrity [32].

Integrated platforms incorporate ancillary modules that eliminate manual intervention points:

- On-deck thermocyclers for temperature-controlled incubations

- Magnetic bead handlers for purification and size selection steps

- Robotic arms for transferring plates between modules

- Barcode scanners for sample tracking and chain-of-custody documentation

This comprehensive integration enables true walk-away operation from sample preparation through sequencing-ready libraries.

Workflow Management and Quality Control

Automated NGS systems employ sophisticated software that orchestrates the entire sequencing workflow while monitoring quality parameters in real-time. Laboratory Information Management Systems (LIMS) integration enables complete sample tracking from nucleic acid extraction through library preparation, ensuring traceability for regulatory compliance [9].

Quality control software like omnomicsQ provides real-time monitoring of genomic samples, automatically flagging specimens that fail to meet pre-defined quality thresholds before they progress to sequencing [9]. This proactive quality assessment prevents wasted sequencing resources on suboptimal libraries and ensures only high-quality data advances through the pipeline.

Automated platforms also facilitate compliance with evolving regulatory frameworks including IVDR, ISO 13485, and ACMG guidelines [9]. The systems maintain detailed electronic records of all process parameters, reagent lots, and quality metrics necessary for diagnostic validation and audit trails.

Application Notes: Automated NGS in Chemogenomics Research

High-Throughput Compound Screening

Chemogenomics research requires systematic screening of chemical compounds against biological targets to identify therapeutic candidates. Automated NGS platforms enable comprehensive transcriptomic profiling of compound treatments at scales impractical with manual methods. Researchers can process hundreds of compound-treated samples in single runs, generating uniform RNA-seq libraries that reveal gene expression changes, alternative splicing events, and novel transcripts.

The I.DOT Liquid Handler has been specifically optimized for multiplex sequencing library preparation from low-input samples, enabling large-scale genomic surveillance applications [32]. This capability is particularly valuable for chemogenomics studies where sample material may be limited, such as primary cell cultures or patient-derived organoids. Automated systems can routinely process 48 DNA and 48 RNA samples simultaneously, compressing a 42.5-hour manual workflow into 24 hours [35].

Quality Control in Automated Screening

Maintaining quality standards across large compound screens presents significant challenges. Automated NGS platforms address this through integrated quality control checkpoints that assess RNA integrity, library concentration, and fragment size distribution at critical workflow stages. Systems can be programmed to automatically divert failing samples or flag them for review, preventing compromised libraries from consuming valuable sequencing resources.

Reference standards, including mock microbial communities or samples with well-defined sequence profiles, should be incorporated into each run to monitor workflow performance [34]. These controls enable continuous verification of sample lysis efficiency, nucleic acid extraction, cDNA synthesis, and overall library quality throughout automated operations.

Protocols for Automated NGS Library Preparation

Automated Whole Transcriptome Library Preparation

This protocol describes automated library preparation for RNA sequencing applications using the Hamilton NGS STARlet system with Illumina Stranded Total RNA Prep, Ligation with Ribo-Zero Plus, achieving over 65% reduction in hands-on time compared to manual methods [18].

Pre-Run Setup and Instrument Preparation

- Laboratory Preparation: Ensure all work surfaces are decontaminated using RNase decontamination solution. Thaw all reagents completely and mix by vortexing. Centrifuge briefly to collect contents at tube bottoms.