Addressing Data Bias in Structure-Based Chemogenomic Models: Strategies for Robust AI-Driven Drug Discovery

This article provides a comprehensive guide for researchers and drug development professionals on identifying, mitigating, and validating solutions to data bias in structure-based chemogenomic models.

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on identifying, mitigating, and validating solutions to data bias in structure-based chemogenomic models. It covers foundational concepts of bias in structural and bioactivity data, methodological approaches for bias-aware model building, practical troubleshooting and optimization techniques, and robust validation frameworks. The content synthesizes current research to offer actionable strategies for developing more generalizable and predictive models, ultimately enhancing the reliability of AI in accelerating drug discovery pipelines.

Understanding the Roots of Bias: Sources and Impacts in Chemogenomic Data

Troubleshooting Guides & FAQs

Q1: My structure-based affinity prediction model performs well on my training set (high R²) but fails drastically on a new, external test set from a different source. What is the likely cause and how can I diagnose it?

A1: This is a classic symptom of a representation gap or dataset shift bias. Your training data likely under-represents the chemical space or protein conformations present in the new external set.

- Diagnostic Protocol:

- Calculate Dataset Statistics: Compute key physicochemical property distributions (e.g., molecular weight, logP, charge, rotatable bonds) for both datasets. Use a two-sample Kolmogorov-Smirnov (KS) test to check for significant differences.

- Perform Dimensionality Reduction: Use t-SNE or UMAP to project the molecular fingerprints or protein descriptor vectors of both datasets into 2D. Visualize the overlap.

- Apply Model Confidence Metrics: Use techniques like conformal prediction or Bayesian uncertainty estimation to see if the model outputs high uncertainty for the external set samples.
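The property-distribution check in the first diagnostic step can be sketched in a few lines. This is a minimal illustration, assuming the descriptor values (here molecular weight) were computed upstream; the function name `ks_statistic` and the example values are hypothetical. It computes only the KS statistic D; in practice a library routine such as `scipy.stats.ks_2samp` would also supply the p-value.

```python
from bisect import bisect_right

def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    d_max = 0.0
    for x in a + b:
        # Empirical CDF of each sample evaluated at x (fraction of values <= x).
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d_max = max(d_max, abs(cdf_a - cdf_b))
    return d_max

# Hypothetical molecular weights (Da) for the training set and the external set.
train_mw = [310.4, 325.1, 342.8, 355.0, 361.2, 378.9, 390.3, 402.7]
external_mw = [455.6, 470.2, 488.9, 501.3, 512.0, 530.4, 548.1, 560.8]

d = ks_statistic(train_mw, external_mw)
print(f"KS statistic for molecular weight: {d:.2f}")
```

A value of D close to 1 means the two distributions barely overlap for that property, while a value close to 0 means they are nearly indistinguishable. Repeating the check per property (logP, charge, rotatable bonds) shows which descriptors drive the shift.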

Q2: During virtual screening, my model consistently ranks compounds with certain scaffolds (e.g., flavones) highly, regardless of the target. Is this a model artifact?

A2: This indicates a generalization gap due to confounding bias in the training data. The model may have learned spurious correlations between the scaffold and a positive label, often because that scaffold was over-represented among active compounds in the training data.

- Diagnostic Protocol:

- Scaffold Frequency Analysis: Perform Murcko scaffold decomposition on your active and inactive/informer sets. Create a contingency table and calculate the Chi-squared statistic to identify scaffolds disproportionately associated with the "active" label.

- Adversarial Validation: Train a classifier to distinguish between your actives and inactives using only scaffold fingerprints. High accuracy indicates the scaffold is a strong, potentially confounding predictor.

- Control Experiment: Test the model on a new, carefully curated set where the confounding scaffold is present in both active and inactive compounds at equal rates.
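The scaffold frequency analysis above reduces to a 2x2 contingency table per scaffold. The sketch below assumes scaffold identifiers were already assigned to each compound (for example by Murcko decomposition in RDKit); the function name `scaffold_chi2` and the example counts are hypothetical.

```python
from collections import Counter

def scaffold_chi2(records, scaffold):
    """Chi-squared statistic (1 degree of freedom) for one scaffold vs. the active label.

    records: iterable of (scaffold_id, is_active) pairs.
    """
    counts = Counter((s == scaffold, bool(active)) for s, active in records)
    a = counts[(True, True)]    # scaffold present, active
    b = counts[(True, False)]   # scaffold present, inactive
    c = counts[(False, True)]   # scaffold absent, active
    d = counts[(False, False)]  # scaffold absent, inactive
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # an empty row or column makes the test undefined
    return n * (a * d - b * c) ** 2 / denom

# Hypothetical dataset: flavones are mostly active, everything else mostly inactive.
records = (
    [("flavone", True)] * 40 + [("flavone", False)] * 10
    + [("other", True)] * 20 + [("other", False)] * 80
)
print(scaffold_chi2(records, "flavone"))  # 50.0
```

With one degree of freedom, a statistic above roughly 3.84 corresponds to p < 0.05, so a value of 50 flags the scaffold as strongly associated with the active label. When many scaffolds are tested, a multiple-testing correction is advisable.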

Q3: How can I quantify structural bias in my protein-ligand complex dataset before model training?

A3: Bias can be quantified via property distribution asymmetry and structural coverage metrics.

- Experimental Protocol for Quantification:

- For each protein target in your dataset, calculate the following for its associated ligands:

- Mean & Std. Dev. of Molecular Weight (MW)

- Mean & Std. Dev. of Quantitative Estimate of Drug-likeness (QED)

- Shannon Entropy of Murcko scaffold types

- Compare these distributions across all targets in your dataset. High variance in means or low scaffold entropy for specific targets indicates bias.

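The scaffold-entropy metric in the protocol above is the Shannon entropy of the scaffold frequency distribution for one target. A minimal sketch, assuming each ligand has already been mapped to a scaffold identifier (the function name and example labels are hypothetical):

```python
import math
from collections import Counter

def scaffold_entropy_bits(scaffolds):
    """Shannon entropy (in bits) of the scaffold frequency distribution."""
    counts = Counter(scaffolds)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

# Hypothetical per-target scaffold assignments.
diverse_target = ["s1", "s2", "s3", "s4", "s5", "s6", "s7", "s8"]
biased_target = ["s1"] * 7 + ["s2"]

print(f"{scaffold_entropy_bits(diverse_target):.2f} bits")  # 3.00 bits
print(f"{scaffold_entropy_bits(biased_target):.2f} bits")   # 0.54 bits
```

The maximum possible entropy for k distinct scaffolds is log2(k) bits, reached when all scaffolds are equally frequent, so low values relative to that ceiling indicate that a few scaffolds dominate the target's ligand set.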

Table 1: Quantifying Dataset Bias for Two Hypothetical Kinase Targets

| Target | # of Complexes | Mean Ligand MW ± SD | Mean Ligand QED ± SD | Scaffold Entropy (bits) | Note |

|---|---|---|---|---|---|

| Kinase A | 250 | 450.2 ± 75.1 | 0.45 ± 0.12 | 2.1 | Low diversity, heavy ligands |

| Kinase B | 240 | 355.8 ± 50.3 | 0.68 ± 0.08 | 4.8 | Higher diversity, drug-like |

| Ideal Profile | >300 | 350 ± 50 | 0.6 ± 0.1 | >5.0 | Balanced, diverse |

Q4: What are proven strategies to mitigate bias during the training of a graph neural network (GNN) on 3D protein-ligand structures?

A4: Mitigation requires both algorithmic and data-centric strategies.

- Detailed Mitigation Methodology:

- Reweighting/Resampling: Assign weight \(w_i = \sqrt{N / N_c}\) to each sample \(i\), where \(N\) is the total number of samples and \(N_c\) is the number of samples in the c-th cluster (based on scaffold or protein fold). Use during loss calculation.

- Adversarial Debiasing: Jointly train the primary affinity prediction network and an adversarial network that tries to predict the confounding attribute (e.g., protein family). Use a gradient reversal layer to make the primary model's features invariant to the confounder.

- Data Augmentation: Apply valid, physics-preserving transformations to underrepresented classes: e.g., slight rotational perturbations of ligand pose, or homology-based mutation of non-critical protein residues to expand structural coverage.
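The reweighting rule above (weight equal to the square root of N divided by the cluster size) is simple to compute once every sample carries a cluster label. The sketch below assumes cluster labels are given; the function names and the weighted-mean loss are illustrative choices rather than a prescribed implementation.

```python
import math
from collections import Counter

def cluster_weights(cluster_ids):
    """Per-sample weight w_i = sqrt(N / N_c), with N_c the size of sample i's cluster."""
    n_total = len(cluster_ids)
    sizes = Counter(cluster_ids)
    return [math.sqrt(n_total / sizes[c]) for c in cluster_ids]

def weighted_mean_loss(per_sample_losses, weights):
    """Weighted average of per-sample losses (normalized by the weight sum)."""
    return sum(w * l for w, l in zip(weights, per_sample_losses)) / sum(weights)

# Hypothetical dataset: 9 complexes from a large cluster, 1 from a rare cluster.
clusters = ["kinase"] * 9 + ["nuclear_receptor"]
weights = cluster_weights(clusters)
print(round(weights[0], 3), round(weights[-1], 3))  # 1.054 3.162
```

The square root dampens the correction compared with full inverse-frequency weighting, so rare clusters are boosted without letting a handful of samples dominate the gradient.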

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Bias-Aware Structure-Based Modeling

| Item | Function in Bias Handling | Example/Note |

|---|---|---|

| PDBbind (Refined/General Sets) | Provides a standardized, hierarchical benchmark for evaluating generalization gaps between protein families. | The refined set is a subset of the general set, so use only general-set complexes absent from your training data as an external test for true generalization. |

| MOSES Molecular Dataset | Offers a cleaned, split benchmark designed to avoid scaffold-based generalization artifacts. | Use its scaffold split to test for scaffold bias. |

| DeepChem Library | Contains implemented tools for dataset stratification, featurization, and fairness metrics tailored to chemoinformatics. | dc.metrics.specificity_score can help evaluate subgroup performance. |

| RDKit | Open-source toolkit for computing molecular descriptors, generating scaffolds, and visualizing chemical space. | Critical for the diagnostic protocols in Q1 & Q2. |

| AlphaFold2 (DB) | Provides high-quality predicted protein structures for targets with no experimental complexes, mitigating representation bias. | Can expand coverage for orphan targets. |

| SHAP (SHapley Additive exPlanations) | Model interpretability tool to identify which structural features (atoms, residues) drive predictions, revealing learned biases. | Helps diagnose if a model uses correct physics or spurious correlations. |

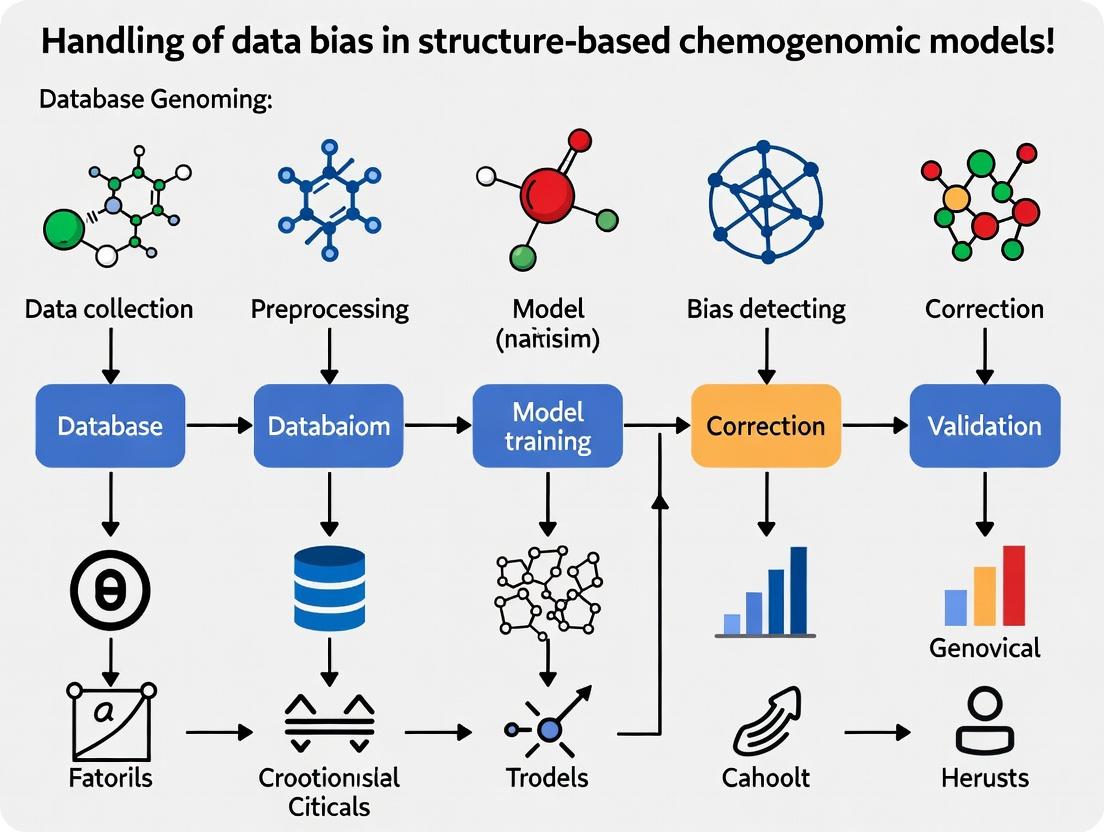

Experimental Workflow & Bias Pathways

Diagram 1: Bias Diagnosis and Mitigation Workflow

Diagram 2: Data Bias Leading to Generalization Gaps

Technical Support Center

Troubleshooting Guides & FAQs

Q1: My virtual screening campaign against a GPCR target yields an overwhelming number of hits containing a common triazine scaffold not present in known actives. What is the likely cause and how can I correct it?

A: This is a classic symptom of ligand scaffold preference bias in your training data. The model was likely trained on a benchmark dataset (e.g., from PDBbind or ChEMBL) in which triazine-containing ligands are overrepresented for certain protein families. This teaches the model to associate that scaffold with high scores, regardless of the specific target context.

- Solution: Implement a scaffold-aware train/test split. Use tools like RDKit to identify Bemis-Murcko scaffolds. Ensure no scaffold in your test/validation set is present in your training set. Re-train your model using this split to assess true generalization.

Q2: When benchmarking my pose prediction model, performance is excellent for kinases but fails for nuclear hormone receptors. Why?

A: This indicates a protein family skew in your training data. The Protein Data Bank (PDB) is dominated by certain protein families. For example, kinases represent ~20% of all human protein structures, while nuclear hormone receptors are underrepresented.

- Solution: Apply family-stratified sampling. Before training, audit your dataset's composition. Create a balanced subset that includes a minimum threshold of structures from underrepresented families. Alternatively, use data augmentation techniques like homology modeling for missing families before training.

Q3: I suspect my binding affinity prediction model is biased by the abundance of high-affinity complexes in the PDB. How can I diagnose and mitigate this?

A: You are describing PDB imbalance: public structural data is skewed toward tight-binding ligands and highly stable, crystallizable protein conformations.

- Diagnosis: Plot the distribution of binding affinity (pKd/pKi) in your training data. Compare it to a broader biochemical database (e.g., ChEMBL). You will likely see the training distribution shifted toward high affinity (higher pKd/pKi values), with weak binders under-represented.

- Mitigation: Integrate negative or weakly binding data. Use docking to generate putative decoy poses for non-binders. Incorporate experimental data on inactive compounds from public sources. Apply a loss function that penalizes overconfidence on high-affinity examples.

Key Experimental Protocols

Protocol 1: Auditing Dataset for Protein Family Skew

- Source: Download a curated dataset (e.g., PDBbind refined set, sc-PDB).

- Annotation: Map each protein target to its primary gene family (e.g., using Gene Ontology, Pfam, or UniProt).

- Quantification: Count the number of unique complexes per family.

- Analysis: Calculate the percentage distribution. Identify families with representation below a defined threshold (e.g., <1% of total).

- Action: For underrepresented families, source additional structures from the PDB or generate homology models using Swiss-Model.
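The quantification and analysis steps of this audit amount to counting complexes per family and flagging families below a cutoff. A minimal sketch, assuming each complex already carries a family annotation (for example from Pfam); the function name, the labels, and the counts are hypothetical.

```python
from collections import Counter

def audit_families(family_labels, min_fraction=0.01):
    """Return per-family fractions and the families below `min_fraction`."""
    counts = Counter(family_labels)
    total = sum(counts.values())
    fractions = {family: n / total for family, n in counts.items()}
    underrepresented = sorted(f for f, frac in fractions.items() if frac < min_fraction)
    return fractions, underrepresented

# Hypothetical annotation of 1000 complexes.
labels = ["Kinase"] * 600 + ["Protease"] * 395 + ["Nuclear receptor"] * 5
fractions, flagged = audit_families(labels, min_fraction=0.01)

for family, frac in sorted(fractions.items(), key=lambda kv: -kv[1]):
    print(f"{family:18s} {100 * frac:5.1f}%")
print("Below threshold:", flagged)
```

The 1% cutoff mirrors the example threshold in the protocol; the appropriate value depends on dataset size and on how many families the model is expected to cover.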

Protocol 2: Generating a Scaffold-Blind Evaluation Set

- Input: A dataset of ligand-protein complexes (SMILES strings and protein PDB IDs).

- Scaffold Extraction: For each ligand, compute its Bemis-Murcko framework using the RDKit GetScaffoldForMol function.

- Clustering: Perform Tanimoto similarity clustering on the scaffold fingerprints (ECFP4).

- Splitting: Use the scaffold clusters as grouping labels. Use a cluster-based splitting method (e.g., GroupShuffleSplit in scikit-learn) to ensure no scaffold cluster appears in both training and test sets.
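The splitting step can be illustrated without any external dependency. The sketch below is a simplified stand-in for a group-aware splitter such as scikit-learn's GroupShuffleSplit: it assumes scaffold-cluster labels were computed beforehand, and the function name `group_split` and the labels are hypothetical.

```python
import random

def group_split(groups, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) such that each group lies entirely on one side."""
    unique = sorted(set(groups))
    random.Random(seed).shuffle(unique)
    target = test_fraction * len(groups)
    test_groups, n_test = set(), 0
    for g in unique:
        if n_test >= target:
            break
        # Move whole groups into the test set until the target size is reached.
        test_groups.add(g)
        n_test += groups.count(g)
    train_idx = [i for i, g in enumerate(groups) if g not in test_groups]
    test_idx = [i for i, g in enumerate(groups) if g in test_groups]
    return train_idx, test_idx

# Hypothetical scaffold-cluster label for each of 10 complexes.
scaffold_clusters = ["s1", "s1", "s2", "s3", "s3", "s3", "s4", "s5", "s5", "s6"]
train_idx, test_idx = group_split(scaffold_clusters, test_fraction=0.2)

train_groups = {scaffold_clusters[i] for i in train_idx}
test_groups = {scaffold_clusters[i] for i in test_idx}
print("Shared clusters:", train_groups & test_groups)  # set()
```

Because whole clusters are moved at once, the realized test fraction can overshoot the requested one when clusters are large, which is the usual trade-off of group-wise splitting.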

Protocol 3: Augmenting Data with Putative Non-Binders

- Select Actives: From your target of interest, compile a set of known active ligands with structures.

- Select Inactives: From a broad compound library (e.g., ZINC), select molecules with similar physicochemical properties but different 2D topology to actives (property-matched decoys).

- Docking: Dock both actives and inactives into your target's binding site using software like AutoDock Vina or GLIDE.

- Curation: Manually inspect top poses of inactives to ensure plausible binding mode. Add these ligand-receptor complexes to your dataset with a "non-binding" or weak affinity label.

Data Presentation

Table 1: Representation of Major Protein Families in the PDB (vs. Human Proteome)

| Protein Family | Approx. % of Human Proteome | Approx. % of PDB Structures (2023) | Skew Factor (PDB/Proteome) |

|---|---|---|---|

| Kinases | ~1.8% | ~20% | 11.1 |

| GPCRs | ~4% | ~3% | 0.75 |

| Ion Channels | ~5% | ~2% | 0.4 |

| Nuclear Receptors | ~0.6% | ~0.8% | 1.3 |

| Proteases | ~1.7% | ~7% | 4.1 |

| All Other Families | ~86.9% | ~67.2% | 0.77 |

Table 2: Common Ligand Scaffolds in PDBbind Core Set (by Frequency)

| Scaffold (Bemis-Murcko) | Frequency Count | Example Target Families |

|---|---|---|

| Benzene | 1245 | Kinases, Proteases, Diverse |

| Pyridine | 568 | Kinases, GPCRs |

| Triazine | 187 | Kinases, DHFR |

| Indole | 452 | Nuclear Receptors, Enzymes |

| Purine | 311 | Kinases, ATP-Binding Proteins |

Visualizations

Title: Sources and Impacts of Structural Bias

Title: Bias Detection and Mitigation Workflow

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Bias Mitigation |

|---|---|

| RDKit | Open-source cheminformatics toolkit. Essential for scaffold analysis (Bemis-Murcko), molecular fingerprinting, and property calculation to audit and split datasets. |

| Pfam/InterPro | Databases of protein families and domains. Used to annotate protein targets in a dataset and quantify family-level representation. |

| PDBbind/SC-PDB | Curated databases linking PDB structures with binding affinity data. Common starting points for building models; require auditing for inherent biases. |

| ZINC Database | Public library of commercially available compounds. Source for generating property-matched decoy molecules to augment datasets with non-binders. |

| AutoDock Vina | Widely-used open-source molecular docking program. Used to generate putative poses for decoy compounds in data augmentation protocols. |

| Swiss-Model | Automated protein homology modeling server. Can generate structural models for protein families underrepresented in the PDB. |

| scikit-learn | Python machine learning library. Provides utilities for strategic data splitting (e.g., GroupShuffleSplit) based on scaffolds or protein families. |

Troubleshooting Guides & FAQs

FAQ 1: Why does my chemogenomic model show excellent validation performance but fail to identify new active compounds in a fresh assay?

Answer: This is a classic symptom of training data bias, often due to the Dominance of Published Actives. Models trained primarily on literature-reported "actives" versus broadly tested but unpublished "inactives" learn features specific to that biased subset, not generalizable bioactivity rules.

- Diagnostic Check: Compare the chemical space (e.g., using PCA or t-SNE) of your training set actives versus a large, diverse database of commercial compounds (e.g., ZINC). Overlap is likely minimal.

- Solution: Apply artificial debiasing. Augment your training data with assumed inactives from "Dark Chemical Matter" (DCM) – compounds tested in many assays but never active. This teaches the model what inactivity looks like. See Protocol 1.

FAQ 2: My high-throughput screen (HTS) identified hits that are potent in the primary assay but are completely inert in all orthogonal assays. What could be the cause?

Answer: This typically indicates Assay-Specific Artifacts. Compounds may interfere with the assay technology (e.g., fluorescence quenching, luciferase inhibition, aggregation-based promiscuity) rather than modulating the target.

- Diagnostic Check: Perform a confirmatory assay using a different readout technology (e.g., switch from fluorescence polarization to SPR). Also, check for common nuisance behavior: test for colloidal aggregation (Dynamic Light Scattering; see Protocol 2), redox activity (catalase inhibition assay), or reactivity with thiols.

- Solution: Implement a counter-screen and triage workflow early in the validation pipeline. Filter hits against known assay artifact profiles.

FAQ 3: What is 'Dark Chemical Matter' and how should I handle it in my dataset to avoid bias?

Answer: Dark Chemical Matter (DCM) refers to the large fraction of compounds in corporate or public screening libraries that have never shown activity in any biological assay despite being tested numerous times. Ignoring DCM introduces a severe "confirmatory" bias.

- Impact: A model trained only on published actives and random presumed inactives may misclassify DCM as potentially active because it doesn't recognize its consistent inactivity as a meaningful signal.

- Solution: Treat DCM as a privileged class for negative data. Explicitly label DCM compounds (e.g., >30 assays, 0 hits) as "verified inactives" in your training set. This significantly improves model specificity. See Protocol 3.

Experimental Protocols

Protocol 1: Artificial Debiasing of Training Data Using DCM

Objective: To create a balanced training dataset that mitigates publication bias.

- Collect Actives: Gather confirmed active compounds from public sources (ChEMBL, PubChem BioAssay). Use stringent criteria (e.g., IC50/Ki < 10 µM, dose-response confirmed).

- Collect DCM: Extract DCM compounds from large-scale screening data (e.g., PubChem's "BioAssay" data for compounds tested in >50 assays with 0% hit rate).

- Sample Negatives: Randomly select a set of presumed inactives from a general compound library (e.g., ZINC) that are not in the DCM set.

- Construct Training Set: Combine Actives, DCM (labeled inactive), and Sampled Negatives (labeled inactive) in a 1:2:1 ratio.

- Train Model: Use this composite set for model training, ensuring the DCM class is weighted appropriately in the loss function.
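The set-construction step of this protocol (actives, DCM, and sampled negatives in a 1:2:1 ratio) can be sketched as follows. The compound identifiers are placeholders, the function name is hypothetical, and excluding known actives from the random negatives is an added assumption that the protocol does not state explicitly.

```python
import random

def build_training_set(actives, dcm, library, seed=0):
    """Combine actives, DCM, and random library negatives in a 1:2:1 ratio.

    Returns a shuffled list of (compound_id, label, source) tuples.
    """
    rng = random.Random(seed)
    dcm_set, active_set = set(dcm), set(actives)
    # Random negatives must not overlap with DCM (per the protocol) or with actives (assumption).
    pool = [c for c in library if c not in dcm_set and c not in active_set]
    n = min(len(actives), len(dcm) // 2, len(pool))
    data = (
        [(c, 1, "active") for c in rng.sample(list(actives), n)]
        + [(c, 0, "dcm") for c in rng.sample(list(dcm), 2 * n)]
        + [(c, 0, "random") for c in rng.sample(pool, n)]
    )
    rng.shuffle(data)
    return data

# Hypothetical compound identifiers.
actives = [f"A{i}" for i in range(10)]
dcm = [f"D{i}" for i in range(30)]
library = [f"Z{i}" for i in range(50)] + dcm[:5]

training_set = build_training_set(actives, dcm, library)
print(len(training_set))  # 40
```

Keeping the `source` field alongside the label makes it possible to weight the DCM class separately in the loss and to report DCM-specific specificity later.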

Protocol 2: Detecting Assay-Specific Artifact: Aggregation-Based Inhibition

Objective: To confirm if a hit compound acts via non-specific colloidal aggregation.

- Prepare Compound: Make a 10 mM stock of the hit compound in DMSO.

- Dilution Series: Prepare a two-fold serial dilution series in aqueous assay buffer (e.g., PBS) with final DMSO concentration ≤ 1%. Include a control well with buffer and DMSO only.

- Dynamic Light Scattering (DLS): Immediately measure the hydrodynamic radius (Rh) of each dilution using a DLS instrument.

- Data Interpretation: A positive result for aggregation is indicated by a sharp increase in Rh (>50 nm) at concentrations near the assay IC50. A negative control compound should show Rh < 5 nm.

- Confirmatory Test: Add a non-ionic detergent (e.g., 0.01% Triton X-100) to the assay. True aggregators will lose potency, while specific inhibitors will remain active.

Protocol 3: Integrating DCM into a Machine Learning Workflow

Objective: To build a random forest classifier that leverages DCM.

- Feature Calculation: Compute molecular descriptors (e.g., RDKit descriptors, ECFP4 fingerprints) for three lists: Actives (Class 1), DCM (Class 0), Random Library Compounds (Class 0).

- Data Splitting: Perform a stratified split 80/20 into training and test sets, preserving class ratios.

- Model Training: Train a Random Forest classifier (scikit-learn) with class_weight='balanced'. This automatically adjusts weights inversely proportional to class frequencies.

- Evaluation: Assess on the test set. Pay special attention to the Recall (Sensitivity) for Actives and Specificity for DCM. A good model should have high specificity for DCM.
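The two metrics highlighted in the evaluation step can be computed directly from test-set predictions, provided each compound keeps a tag recording where it came from. This is an illustrative sketch with made-up predictions; the function name and the `source` tags ("active", "dcm", "random") are assumptions.

```python
def recall_and_dcm_specificity(y_true, y_pred, source):
    """Recall on actives and specificity restricted to DCM compounds."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    dcm_preds = [p for p, s in zip(y_pred, source) if s == "dcm"]
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    # Specificity on DCM: fraction of DCM compounds correctly predicted inactive.
    specificity = dcm_preds.count(0) / len(dcm_preds) if dcm_preds else float("nan")
    return recall, specificity

# Hypothetical test-set labels, predictions, and provenance tags.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1, 0, 1]
source = ["active"] * 4 + ["dcm"] * 4 + ["random"] * 2

recall, dcm_specificity = recall_and_dcm_specificity(y_true, y_pred, source)
print(recall, dcm_specificity)  # 0.75 0.75
```

Reporting specificity for DCM separately from the random negatives shows whether the model has actually learned the consistent-inactivity signal rather than only separating actives from arbitrary library compounds.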

Data Presentation

Table 1: Prevalence of Assay Artifacts in Public HTS Data (PubChem AID 1851)

| Artifact Type | Detection Method | % of Primary Hits (IC50 < 10µM) | Confirmed True Actives After Triaging |

|---|---|---|---|

| Fluorescence Interference | Red-shifted control assay | 12.5% | 2.1% |

| Luciferase Inhibition | Counter-screen with luciferase enzyme | 8.7% | 1.8% |

| Colloidal Aggregation | DLS / Detergent sensitivity test | 15.2% | 3.5% |

| Cytotoxicity (for cell-based) | Cell viability assay (MTT) | 18.9% | 4.0% |

Table 2: Impact of DCM Inclusion on Model Performance Metrics

| Training Data Composition | AUC-ROC (Test Set) | Precision (Actives) | Specificity (DCM Class) |

|---|---|---|---|

| Actives + Random Inactives | 0.89 | 0.65 | 0.81 |

| Actives + DCM only | 0.85 | 0.82 | 0.93 |

| Actives + DCM + Random Inactives | 0.91 | 0.78 | 0.95 |

Visualizations

Title: Data Bias Identification and Mitigation Workflow

Title: Assay Artifact Triage Decision Tree

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function/Application in Bias Mitigation |

|---|---|

| Triton X-100 (or CHAPS) | Non-ionic detergent used in confirmatory assays to disrupt colloidal aggregates, identifying false positives from promiscuous aggregation. |

| Red-Shifted Fluorescent Probes | Control probes with longer excitation/emission wavelengths to identify compounds that interfere with assay fluorescence (inner filter effect, quenching). |

| Recombinant Luciferase Enzyme | For counter-screening hits from luciferase-reporter assays to identify direct luciferase inhibitors. |

| Dynamic Light Scattering (DLS) Instrument | Measures hydrodynamic radius of particles in solution to directly detect compound aggregation at relevant assay concentrations. |

| ChEMBL / PubChem BioAssay Database | Primary public sources for bioactivity data, used to extract both published actives and, critically, define Dark Chemical Matter. |

| RDKit or MOE Cheminformatics Suite | For calculating molecular fingerprints and descriptors, enabling the chemical space analysis crucial for identifying training set biases. |

| MTT or CellTiter-Glo Assay Kits | Standard cell viability assays used as orthogonal counterscreens for cell-based phenotypic assays to rule out cytotoxicity-driven effects. |

Technical Support Center

Troubleshooting Guides

Issue 1: Model shows excellent training/validation performance but fails on new external datasets.

- Likely Cause: Data leakage or non-representative training/validation split leading to overfitting on latent biases (e.g., assay, scaffold, or vendor bias).

- Diagnostic Steps:

- Perform Bias Audit: Stratify your internal test set by putative bias sources (e.g., chemical series, protein family, assay date). Calculate performance metrics per stratum.

- Conduct an "Analog Series" Test: For each compound in your external test set, find its nearest neighbor in the training set by chemical fingerprint (e.g., ECFP4, Tanimoto >0.8). Plot the model's error against the distance to the nearest neighbor. A sharp increase in error with distance indicates over-reliance on local interpolation.

- Use a Simple Baseline: Compare your model's performance against a simple descriptor-based model (e.g., molecular weight + LogP regression) on the external set. If the complex model performs worse, it suggests it has learned dataset-specific noise.

- Resolution Protocol:

- Rebuild with Bias-Aware Splitting: Use tools like scaffold split (RDKit) or time split to create more challenging validation sets that mimic real-world generalization.

- Implement Regularization: Increase dropout rates, apply stronger L1/L2 weight penalties, or use early stopping with a stricter patience threshold.

- Incorporate Bias as a Feature (Adversarial Debiasing): Train an auxiliary model to predict the bias source (e.g., assay type) from your primary model's latent representations. Simultaneously, train your primary model to predict the target while minimizing the auxiliary model's performance. This decorrelates the learned features from the bias.

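The "Analog Series" test from the diagnostic steps for this issue pairs each external compound's prediction error with its distance to the closest training compound. The sketch below assumes fingerprints are available as sets of on-bit indices (for example exported from ECFP4); the toy fingerprints, the error values, and the function names are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity between two fingerprints given as sets of on-bits."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 0.0

def nearest_neighbor_distance(query_fp, train_fps):
    """Distance (1 - Tanimoto) from a query to its most similar training compound."""
    return 1.0 - max(tanimoto(query_fp, fp) for fp in train_fps)

# Hypothetical training fingerprints and (fingerprint, absolute error) pairs for the external set.
train_fps = [{1, 2, 3, 4}, {5, 6, 7, 8}]
external = [({1, 2, 3, 4}, 0.1), ({1, 2, 3, 9}, 0.4), ({10, 11, 12}, 1.5)]

points = [(nearest_neighbor_distance(fp, train_fps), err) for fp, err in external]
for distance, err in points:
    print(f"distance={distance:.2f}  abs_error={err:.2f}")
```

Plotting these pairs as a scatter plot, or binning the errors by distance, makes the trend visible: a steep rise in error with distance suggests that the model interpolates between close analogs rather than generalizing.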

Issue 2: Prospective screening yields inactive compounds despite high model confidence.

- Likely Cause: The model has learned "syntactic" patterns from the training data (e.g., specific substructures always labeled active in a given high-throughput screening (HTS) campaign) that do not translate to true bioactivity.

- Diagnostic Steps:

- Analyze Confidence Calibration: Generate a reliability diagram. Plot predicted probability bins vs. observed fraction of positives. A well-calibrated model follows the y=x line. Deviations indicate overconfident predictions.

- Apply Explainability Tools: Use SHAP (SHapley Additive exPlanations) or integrated gradients on high-confidence, failed predictions. Identify if the prediction was driven by chemically irrelevant or assay-artifact-related features.

- Resolution Protocol:

- Bayesian Uncertainty Estimation: Switch to or incorporate models that provide uncertainty estimates (e.g., Gaussian processes, Bayesian neural networks, or deep ensembles). Filter prospective hits by both high predicted activity and low predictive uncertainty.

- Diverse Negative Sampling: Ensure your training data includes a robust set of experimentally confirmed inactive compounds, not just assumed inactives from HTS. This teaches the model what "inactivity" looks like.
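The reliability diagram described in the diagnostic steps for this issue can be summarized numerically. The sketch below bins predicted probabilities, compares each bin's mean prediction with the observed fraction of positives, and condenses the gaps into an expected calibration error (ECE). The equal-width binning scheme, the function names, and the example data are illustrative assumptions.

```python
def reliability_bins(probs, labels, n_bins=5):
    """Return (mean predicted prob, observed positive fraction, count) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            frac_pos = sum(y for _, y in members) / len(members)
            rows.append((mean_p, frac_pos, len(members)))
    return rows

def expected_calibration_error(rows):
    """Count-weighted mean gap between predicted probability and observed frequency."""
    total = sum(n for _, _, n in rows)
    return sum(n * abs(mean_p - frac_pos) for mean_p, frac_pos, n in rows) / total

# Hypothetical prospective results: confident predictions, few confirmed actives.
probs = [0.9, 0.9, 0.9, 0.9, 0.1, 0.1, 0.1, 0.1]
labels = [1, 0, 0, 0, 0, 0, 0, 0]

rows = reliability_bins(probs, labels)
ece = expected_calibration_error(rows)
print(f"ECE = {ece:.3f}")  # ECE = 0.375
```

In this toy case the top bin predicts about 0.9 but only a quarter of its compounds are active, which is the overconfidence pattern the diagnostic is meant to expose. A well-calibrated model would have bin means close to the observed fractions and an ECE near zero.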

Issue 3: Performance drops significantly when integrating new data sources (e.g., adding cryo-EM structures to an X-ray-based model).

- Likely Cause: Covariate shift or representation bias. The feature distributions of the new data differ from the training domain.

- Diagnostic Steps:

- Dimensionality Reduction & Visualization: Project the latent features of old and new datasets using t-SNE or UMAP. Look for clear separation or lack of overlap between the data source clusters.

- Train a Data Source Classifier: Train a simple classifier to discriminate between old and new data sources using the model's input features. High accuracy indicates a significant distribution shift.

- Resolution Protocol:

- Domain Adaptation: Use techniques like Domain-Adversarial Neural Networks (DANNs) to learn domain-invariant features.

- Transfer Learning with Fine-Tuning: Pre-train the model on the larger, older dataset. Then, unfreeze the final layers and fine-tune on a smaller, carefully curated mix of old and new data.

Frequently Asked Questions (FAQs)

Q2: My model uses protein pockets as input. How can structural bias manifest? A: Structural bias is common and can manifest as:

- Resolution Bias: Models trained on high-resolution X-ray structures (<2.0 Å) may fail on predictions for homology models or cryo-EM-derived pockets.

- Ligand-of-Origin Bias: Pockets are defined by a co-crystallized ligand. The model may learn to recognize the specific chemical features of that ligand rather than general binding principles.

- Protein Family Bias: Over-representation of certain families (e.g., kinases) leads to poor performance on underrepresented ones (e.g., GPCRs). The table below summarizes common biases and their impact.

Table 1: Common Data Biases in Structure-Based Chemogenomic Models and Their Impact on Performance

| Bias Type | Description | Typical Manifestation | Diagnostic Metric Shift |

|---|---|---|---|

| Scaffold/Series Bias | Over-representation of specific chemical cores in training. | Poor performance on novel chemotypes. | High RMSE on external sets with novel scaffolds. |

| Assay/Measurement Bias | Training data aggregated from different experimental protocols (Kd, IC50, Ki from different labs). | Inaccurate absolute potency prediction. | Poor correlation between predicted and observed pChEMBL values across assays. |

| Structural Resolution Bias | Training on high-resolution structures only. | Failure on targets with only low-resolution or predicted structures. | AUC-ROC drops when tested on targets with resolution >3.0 Å. |

| Protein Family Bias | Imbalanced representation of target classes. | Inability to generalize to novel target families. | Macro-averaged F1-score significantly lower than the micro-averaged (overall) F1-score. |

| Publication Bias | Only successful (active) compounds and structures are published/deposited. | Over-prediction of activity, high false positive rate. | Skewed calibration curve; observed actives fraction << predicted probability. |

Q3: Are there standard reagents or benchmarks for debiasing studies in this field? A: Yes, the community uses several benchmark datasets and software tools to stress-test models for bias. Key resources are listed in the Scientist's Toolkit below.

Q4: How much performance drop in external validation is "acceptable"? A: There is no universal threshold. The key is to benchmark the drop against a null model. A 10% drop in AUC may be acceptable if a simple baseline (e.g., random forest on fingerprints) drops by 25%. The critical question is whether your model, despite the drop, still provides actionable, statistically significant enrichment over random or simple screening.

Experimental Protocols for Bias Detection & Mitigation

Protocol 1: Bias-Auditing via Stratified Performance Analysis

Objective: To identify specific data subsets where model performance degrades, indicating potential bias.

Materials: Trained model, full dataset with metadata (scaffold, assay type, protein family, etc.).

Steps:

- For each putative bias source (e.g., Protein_Family), partition the external test set into distinct strata.

- For each stratum, calculate key performance metrics (AUC-ROC, AUC-PR, RMSE, EF1%).

- Plot metrics per stratum (bar chart). Compare against the global performance on the entire test set.

- Statistically compare performance across strata using ANOVA or Kruskal-Wallis tests.

- Interpretation: Strata with significantly lower performance indicate a domain where the model may be biased due to under-representation or confounding factors.
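The per-stratum calculation in this protocol can be sketched for a single metric. The example below computes RMSE per protein family from made-up affinity values; the function name and the data are hypothetical, and the same grouping pattern applies to AUC-ROC or enrichment factors.

```python
import math
from collections import defaultdict

def rmse_by_stratum(y_true, y_pred, strata):
    """Root-mean-square error computed separately for each stratum label."""
    squared_errors = defaultdict(list)
    for t, p, s in zip(y_true, y_pred, strata):
        squared_errors[s].append((t - p) ** 2)
    return {s: math.sqrt(sum(v) / len(v)) for s, v in squared_errors.items()}

# Hypothetical measured and predicted affinities (pKd) with family metadata.
y_true = [6.0, 7.0, 8.0, 5.0, 6.0]
y_pred = [6.5, 7.5, 8.5, 7.0, 8.0]
strata = ["Kinase", "Kinase", "Kinase", "GPCR", "GPCR"]

per_family = rmse_by_stratum(y_true, y_pred, strata)
print(per_family)  # {'Kinase': 0.5, 'GPCR': 2.0}
```

Small strata give noisy estimates, so bootstrap resampling within each stratum is one way to obtain the replicate values that the statistical comparison step requires.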

Protocol 2: Adversarial Debiasing Training (Implementation Outline)

Objective: To learn representations that are predictive of the primary task (activity) but invariant to a specified bias source (e.g., assay vendor).

Materials: Dataset with labels Y (activity) and bias labels B (vendor ID). Deep learning framework (PyTorch/TensorFlow).

Steps:

- Network Architecture: Build a shared feature extractor G_f(.), a primary predictor G_y(.), and an adversarial bias predictor G_b(.).

- Forward Pass: Input X -> Features = G_f(X) -> Y_pred = G_y(Features) and B_pred = G_b(Features).

- Adversarial Loss: The key is to train G_f to maximize the loss of G_b (making features uninformative for predicting bias), while G_b is trained normally to minimize its loss. A gradient reversal layer (GRL) is typically used between G_f and G_b during backpropagation.

- Total Loss: L_total = L_y(Y_pred, Y) - λ * L_b(B_pred, B), where λ controls the strength of debiasing.

- Train the network with alternating or joint optimization of the shared and task-specific parameters.
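The training objective outlined in the steps above can be written as a saddle-point problem. The parameter symbols \(\theta_f\), \(\theta_y\), and \(\theta_b\) (the weights of G_f, G_y, and G_b) and the operator \(R_\lambda\) are notation introduced here for illustration; they do not appear in the outline itself.

```latex
\[
\begin{aligned}
(\hat{\theta}_f, \hat{\theta}_y) &= \arg\min_{\theta_f,\,\theta_y}\;
  L_y\big(G_y(G_f(X)),\, Y\big) \;-\; \lambda\, L_b\big(G_b(G_f(X)),\, B\big), \\
\hat{\theta}_b &= \arg\min_{\theta_b}\;
  L_b\big(G_b(G_f(X)),\, B\big).
\end{aligned}
\]

% Gradient reversal layer R_lambda placed between G_f and G_b:
% identity in the forward pass, sign-flipped and scaled gradient in the backward pass.
\[
R_\lambda(h) = h, \qquad \frac{\partial R_\lambda}{\partial h} = -\lambda I .
\]
```

The first line is the total loss L_total minimized over the feature extractor and the primary predictor, and the second line is the bias predictor minimizing its own loss. With the gradient reversal layer in place, a single backward pass through L_y + L_b delivers the gradient of L_y - λ L_b to G_f, which is why joint optimization with one optimizer is possible.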

Visualizations

Bias Mitigation & Model Development Workflow

Adversarial Debiasing Network Architecture

Table 2: Essential Resources for Bias Handling in Chemogenomic Models

| Item Name | Type/Provider | Function in Bias Research |

|---|---|---|

| PDBbind (Refined/General Sets) | Curated Dataset | Standard benchmark for structure-based affinity prediction. Used to test for protein-family and ligand bias via careful cluster-based splitting. |

| ChEMBL Database | Public Repository | Source of bioactivity data. Enables temporal splitting and detection of assay/publication bias through metadata mining. |

| MOSES (Molecular Sets) | Benchmark Platform | Provides standardized training/test splits (scaffold, random) and metrics to evaluate generative model bias and overfitting. |

| RDKit | Open-Source Toolkit | Provides functions for molecular fingerprinting, scaffold analysis, and bias-aware dataset splitting (e.g., Butina clustering, Scaffold split). |

| DeepChem | Open-Source Library | Offers implementations of advanced splitting methods (e.g., ButinaSplitter, SpecifiedSplitter) and model architectures suitable for adversarial training. |

| SHAP (SHapley Additive exPlanations) | Explainability Library | Interprets model predictions to identify if specific, potentially biased, chemical features are driving decisions. |

| GNINA / AutoDock Vina | Docking Software | Used as a baseline structure-based method to compare against ML models, helping to distinguish true learning from data leakage. |

| ProteinNet | Curated Dataset | A bias-controlled benchmark for protein sequence and structure models, useful for testing generalization across folds. |

Technical Support Center

Troubleshooting Guide & FAQs

Q1: My virtual screening model trained on DUD-E shows excellent AUC on the benchmark but fails drastically on my internal compound set. What is the likely cause?

A1: This is a classic symptom of hidden bias. DUD-E's "artificial decoy" generation method can introduce bias, where decoys are dissimilar to actives in ways the model learns to exploit (e.g., molecular weight, charge). Your internal compounds likely do not share this artificial separation.

- Diagnostic Protocol: Calculate and compare simple physicochemical property distributions (e.g., logP, molecular weight, number of rotatable bonds) between your actives, DUD-E decoys, and your internal set. A significant mismatch indicates property bias.

- Mitigation Workflow:

- Re-analyze DUD-E: Use the provided "cleaning" scripts from later studies to identify potential false negatives.

- Employ Bias-Corrected Benchmarks: Augment or replace your test with newer benchmarks like DEKOIS 2.0 or LIT-PCBA.

- Apply Domain Adaptation: Use techniques like adversarial validation to detect and adjust for systematic differences between the DUD-E data distribution and your real-world data.

Q2: When using PDBbind to train a binding affinity predictor, the model performance drops sharply on targets not in the PDBbind core set. How should I debug this?

A2: This suggests a "target bias" or "sequence similarity bias." The model may be memorizing target-specific features rather than learning generalizable protein-ligand interaction rules.

- Diagnostic Protocol: Perform a leave-one-cluster-out cross-validation based on protein sequence similarity (e.g., using BLAST clustering). A large performance drop in this setting confirms target bias.

- Mitigation Workflow:

- Stratified Splitting: Always split training/validation/test sets by protein family, not randomly, to prevent data leakage.

- Data Augmentation: Use homology models or carefully applied synthetic data techniques to increase target diversity in training.

- Architecture Choice: Prioritize models that explicitly model protein structure (e.g., graph networks over atoms) over those relying heavily on target descriptors.
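The cluster-level splitting recommended above can be sketched in a few lines. This is a minimal illustration, assuming each complex already carries a cluster label (for example from sequence clustering); the function name `cluster_split` and the example labels are hypothetical.

```python
import random
from collections import defaultdict


def cluster_split(cluster_ids, test_fraction=0.2, seed=0):
    """Assign whole clusters to train or test so no cluster spans both.

    cluster_ids: one cluster label per sample (e.g. from sequence clustering).
    Returns (train_indices, test_indices).
    """
    members = defaultdict(list)
    for idx, cid in enumerate(cluster_ids):
        members[cid].append(idx)

    clusters = sorted(members)            # deterministic order before shuffling
    random.Random(seed).shuffle(clusters)

    target = test_fraction * len(cluster_ids)
    train, test = [], []
    for cid in clusters:
        # Fill the test set cluster by cluster until it reaches the target size.
        bucket = test if len(test) < target else train
        bucket.extend(members[cid])
    return sorted(train), sorted(test)


# Hypothetical cluster labels for ten complexes (e.g. protein families).
labels = ["kinase"] * 4 + ["gpcr"] * 3 + ["protease"] * 2 + ["nhr"]
train_idx, test_idx = cluster_split(labels, test_fraction=0.3, seed=1)
train_clusters = {labels[i] for i in train_idx}
test_clusters = {labels[i] for i in test_idx}
```

Because clusters are assigned whole, the realized test fraction only approximates the requested one. Repeating the split with each cluster held out in turn gives the leave-one-cluster-out evaluation described in the diagnostic protocol.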

Q3: I suspect my ligand-based model has learned "temporal bias" from a public dataset like ChEMBL. How can I validate and correct for this?

A3: Temporal bias occurs when early-discovered, "privileged" scaffolds dominate the dataset, and test sets are non-chronologically split. The model fails on newer chemotypes.

- Diagnostic Protocol: Split your data chronologically by the compound's first reported date. Train on older data and test on newer data. Compare the performance to a random split.

- Mitigation Workflow:

- Temporal Splitting: Implement a rigorous time-split protocol for all model validation.

- Scaffold-based Evaluation: Use Bemis-Murcko scaffold splits to ensure the model generalizes to novel core structures.

- Active Learning: Integrate an active learning loop that prioritizes compounds dissimilar to the training set for experimental testing and feedback.

Q4: What are the concrete, quantitative differences in bias between DUD-E and its successor, DUDE-Z?

A4: DUDE-Z was designed to reduce analog and chemical bias. Key improvements are summarized below:

Table 1: Quantitative Comparison of Bias Mitigation in DUD-E vs. DUDE-Z

| Bias Type | DUD-E Characteristic | DUDE-Z Improvement | Quantitative Metric |

|---|---|---|---|

| Analog Bias | Decoys were chemically dissimilar to actives but also to each other, making them too easy to distinguish. | Decoys are selected to be chemically similar to each other, forming "chemical neighborhoods" that better mimic real screening libraries. | Increased mean Tanimoto similarity among decoys (within a target set). |

| Chemical Bias | Decoy generation rules could create systematic physicochemical differences from actives. | More refined property-matching (e.g., by 1D properties) and the use of the ZINC database as a decoy source. | Reduced Kullback-Leibler divergence between the property distributions (e.g., logP) of actives and decoys. |

| False Negatives | Known actives could potentially be included as decoys for other targets. | Stringent filtering against known bioactive compounds across a wider array of databases. | Number of confirmed false negatives removed from decoy sets. |

Key Experimental Protocol: Detecting Dataset Bias via Property Distribution Analysis

Objective: To diagnose and quantify potential chemical property bias between active and decoy/inactive compound sets in a benchmark like DUD-E.

Materials & Software: RDKit (Python), Pandas, NumPy, Matplotlib/Seaborn, Benchmark dataset (e.g., DUD-E SDF files).

Procedure:

- Data Loading: Load the actives (`*_actives_final.sdf`) and decoys (`*_decoys_final.sdf`) for your target of interest using RDKit.

- Descriptor Calculation: For each molecule in both sets, calculate a set of 1D/2D molecular descriptors:

- Molecular Weight (MW)

- Calculated LogP (AlogP)

- Number of Hydrogen Bond Donors (HBD)

- Number of Hydrogen Bond Acceptors (HBA)

- Number of Rotatable Bonds (RotB)

- Topological Polar Surface Area (TPSA)

- Statistical Summary: Generate a table of mean and standard deviation for each descriptor per set (Active vs. Decoy).

- Distribution Visualization: Plot the kernel density estimation (KDE) for each descriptor, overlaying the distributions of actives and decoys.

- Statistical Testing: Perform an appropriate statistical test (e.g., Kolmogorov-Smirnov test) to determine if the distributions for each property are significantly different (p < 0.01).

- Interpretation: Significant differences across multiple key properties indicate a strong chemical bias in the benchmark, which may lead to overly optimistic model performance.
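To make the statistical-testing step concrete, the sketch below computes the two-sample Kolmogorov-Smirnov statistic by hand for one descriptor. The molecular-weight values are hypothetical. In practice `scipy.stats.ks_2samp` returns both this statistic and a p-value; the hand-rolled version only shows what the statistic measures.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for value in a + b:
        cdf_a = bisect_right(a, value) / len(a)
        cdf_b = bisect_right(b, value) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Hypothetical molecular weights (Da) for a handful of actives and decoys.
actives_mw = [310.2, 355.8, 402.1, 428.6, 451.3, 476.9]
decoys_mw = [295.4, 301.7, 322.5, 340.0, 358.3, 371.2]

d_mw = ks_statistic(actives_mw, decoys_mw)       # large gap: decoys are lighter
d_self = ks_statistic(actives_mw, actives_mw)    # identical samples give 0.0
```

A statistic near 0 means the two distributions overlap closely, while values approaching 1 indicate that the descriptor alone nearly separates actives from decoys.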

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Resources for Bias-Aware Chemogenomic Modeling

| Item / Resource | Function & Purpose in Bias Mitigation |

|---|---|

| RDKit | Open-source cheminformatics toolkit. Critical for calculating molecular descriptors, scaffold analysis, and visualizing chemical distributions to detect bias. |

| DEKOIS 2.0 / LIT-PCBA | Bias-corrected benchmark datasets. Use as alternative or supplemental test sets to DUD-E for more realistic performance estimation. |

| PDBbind (Refined/General Sets) | The hierarchical structure of PDBbind (General -> Refined -> Core) allows researchers to consciously select data quality levels and avoid target leakage during splits. |

| Protein Data Bank (PDB) | Source of ground-truth structural data. Essential for constructing structure-based models and verifying binding mode hypotheses independent of affinity labels. |

| Time-Split ChEMBL Scripts | Custom or community scripts (e.g., from chembl_downloader) to split data chronologically, essential for evaluating predictive utility for future compounds. |

| Adversarial Validation Code | Scripts implementing a binary classifier to distinguish training from real-world data. Success indicates a distribution shift, guiding the need for domain adaptation. |

| Graphviz (DOT) | Tool for generating clear, reproducible diagrams of data workflows and model architectures, essential for documenting and communicating bias-testing pipelines. |

Visualizations

Title: Workflow for Detecting Chemical Property Bias

Title: PDBbind Hierarchical Dataset Structure

Title: Model Validation Strategies to Uncover Bias

Building Bias-Aware Models: Methodological Frameworks and Practical Applications

Technical Support Center: Troubleshooting Guides & FAQs

This support center addresses common technical issues encountered while constructing curation pipelines for structure-based chemogenomic models, specifically within research focused on mitigating data bias.

Frequently Asked Questions (FAQs)

Q1: During ligand-protein pair assembly, my dataset shows extreme affinity value imbalances (e.g., 95% inactive compounds). How can I address this programmatically without introducing selection bias?

A1: Implement stratified sampling during data sourcing, not just as a post-hoc step. Use the following protocol:

- Pre-source Stratification: Define your target distribution (e.g., 70% inactive, 25% active, 5% high-affinity) based on known biological priors.

- Query in Batches: For each affinity stratum, run separate queries to primary databases (PDBbind, BindingDB) using specific Ki/IC50/Kd thresholds.

- Oversampling/Undersampling with SMOTE & Tomek Links: Apply the Synthetic Minority Oversampling Technique (SMOTE) to generate synthetic examples for rare high-affinity classes, then remove Tomek links to clean overlapping class boundaries. Crucially, apply these techniques only to the training split, after dataset splitting, to avoid data leakage.

- Validate with Blind Test Set: Hold out a fully representative test set before any balancing. The final model must be evaluated on this untouched data.
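The ordering of these steps (hold out first, balance second) is the part most often gotten wrong, so a small sketch may help. It substitutes plain random oversampling for SMOTE to stay dependency-free; the function name and the toy records are hypothetical.

```python
import random
from collections import Counter


def stratified_holdout_then_oversample(records, test_fraction=0.2, seed=0):
    """Hold out a representative test set, then balance the training split only.

    records: list of (features, label) pairs.
    """
    rng = random.Random(seed)
    by_label = {}
    for rec in records:
        by_label.setdefault(rec[1], []).append(rec)

    train_groups, test = {}, []
    for label, group in by_label.items():
        group = group[:]
        rng.shuffle(group)
        n_test = round(test_fraction * len(group))
        test.extend(group[:n_test])           # untouched, representative hold-out
        train_groups[label] = group[n_test:]

    largest = max(len(g) for g in train_groups.values())
    train = []
    for group in train_groups.values():
        train.extend(group)
        # Random oversampling (a simple stand-in for SMOTE): duplicate minority
        # examples until every class matches the largest one.
        train.extend(rng.choice(group) for _ in range(largest - len(group)))
    return train, test


# Hypothetical imbalanced data: 95 inactives (label 0) and 5 actives (label 1).
data = [((i,), 0) for i in range(95)] + [((i,), 1) for i in range(95, 100)]
train_bal, test_raw = stratified_holdout_then_oversample(data, seed=3)
train_counts = Counter(label for _, label in train_bal)
test_counts = Counter(label for _, label in test_raw)
```

The test split keeps the original 95:5 imbalance, which is what the model will face in practice, while only the training split is balanced.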

Q2: I suspect structural redundancy in my protein set is biasing my model towards certain protein families. How can I measure and control for this?

A2: Use sequence and fold similarity clustering to ensure diversity.

- Protocol:

- Extract all protein sequences from your assembled complex structures.

- Perform all-vs-all pairwise alignment using MMseqs2 (`mmseqs easy-cluster`).

- Cluster at a strict sequence identity threshold (e.g., 30%).

- Select a maximally diverse representative from each cluster for your final set. If cluster sizes are highly uneven (e.g., one superfamily has 50 clusters, another has 2), apply a second-stage sampling to select a balanced number of representatives from each major fold class (using CATH or SCOP annotations).
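The second-stage sampling can be as simple as capping how many cluster representatives each fold class contributes. The sketch below assumes the representatives have already been annotated with a fold class; the identifiers and class names are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def balance_by_fold_class(representatives, per_class, seed=0):
    """Cap the number of cluster representatives drawn from each fold class.

    representatives: list of (protein_id, fold_class) pairs, one per sequence cluster.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for protein_id, fold_class in representatives:
        by_class[fold_class].append(protein_id)

    selected = []
    for fold_class in sorted(by_class):
        ids = by_class[fold_class]
        k = min(per_class, len(ids))          # small classes keep everything
        selected.extend((pid, fold_class) for pid in rng.sample(ids, k))
    return selected


# Hypothetical representatives: one class with 50 clusters, another with 2.
reps = [(f"P{i:03d}", "alpha-beta") for i in range(50)]
reps += [("P900", "mainly-beta"), ("P901", "mainly-beta")]
chosen = balance_by_fold_class(reps, per_class=5, seed=7)
class_counts = Counter(fold for _, fold in chosen)
```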

Q3: My pipeline pulls from multiple sources (ChEMBL, PubChem, DrugBank). How do I resolve conflicting activity annotations for the same compound-target pair?

A3: Implement a confidence-scoring and consensus system.

- Create a Conflict Resolution Table:

| Data Source | Assay Type Priority (High to Low) | Trust Score | Curation Level |

|---|---|---|---|

| PDBbind (refined) | X-ray crystal structure | 1.0 | High (manual) |

| BindingDB | Ki (single protein, direct) | 0.9 | Medium (semi-auto) |

| ChEMBL | IC50 (cell-based) | 0.7 | Medium (semi-auto) |

| PubChem BioAssay | HTS screen result | 0.5 | Low (auto) |

- Rule-based Selection: For each conflicting pair, select the annotation from the source with the highest Trust Score. If from the same source, prioritize the assay type with the higher priority.

- Flag Ambiguity: Annotate records where the discrepancy between the top two values exceeds one log unit for manual inspection.
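A minimal sketch of the rule-based selection and ambiguity flag follows. The trust scores are taken from the table above; the record layout (`source`, `value_nM`) and the function name are assumptions, and the same-source tie-break by assay priority is left out for brevity.

```python
import math

# Trust scores copied from the table above; the record layout is hypothetical.
TRUST = {"PDBbind": 1.0, "BindingDB": 0.9, "ChEMBL": 0.7, "PubChem": 0.5}


def resolve_conflict(annotations, max_log_gap=1.0):
    """Pick the most trusted annotation and flag large disagreements.

    annotations: list of dicts with 'source' and 'value_nM' for one
    compound-target pair.
    """
    ranked = sorted(annotations, key=lambda a: TRUST[a["source"]], reverse=True)
    best = ranked[0]
    flagged = False
    if len(ranked) > 1:
        # Compare the two most trusted values on a log10 scale.
        gap = abs(math.log10(best["value_nM"]) - math.log10(ranked[1]["value_nM"]))
        flagged = gap > max_log_gap
    return {"source": best["source"], "value_nM": best["value_nM"], "needs_review": flagged}


conflicting = [
    {"source": "ChEMBL", "value_nM": 1200.0},
    {"source": "BindingDB", "value_nM": 35.0},
    {"source": "PubChem", "value_nM": 50.0},
]
consistent = [
    {"source": "BindingDB", "value_nM": 35.0},
    {"source": "ChEMBL", "value_nM": 80.0},
]
res_conflicting = resolve_conflict(conflicting)
res_consistent = resolve_conflict(consistent)
```

In the first case the BindingDB value is kept but flagged, because it differs from the ChEMBL value by more than one log unit.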

Q4: What are the best practices for logging and versioning in a multi-step curation pipeline to ensure reproducibility?

A4: Adopt a pipeline framework with inherent provenance tracking. Use a tool like Snakemake or Nextflow. Each rule/task should log:

- Input dataset hash (e.g., SHA-256).

- All parameters (random seed, clustering threshold, sampling fraction).

- Output dataset hash and summary statistics (counts, distributions).

- Version numbers for all tools/databases used.

Store this log as a JSON alongside each intermediate dataset. This creates a complete audit trail.
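As an illustration of what such a log entry might contain, the sketch below hashes a toy dataset before and after one curation step and writes the record as JSON. The field names and the version string are placeholders, not a format prescribed by Snakemake or Nextflow.

```python
import hashlib
import json


def sha256_of(rows):
    """Hash a dataset (here a list of JSON-serializable rows) deterministically."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def provenance_record(step, inputs, outputs, params, versions):
    """Bundle hashes, parameters, counts, and versions for one pipeline step."""
    return {
        "step": step,
        "input_sha256": sha256_of(inputs),
        "output_sha256": sha256_of(outputs),
        "params": params,
        "summary": {"n_in": len(inputs), "n_out": len(outputs)},
        "versions": versions,
    }


raw = [{"id": "c1", "pKd": 6.2}, {"id": "c2", "pKd": None}, {"id": "c3", "pKd": 7.9}]
curated = [row for row in raw if row["pKd"] is not None]

record = provenance_record(
    step="drop_missing_affinity",
    inputs=raw,
    outputs=curated,
    params={"random_seed": 42},
    versions={"pipeline": "0.1.0"},  # placeholder version string
)
log_text = json.dumps(record, indent=2, sort_keys=True)
```

For real datasets the hash would normally be computed over the file bytes rather than an in-memory list.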

Experimental Protocols for Key Validation Steps

Protocol: Assessing Covariate Shift in the Curation Pipeline

Purpose: To detect if your curation steps inadvertently introduce a distributional shift in molecular or protein descriptors between the sourced raw data and the final curated set.

Methodology:

- Descriptor Calculation: For both the initially aggregated "raw" dataset (D_raw) and the final "curated" dataset (D_curated), calculate a standard set of descriptors (e.g., ECFP4 fingerprints for ligands, amino acid composition for proteins).

- Dimensionality Reduction: Fit PCA on the descriptor matrix of D_raw only, then project both D_raw and D_curated onto that PCA space so their scores are directly comparable.

- Statistical Test: Perform a two-sample Kolmogorov-Smirnov (KS) test on the distributions of the first principal component scores between D_raw and D_curated.

- Interpretation: A significant p-value (<0.05) indicates a covariate shift, prompting investigation into the filtering/sampling steps that caused it.
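The protocol above can be sketched with NumPy on synthetic data. The descriptor matrix, the filter, and the function names are all assumptions made for illustration; the code reports only the KS statistic, and `scipy.stats.ks_2samp` would be the usual way to obtain the p-value used in the interpretation step.

```python
import numpy as np


def pc1_scores(raw, curated):
    """Fit PCA on the raw descriptors and project both sets onto its first axis."""
    mean = raw.mean(axis=0)
    # Rows of vt are the principal axes of the centered raw data.
    _, _, vt = np.linalg.svd(raw - mean, full_matrices=False)
    axis = vt[0]
    return (raw - mean) @ axis, (curated - mean) @ axis


def ks_statistic(a, b):
    """Two-sample KS statistic: largest gap between the empirical CDFs."""
    a, b = np.sort(a), np.sort(b)
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))


rng = np.random.default_rng(0)
d_raw = rng.normal(size=(500, 8))        # synthetic stand-in for descriptors
d_raw[:, 0] *= 3.0                       # descriptor 0 dominates the variance
keep = d_raw[:, 0] > 1.5                 # a curation filter that skews descriptor 0
d_curated = d_raw[keep]
d_random = d_raw[rng.choice(500, size=int(keep.sum()), replace=False)]

raw_pc, cur_pc = pc1_scores(d_raw, d_curated)
_, rnd_pc = pc1_scores(d_raw, d_random)
shift_filtered = ks_statistic(raw_pc, cur_pc)   # biased filter: large statistic
shift_random = ks_statistic(raw_pc, rnd_pc)     # random subsample: small statistic
```

Comparing against a random subsample of the same size gives a useful baseline for how much shift is expected from sampling noise alone.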

Protocol: Benchmarking Bias Mitigation via Hold-out Family Evaluation

Purpose: To empirically test if your curation strategy reduces model overfitting to prevalent protein families.

Methodology:

- Random Splitting (Baseline): Split your final curated dataset into training (80%) and test (20%) sets using random splitting. Train and evaluate Model A.

- Temporal/Family Hold-out Splitting: Split your dataset such that all proteins from a specific, diverse family (e.g., GPCRs) or all data published after a certain date are placed exclusively in the test set. Train Model B on the remaining data.

- Comparison: Compare the performance drop of Model B versus Model A on the held-out family/test set.

| Evaluation Scheme | Model | Test Set AUC (Overall) | Test Set AUC (Held-out Family) | Performance Drop |

|---|---|---|---|---|

| Random Split | Model A | 0.89 | 0.87 | -0.02 |

| Family Hold-out | Model B | 0.85 | 0.72 | -0.13 |

A smaller performance drop in the Hold-out scheme suggests a more robust, less biased model enabled by better curation.

Diagrams

Bias-Aware Data Curation Pipeline

Bias Assessment via Hold-out Evaluation

The Scientist's Toolkit: Research Reagent Solutions

| Item/Resource | Primary Function in Curation | Key Considerations for Bias Mitigation |

|---|---|---|

| PDBbind Database | Provides high-quality, experimentally determined protein-ligand complexes with binding affinity data. | Use the "refined" or "core" sets as a high-quality seed. Be aware of its bias towards well-studied, crystallizable targets. |

| BindingDB | Large collection of measured binding affinities (Ki, Kd, IC50). | Crucial for expanding chemical space. Requires rigorous filtering by assay type (prefer "single protein" over "cell-based"). |

| ChEMBL | Bioactivity data from medicinal chemistry literature. | Excellent for bioactive compounds. Use confidence scores and document data curation level. Beware of patent-driven bias towards lead-like space. |

| MMseqs2 / CD-HIT | Protein sequence clustering tools. | Essential for controlling structural redundancy. The choice of sequence identity threshold (e.g., 30% vs 70%) directly controls the diversity of the protein set. |

| RDKit / Open Babel | Cheminformatics toolkits. | Used to standardize molecular representations (tautomers, protonation states, removing salts), calculate descriptors, and check for chemical integrity. Inconsistent application introduces bias. |

| imbalanced-learn (Python) | Provides algorithms like SMOTE, ADASYN, SMOTE-ENN. | Used to algorithmically balance class distributions. Critical: Apply only to the training fold after data splitting to prevent data leakage and over-optimistic performance. |

| Snakemake / Nextflow | Workflow management systems. | Ensure reproducible, documented, and versioned curation pipelines. Automatically tracks provenance, which is mandatory for auditing bias sources. |

Technical Support Center

Troubleshooting Guides & FAQs

Q1: During preprocessing, my model shows high performance on validation splits but fails dramatically on external, real-world chemical libraries. What could be the cause?

A1: This is a classic sign of dataset bias, often from benchmarking sets like ChEMBL being non-representative of broader chemical space. To diagnose, create a bias audit table comparing the distributions of key molecular descriptors between your training set and the target library.

| Descriptor | Training Set Mean (Std) | External Library Mean (Std) | Kolmogorov-Smirnov Statistic (p-value) |

|---|---|---|---|

| Molecular Weight | 450.2 (150.5) | 380.7 (120.8) | 0.32 (<0.001) |

| LogP | 3.5 (2.1) | 2.8 (1.9) | 0.21 (0.003) |

| QED | 0.6 (0.2) | 0.7 (0.15) | 0.28 (<0.001) |

| TPSA | 90.5 (50.2) | 110.3 (45.6) | 0.19 (0.012) |

Protocol for Bias Audit:

- Compute Descriptors: Use RDKit (`rdMolDescriptors`) or Mordred to calculate a diverse set of 2D/3D molecular descriptors for both datasets.

- Normalize: Apply StandardScaler to all descriptors.

- Statistical Test: For each descriptor, perform a two-sample Kolmogorov-Smirnov test (or a Mann-Whitney U test if only a shift in location is of interest) using `scipy.stats`.

- Visualize: Plot kernel density estimates for the top 5 divergent descriptors.

Q2: After applying a re-weighting technique (like Importance Weighting), my model's loss becomes unstable and fails to converge. How do I fix this?

A2: Unstable loss is often due to extreme importance weights causing gradient explosion. Implement weight clipping or normalization.

Mitigation Protocol:

- Calculate Weights: Use Kernel Mean Matching or propensity score estimation to get initial weights `w_i` for each training sample.

- Clip Weights: Set a threshold (e.g., the 95th percentile): `w_i_clipped = min(w_i, percentile(w, 95))`.

- Normalize: Renormalize weights so their mean is 1: `w_i_normalized = w_i_clipped / mean(w_clipped)`.

- Adaptive Optimizer: Use Adam or AdaGrad instead of SGD, as they are more robust to noisy, scaled gradients.
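The clipping and renormalization steps can be sketched as follows. The example uses a nearest-rank percentile to stay within the standard library (NumPy's `numpy.percentile` interpolates by default and may give a slightly different cap); the weights themselves are hypothetical.

```python
import math


def clip_and_normalize(weights, percentile=95.0):
    """Clip importance weights at a percentile, then rescale to mean 1."""
    ordered = sorted(weights)
    # Nearest-rank percentile: smallest value with at least `percentile`
    # percent of the data at or below it.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    cap = ordered[rank - 1]
    clipped = [min(w, cap) for w in weights]
    mean = sum(clipped) / len(clipped)
    return [w / mean for w in clipped]


# Hypothetical raw importance weights with one extreme value.
raw_weights = [0.5, 0.8, 1.0, 1.1, 1.2, 0.9, 1.3, 0.7, 1.0, 40.0]
stable = clip_and_normalize(raw_weights, percentile=90.0)
```

After clipping, the extreme weight of 40.0 is capped at 1.3 before rescaling, so no single sample dominates the gradient.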

Q3: How do I choose between adversarial debiasing and re-sampling for my protein-ligand affinity prediction model?

A3: The choice depends on your bias type and computational resources. Use the following diagnostic table:

| Technique | Best For Bias Type | Computational Overhead | Key Hyperparameter | Effect on Performance |

|---|---|---|---|---|

| Adversarial Debiasing | Latent, complex biases (e.g., bias towards certain protein folds) | High (requires adversarial training) | Adversary loss weight (λ) | May reduce training set accuracy but improves generalization |

| Re-sampling (SMOTE/Cluster) | Simple, distributional bias (e.g., overrepresented scaffolds) | Low to Medium | Sampling strategy (over/under) | Can increase minority class recall; risk of overfitting to synthetic samples |

Protocol for Adversarial Debiasing:

- Setup: Build a primary model (predictor) and an adversary model. The adversary tries to predict the protected variable (e.g., protein family) from the primary model's representations.

- Joint Training: Minimize predictor loss while maximizing adversary loss (or minimize negative adversary loss). The predictor's loss is: `L_total = L_prediction - λ * L_adversary`. The adversary itself is trained to minimize `L_adversary`.

- Gradient Reversal: Implement a gradient reversal layer between the predictor and adversary during backpropagation for easier training.
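Written out, the joint training amounts to two coupled minimizations. The notation below is an assumption introduced for illustration: $\theta_p$ denotes the predictor parameters (including the shared representation $h$), and $\theta_a$ denotes the adversary parameters.

```latex
\begin{aligned}
\theta_a^{\ast} &= \arg\min_{\theta_a} \; L_{\text{adversary}}(\theta_p, \theta_a), \\
\theta_p^{\ast} &= \arg\min_{\theta_p} \;
  \bigl[ L_{\text{prediction}}(\theta_p) - \lambda \, L_{\text{adversary}}(\theta_p, \theta_a) \bigr].
\end{aligned}
```

A gradient reversal layer $R$ realizes both updates in a single backward pass. It acts as the identity in the forward direction and flips and scales the gradient in the backward direction:

```latex
R(h) = h, \qquad \frac{\partial R}{\partial h} = -\lambda I .
```

With $R$ placed between the representation and the adversary, ordinary gradient descent on $L_{\text{prediction}} + L_{\text{adversary}}$ updates $\theta_a$ to reduce the adversary loss while pushing the representation to increase it.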

Title: Adversarial Debiasing Workflow for Chemogenomic Models

Q4: I suspect temporal bias in my drug-target interaction data (newer compounds have different assays). How can I correct for this algorithmically?

A4: Implement temporal cross-validation and a time-aware re-weighting scheme.

Temporal Holdout Protocol:

- Order Data: Sort all protein-ligand interaction pairs by assay date.

- Split: Use the earliest 70% for training, the next 15% for validation, and the latest 15% for testing. Do not shuffle.

- Apply Causal Correction: Use a method like Doubly Robust Estimation that combines propensity weighting and outcome regression to adjust for shifting assay conditions over time.
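The ordering and splitting steps of this protocol can be sketched as below. The record layout and the `assay_date` field are hypothetical, and the dates are assumed to be ISO-formatted strings so that they sort chronologically.

```python
def chronological_split(records, date_key, train_frac=0.70, valid_frac=0.15):
    """Sort by date and cut into train/validation/test without shuffling."""
    ordered = sorted(records, key=lambda r: r[date_key])
    n = len(ordered)
    n_train = round(n * train_frac)
    n_valid = round(n * valid_frac)
    train = ordered[:n_train]
    valid = ordered[n_train:n_train + n_valid]
    test = ordered[n_train + n_valid:]      # the most recent records
    return train, valid, test


# Hypothetical interaction records with ISO-format assay dates.
pairs = [{"pair_id": i, "assay_date": f"{2000 + i}-06-01"} for i in range(20)]
pairs.reverse()  # input order should not matter
train, valid, test = chronological_split(pairs, "assay_date")
```

Every validation record is newer than every training record, and every test record is newer still, so the evaluation never uses future data to predict the past.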

Q5: When using bias-corrected models in production, how do I monitor for new, previously unseen biases?

A5: Implement a bias monitoring dashboard with statistical process control.

| Monitoring Metric | Calculation | Alert Threshold |

|---|---|---|

| Descriptor Drift | Wasserstein distance between training and incoming batch descriptor distributions (computed on standardized descriptors, since the distance is scale-dependent) | > 0.1 (per descriptor) |

| Performance Disparity | Difference in RMSE/ROC-AUC between major and minority protein family groups | > 0.15 |

| Fairness Metric | Subgroup AUC for under-represented scaffold classes | < 0.6 |
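The descriptor-drift check in the first row can be sketched with a hand-written one-dimensional Wasserstein distance. The descriptor values are hypothetical and assumed to be standardized already. In practice `scipy.stats.wasserstein_distance` computes the same quantity.

```python
from bisect import bisect_right


def wasserstein_1d(x, y):
    """1-D Wasserstein-1 distance: area between the two empirical CDFs."""
    xs, ys = sorted(x), sorted(y)
    grid = sorted(set(xs) | set(ys))
    total = 0.0
    for left, right in zip(grid, grid[1:]):
        cdf_x = bisect_right(xs, left) / len(xs)
        cdf_y = bisect_right(ys, left) / len(ys)
        total += abs(cdf_x - cdf_y) * (right - left)
    return total


def drift_alerts(train_desc, batch_desc, threshold=0.1):
    """Return descriptors whose train-vs-batch distance exceeds the threshold."""
    return [
        name
        for name in train_desc
        if wasserstein_1d(train_desc[name], batch_desc[name]) > threshold
    ]


# Hypothetical standardized descriptor values.
train_desc = {"logP": [-1.0, -0.5, 0.0, 0.5, 1.0], "TPSA": [-1.0, -0.5, 0.0, 0.5, 1.0]}
batch_desc = {"logP": [-0.5, 0.0, 0.5, 1.0, 1.5], "TPSA": [-1.0, -0.5, 0.0, 0.5, 1.05]}
alerts = drift_alerts(train_desc, batch_desc)
```

Here the incoming logP values are shifted by 0.5 and trigger an alert, while the small change in one TPSA value stays below the threshold.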

The Scientist's Toolkit: Research Reagent Solutions

| Item Name | Function in Bias Correction | Key Parameters/Notes |

|---|---|---|

| AI Fairness 360 (AIF360) Toolkit | Provides a unified framework for bias checking and mitigation algorithms (e.g., Reweighing, AdversarialDebiasing). | Use sklearn.compose.ColumnTransformer with aif360.datasets.StandardDataset. |

| RDKit with Mordred Descriptors | Generates comprehensive 2D/3D molecular features to quantify chemical space and identify distribution shifts. | Calculate 1800+ descriptors. Use PCA for visualization of dataset coverage. |

| DeepChem MoleculeNet | Curated benchmark datasets with tools for stratified splitting to avoid data leakage and scaffold bias. | Use ScaffoldSplitter for a more realistic assessment of generalization. |

| Propensity Score Estimation (via sklearn) | Estimates the probability of a sample being included in the training set given its features, used for re-weighting. | Use calibrated classifiers like LogisticRegressionCV to avoid extreme weights. |

| SHAP (SHapley Additive exPlanations) | Explains model predictions to identify if spurious correlations (biases) are being used. | Look for high SHAP values for non-causal features (e.g., specific vendor ID). |

Title: Bias-Correction Pipeline for Structure-Based Models

The Role of Physics-Based and Hybrid Modeling in Counteracting Pure Data-Driven Bias

Technical Support Center

Troubleshooting Guides & FAQs

Q1: Our purely data-driven chemogenomic model performs excellently on the training and validation sets but fails to generalize to novel protein targets outside the training distribution. What is the likely cause and how can we address it?

A: This is a classic sign of data-driven bias and overfitting to spurious correlations in the training data. The model may have learned features specific to the assay conditions or homologous protein series rather than generalizable structure-activity relationships.

Recommended Protocol: Implement a Hybrid Model Pipeline

- Feature Augmentation: Generate physics-based descriptors (e.g., MM/GBSA binding energy components, pharmacophore points, molecular interaction fields) for your ligand-target complexes.

- Model Fusion: Train a hybrid model where the final prediction is a weighted ensemble:

- Model A: Your existing data-driven model (e.g., Graph Neural Network).

- Model B: A simpler model trained solely on the physics-based descriptors (e.g., Random Forest).

- Validation: Use a temporally split or structurally dissimilar test set to validate the hybrid model's improved generalizability.

Q2: During hybrid model training, the physics-based component seems to dominate, drowning out the data-driven signal. How do we balance the two?

A: This indicates a scaling or weighting issue between feature sets.

Recommended Protocol: Feature Scaling & Attention-Based Fusion

- Standardize Features: Independently standardize all data-driven features and physics-based features to zero mean and unit variance.

- Implement an Attention/Gating Mechanism: Instead of simple concatenation, use a neural attention layer that learns to dynamically weight the contribution of physics-based vs. data-driven feature channels for each input sample.

- This allows the model to "decide" when to trust physical principles versus empirical patterns.
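A gating mechanism can be illustrated with a scalar toy example. This is not a neural attention layer, only a sketch of the blending rule it learns: the gate input (a "novelty score") and the fixed gate weight are hypothetical, whereas in a real model the gate parameters are trained jointly with both branches.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gated_fusion(physics_pred, data_pred, gate_features, gate_weights, gate_bias=0.0):
    """Blend two predictions with a per-sample gate g in (0, 1).

    g close to 1 trusts the physics-based branch; g close to 0 trusts the
    data-driven branch.
    """
    z = sum(w * f for w, f in zip(gate_weights, gate_features)) + gate_bias
    g = sigmoid(z)
    return g * physics_pred + (1.0 - g) * data_pred, g


# Hypothetical gate input: a novelty score (distance of the sample from training data).
weights = [4.0]
familiar, g_familiar = gated_fusion(6.0, 8.0, gate_features=[-1.0], gate_weights=weights)
novel, g_novel = gated_fusion(6.0, 8.0, gate_features=[1.0], gate_weights=weights)
```

For the familiar sample the fused value stays near the data-driven prediction (8.0), and for the novel sample it moves toward the physics-based prediction (6.0).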

Q3: How can we formally test if our hybrid model has reduced bias compared to our pure data-driven model?

A: Implement a bias audit using quantitative metrics on held-out bias-controlled sets.

Recommended Protocol: Bias Audit Framework

- Create Diagnostic Test Sets:

- Set A (Property Bias): Molecules with similar physicochemical properties but different binding outcomes.

- Set B (Scaffold Bias): Molecules with novel core scaffolds absent from training.

- Set C (Target Bias): Proteins from a distant fold class.

- Measure & Compare: Evaluate both models on these sets using metrics like AUC, RMSE, and the generalization gap `ΔAUC = AUC_train - AUC_diagnostic`.

Table 1: Bias Audit Results for Model Comparison

| Diagnostic Test Set | Pure Data-Driven Model (AUC) | Hybrid Physics-Informed Model (AUC) | ΔAUC (Hybrid − Data-Driven) |

|---|---|---|---|

| Standard Hold-Out | 0.89 | 0.87 | -0.02 |

| Novel Scaffold Set | 0.62 | 0.78 | +0.16 |

| Distant Target Fold | 0.58 | 0.71 | +0.13 |

| Property-Bias Control Set | 0.65 | 0.81 | +0.16 |

Q4: What is a practical first step to incorporate physics into our deep learning workflow without a full rebuild?

A: Use physics-based features as a regularizing constraint during training.

Recommended Protocol: Physics-Informed Regularization Loss

- Calculate Reference Value: For each training sample, compute a coarse physics-based score (e.g., scaled docking score or simple energy estimate).

- Add a Loss Term: Modify your loss function: `Total Loss = Task Loss (e.g., BCE) + λ * |Model Prediction - Physics-Based Reference|`.

- Tune λ: Start with a small λ (e.g., 0.1) to gently guide the model, preventing severe deviation from physical plausibility without forcing strict adherence.
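The regularized loss can be sketched in plain Python to show how the penalty term enters. The predictions, labels, and physics references are hypothetical, and the references are assumed to have been rescaled to the same [0, 1] range as the model output. A deep learning framework would compute the same expression on tensors.

```python
import math


def bce(p, y, eps=1e-12):
    """Binary cross-entropy for one prediction p in (0, 1) and label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def physics_regularized_loss(preds, labels, physics_refs, lam=0.1):
    """Mean over samples of task loss + lam * |prediction - physics reference|."""
    terms = [
        bce(p, y) + lam * abs(p - ref)
        for p, y, ref in zip(preds, labels, physics_refs)
    ]
    return sum(terms) / len(terms)


# Hypothetical values; the physics references are assumed rescaled to [0, 1].
preds = [0.9, 0.2, 0.6]
labels = [1, 0, 1]
refs = [0.8, 0.1, 0.1]

plain = physics_regularized_loss(preds, labels, refs, lam=0.0)
guided = physics_regularized_loss(preds, labels, refs, lam=0.1)
```

The third sample contributes most of the penalty because its prediction (0.6) deviates furthest from the physics reference (0.1).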

Experimental Protocol: Building a Robust Hybrid Chemogenomic Model

Title: Hybrid Model Training with Bias-Conscious Validation Splits

Objective: To train a chemogenomic model that integrates graph-based ligand features, protein sequence embeddings, and physics-based binding energy approximations to improve generalizability and reduce data bias.

Materials: See "Research Reagent Solutions" table below.

Methodology:

- Data Curation & Splitting:

- Source data from public repositories (e.g., ChEMBL, BindingDB).

- Critical: Perform cluster-based splitting. Cluster proteins by sequence similarity and ligands by scaffold. Assign entire clusters to train/validation/test sets to ensure true generalization assessment.

- Feature Engineering:

- Data-Driven: Generate molecular graphs (atoms as nodes, bonds as edges) and protein language model embeddings.

- Physics-Based: For each ligand-protein pair, run a fast MM/GBSA calculation (using implicit solvent) to obtain per-residue energy decomposition terms.

- Model Architecture (Hybrid Graph Network):

- Branch A: Graph Neural Network processing the ligand.

- Branch B: 1D Convolutional Neural Network processing protein embeddings.

- Branch C: Dense network processing the physics-based energy vector.

- Fusion: Concatenate the latent representations from all three branches, followed by a gating mechanism and fully connected layers for prediction.

- Training & Validation:

- Train using the hybrid loss function (Protocol Q4).

- Validate on the cluster-held-out validation set.

- Apply early stopping based on validation loss.

Visualizations

Hybrid Model Development & Validation Workflow

Hybrid Model Architecture with Gating Fusion

The Scientist's Toolkit: Research Reagent Solutions

| Item/Category | Function in Hybrid Modeling | Example/Note |

|---|---|---|

| Molecular Dynamics (MD) Suite | Generate structural ensembles for targets; compute binding free energies. | GROMACS, AMBER, OpenMM. Essential for rigorous physics-based scoring. |

| MM/GBSA Scripts & Pipelines | Perform efficient, end-state binding free energy calculations for feature generation. | gmx_MMPBSA, AmberTools MMPBSA.py. Key source for physics-based feature vectors. |

| Protein Language Model (pLM) | Generate informative, evolution-aware embeddings for protein sequences. | ESM-2, ProtT5. Provides deep learning features for the target. |

| Graph Neural Network (GNN) Library | Model the ligand as a graph and learn its topological features. | PyTorch Geometric, DGL. Standard for data-driven ligand representation. |

| Differentiable Docking | Integrate a physics-like scoring function directly into the training loop. | DiffDock, TorchDrug. Emerging tool for joint physics-DL optimization. |

| Clustering Software | Perform scaffold-based and sequence-based clustering for robust data splitting. | RDKit (Butina Clustering), MMseqs2. Critical for bias-conscious train/test splits. |

| Model Interpretation Toolkit | Audit which features (physics vs. data) drive predictions. | SHAP, Captum. Diagnose model bias and build trust. |

Technical Support Center

Troubleshooting Guide & FAQs

Q1: My de-biased model for a novel target class shows excellent validation metrics but fails to identify any active compounds in the final wet-lab screen. What could be the issue?

A: This is a classic sign of "over-correction" or "loss of signal." The bias mitigation strategy may have removed not only the confounding bias but also the true biological signal. This is common when using adversarial debiasing or stratification on small datasets.

Troubleshooting Steps:

- Check Data Leakage: Re-audit your training/validation/test splits. Ensure no temporal, structural, or vendor bias has leaked from the validation set into model training, giving false confidence.

- Analyze the Removed Features: Use SHAP or similar analysis on your de-biasing model (e.g., the adversary in an adversarial network) to see which molecular features it identified as "biased." Cross-reference these with known privileged scaffolds or substructures for the target class. If there is significant overlap, you may have removed real pharmacophores.

- Implement a Gradual Debias: Instead of fully removing the identified bias, apply a re-weighting or penalty-based approach. Retrain with a weaker de-biasing strength (λ parameter) and observe the performance on a small, diverse validation HTS set.

Protocol: Step 2 - SHAP Analysis for De-biasing Audit

- Objective: Identify molecular features the debiasing model associates with data bias.

- Method:

- Train your primary activity prediction model and your bias prediction model (e.g., "assay" or "year" predictor).

- Using `shap.DeepExplainer` (for neural networks) or `shap.TreeExplainer` (for RF/GBM) on the bias prediction model, calculate SHAP values for a representative sample of your training data.

- For the top 20 features with the highest mean absolute SHAP value for the bias model, compute their frequency in known active compounds for related targets (from public ChEMBL data).

- If >30% of these high-bias features are also prevalent in known actives, your debiasing is likely too aggressive.
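The final check reduces to a simple overlap fraction once the SHAP ranking and the active-compound frequencies are available. In the sketch below the feature names, frequencies, and the `min_freq` cutoff for "prevalent" are all assumptions, since the protocol does not define prevalence numerically.

```python
def debias_overlap(top_bias_features, active_feature_freq, min_freq=0.2):
    """Fraction of high-bias features that are also common among known actives."""
    prevalent = [
        f for f in top_bias_features if active_feature_freq.get(f, 0.0) >= min_freq
    ]
    return len(prevalent) / len(top_bias_features)


# Hypothetical fingerprint bits ranked by mean |SHAP| in the bias model,
# and their frequency among known actives for related targets.
top_bias = ["bit_12", "bit_87", "bit_301", "bit_455", "bit_902"]
active_freq = {"bit_12": 0.45, "bit_87": 0.05, "bit_301": 0.30, "bit_902": 0.01}

overlap = debias_overlap(top_bias, active_freq)
too_aggressive = overlap > 0.30
```

Here two of the five high-bias features are common among actives, so the 30% criterion is exceeded and a weaker debiasing strength would be worth trying.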

Q2: When applying a transfer learning model from a well-characterized target family (e.g., GPCRs) to a novel, understudied class (e.g., solute carriers), how do I handle the drastic difference in available training data?

A: The core challenge is negative set definition bias. For novel targets, confirmed inactives are scarce, and using random compounds from other assays introduces strong confounding bias.

Troubleshooting Steps:

- Construct a Robust Negative Set: Do not use random "inactives." Employ a "distant background" approach.

- Use Domain Adversarial Training: Implement a Gradient Reversal Layer (GRL) network to learn target-invariant features, forcing the model to focus on signals not tied to the over-represented source domain.

- Prioritize Diversity-Oriented Libraries: For screening, choose libraries maximally diverse from your source target training data to reduce model extrapolation errors.

Protocol: Step 1 - Constructing a 'Distant Background' Negative Set

- Objective: Build a negative set for a novel target that minimizes latent bias.

- Method:

- Gather all available active compounds for the novel target (even 10-50 is useful).

- From a large chemical database (e.g., ZINC, Enamine REAL), calculate the pairwise Tanimoto distance (1 - similarity) from each database compound to the nearest active.

- Select compounds in the lowest quartile of similarity (most distant) as your putative negatives. This minimizes the chance of including unconfirmed, latent actives.

- Validate this set by confirming it does not enrich for actives in a related, better-characterized target from the same family, if such data exists.
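The selection step can be sketched with fingerprints stored as sets of on-bit indices. The compound identifiers and bit sets are hypothetical. With RDKit, the fingerprints and similarities would normally come from its fingerprinting and `DataStructs` functions instead.

```python
def tanimoto(a, b):
    """Tanimoto similarity between two fingerprints stored as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def distant_background(actives, library, fraction=0.25):
    """Return library IDs in the lowest-similarity fraction (most distant from actives)."""
    nearest = {
        cid: max(tanimoto(fp, act) for act in actives)   # similarity to nearest active
        for cid, fp in library.items()
    }
    ranked = sorted(nearest, key=lambda cid: (nearest[cid], cid))
    n_keep = max(1, int(len(ranked) * fraction))
    return ranked[:n_keep]


# Hypothetical fingerprints as sets of on-bit indices.
actives = [{1, 2, 3, 4}, {2, 3, 4, 5}]
library = {
    "Z1": {1, 2, 3, 9},      # close analog of an active
    "Z2": {20, 21, 22, 23},  # shares no bits with any active
    "Z3": {3, 4, 30, 31},
    "Z4": {4, 40, 41, 42},
}
negatives = distant_background(actives, library)
```

Ranking by similarity to the nearest active, rather than by average similarity, keeps compounds that resemble even one known active out of the negative set.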

Q3: How can I detect and mitigate "temporal bias" in a continuously updated screening dataset for a novel target?

A: Temporal bias arises because early screening compounds are often structurally similar, and assay technology/conditions change over time. A model may learn to predict the "year of screening" rather than activity.

Troubleshooting Steps:

- Visualize Temporal Drift: Perform a time-series PCA on the compound feature space (e.g., ECFP4 fingerprints) colored by assay year.

- Apply Temporal Cross-Validation: Never use future data to validate past data. Train on data from years 1-3, validate on year 4, test on year 5.

- Use a Temporal Holdout: For the final model, hold out the most recent 1-2 years of data as the ultimate test of predictive utility for future campaigns.

Diagram: Temporal Bias Detection & Mitigation Workflow

Diagram Title: Temporal Bias Mitigation Protocol

Q4: What are the best practices for evaluating a de-biased model's performance, given that standard metrics like ROC-AUC can be misleading?

A: Relying solely on ROC-AUC is insufficient as it can be inflated by dataset bias. A multi-faceted evaluation protocol is mandatory.

Troubleshooting Steps:

- Use Bias-Aware Metrics: Calculate "Bias Discrepancy" (BD) and "Subgroup AUC", as defined in the evaluation metrics table below.

- Perform External Testing: Use a meticulously curated, fully independent external test set from a different source lab or compound library.

- Conduct "Negative Control" Predictions: Run the model on a set of compounds known to be inactive against any target (e.g., certain metabolic intermediates). High false-positive rates indicate artifact learning.

Evaluation Metrics Table:

| Metric | Formula/Description | Target Value | Interpretation |

|---|---|---|---|

| Subgroup AUC | AUC calculated separately for compounds from each major vendor or assay batch. | All Subgroup AUCs > 0.65 | Model performance is consistent across data sources. |

| Bias Discrepancy (BD) | abs(AUC_overall - mean(Subgroup_AUC)) | < 0.10 | Low discrepancy indicates robust performance. |

| External Validation AUC | AUC on a truly independent, recent, and diverse compound set. | > 0.70 | Model has generalizable predictive power. |

| Scaffold Recall | % of unique active scaffolds in the top 1% of predictions. | > 30% (context-dependent) | Model is not just recovering a single chemotype. |
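
As a rough illustration of the first two metrics in the table, the sketch below computes Subgroup AUC and Bias Discrepancy with the standard library only. It assumes binary labels (1 = active, 0 = inactive) and one group tag per compound, such as a vendor or assay batch. The function names are hypothetical, and the pairwise AUC is written for clarity rather than speed.

```python
from collections import defaultdict


def roc_auc(labels, scores):
    """ROC-AUC as the probability that a random active outscores a random inactive.

    Pairwise O(n_pos * n_neg) version, kept simple for illustration.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one active and one inactive")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bias_report(labels, scores, groups):
    """Return overall AUC, per-subgroup AUC, and Bias Discrepancy (BD)."""
    by_group = defaultdict(lambda: ([], []))
    for y, s, g in zip(labels, scores, groups):
        by_group[g][0].append(y)
        by_group[g][1].append(s)
    subgroup_auc = {g: roc_auc(ys, ss) for g, (ys, ss) in by_group.items()}
    overall = roc_auc(labels, scores)
    mean_subgroup = sum(subgroup_auc.values()) / len(subgroup_auc)
    # BD = abs(AUC_overall - mean(Subgroup_AUC)), as in the table above.
    return overall, subgroup_auc, abs(overall - mean_subgroup)
```

A subgroup that contains only actives or only inactives raises an error here. In practice such groups would need to be merged or reported separately.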

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function & Role in De-biasing |

|---|---|

| Diverse Compound Libraries (e.g., Enamine REAL Diversity, ChemBridge DIVERSet) | Provide a broad, unbiased chemical space for prospective screening and for constructing "distant background" negative sets. Essential for testing model generalizability. |

| Benchmark Datasets (e.g., DEKOIS, LIT-PCBA) | Provide carefully curated datasets with hidden validation cores, designed to test a model's ability to avoid decoy bias and recognize true activity signals. |

| Adversarial Debiasing Software (e.g., AIF360, Fairlearn) | Python toolkits for bias mitigation; AIF360 includes implementations of adversarial debiasing, reweighing, and prejudice remover algorithms. Critical for implementing advanced bias mitigation. |

| Chemistry-Aware Python Libraries (e.g., RDKit, DeepChem) | Enable fingerprint generation, molecular featurization, scaffold analysis, and seamless integration of chemical logic into machine learning pipelines. |

| Model Explainability Tools (e.g., SHAP, Captum) | Used to audit which features a model (and its adversarial debiasing counterpart) relies on, identifying potential "good signal" removal or artifact learning. |

| Structured Databases (e.g., ChEMBL, PubChem) | Provide essential context for understanding historical bias, identifying potential assay artifacts, and performing meta-analysis across target classes. |

Diagram: De-biased Virtual Screening Protocol (the de-biased virtual screening workflow)

Technical Support Center: Troubleshooting Guides & FAQs

This support center addresses common challenges in implementing active learning (AL) and bias-aware sampling for chemogenomic model refinement. The context is a research thesis on handling data bias in structure-based chemogenomic models.

FAQs & Troubleshooting

Q1: My active learning loop seems to be stuck, selecting redundant data points from a narrow chemical space. How can I encourage exploration? A: This indicates the acquisition function may be overly greedy. Implement a diversity component.

- Protocol: Cluster-Based Diversity Sampling

- Step 1: After model training, generate embeddings (e.g., from the penultimate layer) for all compounds in the unlabeled pool.

- Step 2: Perform clustering (e.g., k-means, Butina) on these embeddings. For k-means, use the elbow method on the sum of squared distances to estimate the number of clusters; for Butina, choose a distance cutoff instead.

- Step 3: Within each cluster, use the primary acquisition function (e.g., uncertainty) to rank candidates.

- Step 4: Select the top-N candidates from each cluster to form the batch for the next iteration.

- Quantitative Impact: This typically increases the spread of selected compounds, measured by internal diversity metrics (e.g., average Tanimoto distance).
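
Steps 3 and 4 of this protocol can be sketched as below. The example assumes the cluster labels from Step 2 and the per-compound uncertainty scores are already computed. The function name `select_diverse_batch` and its parameters are hypothetical.

```python
from collections import defaultdict


def select_diverse_batch(pool_ids, cluster_labels, uncertainty, per_cluster):
    """Pick the most uncertain `per_cluster` compounds from every cluster."""
    clusters = defaultdict(list)
    for cid, label, u in zip(pool_ids, cluster_labels, uncertainty):
        clusters[label].append((u, cid))
    batch = []
    for label in sorted(clusters):
        # Rank candidates inside the cluster by the primary acquisition score.
        ranked = sorted(clusters[label], key=lambda item: item[0], reverse=True)
        batch.extend(cid for _, cid in ranked[:per_cluster])
    return batch
```

With uncertainties `[0.9, 0.8, 0.1, 0.3, 0.7, 0.2]` over clusters `[0, 0, 0, 1, 1, 2]`, a purely greedy top-3 would take two compounds from cluster 0, whereas this selection takes one compound from each cluster.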

Q2: My model performance degrades on hold-out test sets representing underrepresented protein families, despite high overall accuracy. Is this bias, and how can I detect it? A: Yes, this is a classic sign of dataset bias. Implement bias-aware validation splits.

- Protocol: Stratified Performance Analysis

- Step 1: Stratify your test/validation data by relevant metadata before model training. For chemogenomics, key strata are: Protein Family (e.g., GPCRs, Kinases), Ligand Scaffold, and Experimental Source.

- Step 2: Track performance metrics (AUC-ROC, RMSE) per stratum across AL iterations.

- Step 3: Calculate the performance disparity (e.g., max AUC difference) between the best and worst-performing strata.

- Data Presentation:
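
The per-stratum results are naturally collected as a stratum-versus-metric mapping, one per AL iteration. The sketch below illustrates Steps 2 and 3 for a regression metric (RMSE). It assumes one stratum label per test example, and all function names are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def per_stratum_rmse(y_true, y_pred, strata):
    """RMSE computed separately for each stratum (e.g., protein family)."""
    squared = defaultdict(list)
    for t, p, s in zip(y_true, y_pred, strata):
        squared[s].append((t - p) ** 2)
    return {s: sqrt(sum(v) / len(v)) for s, v in squared.items()}


def performance_disparity(metric_by_stratum):
    """Gap between the best- and worst-performing strata."""
    values = list(metric_by_stratum.values())
    return max(values) - min(values)


def disparity_history(iterations):
    """iterations: list of (y_true, y_pred, strata) tuples, one per AL iteration."""
    return [performance_disparity(per_stratum_rmse(*it)) for it in iterations]
```

A disparity that stays flat or grows across iterations suggests the sampling strategy is not reaching the underrepresented strata.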

Q3: How do I integrate bias correction directly into the active learning sampling strategy? A: Use a bias-aware acquisition function that weights selection probability inversely to the density of a point's stratum in the training set.

- Protocol: Inverse Density Weighting

- Step 1: For each compound i in the unlabeled pool U, identify its stratum s_i (e.g., protein family).

- Step 2: Compute the representation ratio: r_s = (Count(s_i) in Training Set) / (Total Training Set Size).

- Step 3: Calculate the base acquisition score a_i (e.g., predictive variance).

- Step 4: Compute the final bias-aware score: a_i' = a_i * (1 / (r_s + α)), where α is a small smoothing constant.

- Step 5: Select the batch with the highest a_i' scores.
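
The inverse density weighting protocol maps directly onto a short function. The sketch below is illustrative only: the default `alpha=0.01` is an assumed value for the smoothing constant, and the function names are hypothetical.

```python
from collections import Counter


def bias_aware_scores(pool_strata, base_scores, train_strata, alpha=0.01):
    """Compute a_i' = a_i / (r_s + alpha) for every compound in the unlabeled pool."""
    counts = Counter(train_strata)
    total = len(train_strata)
    scores = []
    for stratum, a_i in zip(pool_strata, base_scores):
        # Representation ratio of this compound's stratum in the training set.
        r_s = counts[stratum] / total if total else 0.0
        scores.append(a_i / (r_s + alpha))
    return scores


def select_batch(pool_ids, scores, batch_size):
    """Return the IDs with the highest bias-aware scores."""
    ranked = sorted(zip(scores, pool_ids), key=lambda item: item[0], reverse=True)
    return [cid for _, cid in ranked[:batch_size]]
```

Because a stratum absent from the training set has r_s = 0, its compounds are boosted by a factor of 1/alpha. A very small alpha therefore lets unseen strata dominate the batch.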

Q4: What are the computational resource bottlenecks in scaling these methods for large virtual libraries (>1M compounds)? A: The primary bottlenecks are model inference on the unlabeled pool and clustering for diversity.

- Solution Protocol: Submodular Proxy Sampling

- Step 1: Use a cheaper, lower-fidelity model (e.g., ECFP fingerprint + Random Forest) to screen the entire library and select a top-100k subset.

- Step 2: Apply your primary, expensive structure-based model (e.g., Graph Neural Network) only to this subset for precise uncertainty estimation.

- Step 3: Apply clustering and bias-aware weighting within this manageable candidate set.
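
Steps 1 and 2 amount to a two-stage filter, which might look like the sketch below. Both scoring callables are placeholders for the user's own models (a fingerprint-based proxy and the structure-based model). The function name and parameters are hypothetical.

```python
import heapq


def shortlist_with_uncertainty(library, cheap_score, expensive_uncertainty, shortlist_size):
    """Two-stage screen: cheap proxy over everything, expensive model on the shortlist only.

    cheap_score and expensive_uncertainty are caller-supplied functions that map
    a compound to a float (assumed interfaces).
    """
    # Stage 1: keep the top-scoring compounds under the cheap proxy model.
    shortlist = heapq.nlargest(shortlist_size, library, key=cheap_score)
    # Stage 2: run the expensive model only on the shortlist.
    return [(compound, expensive_uncertainty(compound)) for compound in shortlist]
```

`heapq.nlargest` avoids sorting the full library, which matters at the >1M scale. The clustering and bias-aware weighting of Step 3 would then operate on the returned list.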

Visualizations

Diagram Title: Active Learning with Bias-Aware Iteration Loop

Diagram Title: Bias-Aware Score Calculation for a Candidate

The Scientist's Toolkit: Research Reagent Solutions

| Item | Function in Experiment | Example/Description |

|---|---|---|

| Structure-Based Featurizer | Converts protein-ligand 3D structures into machine-readable features. | DeepChem's AtomicConv or DGL-LifeSci's PotentialNet. Critical for the primary predictive model. |

| Fingerprint-Based Proxy Model | Enables fast pre-screening of large compound libraries. | RDKit for generating ECFP/Morgan fingerprints paired with a Scikit-learn Random Forest. |

| Stratified Data Splitter | Creates training/validation/test splits that preserve subgroup distributions. | Scikit-learn's StratifiedShuffleSplit or custom splits based on protein family SCOP codes. |

| Clustering Library | Enforces diversity in batch selection. | RDKit's Butina clustering (for fingerprints) or Scikit-learn's MiniBatchKMeans (for embeddings). |

| Bias Metric Calculator | Quantifies performance disparity across strata. | Custom script to compute maximum gap in AUC-ROC or standard deviation of per-stratum RMSE. |

| Active Learning Framework | Manages the iterative training, scoring, and data addition loop. | modAL (a modular active learning framework for Python), extended with custom acquisition functions. |

| Metadata-Enabled Database | Stores compound-protein pairs with essential stratification metadata. | SQLite or PostgreSQL with tables for protein family, ligand scaffold, assay conditions. |

Diagnosing and Correcting Bias: A Troubleshooting Guide for Model Developers

Troubleshooting Guides & FAQs

This technical support center addresses common issues in detecting bias through learning curves within chemogenomic model development. The context is research on handling data bias in structure-based chemogenomic models.

FAQ: Interpreting Curve Behavior

Q1: My training loss decreases steadily, but my validation loss plateaus early. What does this indicate? A: This is a primary red flag for overfitting, suggesting the model is memorizing training data specifics (e.g., artifacts of a non-representative chemical scaffold split) rather than learning generalizable structure-activity relationships. It indicates high variance and likely poor performance on new, structurally diverse compounds.

Q2: Both training and validation loss are decreasing but remain high and parallel. What is the problem? A: This pattern indicates underfitting or high bias. The model is too simple to capture the complexity of the chemogenomic data. Potential causes include inadequate featurization (e.g., poor pocket descriptors), overly strict regularization, or a model architecture insufficient for the task.

Q3: My validation curve is more jagged/noisy compared to the smooth training curve. Why? A: Noise in the validation curve often stems from a small or non-representative validation set. In chemogenomics, this can occur if the validation set contains few examples of key target families or chemical classes, making performance assessment unstable.

Q4: What does a sudden, sharp spike in validation loss after a period of decrease signify? A: This usually signals training instability rather than gradual overfitting. It is often related to an excessively high learning rate or a significant distribution shift between the training and validation data (e.g., validation compounds have binding modes not seen in training).

Diagnostic Metrics & Quantitative Thresholds

The following table summarizes key metrics derived from training/validation curves to diagnose bias and variance.

Table 1: Diagnostic Metrics from Learning Curves

| Metric | Formula / Description | Interpretation Threshold (Typical) | Indicated Problem |

|---|---|---|---|

| Generalization Gap | Validation Loss - Training Loss (at convergence) | > 10-15% of Training Loss | Significant Overfitting |

| Loss Ratio (Final) | Validation Loss / Training Loss | > 1.5 | High Variance / Overfitting |

| Loss Ratio (Final) | Validation Loss / Training Loss | ~1.0 but both high | High Bias / Underfitting |

| Convergence Delta | Epoch of Train Loss Min minus Epoch of Val. Loss Min | > 20 Epochs (context-dependent) | The validation minimum marks the early-stopping point; a validation minimum far earlier than the training minimum suggests overfitting. |

| Curve Area Gap | Area between train and val curves after epoch 5. | Large, increasing area | Progressive overfitting during training. |
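
The metrics in Table 1 can be computed from two per-epoch loss lists, as in this minimal sketch. It makes several assumptions: "at convergence" is taken to mean the final logged epoch, epochs are indexed from 0, and the area is approximated with the trapezoidal rule. The two minimum epochs are returned separately so that the sign convention of the convergence delta stays explicit. The function name is hypothetical.

```python
def curve_diagnostics(train_loss, val_loss, start_epoch=5):
    """Summarize per-epoch loss curves (lists indexed by epoch, starting at 0)."""
    if not train_loss or len(train_loss) != len(val_loss):
        raise ValueError("loss curves must be non-empty and of equal length")
    final_train, final_val = train_loss[-1], val_loss[-1]
    gap = final_val - final_train
    # Trapezoidal area between the curves from start_epoch onward (unit epoch spacing).
    diffs = [v - t for t, v in zip(train_loss[start_epoch:], val_loss[start_epoch:])]
    area = sum((a + b) / 2 for a, b in zip(diffs, diffs[1:]))
    return {
        "generalization_gap": gap,
        "gap_fraction_of_train": gap / final_train if final_train else float("inf"),
        "loss_ratio": final_val / final_train if final_train else float("inf"),
        "train_min_epoch": train_loss.index(min(train_loss)),
        "val_min_epoch": val_loss.index(min(val_loss)),
        "curve_area_gap": area,
    }
```

The returned values can be compared against the typical thresholds in the table, which remain context-dependent.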

Experimental Protocol: Systematic Learning Curve Analysis for Bias Detection

Objective: To diagnose bias (underfitting) and variance (overfitting) in a structure-based chemogenomic model by generating and analyzing training/validation learning curves.

Materials: See "The Scientist's Toolkit" below.

Methodology:

- Data Partitioning: Split your protein-ligand complex dataset using a scaffold split (based on Bemis-Murcko frameworks) or a temporal split to simulate real-world generalization. Avoid random splits, as they can mask bias.

- Model Training Setup: Configure your model (e.g., Graph Neural Network for protein-ligand graphs). Set a fixed, moderately high learning rate initially for clear curve dynamics.

- Metric Logging: Train the model for a preset number of epochs (e.g., 200). After each epoch, calculate and record the loss (e.g., Mean Squared Error) on both the training and hold-out validation sets.

- Curve Generation: Plot epochs (x-axis) against loss (y-axis) for both sets on the same plot.

- Diagnostic Analysis:

- Identify the convergence point for each curve.

- Calculate the Generalization Gap and Loss Ratio from Table 1.

- Observe the shape: parallel curves (underfitting), diverging gap (overfitting), validation spikes (instability).

- Iterative Intervention:

- If underfitting is detected: Increase model capacity (more layers/features), reduce regularization (dropout, weight decay), or improve input features (e.g., add pharmacophore descriptors).

- If overfitting is detected: Apply stronger regularization, implement early stopping at the validation loss minimum, or augment the training data (e.g., via ligand conformer generation).
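
For the data partitioning step of this methodology, a scaffold split can be sketched without any cheminformatics dependency, provided one scaffold key per complex is already available (for example, Bemis-Murcko scaffold SMILES generated with RDKit). The function name and the largest-groups-first heuristic are assumptions for illustration, not a prescribed procedure.

```python
from collections import defaultdict


def scaffold_split(scaffolds, valid_fraction=0.2):
    """Split indices so that no scaffold appears in both training and validation.

    scaffolds: list with one precomputed scaffold key per example (assumed input).
    Returns (train_indices, valid_indices).
    """
    groups = defaultdict(list)
    for idx, scaffold in enumerate(scaffolds):
        groups[scaffold].append(idx)
    # Largest scaffold groups go to training first, so rarer scaffolds
    # tend to end up in validation and probe generalization.
    ordered = sorted(groups.values(), key=lambda g: (-len(g), g[0]))
    train_budget = len(scaffolds) - round(valid_fraction * len(scaffolds))
    train, valid = [], []
    for group in ordered:
        if len(train) + len(group) <= train_budget:
            train.extend(group)
        else:
            valid.extend(group)
    return train, valid
```

Because whole scaffold groups are assigned together, the realized validation fraction can differ from `valid_fraction` when a few scaffolds dominate the dataset.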

Workflow Diagram: Bias Diagnosis and Mitigation Workflow (bias detection protocol)

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Chemogenomic Bias Analysis Experiments

| Item / Solution | Function in Bias Detection |

|---|---|