Enhancing Genomic Subtyping Resolution: Strategies for Superior Pathogen Discrimination and Precision Medicine


Abstract

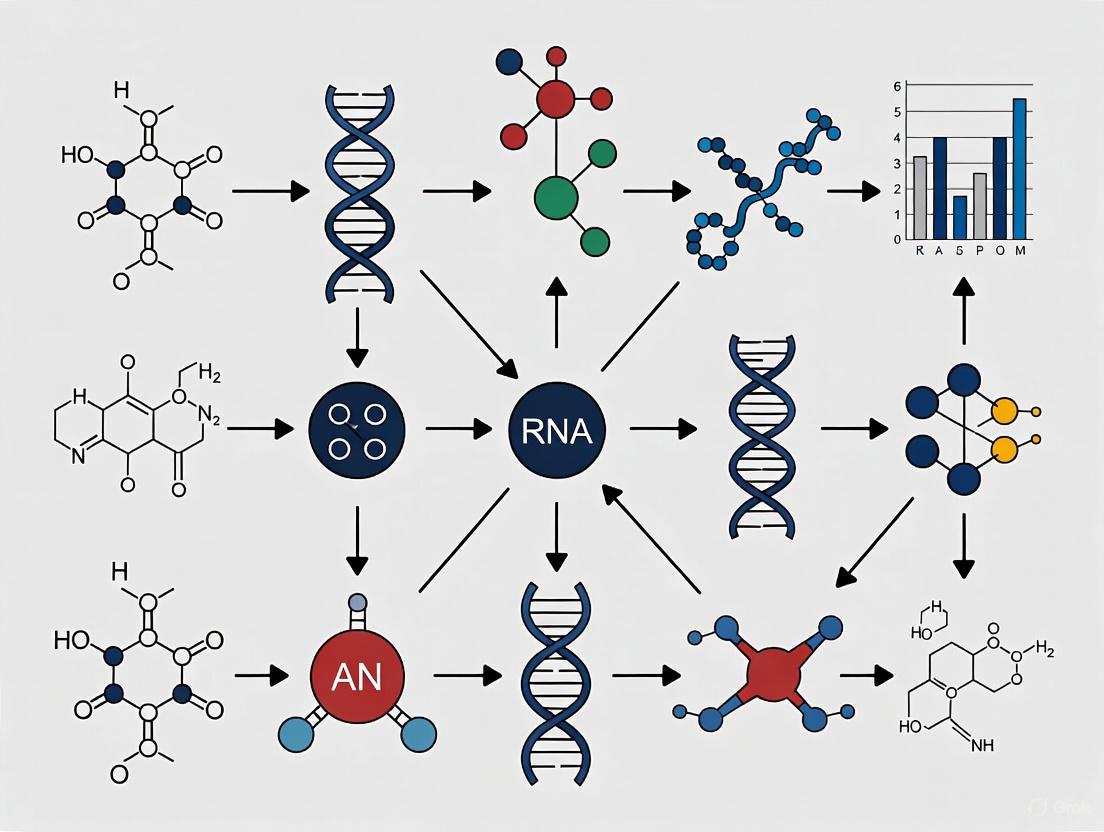

This article provides a comprehensive exploration of modern approaches for enhancing the discriminatory power of genomic subtyping methods, a critical need for researchers and drug development professionals. It covers foundational principles, from transitioning beyond traditional methods like PFGE to advanced whole-genome sequencing (WGS) techniques. The scope includes a detailed analysis of current methodologies like cgMLST and wgMLST, tackles optimization challenges such as mobile genetic element interference, and presents rigorous validation frameworks for comparing statistical and deep learning-based integration. By synthesizing insights from bacterial epidemiology and cancer genomics, this resource aims to equip scientists with the knowledge to achieve higher resolution in strain discrimination and disease subtyping for improved outbreak detection and personalized therapies.

From Phenotypes to Base Pairs: The Evolution and Core Principles of High-Resolution Subtyping

In genomic epidemiology, discriminatory power refers to the ability of a subtyping method to distinguish between epidemiologically unrelated bacterial strains. This fundamental characteristic determines the effectiveness of outbreak investigations, source tracking, and pathogen surveillance. The transition from traditional methods like pulsed-field gel electrophoresis (PFGE) to whole-genome sequencing (WGS) has fundamentally transformed our approach to bacterial subtyping, offering unprecedented resolution for differentiating bacterial pathogens. However, this advanced capability comes with significant technical challenges that can impact the consistency and reliability of laboratory results. This technical support center addresses the specific issues researchers encounter when implementing these sophisticated genomic subtyping methods, providing practical troubleshooting guidance framed within the broader research objective of optimizing discriminatory power.

Table: Evolution of Key Subtyping Methods and Their Resolutions

| Subtyping Method | Genetic Basis | Discriminatory Power | Primary Use Case |
|---|---|---|---|
| PFGE [1] [2] | Restriction fragment patterns | Moderate (Gold standard for outbreak investigation) | Outbreak investigations, source tracking |
| MLST [1] | Sequences of 2-10 genes | Low to Moderate (Phylogenetic subtyping) | Phylogenetic studies, population genetics |
| Whole-Genome Sequencing (WGS) [1] | Full genomic sequence | High (Can be tailored for low or high resolution) | Outbreak detection, transmission tracing, comprehensive characterization |

Technical Support Center: Troubleshooting Genomic Subtyping Methods

Frequently Asked Questions (FAQs)

FAQ 1: Why does our WGS analysis yield different cluster results compared to other laboratories when analyzing the same bacterial isolates?

Differences in cluster results between laboratories typically stem from pipeline heterogeneity rather than data quality issues. Recent multi-country assessments revealed that different bioinformatics pipelines can generate varying cluster compositions, particularly at the outbreak detection level [3]. This inconsistency primarily occurs because:

- Schema Differences: Laboratories use different cgMLST or wgMLST schemas with varying numbers and selections of loci

- Algorithm Variations: Clustering may be performed with single-linkage hierarchical clustering (HC) or with Minimum-Spanning Tree (MST) generation using the MSTreeV2 algorithm implemented in GrapeTree (GT) [3]

- Threshold Discrepancies: Lack of standardized genetic distance thresholds for defining clusters

Solution: Implement threshold flexibilization strategies and participate in continuous pipeline comparability assessments, as demonstrated by the BeONE consortium, which found that adjusting thresholds improved detection of similar outbreak signals across different laboratories [3].
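To make the HC option concrete, the sketch below cuts single-linkage clustering at a fixed allele-distance threshold using a union-find structure. It assumes a symmetric pairwise distance matrix (for example, cgMLST allele differences) and that two isolates are linked when their distance is at most `threshold`; whether a given pipeline uses an inclusive or exclusive threshold is an assumption to check against its documentation. The function name and toy matrix are hypothetical.

```python
def single_linkage_clusters(dist, threshold):
    """Return one cluster label per isolate for single-linkage HC cut at `threshold`.

    Isolates share a cluster when a chain of pairwise distances, each
    <= threshold, connects them (inclusive threshold is an assumption).
    """
    n = len(dist)
    parent = list(range(n))  # union-find forest: each isolate starts alone

    def find(i):
        # Walk to the root, halving the path as we go
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        for j in range(i + 1, n):
            if dist[i][j] <= threshold:
                parent[find(i)] = find(j)  # merge the two clusters

    # Relabel roots as 0, 1, 2, ... in order of first appearance
    roots, labels = {}, []
    for i in range(n):
        labels.append(roots.setdefault(find(i), len(roots)))
    return labels


# Hypothetical allele-difference matrix for four isolates
example_dist = [
    [0, 3, 5, 20],
    [3, 0, 4, 21],
    [5, 4, 0, 19],
    [20, 21, 19, 0],
]
print(single_linkage_clusters(example_dist, 4))  # [0, 0, 0, 1]
print(single_linkage_clusters(example_dist, 3))  # [0, 0, 1, 2]
```

Note the chaining behaviour of single linkage: isolates 0 and 2 differ by 5 alleles, yet they join the same cluster at threshold 4 because each is within 4 alleles of isolate 1. Re-running the function over a range of thresholds yields the series of partitions that threshold-flexibilization approaches compare.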

FAQ 2: Why does discriminatory power vary significantly across different pathogens when using the same cgMLST approach?

Different traditional typing groups (e.g., serotypes) exhibit remarkably different genetic diversity profiles, which directly impacts how effectively cgMLST can discriminate between strains [3]. For example:

- Listeria monocytogenes shows clear stability plateaus that correspond to sequence types (STs) [3]

- Campylobacter jejuni demonstrates marked discrepancies between pipelines due to different resolution powers of allele-based schemas [3]

- The genetic heterogeneity within a species affects how many allele differences constitute an outbreak cluster

Solution: Develop species-specific and sequence-type-specific thresholds rather than applying uniform criteria across all pathogens.

FAQ 3: Why is our config file update not being recognized during the allele calling process?

This common bioinformatics issue typically relates to caching of previous configurations. To resolve this, you must overwrite the collection of terms that were cached for older versions of your config file by specifying --no-cache in your command [4]. Always verify that:

- File paths in the config are correctly specified

- The schema version matches your reference database

- All dependencies have been updated compatibly

Troubleshooting Guides

Issue: Low Discriminatory Power with cgMLST for Certain Pathogens

Problem: cgMLST analysis fails to provide sufficient resolution to distinguish between epidemiologically unrelated isolates of Campylobacter jejuni.

Investigation:

- Verify that your schema includes an adequate number of core genome loci (typically 500-3,000 depending on the pathogen)

- Check the genetic diversity of your dataset: some clonal pathogens naturally exhibit limited diversity

- Compare your results with traditional typing methods like MLST to ensure biological relevance

Resolution:

- Transition to wgMLST (whole-genome MLST), which covers both core and accessory genome loci and therefore provides higher resolution [3]

- Implement a SNP-based approach for high-resolution analysis of closely related isolates [1] [3]

- For C. jejuni, consider using a standardized, higher-resolution schema specifically validated for this pathogen

Issue: Inconsistent Cluster Composition Between Analytical Pipelines

Problem: Your WGS pipeline identifies different outbreak clusters compared to collaborative laboratories using the same raw data.

Investigation:

- Document the specific parameters of each pipeline:

  - Allele-calling algorithm

  - Schema version and source

  - Clustering algorithm (HC vs. MST/GT)

  - Distance threshold criteria

- Analyze a standardized dataset with known epidemiological relationships

Resolution:

- Apply ReporTree [3] to harmonize clustering information across different distance thresholds

- Perform an inter-pipeline clustering congruence assessment [3]

- Establish internal validation protocols using reference strains with known relationships

- Implement threshold flexibilization to identify optimal outbreak detection parameters [3]

Figure: Troubleshooting Inconsistent Cluster Results Between Pipelines

Experimental Protocols for Assessing Discriminatory Power

Protocol: Inter-Pipeline Congruence Assessment

Purpose: To evaluate the congruence of clustering results between different WGS bioinformatics pipelines used for genomic surveillance of foodborne bacterial pathogens [3].

Materials:

- WGS datasets of target pathogen (e.g., Listeria monocytogenes, Salmonella enterica, Escherichia coli, Campylobacter jejuni)

- Multiple bioinformatics pipelines (e.g., cg/wgMLST schemas, allele/SNP-callers)

- ReporTree software [3]

- Computing infrastructure with adequate storage and processing capacity

Methodology:

Dataset Preparation:

- Select a diverse collection of bacterial isolates with known epidemiological relationships

- Include reference strains where genetic relationships are well-established

- Ensure sequence quality meets minimum requirements (completeness, coverage)

Multi-Pipeline Analysis:

- Analyze the same dataset using each participating laboratory's standard pipeline

- Include both allele-based (cgMLST, wgMLST) and SNP-based pipelines

- Apply both hierarchical clustering (HC) and Minimum-Spanning Tree (MST) generation where possible

Cluster Comparison:

- Use ReporTree to harmonize clustering information across all possible distance thresholds

- Identify stability regions where cluster composition remains consistent across threshold ranges

- Calculate congruence scores between pipelines at different threshold levels

Threshold Optimization:

- Identify threshold ranges where different pipelines detect similar outbreak signals

- Determine pathogen-specific thresholds that maximize discriminatory power while maintaining epidemiological relevance

Expected Results: This protocol will identify pipeline-specific biases and establish optimal thresholds for cluster detection, directly contributing to improved discriminatory power in genomic subtyping methods.
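The congruence-scoring step can be sketched with the adjusted Rand index (ARI), a standard chance-corrected measure of agreement between two partitions of the same isolates (1.0 for identical partitions, around 0 for chance-level agreement). Using ARI here is an assumption for illustration; it is not necessarily the congruence metric used in the BeONE assessment [3]. Cluster labels are arbitrary, so only co-membership of isolates matters, and the example assignments are hypothetical.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_a, labels_b):
    """Chance-corrected agreement between two clusterings (1.0 = identical)."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both partitions must cover the same isolates")
    n = len(labels_a)
    if n < 2:
        return 1.0
    # Pairs placed together by both pipelines (cells of the contingency table)
    both = sum(comb(c, 2) for c in Counter(zip(labels_a, labels_b)).values())
    # Pairs placed together by each pipeline separately
    pairs_a = sum(comb(c, 2) for c in Counter(labels_a).values())
    pairs_b = sum(comb(c, 2) for c in Counter(labels_b).values())
    expected = pairs_a * pairs_b / comb(n, 2)
    maximum = (pairs_a + pairs_b) / 2
    if maximum == expected:  # degenerate case, e.g. both partitions all singletons
        return 1.0
    return (both - expected) / (maximum - expected)


# Hypothetical cluster assignments for six isolates from two pipelines
pipeline_a = ["c1", "c1", "c1", "c2", "c2", "s1"]
pipeline_b = [7, 7, 7, 9, 3, 4]
print(round(adjusted_rand_index(pipeline_a, pipeline_b), 3))  # 0.815
```

In an inter-pipeline assessment, this score would be computed for each pair of pipelines at each threshold; ranges of consecutive thresholds where the score stays high correspond to the stability regions described above.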

Protocol: Validation of Discriminatory Power Against Known Epidemiological Relationships

Purpose: To validate the discriminatory power of a subtyping method by comparing genetic relationships with established epidemiological links [1].

Materials:

- Bacterial isolates from documented outbreaks (known related isolates)

- Environmental or sporadic isolates (known unrelated isolates)

- Reference subtyping method (e.g., PFGE, MLST)

- WGS platform and bioinformatics pipeline

Methodology:

Strain Selection:

- Include isolates from confirmed outbreak events with epidemiological links

- Include spatially and temporally distinct isolates with no known epidemiological connections

- Balance the dataset to include diverse genetic backgrounds

Blinded Analysis:

- Perform WGS and subtyping analysis without knowledge of epidemiological relationships

- Apply appropriate genetic distance thresholds for cluster definition

- Construct phylogenetic trees to visualize genetic relationships

Concordance Assessment:

- Compare genetic clustering with known epidemiological links

- Calculate sensitivity and specificity for detecting known relationships

- Compare resolution with reference subtyping methods

Discriminatory Power Quantification:

- Calculate Simpson's index of diversity to quantify discriminatory power

- Determine the number of types identified and the frequency of each type

- Assess the ability to distinguish between epidemiologically unrelated isolates
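The sensitivity and specificity step can be computed over isolate pairs. The sketch below assumes that a pair is epidemiologically linked when both isolates carry the same outbreak ID (sporadic isolates are `None`) and genetically clustered when both share a cluster label, so every genetic singleton must have its own unique label. This pairwise definition is one common convention chosen here as an assumption, and the panel is hypothetical.

```python
from itertools import combinations


def pairwise_sensitivity_specificity(genetic_labels, outbreak_ids):
    """Compare genetic clusters with known epidemiological links over all isolate pairs."""
    tp = fn = fp = tn = 0
    for i, j in combinations(range(len(outbreak_ids)), 2):
        linked = outbreak_ids[i] is not None and outbreak_ids[i] == outbreak_ids[j]
        clustered = genetic_labels[i] == genetic_labels[j]
        if linked and clustered:
            tp += 1  # known link recovered
        elif linked:
            fn += 1  # known link missed (outbreak split across clusters)
        elif clustered:
            fp += 1  # unrelated isolates merged
        else:
            tn += 1  # unrelated isolates kept apart
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return sensitivity, specificity


# Hypothetical panel: three outbreak isolates and two sporadic isolates
outbreak_ids = ["OB1", "OB1", "OB1", None, None]
genetic_labels = [0, 0, 1, 1, 2]
sens, spec = pairwise_sensitivity_specificity(genetic_labels, outbreak_ids)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")  # sensitivity=0.33, specificity=0.86
```

Here one outbreak isolate is split off and merged with a sporadic isolate, which lowers both measures; sweeping the clustering threshold and recomputing them shows the trade-off between splitting outbreaks and merging unrelated cases.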

Figure: Experimental Validation of Discriminatory Power

The Scientist's Toolkit: Essential Research Reagents and Platforms

Table: Key Research Reagent Solutions for Genomic Subtyping

| Tool/Platform | Type | Function | Application in Discriminatory Power Research |
|---|---|---|---|
| ReporTree [3] | Software | Harmonizes clustering information across distance thresholds | Enables comparison of cluster results between different pipelines and laboratories |
| cg/wgMLST Schemas [3] | Bioinformatics Resource | Defines loci for allele-based typing | Provides framework for standardized strain comparison; different schemas offer varying resolution |
| ResFinder [5] | Web Tool | Identifies antimicrobial resistance genes from WGS data | Adds functional characterization to genetic subtyping, enhancing epidemiological investigation |
| SNP-based Pipelines [1] [3] | Bioinformatics Method | Detects single-nucleotide polymorphisms across genomes | Offers highest resolution subtyping for outbreak investigation and transmission tracing |
| INNUENDO [3] | Analytical Platform | Integrated WGS data analysis and visualization | Supports standardized bioinformatic analyses for cross-laboratory comparisons |
| PFGE [1] [2] | Laboratory Method | Separates large DNA fragments to generate fingerprints | Gold standard reference method for validating new subtyping approaches |

The pursuit of enhanced discriminatory power in genomic epidemiology requires continuous methodological refinement and standardization. As the field transitions from traditional to genomic subtyping methods, researchers must address the challenges of pipeline heterogeneity, threshold optimization, and species-specific validation. The protocols and troubleshooting guides presented here provide a framework for overcoming these obstacles, enabling more accurate cluster detection and outbreak investigation. By implementing standardized congruence assessments, validating against known epidemiological relationships, and selecting appropriate reagents and platforms, researchers can significantly improve the reliability and discriminatory power of their genomic subtyping methods, ultimately strengthening public health responses to infectious disease threats.

For decades, public health and clinical microbiology laboratories relied on pulsed-field gel electrophoresis (PFGE) and multi-locus sequence typing (MLST) as cornerstone methods for bacterial strain typing and outbreak investigation. While these methods served as crucial public health tools, they presented significant limitations in resolution, speed, and reproducibility. The emergence of whole-genome sequencing (WGS) has fundamentally transformed microbial surveillance by providing unprecedented resolution for distinguishing bacterial strains. WGS-based methods represent a paradigm shift in molecular epidemiology, offering superior discriminatory power that enables public health officials to detect outbreaks with greater precision, trace transmission pathways more accurately, and distinguish between truly related cases and sporadic infections with remarkable clarity [6] [7].

Traditional methods like PFGE and the 7-locus MLST scheme provided initial frameworks for strain differentiation but lacked the resolution needed for fine-scale outbreak investigations. PFGE, while widely used in networks like PulseNet USA for over two decades, offered limited discriminatory power for certain pathogens and produced results that were challenging to standardize across laboratories [7]. Similarly, conventional schemes such as MLST, based on only seven housekeeping genes, and PCR ribotyping often failed to differentiate between closely related isolates, particularly within monomorphic species or widespread lineages such as Legionella pneumophila sequence type ST1 or Clostridioides difficile ribotype RT027 [8] [6]. The transition to WGS-based typing methods addresses these limitations by examining genetic variation across thousands of loci or the entire genome, providing a resolution that has redefined our approach to outbreak detection and microbial population genetics.

Technical Foundations: Understanding WGS-Based Typing Methods

Whole-genome sequencing enables several analytical approaches for strain typing, each with distinct methodologies and applications in public health and research settings. The primary WGS-based typing methods include core genome MLST (cgMLST), whole genome MLST (wgMLST), and single nucleotide polymorphism (SNP) analysis.

Core Genome MLST (cgMLST) analyzes genetic variation in a standardized set of core genes present in nearly all isolates of a species. This approach typically examines 500-2,000 genes that are conserved across the bacterial population, providing a balance between standardization and discriminatory power. For example, a cgMLST scheme for Legionella pneumophila may utilize 1,521 core genes, while a simplified 50-loci scheme has been proposed for easier standardization between laboratories [6]. cgMLST forms the backbone of national surveillance systems, such as PulseNet 2.0 in the United States, which uses a threshold of 0-10 allelic differences to define clusters of Shiga-toxin-producing E. coli (STEC) infections [7].
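The comparison underlying cgMLST clustering is a count of loci with different allele calls. The minimal sketch below assumes each isolate's profile is a list of allele numbers in schema order, with `None` marking loci that could not be called, and it skips a locus whenever either isolate lacks a call (pairwise deletion). Pipelines differ in how they treat missing loci, so this convention and the function names are assumptions.

```python
def allele_distance(profile_a, profile_b, missing=frozenset({None})):
    """Number of loci with different allele calls, ignoring loci missing in either isolate."""
    if len(profile_a) != len(profile_b):
        raise ValueError("profiles must come from the same schema (same loci, same order)")
    return sum(
        1
        for a, b in zip(profile_a, profile_b)
        if a not in missing and b not in missing and a != b
    )


def distance_matrix(profiles):
    """Symmetric matrix of pairwise allele distances for a list of profiles."""
    return [[allele_distance(p, q) for q in profiles] for p in profiles]


# Hypothetical 5-locus profiles (real cgMLST schemes have hundreds to thousands of loci)
isolate_1 = [1, 5, 3, None, 7]
isolate_2 = [1, 6, 3, 2, 8]
print(allele_distance(isolate_1, isolate_2))  # 2: the fourth locus is skipped
```

A matrix built this way is the input that threshold-based clustering (for example, a 0-10 allele cutoff) operates on.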

Whole Genome MLST (wgMLST) extends the analysis beyond the core genome to include accessory genes that may be present or absent in different isolates. This method typically analyzes thousands of genes (e.g., 4,000-6,000 loci) and provides higher resolution by capturing strain-specific genetic elements. Studies have shown high concordance between wgMLST and SNP-based analyses for outbreak detection, with wgMLST (chromosome-associated loci) demonstrating nearly equivalent performance to high-quality SNP analysis for clustering related STEC isolates [7].

High-Quality SNP (hqSNP) Analysis identifies single nucleotide polymorphisms by comparing isolate genomes to a closely related reference sequence. This method provides the highest possible resolution for distinguishing closely related isolates and is particularly valuable for investigating outbreaks involving highly clonal pathogens. Regression analyses have demonstrated strong correlations between hqSNP differences and cgMLST allelic differences, though the relationship varies by bacterial species and outbreak context [7].
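Once reads have been mapped to the reference and filtered, the SNP distance between two isolates reduces to counting alignment columns where both carry a confident, different base. This sketch assumes the upstream filtering (mapping quality, depth, and masking of repeats or recombinant regions) has already produced aligned sequences of equal length, with `N` or `-` at masked or uncalled positions; it illustrates the counting step only and is not a validated hqSNP pipeline.

```python
VALID_BASES = frozenset("ACGT")


def snp_distance(seq_a, seq_b):
    """Count positions where both aligned sequences have an A/C/G/T call and the calls differ."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must come from the same reference-based alignment")
    return sum(
        1
        for a, b in zip(seq_a.upper(), seq_b.upper())
        if a in VALID_BASES and b in VALID_BASES and a != b
    )


# Hypothetical pseudo-alignment fragments; N and - mark masked or uncalled sites
print(snp_distance("ACGTNA-", "ACCTAAT"))  # 1
```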

Table 1: Comparison of Major Genomic Typing Methods

| Method | Genetic Targets | Discriminatory Power | Standardization Potential | Primary Applications |
|---|---|---|---|---|
| PFGE | Whole genome restriction fragments | Moderate | Limited due to technical variability | Historical outbreak investigation |
| MLST | 7-8 housekeeping genes | Low to moderate | High for sequence-based comparison | Population structure analysis |
| cgMLST | 500-2,000 core genes | High | High with standardized schemes | Routine surveillance & outbreak detection |
| wgMLST | All chromosomal genes | Very high | Moderate with standardized schemes | High-resolution outbreak investigation |
| hqSNP | Single nucleotide variants | Highest | Low due to reference dependence | Fine-scale transmission mapping |

Comparative Advantages: Quantitative Evidence of WGS Superiority

Multiple studies have demonstrated the superior performance of WGS-based methods compared to traditional typing techniques across various bacterial pathogens. The transition to WGS represents not merely an incremental improvement but a fundamental advancement in discriminatory power, throughput, and epidemiological concordance.

For Legionella pneumophila outbreak investigations, WGS has proven particularly valuable for discriminating within common sequence types that were previously challenging to differentiate using conventional methods. Research comparing WGS typing tools for Belgian L. pneumophila outbreaks found that all three WGS approaches (cgMLST, wgMLST, and 50-loci cgMLST) provided concordant results that aligned with traditional sequence-based typing, but with significantly improved resolution. This enhanced discrimination is especially crucial for widespread sequence types like ST1, where standard 7-locus MLST often lacks sufficient resolution to distinguish related from unrelated isolates [6]. The study demonstrated that a simplified 50-loci cgMLST scheme successfully classified isolates into subtypes while maintaining epidemiological concordance, offering a practical solution for standardizing WGS analysis across public health laboratories.

In the context of Clostridioides difficile infections, WGS has revealed important insights into population structures that were obscured by traditional ribotyping methods. A 2025 study analyzing C. difficile isolates from hospitals in Berlin-Brandenburg found that cgMLST analysis revealed very close genetic relatedness between RT027 isolates despite their epidemiological unrelatedness, suggesting a monomorphic population structure. Similar patterns were observed for RT078 isolates, while other ribotypes showed more heterogeneous populations [8]. These findings have important implications for outbreak investigations, suggesting that for monomorphic strains like RT027 and RT078, new definitions of clonal relatedness may be necessary when using high-resolution WGS methods.

The analytical performance of WGS typing methods has been systematically validated in national surveillance systems. For STEC outbreak detection, a comprehensive evaluation of PulseNet 2.0's WGS-based approaches demonstrated high concordance between hqSNP, cgMLST, and wgMLST methods. The regression slope for hqSNP versus cgMLST allele differences was 0.432, while the slope for hqSNP versus wgMLST (chromosomal loci) was 0.966, indicating a nearly 1:1 relationship for the latter comparison [7]. K-means analysis using the Silhouette method showed clear separation of outbreak groups with average silhouette widths ≥0.87 across all methods, confirming the robust clustering performance of WGS-based typing approaches.
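The average silhouette width cited above summarizes how well each isolate sits within its assigned group. For isolate i, a(i) is its mean distance to the other members of its own group, b(i) is the smallest mean distance to any other group, and s(i) = (b(i) - a(i)) / max(a(i), b(i)); the average over all isolates approaches 1 for well-separated groups. The sketch below computes this from a pairwise distance matrix with hypothetical outbreak labels, setting s(i) = 0 for singletons by convention; it is not the exact code used in [7].

```python
def average_silhouette_width(dist, labels):
    """Mean silhouette width for a precomputed distance matrix and group labels."""
    groups = {}
    for i, label in enumerate(labels):
        groups.setdefault(label, []).append(i)
    if len(groups) < 2:
        raise ValueError("silhouette widths need at least two groups")

    widths = []
    for i, label in enumerate(labels):
        own = [j for j in groups[label] if j != i]
        if not own:  # singleton group: silhouette set to 0 by convention
            widths.append(0.0)
            continue
        a = sum(dist[i][j] for j in own) / len(own)  # cohesion within own group
        b = min(  # separation from the nearest other group
            sum(dist[i][j] for j in members) / len(members)
            for other, members in groups.items()
            if other != label
        )
        denom = max(a, b)
        widths.append((b - a) / denom if denom > 0 else 0.0)
    return sum(widths) / len(widths)


# Hypothetical SNP distances: two tight outbreak groups far apart
snp_dist = [
    [0, 1, 10, 10],
    [1, 0, 10, 10],
    [10, 10, 0, 1],
    [10, 10, 1, 0],
]
print(average_silhouette_width(snp_dist, ["OB1", "OB1", "OB2", "OB2"]))  # about 0.9
```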

Table 2: Performance Metrics of WGS Typing Methods for STEC Outbreak Detection

| Method | Comparison to hqSNP (Regression Slope) | Average Silhouette Width | Typical Analysis Time | Technical Complexity |
|---|---|---|---|---|
| hqSNP | Reference | ≥0.87 | Longer | High |
| wgMLST | 0.966 | ≥0.87 | Moderate | Moderate |
| cgMLST | 0.432 | ≥0.87 | Faster | Lower |

Implementation and Workflow Integration

The integration of WGS into public health and clinical laboratories has been facilitated by the development of automated platforms and standardized bioinformatics pipelines. These advancements have addressed earlier challenges related to workflow complexity, turnaround time, and technical expertise requirements.

Automated WGS platforms have demonstrated significant improvements in efficiency compared to manual methods. A 2025 evaluation of the Clear Dx WGS platform for bacterial strain typing found that the automated workflow reduced turnaround time by 16–19 hours and eliminated 3 hours of manual labor while decreasing costs by an estimated 34%–57% depending on the number of isolates processed [9]. Despite these efficiency gains, the analytical performance remained statistically similar to manual methods, with 99% concordance in isolate groupings across 224 bacterial isolates representing 18 species. This demonstrates that automation can substantially improve workflow efficiency without compromising data quality.

National genomics programs have developed sophisticated infrastructure to support WGS implementation. France's Genomic Medicine Initiative (PFMG2025), which focuses on human clinical genomics rather than pathogen surveillance, has established a comprehensive framework including reference centers, clinical laboratories, and data analysis facilities [10]. Similarly, PulseNet USA's transition to PulseNet 2.0 implemented a cloud-based, modular platform that performs end-to-end analysis including sequence quality assessment, de novo assembly, speciation, allele calling, and genotyping tasks using standardized workflows [7]. These standardized systems enable comparable results across different laboratories and facilitate rapid cluster detection.

The development of novel MLST schemes based on WGS data represents another advancement in the field. For Staphylococcus capitis, researchers applied a hierarchical filtering strategy to core genome analysis of 603 high-quality genomes to develop an optimized MLST scheme with superior discriminatory power [11]. This approach identified seven target genes (mntC, phoA, atpB_2, hisS, rluB, carB, and clpP) that provided an optimal balance between cluster resolution and discrimination, successfully distinguishing clinically relevant lineages like the NRCS-A clone (ST1) and linezolid-resistant L clone (ST6). This methodology demonstrates how WGS data can inform the development of more effective typing schemes even for traditionally challenging organisms.
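Once a scheme's loci are fixed, assigning a sequence type is a lookup from the ordered allele profile to an ST definition. The sketch below uses the seven loci named above, but the allele numbers and ST labels in `PROFILES` are invented placeholders, not the published S. capitis definitions [11]; a real implementation would load the scheme's own profile table.

```python
# Locus order as listed for the S. capitis scheme described above
LOCI = ("mntC", "phoA", "atpB_2", "hisS", "rluB", "carB", "clpP")

# HYPOTHETICAL profile table: placeholder allele numbers and ST labels only
PROFILES = {
    (1, 1, 1, 1, 1, 1, 1): "ST-example-1",
    (2, 1, 3, 1, 2, 1, 4): "ST-example-2",
}


def assign_st(allele_calls):
    """Map a {locus: allele number} dict to an ST, or explain why none was assigned."""
    missing = [locus for locus in LOCI if allele_calls.get(locus) is None]
    if missing:
        return "incomplete profile (missing: " + ", ".join(missing) + ")"
    profile = tuple(allele_calls[locus] for locus in LOCI)
    return PROFILES.get(profile, "novel profile (no ST defined)")


calls = dict(zip(LOCI, (2, 1, 3, 1, 2, 1, 4)))
print(assign_st(calls))  # ST-example-2
```

Keeping the locus order in one tuple makes the profile key unambiguous; distinguishing "incomplete" from "novel" profiles matters because only the latter would be candidates for a new ST.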

Troubleshooting Guide: Addressing Common WGS Implementation Challenges

Frequently Asked Questions

Q: Our NGS library yields are consistently low, leading to failed runs or insufficient coverage for reliable typing. What are the primary causes and solutions? A: Low library yield can result from multiple factors in the preparation process:

- Poor input DNA quality: Degraded DNA or contaminants (phenol, salts, EDTA) inhibit enzymatic reactions. Check 260/280 and 260/230 ratios and re-purify if necessary [12].

- Inaccurate quantification: UV spectrophotometry (NanoDrop) often overestimates concentration. Use fluorometric methods (Qubit) for accurate DNA quantification [12] [13].

- Fragmentation issues: Over- or under-shearing creates suboptimal fragment sizes. Optimize fragmentation parameters for your specific instrument [12].

- Adapter ligation inefficiency: Incorrect adapter-to-insert ratios reduce yield. Titrate adapter concentrations and ensure fresh ligase reagents [12].

Q: We observe high rates of adapter dimers in our sequencing results, reducing useful sequence data. How can we minimize this? A: Adapter dimers (sharp ~70-90 bp peaks in electropherograms) indicate ligation issues:

- Optimize purification: Lower the bead-to-sample ratio in the cleanup (or add a second cleanup), since lower ratios exclude more small fragments such as adapter dimers [12].

- Adjust adapter concentration: Excess adapters promote dimer formation; titrate to find optimal concentration [12].

- Verify fragment size: Ensure your insert DNA is properly sized before adapter ligation [12].

Q: How do we handle plasmid mixtures or contaminated samples that complicate assembly and analysis? A: Sample purity is critical for reliable WGS:

- Validate sample quality: Run uncut plasmid on gel or BioAnalyzer to detect multiple species or concatemers [13].

- Linearize plasmids: Distinguish monomers from multimers by running linearized preparations [13].

- Size selection: Gel extraction can isolate the target plasmid from contaminants [13].

- Note: Automated pipelines typically only return consensus for the most abundant species, potentially missing minor variants [13].

Q: What coverage depth is sufficient for reliable cgMLST calling in bacterial isolates? A: Coverage requirements vary by application:

- Manual WGS: Typically targets 100× coverage for most species, though 200× may be needed for difficult genomes like C. difficile [9].

- Automated WGS: Can achieve reliable results with lower coverage (30-80× depending on genome size), with actual coverage often averaging 88× [9].

- Quality metrics: For Oxford Nanopore sequencing, ~20× coverage generally produces highly accurate consensus sequences [13].
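The coverage targets above can be translated into sequencing output with simple arithmetic: expected mean depth is total sequenced bases divided by genome size. The sketch assumes every sequenced base is usable, so it ignores duplicates, trimming, and contamination and should be read as an upper bound; read counts refer to individual reads (each mate of a pair counts once). The genome size in the example is illustrative.

```python
import math


def mean_coverage(n_reads, read_length_bp, genome_size_bp):
    """Expected mean depth = total sequenced bases / genome size."""
    return n_reads * read_length_bp / genome_size_bp


def reads_needed(target_coverage, read_length_bp, genome_size_bp):
    """Reads required to reach a target mean depth (rounded up)."""
    return math.ceil(target_coverage * genome_size_bp / read_length_bp)


# Illustrative numbers: 150 bp reads, ~4.2 Mb genome
print(reads_needed(100, 150, 4_200_000))  # 2800000 reads (1.4 million read pairs)
print(mean_coverage(1_000_000, 150, 5_000_000))  # 30.0
```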

Q: How do we validate that our WGS typing results are epidemiologically relevant? A: Validation requires multiple approaches:

- Compare with known outbreaks: Test methods on previously characterized outbreaks to establish thresholds [6] [7].

- Use multiple schemes: Compare cgMLST, wgMLST, and hqSNP results for concordance [7].

- Establish allele difference thresholds: For STEC, PulseNet uses 0-10 cgMLST allele differences to define clusters [7].

- Epidemiological correlation: Always correlate genetic relatedness with epidemiological data [8] [6].

Technical Issue Resolution Table

Table 3: Troubleshooting Common WGS Preparation Issues

| Problem | Primary Indicators | Root Causes | Corrective Actions |
|---|---|---|---|
| Low Library Yield | Low molar concentration; faint electropherogram peaks | Input DNA degradation; contaminants; quantification errors | Re-purify DNA; use fluorometric quantification; optimize fragmentation [12] |
| High Adapter Dimer Rate | Sharp ~70-90 bp peak in BioAnalyzer | Excessive adapters; inefficient ligation; incomplete cleanup | Titrate adapter:insert ratio; optimize bead cleanup; verify fragment size [12] |
| Insufficient Coverage | <20× average coverage; poor assembly metrics | Low input DNA; sequencing failures; poor library quality | Verify DNA concentration fluorometrically; check library quality metrics; repeat preparation [9] [13] |
| Poor Assembly Quality | Low N50; many contigs; missing genes | Mixed samples; high fragmentation; repetitive elements | Check sample purity; optimize DNA extraction; use appropriate assembler [6] [11] |
| Discordant Typing Results | Inconsistent cluster assignments between methods | Different analytical schemes; quality thresholds | Standardize scheme; validate against reference isolates; establish QC metrics [6] [7] |

Essential Reagents and Research Solutions

Successful implementation of WGS for molecular typing requires careful selection of reagents and platforms optimized for specific applications. The following solutions represent key components of robust WGS workflows for public health and research laboratories.

Table 4: Essential Research Reagents and Platforms for WGS Typing

| Reagent/Platform | Function | Application Notes | Performance Characteristics |
|---|---|---|---|
| Clear Dx WGS Platform | Automated nucleic acid extraction and sequencing | Fully automated solution for bacterial strain typing; integrates liquid handling, thermocyclers, and sequencers | Reduces turnaround time by 16-19h; decreases costs by 34-57%; 99% concordance with manual methods [9] |
| Nextera XT DNA Library Prep Kit | Manual library preparation | Fragments DNA and attaches adapters in a single-tube reaction | Compatible with Illumina sequencing; used in multiple validation studies [9] [8] |
| Kapa HyperPlus Library Prep Kit | Manual library preparation | High-performance kit for challenging samples | Used in Legionella WGS studies; provides uniform coverage [6] |
| SeqSphere+ Software | cgMLST analysis | Commercial platform for allele calling and cluster analysis | Supports standardized cgMLST schemes; used in multiple validation studies [9] [8] [6] |
| BioNumerics wgMLST | Whole genome analysis | Integrated platform for wgMLST analysis | Used in PulseNet validation; demonstrates high concordance with hqSNP [7] |
| SKESA Assembler | De novo assembly | Optimized for bacterial genome assembly | Used in multiple public health pipelines including PulseNet 2.0 [9] [7] |

The revolution in molecular typing brought by whole-genome sequencing represents a fundamental shift in how public health laboratories detect and investigate disease outbreaks. The superior discriminatory power of WGS-based methods like cgMLST and wgMLST has enabled investigators to distinguish between related and unrelated isolates with precision that was previously unattainable with PFGE or traditional MLST. As standardization improves and costs continue to decline, WGS is poised to become the universal method for pathogen characterization in public health, clinical, and research settings.

The implementation of automated WGS platforms and streamlined bioinformatics pipelines will further accelerate this transition, making high-resolution typing accessible to a broader range of laboratories. Future developments will likely focus on real-time analysis during outbreaks, integration of antimicrobial resistance prediction, and direct sequencing from clinical samples to bypass culture requirements. As these advancements mature, WGS will continue to enhance our ability to track disease transmission, identify emerging threats, and implement targeted control measures with unprecedented speed and accuracy.

Frequently Asked Questions (FAQs)

1. What is the key difference between Simpson's Index and the Shannon Index? Simpson's Index and the Shannon Index respond differently to the abundance of species or types in a community. The Shannon Index is more sensitive to the presence of rare species, while Simpson's Index is more sensitive to changes in the abundance of the most common species [14] [15]. This means that in communities with the same richness but different evenness, these two indices can sometimes show opposite trends [15].

2. My Simpson's Index value decreased after a treatment, but my Shannon Index increased. Is this possible? Yes, this is a possible scenario and highlights why choosing the correct index is critical. This opposite response occurs because the indices weight different aspects of the population. An increase in the Shannon Index suggests an increase in the number of rare types, to which it is sensitive. A simultaneous decrease in Simpson's Index suggests a reduction in evenness, likely through an increased dominance of one or a few common types, to which Simpson's Index is sensitive [15]. You should interpret this result based on which component—rare types or dominant types—is more relevant to your research question.
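The opposite-trend scenario is easy to reproduce with toy counts. The sketch below uses the Shannon index H' = -Σ p ln p and the Gini-Simpson form of Simpson's diversity, 1 - Σ p² (the large-sample version of 1 - D); both sets of counts are hypothetical. Moving from `before` to `after`, H' rises slightly (about 0.943 to 0.950) while 1 - Σ p² falls (0.58 to 0.56), because the top subtype becomes more dominant even as the two minor subtypes become more even.

```python
from math import log


def shannon(counts):
    """Shannon index H' = -sum(p * ln p) over types with nonzero counts."""
    total = sum(counts)
    return -sum((c / total) * log(c / total) for c in counts if c > 0)


def gini_simpson(counts):
    """Simpson's diversity in its 1 - sum(p^2) form."""
    total = sum(counts)
    return 1 - sum((c / total) ** 2 for c in counts)


# Hypothetical subtype counts for one population before and after a treatment
before = [5, 4, 1]
after = [6, 2, 2]
print(f"Shannon: {shannon(before):.3f} -> {shannon(after):.3f}")  # Shannon: 0.943 -> 0.950
print(f"Gini-Simpson: {gini_simpson(before):.2f} -> {gini_simpson(after):.2f}")  # Gini-Simpson: 0.58 -> 0.56
```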

3. When should I use Simpson's Index over the Shannon Index in my genomic research? The choice depends on your research focus:

- Use Simpson's Index (or its reciprocal/inverse, Simpson's Diversity, 1/D) when your primary interest is in understanding the dominance and evenness of common subtypes, for instance, when tracking the spread of a dominant pathogen strain in an outbreak [15].

- Use the Shannon Index when your study is concerned with the full diversity profile, particularly the presence and importance of rare subtypes. This is often crucial in ecological studies and for conservation purposes, where rare species provide critical habitats [15].

4. How do I calculate Simpson's Diversity Index from my data? Simpson's Index can be calculated in a few related ways. A common formula used in ecology is: Simpson's Index of Diversity = 1 - D, where D = Σ n(n-1) / N(N-1) [16].

Where:

- n = the total number of organisms of a particular species/type
- N = the total number of organisms of all species/types
- Σ = the sum of the calculations for each species/type [16]

This calculation yields a value between 0 and 1, where 0 represents no diversity and values approaching 1 represent increasingly high diversity [16].
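A minimal sketch of the calculation above, taking the count n for each type as input. When the "types" are subtypes or sequence types and the organisms are isolates, this finite-sample form is the same expression as the Hunter-Gaston discriminatory index commonly used to compare typing methods. The counts in the example are hypothetical.

```python
def simpsons_index_of_diversity(counts):
    """Return 1 - D, where D = sum(n(n-1)) / (N(N-1)).

    counts: number of organisms (or isolates) observed for each type.
    """
    N = sum(counts)
    if N < 2:
        raise ValueError("need at least two organisms in total")
    D = sum(n * (n - 1) for n in counts) / (N * (N - 1))
    return 1.0 - D


# Hypothetical example: 10 isolates split into types of size 4, 3, 2 and 1.
print(round(simpsons_index_of_diversity([4, 3, 2, 1]), 3))  # prints 0.778
```

Here D = 20 / 90, so 1 - D is about 0.778. A single type containing every isolate gives 0, and all-distinct isolates give 1.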

5. What are "Hill's numbers" and how do they relate to common diversity indices?

Hill's numbers provide a unified framework for diversity indices, known as the effective number of species or "true diversity" [17] [14]. They are represented by qD, where the parameter q defines the sensitivity to species abundances. Common diversity indices are special cases of Hill's numbers [17]:

- ⁰D (q = 0): Species Richness (S). Sensitive to rare species.
- ¹D (q = 1): Exponential of Shannon entropy (exp(H')). Equally sensitive to all species.
- ²D (q = 2): Inverse Simpson index (1/D). Sensitive to common species.

Using Hill's numbers allows for a more consistent and intuitive comparison across communities [17] [14].
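A minimal sketch of Hill's numbers, assuming the standard definition qD = (∑ p_i^q)^(1/(1 - q)), with the q = 1 case taken as its limit exp(H'). As q grows without bound, qD approaches 1 / p_max, the Berger-Parker form listed in Table 1 below. The counts used in the tests are hypothetical.

```python
import math


def hill_number(counts, q):
    """Effective number of types (Hill number of order q) from raw counts."""
    total = sum(counts)
    p = [c / total for c in counts if c > 0]
    if q == 1:
        # Limit q -> 1: exponential of Shannon entropy.
        return math.exp(-sum(pi * math.log(pi) for pi in p))
    if math.isinf(q):
        # Limit q -> infinity: inverse of the largest proportion.
        return 1.0 / max(p)
    # General case: q = 0 gives richness, q = 2 gives the inverse Simpson index.
    return sum(pi ** q for pi in p) ** (1.0 / (1.0 - q))
```

For counts [4, 3, 2, 1], the values fall from 4 (richness) through exp(H') and 1/D (about 3.333) to 2.5 (1 / p_max) as q increases; for a perfectly even community, every order returns the number of types.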

Troubleshooting Guides

Issue: Low Discriminatory Power in My Multilocus Sequence Typing (MLST) Scheme

Problem: Your current MLST scheme is not providing enough resolution to distinguish between closely related bacterial isolates, leading to an unclear picture of transmission dynamics.

Solution:

- Re-evaluate Locus Selection: The core of a high-resolution MLST scheme is the selection of highly variable genes. Follow this validated workflow for designing a new scheme:

This workflow is adapted from a study that developed a high-resolution MLST scheme for *Treponema pallidum* [18].

- Increase the Number of Loci: If you are using an older scheme with fewer than seven loci, consider expanding it. A seven-gene MLST scheme is a standard and robust approach that has been successfully applied to various pathogens like Staphylococcus aureus and Treponema pallidum to achieve high discriminatory power [18].

- Consider Alternative Typing Methods: If optimizing MLST fails, transition to a Multi-Locus Variable Number Tandem Repeat Analysis (MLVA). MLVA typically offers higher resolution than MLST because it targets rapidly evolving VNTR regions. This method has been shown to subdivide common Cryptosporidium parvum gp60 subtypes into multiple distinct MLVA profiles, greatly enhancing outbreak investigation [19].

Issue: Choosing the Wrong Diversity Index Leading to Misinterpretation

Problem: The diversity index you selected is giving counter-intuitive results or is not aligned with your research question, potentially leading to incorrect conclusions.

Solution: Follow this decision pathway to select the most appropriate index:

This guide synthesizes insights from ecological studies comparing index behavior [14] [15].

Verification: After selecting an index, validate your results by calculating a second, complementary index. For example, if you use Simpson's Index, also calculate the Shannon Index. If they show opposite trends, investigate the species abundance distribution in your data to understand why, as this is a known phenomenon [15].

Comparative Tables of Key Metrics

Table 1: Core Diversity Indices and Their Properties

| Index Name | Formula | Sensitivity | Interpretation | Common Use Case |
|---|---|---|---|---|
| Species Richness (S) | S = Count of types [17] | Rare species [14] | The total number of different types/species present. | Quick assessment of variety; detecting impacts of disturbance [14]. |
| Shannon Index (H') | H' = -∑(p_i * ln(p_i)) [17] | Equally sensitive to rare and abundant species [14] | Measures the uncertainty in predicting the identity of a randomly chosen individual. A higher H' indicates greater diversity [17] [14]. | General-purpose diversity assessment; emphasizes overall heterogeneity [15]. |
| Simpson's Index (D) | D = ∑(p_i²) [17] | Abundant species [14] | The probability that two randomly chosen individuals belong to the same type. | Emphasizes the dominance of common types [15]. |
| Simpson's Index of Diversity | 1 - D [16] | Abundant species | The probability that two randomly chosen individuals belong to different types. Ranges from 0 to 1 [16]. | More intuitive interpretation of diversity; used in ecology [16]. |
| Inverse Simpson Index | 1 / D [17] | Abundant species | Equivalent to Simpson's Diversity in Hill's numbers (²D) [17] [14]. Represents the effective number of common species. | Used in population genetics and community ecology. |
| Berger-Parker Index | 1 / p_max [14] | Most abundant species only | The reciprocal of the proportion of the most abundant type. Measures dominance [14]. | Assessing the dominance of a single type in a community. |

Table 2: Research Reagent Solutions for Genomic Subtyping

| Reagent / Material | Function in Experiment | Specification / Notes |
|---|---|---|
| Target Genomic DNA | The template for PCR amplification in MLST or MLVA. | For best results, use DNA extracted from clinical/environmental samples with sufficient pathogen burden. Sample concentration and purity are critical [18]. |
| Primers (Oligonucleotides) | To amplify specific, highly variable genetic loci for sequencing or fragment analysis. | Should be designed to anneal to conserved regions flanking variable sites. Follow design criteria: 18-22 bp length, 45-60% GC content, amplicon size of 400-700 bp [18]. |
| PCR Master Mix | Enzymatic amplification of the target loci. | Must be high-fidelity to minimize errors during amplification, especially when Sanger sequencing is the next step. |
| Sanger Sequencing Kit | Determining the nucleotide sequence of the amplified MLST loci. | Required for traditional MLST. The workflow is suitable for laboratories with standard Sanger sequencing resources [18]. |
| Capillary Electrophoresis System | For fragment size analysis in MLVA protocols. | Used to separate and size the amplified VNTR fragments from an MLVA reaction, generating the profile data [19]. |
| PubMLST Database | A public repository for curating and comparing allele profiles and sequence types. | Enables standardization and global comparison of your typing data with other isolates [18]. |
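The primer criteria in the table can be checked programmatically before ordering oligonucleotides. The sketch below tests only the three criteria listed (length, GC content, amplicon size); it does not assess melting temperature, self-complementarity, or specificity, and the example sequences are arbitrary placeholders rather than validated MLST primers.

```python
def gc_content(seq):
    """Percentage of G and C bases in a primer sequence."""
    seq = seq.upper()
    return 100.0 * (seq.count("G") + seq.count("C")) / len(seq)


def check_primer_pair(forward, reverse, amplicon_length):
    """Return a list of problems; an empty list means all three criteria pass."""
    problems = []
    for name, primer in (("forward", forward), ("reverse", reverse)):
        if not 18 <= len(primer) <= 22:
            problems.append(f"{name} primer length {len(primer)} bp outside 18-22 bp")
        gc = gc_content(primer)
        if not 45.0 <= gc <= 60.0:
            problems.append(f"{name} primer GC {gc:.1f}% outside 45-60%")
    if not 400 <= amplicon_length <= 700:
        problems.append(f"amplicon {amplicon_length} bp outside 400-700 bp")
    return problems


# Placeholder sequences (20 bp, 50% GC each) and a 550 bp amplicon.
fwd = "AGCTGACCTGAAGTCCATGA"
rev = "TTGCAGGTCAACGGTATCCT"
print(check_primer_pair(fwd, rev, 550))
```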

Troubleshooting Guides and FAQs

Guide 1: Addressing Multi-Omics Data Integration Challenges

Problem: My multi-omics subtyping results are unstable and fail to capture biologically meaningful patterns.

Root Cause: This often stems from relying on a single distance metric that cannot capture the complex relationships in your molecular data. Euclidean distance alone may miss important directional patterns in feature vectors [20].

Solution: Implement a multi-metric consensus approach.

Actionable Steps:

- Calculate both Euclidean and Angular distance matrices for each omics data type [20].

- Convert distances to affinity matrices using a scaling parameter σ, typically set as the median of all pairwise Euclidean distances [20].

- Construct separate consensus matrices for Euclidean affinity, Angular affinity, and overall connectivity.

- For small datasets (<200 samples), average full affinity matrices. For larger datasets, employ stability-driven approaches to mitigate sensitivity to data size [20].
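A minimal NumPy sketch of the affinity and averaging steps. It assumes a Gaussian kernel exp(-d² / (2σ²)) (the exact kernel used by DSCC is not specified here), sets σ to the median pairwise Euclidean distance within each omics matrix, and averages the per-omics affinity matrices as suggested for small datasets. The random matrices are placeholders for real omics data with the same samples in rows.

```python
import numpy as np


def euclidean_distances(X):
    """Pairwise Euclidean distances between rows (samples) of X."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.sqrt(np.clip(d2, 0.0, None))  # clip tiny negatives from rounding


def gaussian_affinity(D):
    """Convert a distance matrix to an affinity matrix (assumed Gaussian kernel)."""
    n = D.shape[0]
    off_diagonal = D[~np.eye(n, dtype=bool)]
    sigma = np.median(off_diagonal)  # scaling parameter: median pairwise distance
    if sigma == 0:
        sigma = 1.0  # guard against degenerate data with identical samples
    return np.exp(-(D ** 2) / (2.0 * sigma ** 2))


def average_affinity(omics_matrices):
    """Average affinity matrices across omics types measured on the same samples."""
    return np.mean(
        [gaussian_affinity(euclidean_distances(X)) for X in omics_matrices], axis=0
    )


rng = np.random.default_rng(0)
mrna = rng.normal(size=(30, 200))        # placeholder: 30 samples x 200 genes
methylation = rng.normal(size=(30, 80))  # placeholder: same 30 samples x 80 genes
consensus = average_affinity([mrna, methylation])
print(consensus.shape)
```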

Validation: Compare cluster stability using internal validation metrics (silhouette width, Dunn index) across different metric combinations. Biologically validate subtypes using known pathway enrichment.

Problem: I have significant missing data across omics types, leading to substantial sample loss.

Solution: Utilize methods specifically designed for incomplete multi-omics data.

Actionable Steps:

- Implement frameworks like DSCC or NEMO that can handle missing modalities [20].

- Apply gene-level aggregation to create a consistent framework across mRNA, miRNA, DNA methylation, and protein data when possible [20].

- For metabolomics data, apply log2 transformation and replace missing values with zero after confirming this is appropriate for your data structure [20].
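A minimal sketch of this metabolomics step with NumPy, assuming missing values are encoded as NaN and that observed abundances are strictly positive (log2 is undefined at zero).

```python
import numpy as np


def preprocess_metabolomics(values):
    """log2-transform metabolite abundances, then set missing entries (NaN) to zero."""
    M = np.asarray(values, dtype=float)
    missing = np.isnan(M)
    if np.any(M[~missing] <= 0):
        raise ValueError("log2 requires positive abundances; check zeros/negatives first")
    out = np.log2(M)      # NaN entries stay NaN here
    out[missing] = 0.0    # then replace missing values with zero
    return out


print(preprocess_metabolomics([[1.0, 2.0], [np.nan, 8.0]]))
```

Because log2(1) is also 0, a zero after this step can mean either a missing value or an abundance of exactly 1; whether that ambiguity matters depends on your data, which is the kind of check the step above calls for.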

Guide 2: Improving Subtyping Discriminatory Power

Problem: My current subtyping method lacks directional awareness and fails to differentiate subtle molecular patterns.

Root Cause: Traditional magnitude-based metrics neglect angular relationships between feature vectors, which can be particularly important for capturing distinct molecular signatures [20].

Solution: Incorporate angular distance metrics to enhance pattern discrimination.

Technical Implementation:
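As a hedged illustration of directional awareness, the sketch below contrasts Euclidean distance with an angular distance, assumed here to be arccos(cosine similarity) / π so that it lies between 0 and 1. This definition is an assumption, not necessarily the one used in [20]. The three profiles are invented: B is A scaled by three, and C has a different pattern.

```python
import numpy as np


def angular_distances(X):
    """Pairwise angular distance between rows, scaled to [0, 1] (0 = same direction)."""
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    unit = X / np.where(norms == 0, 1.0, norms)  # avoid division by zero for all-zero rows
    cosine = np.clip(unit @ unit.T, -1.0, 1.0)   # keep rounding errors inside arccos domain
    return np.arccos(cosine) / np.pi


def euclidean_distances(X):
    """Pairwise Euclidean distances between rows."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=-1))


# Hypothetical profiles: A, B = 3 * A (same pattern, higher magnitude), C (different pattern).
X = np.array([[1.0, 2.0, 0.0],
              [3.0, 6.0, 0.0],
              [2.0, 0.0, 1.0]])
eu = euclidean_distances(X)
ang = angular_distances(X)
print(np.round(eu, 2))
print(np.round(ang, 2))
```

Euclidean distance places A closer to C (about 2.45) than to B (about 4.47), whereas the angular distance between A and B is essentially zero because the two profiles point in the same direction. Each metric captures something the other misses, which is the motivation for combining them.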

Expected Improvement: Studies show combining angular and Euclidean affinity captures complementary views of each omics type, significantly improving subtyping performance [20].

Problem: I need to incorporate biological knowledge but lack pathway information for non-transcriptomic data.

Solution: Map diverse molecular features to gene-level representations compatible with existing pathway databases.

Actionable Steps:

- For miRNA data: Map miRNA IDs to target genes using miRTarBase or similar databases [20].

- For DNA methylation: Map CpG sites to genes using manufacturer-provided annotations and calculate median methylation per gene.

- For protein data: Average measurements of all proteins encoded by the same gene.

- Remove genes not associated with KEGG or Reactome pathways to focus on biologically interpretable features [20].
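The mapping steps above share one pattern: collapse feature-level values to genes with a reducer (median for CpG sites, mean for proteins), then keep only pathway genes. A minimal pure-Python sketch for one sample follows; the CpG IDs, the gene name GENE_X, and the pathway gene set are hypothetical stand-ins for a real array annotation and KEGG/Reactome membership.

```python
from collections import defaultdict
from statistics import mean, median


def aggregate_to_genes(values, feature_to_genes, reducer):
    """Collapse feature-level values (CpG sites, proteins, miRNAs) to gene-level values.

    values: {feature_id: measurement} for one sample
    feature_to_genes: {feature_id: [gene, ...]} from an annotation resource
    reducer: function applied to all values mapped to the same gene
    """
    per_gene = defaultdict(list)
    for feature, value in values.items():
        for gene in feature_to_genes.get(feature, []):
            per_gene[gene].append(value)
    return {gene: reducer(vals) for gene, vals in per_gene.items()}


def keep_pathway_genes(gene_values, pathway_genes):
    """Drop genes that are not members of any pathway gene set."""
    return {g: v for g, v in gene_values.items() if g in pathway_genes}


# Hypothetical CpG annotation and beta values for one sample.
cpg_to_gene = {"cg01": ["TP53"], "cg02": ["TP53"], "cg03": ["TP53"], "cg04": ["GENE_X"]}
beta = {"cg01": 0.2, "cg02": 0.8, "cg03": 0.3, "cg04": 0.5}
methylation = aggregate_to_genes(beta, cpg_to_gene, median)
print(keep_pathway_genes(methylation, pathway_genes={"TP53"}))
```

Because each feature may map to several genes, the same function handles one-to-many miRNA-to-target mappings; which reducer suits miRNA data is left open here.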

Guide 3: Validating Clinical Relevance of Subtypes

Problem: My molecular subtypes don't correlate with clinical outcomes or treatment response.

Root Cause: Subtypes may reflect technical artifacts rather than biologically distinct groups with clinical relevance.

Solution: Integrate multiple clinical endpoints into subtyping validation.

Methodology: Implement multi-endpoint frameworks like MuTATE that simultaneously model overall survival, progression-free survival, and tumor-free survival during subtype discovery [21].

Clinical Validation Protocol:

- Use subtype information as a covariate in prognostic models to test if it improves survival prediction accuracy [20].

- In umbrella trial designs (like FUTURE for TNBC), assign different targeted therapies based on molecular subtypes and compare outcomes across arms [22].

- Evaluate if subtypes show differential response to specific drug classes in preclinical models.

Success Metrics: Look for statistically significant separation in survival curves (p < 0.05) and objective response rates that differ by ≥20% between subtypes receiving matched versus unmatched therapies [22].

Experimental Protocols for Enhanced Discriminatory Power

Protocol 1: DSCC Multi-Omics Subtyping Workflow

Objective: Identify disease subtypes from diverse molecular data using spectral clustering and community detection.

Materials: Processed multi-omics data (gene expression, miRNA, methylation, CNV, mutations, protein, metabolites)

Methodology:

Data Processing:

- Perform gene-level aggregation for all possible data types (mRNA, miRNA, methylation, protein)

- Map features to KEGG-compatible representations

- Apply log2 transformation to metabolomics data, replace missing values with zero

Network Construction:

- Calculate both Euclidean and Angular affinity matrices for each data matrix

- Set scaling parameter σ as median of pairwise Euclidean distances

- Construct three consensus matrices: Euclidean affinity, Angular affinity, connectivity

Ensemble Clustering:

- Apply both spectral clustering (captures global structure) and Louvain community detection (captures local patterns)

- Integrate results from multiple algorithms and metrics

- Validate cluster stability using bootstrapping approaches
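One common way to integrate several clusterings is an evidence-accumulation (co-association) matrix: the fraction of runs in which each pair of samples lands in the same cluster. The sketch below implements that generic technique, not necessarily the integration procedure used by DSCC; the three label vectors stand in for hypothetical spectral, Louvain, and bootstrap runs over the same four samples.

```python
import numpy as np


def co_association(partitions):
    """Fraction of partitions in which each pair of samples shares a cluster.

    partitions: list of label arrays, one per clustering run (algorithm, metric,
    or bootstrap replicate), all covering the same samples in the same order.
    Label values need not match across runs; only within-run agreement counts.
    """
    P = np.asarray(partitions)
    n = P.shape[1]
    C = np.zeros((n, n))
    for labels in P:
        C += labels[:, None] == labels[None, :]
    return C / len(P)


runs = [[0, 0, 1, 1],   # hypothetical spectral clustering labels
        [1, 1, 0, 0],   # hypothetical Louvain labels (same grouping, different IDs)
        [0, 0, 0, 1]]   # hypothetical bootstrap run
C = co_association(runs)
print(np.round(C, 2))
```

The resulting matrix can itself be clustered to obtain consensus subtypes, and intermediate values (samples 2 and 3 co-cluster in two of three runs here) flag unstable assignments. Bootstrap replicates that omit samples would also require tracking how often each pair was sampled together, which this sketch does not do.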

Validation: Compare against 13 state-of-the-art methods using 43 cancer datasets with >11,000 patients [20].

Protocol 2: Multi-Endpoint Decision Tree Optimization

Objective: Generate interpretable molecular subtypes that optimize multiple clinical endpoints.

Materials: Molecular feature matrix with associated clinical outcomes (overall survival, progression-free survival, treatment response)

Methodology:

Data Preparation:

- Curate molecular features from sequencing, proteomic, or epigenetic profiling

- Annotate with multiple clinical endpoints

- Split data into training (60%) and validation (40%) sets
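A minimal sketch of the 60/40 split, assuming a simple random split of sample indices with a fixed seed for reproducibility. With censored survival endpoints or imbalanced outcomes, a split stratified by outcome may be preferable; that is not shown here.

```python
import numpy as np


def train_validation_split(n_samples, train_fraction=0.6, seed=0):
    """Randomly split sample indices into training and validation sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train_fraction * n_samples))
    return np.sort(order[:n_train]), np.sort(order[n_train:])


train_idx, val_idx = train_validation_split(100)  # hypothetical cohort of 100 samples
print(len(train_idx), len(val_idx))
```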

MuTATE Framework Application:

- Implement multi-target decision tree algorithm that jointly models all clinical endpoints

- Compare against single-endpoint CART models

- Optimize tree depth and partitioning parameters via grid search

Clinical Interpretation:

- Analyze reassignment of cases between risk categories compared to established models

- Validate novel subtypes using external datasets when available

- Perform biomarker discovery from splitting rules in decision trees

Performance Metrics: In simulations, MuTATE showed significantly lower test error (2.97 vs. >3.0 for CART) and lower false discovery rate (5.7% for 5-target vs. 11.0% for CART) [21].

Table 1: Performance Comparison of Advanced Subtyping Methods

| Method | Key Innovation | Validation Scale | Accuracy Improvement | Clinical Utility |
|---|---|---|---|---|
| DSCC | Multi-distance metrics + ensemble clustering | 43 cancer datasets (>11,000 patients) | Superior to 13 state-of-the-art methods [20] | Improves survival prediction as covariate [20] |
| MuTATE | Multi-endpoint decision trees | 682 patients across 3 cancers | Reclassified 13-72% of cases [21] | Enhanced risk stratification accuracy [21] |
| FUTURE Trial | Subtype-guided targeted therapy | 141 metastatic TNBC patients | 29.8% objective response rate in heavily pretreated patients [22] | 4/7 arms achieved efficacy boundaries [22] |

Table 2: Troubleshooting Common Subtyping Experimental Failures

| Failure Mode | Root Cause | Diagnostic Signals | Corrective Actions |
|---|---|---|---|
| Low discriminatory power | Single distance metric limitations | Poor cluster separation, unstable assignments | Implement multi-metric consensus (Euclidean + Angular) [20] |
| Poor biological relevance | Lack of pathway context | Subtypes not enriched for known pathways | Map multi-omics features to KEGG-compatible gene representations [20] |
| Weak clinical correlation | Single-endpoint optimization | Subtypes don't predict multiple outcomes | Implement multi-endpoint frameworks like MuTATE [21] |
| Missing data bias | Exclusion of samples with incomplete omics | Reduced sample size, selection bias | Use methods that handle missing data (DSCC, NEMO) [20] |


Research Reagent Solutions

Table 3: Essential Research Reagents for Genomic Subtyping Experiments

| Reagent/Tool | Function | Application Notes |
|---|---|---|
| KEGG Pathway Database | Biological pathway reference for gene aggregation | Enables consistent multi-omics framework; focus on pathway-associated genes [20] |
| miRTarBase | miRNA-to-gene mapping resource | Critical for incorporating miRNA data into gene-level framework [20] |
| PharmGKB/CPIC Guidelines | Pharmacogenomic clinical implementation | Essential for translating subtypes to treatment recommendations [23] |
| TCGA/Public Data Portals | Validation datasets and benchmarking | Required for method comparison across >11,000 patients [20] |
| Custom Gene Panels | Targeted sequencing for validation | Balance coverage depth with cost; useful for subclonal mutation detection [24] |
| Imaging Mass Cytometry | Spatial proteomic profiling | Reveals tumor microenvironment context of molecular subtypes [24] |
| DEPICT/MAGMA Tools | Gene prioritization and pathway analysis | Supports functional interpretation of subtype-discriminating features [25] |

A Toolkit for Modern Biology: Core Genomic Subtyping Methods and Their Applications

Core Genome Multilocus Sequence Typing (cgMLST) is a high-resolution, whole-genome sequencing (WGS)-based method that characterizes bacterial isolates by indexing genetic variation across hundreds to thousands of core genes—those shared by all or nearly all isolates of a species [26]. This technique extends the principles of traditional multilocus sequence typing (MLST), which typically analyzes only six to eight housekeeping genes, by utilizing a much larger portion of the genome. This expansion offers a powerful tool for standardized genomic surveillance, providing the discriminatory power necessary for detailed epidemiological investigations while ensuring global comparability of results [27].

The primary advantage of cgMLST lies in its ability to provide a universal nomenclature for bacterial typing. Unlike traditional methods such as pulsed-field gel electrophoresis (PFGE), which produces results that can be subjective and difficult to interpret, cgMLST generates portable, unambiguous data that can be compared directly across laboratories worldwide [1] [28]. This is crucial for tracking the global spread of pathogens, investigating outbreaks, and understanding bacterial population dynamics. Furthermore, for some species cgMLST is less affected than SNP-based methods by the confounding effects of horizontal gene transfer and recombination, because it treats every allelic change at a locus as a single event, regardless of how many nucleotides differ. This makes it particularly suitable for analyzing highly recombining organisms such as Haemophilus influenzae and Moraxella catarrhalis [26] [27].
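The "single event" behavior shows up in how allele profiles are compared. Each isolate is a map from locus to allele number, and the distance is the number of loci with different allele numbers, however many nucleotides differ within a locus. This is a minimal sketch: skipping loci missing in either isolate is one common convention and an assumption here, since platforms differ in how they treat missing calls. Locus names and allele numbers are hypothetical.

```python
def allele_distance(profile_a, profile_b):
    """Count loci with different allele calls, skipping loci missing in either isolate.

    Profiles map locus name -> allele number; None marks a locus that was not called.
    Returns (differences, loci_compared).
    """
    differences = compared = 0
    for locus in profile_a.keys() & profile_b.keys():
        a, b = profile_a[locus], profile_b[locus]
        if a is None or b is None:
            continue  # missing call: locus is not compared
        compared += 1
        if a != b:
            differences += 1  # one allele change = one difference
    return differences, compared


iso1 = {"locus1": 1, "locus2": 4, "locus3": 2, "locus4": 7}
iso2 = {"locus1": 1, "locus2": 4, "locus3": 9, "locus4": None}
print(allele_distance(iso1, iso2))  # prints (1, 3)
```

Even if the new allele at locus3 arose from a recombination event that introduced many SNPs, it contributes a single difference to the distance.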

cgMLST Workflow: From Scheme Definition to Isolate Analysis

Developing and implementing a cgMLST scheme is a multi-stage process that requires careful planning and validation. The workflow can be broadly divided into two main phases: (1) the initial development and evaluation of the typing scheme itself, and (2) the application of the scheme to analyze and type new bacterial isolates. The following diagram illustrates the key stages involved in creating a stable cgMLST scheme.

Diagram Title: cgMLST Scheme Development Workflow

Defining the cgMLST Scheme

The process begins with the careful selection of a seed genome. This genome must be complete, well-annotated, and publicly accessible (e.g., from NCBI). Ideally, the seed isolate should be a type strain or another well-characterized strain available from a culture collection [29]. For example, in developing a scheme for Neisseria meningitidis, the FAM18 strain (accession NC_008767) can serve as the seed genome [29].

Next, a diverse set of penetration query genomes is added to represent the full genetic variation within the species. These genomes should span different sequence types (STs) and clonal complexes (CCs). A good starting point is to select all available finished genomes from NCBI, perform an MLST analysis, and then choose genomes that differ by ST and/or CC [29]. The example for N. meningitidis uses a list of 13 NCBI accession numbers. It is also possible to incorporate assembled sequence data from other sources, such as NCBI SRA or your own sequenced isolates, if sufficient complete genomes are not available [29].

A critical quality control step involves removing outlier genomes. Software tools can compare all query genomes against the seed genome's genes. Genomes where a significantly lower percentage (e.g., only 76% in an example with Pseudomonas aeruginosa) of the non-homologous seed genes are found should be considered taxonomic outliers and excluded from the scheme definition process [29].
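The outlier check can be sketched as a comparison against the rest of the query set. The rule below (flag genomes more than a set number of percentage points below the median) and its default of 10 points are hypothetical choices for illustration, not the criterion applied by any particular software; the genome IDs and percentages are invented.

```python
from statistics import median


def flag_outlier_genomes(percent_seed_genes_found, max_drop=10.0):
    """Return genomes whose percentage of seed genes found falls well below the median.

    percent_seed_genes_found: {genome_id: percent of non-homologous seed genes found}
    max_drop: hypothetical cutoff, in percentage points below the median
    """
    m = median(percent_seed_genes_found.values())
    return sorted(g for g, pct in percent_seed_genes_found.items() if pct < m - max_drop)


query_results = {"q1": 97.1, "q2": 96.4, "q3": 98.0, "q4": 76.0}
print(flag_outlier_genomes(query_results))  # prints ['q4']
```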

To ensure the scheme focuses on chromosomal genes, it is advisable to exclude genes from mobile genetic elements. This can be done by adding plasmid sequences from the same species to an "exclude" list. For instance, the N. meningitidis scheme development incorporated two known plasmid sequences to prevent plasmid-borne genes from being included in the cgMLST targets [29].

Finally, the calculation of the scheme is performed using specialized software. This process categorizes every gene from the seed genome into one of three groups [29]:

- cgMLST: Genes that are non-homologous, have valid start/stop codons in the seed genome, appear uniquely in all query genomes, and do not have invalid stop codons in most queries. These form the targets for typing.

- Accessory: Genes that are non-homologous but may overlap with others, not appear in all queries, or have invalid stop codons in many queries. These are not part of the core scheme but can be used to increase discriminatory power.

- Discarded: Genes that are homologous or have invalid start/stop codons in the seed genome. These are not used.
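The three-way categorization can be expressed as a small decision function. This is a simplified sketch: the cutoff standing in for "most queries" (max_invalid_fraction, default 0.1) is a hypothetical value, overlap between genes is not modeled, and scheme-development software applies its own detailed criteria. The field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class SeedGene:
    name: str
    homologous: bool                # has a homolog elsewhere in the seed genome
    valid_start_stop_in_seed: bool  # valid start and stop codons in the seed
    queries_with_unique_hit: int    # query genomes containing exactly one copy
    queries_with_invalid_stop: int  # query genomes where the hit has an invalid stop
    n_queries: int


def categorize(gene, max_invalid_fraction=0.1):
    """Assign a seed gene to 'cgMLST', 'accessory' or 'discarded'."""
    if gene.homologous or not gene.valid_start_stop_in_seed:
        return "discarded"
    in_all_queries = gene.queries_with_unique_hit == gene.n_queries
    invalid_fraction = gene.queries_with_invalid_stop / gene.n_queries
    if in_all_queries and invalid_fraction <= max_invalid_fraction:
        return "cgMLST"
    return "accessory"


print(categorize(SeedGene("geneA", False, True, 13, 0, 13)))  # prints cgMLST
```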

Evaluating and Validating the cgMLST Scheme

Once a scheme is defined, it must be rigorously evaluated. A pragmatic first test is to re-sequence the seed strain using a modern NGS platform (e.g., Illumina) and analyze the data with the new scheme. The expectation is that 98.5% or more of the cgMLST targets should be found and pass all automated checks. If not, the reasons for failure must be investigated, and problematic targets might be moved to the accessory genome [29].

The most comprehensive evaluation involves testing the scheme against a well-characterized and diverse collection of isolates that represents the entire population genetic background of the species. The scheme is considered stable if most genomes in this collection have at least 95% good cgMLST targets. If some genomes fall below this threshold, the scheme definition process should be iterated by adding more representative isolates as query genomes [29].
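The two validation checks described above (at least 98.5% of targets in the re-sequenced seed strain, and at least 95% good targets per genome in the evaluation collection) can be summarized as in the following sketch. Whether the passing fraction counts as "most genomes" remains a judgment call; the target counts and isolate IDs are invented.

```python
def evaluate_scheme(total_targets, seed_good, collection_good,
                    seed_min_pct=98.5, genome_min_pct=95.0):
    """Summarize seed re-sequencing and collection-wide scheme checks.

    total_targets: number of cgMLST targets in the scheme
    seed_good: targets found and passing checks in the re-sequenced seed strain
    collection_good: {genome_id: targets found and passing checks}
    """
    def pct(n):
        return 100.0 * n / total_targets

    below = sorted(g for g, n in collection_good.items() if pct(n) < genome_min_pct)
    return {
        "seed_passes": pct(seed_good) >= seed_min_pct,
        "genomes_below_threshold": below,
        "fraction_passing": 1.0 - len(below) / len(collection_good),
    }


report = evaluate_scheme(1000, 990, {"iso1": 980, "iso2": 962, "iso3": 931})
print(report)
```

Genomes listed as below the threshold are candidates for investigation, and, if they represent under-sampled lineages, for inclusion as additional query genomes in the next iteration.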

After successful validation, the scheme can be finalized and implemented on public platforms like PubMLST to ensure global accessibility [26] [27]. For example, the cgMLST scheme for Haemophilus influenzae was developed using a dataset of over 2,200 genomes and subsequently implemented in PubMLST, providing a standardized tool for public health authorities worldwide [26].

Performance Comparison of Bacterial Typing Methods

Different typing methods offer varying levels of resolution, which must be matched to the specific epidemiological question. The table below summarizes the key advantages and disadvantages of the most common methods, highlighting the position of cgMLST.

Table 1: Comparison of Bacterial Subtyping Methods

| Subtyping Method | Advantages | Disadvantages |
|---|---|---|
| Whole Genome Sequencing (WGS) | Can be tailored for low or high discriminatory power; provides phylogenetically relevant data; high reproducibility if standardized; high typability; broadly applicable to any species | High cost (though decreasing); requires significant technical and bioinformatics expertise; long turn-around time in some settings; complex data interpretation [1] |
| Core Genome MLST (cgMLST) | High discriminatory power and reproducibility; standardized nomenclature for global surveillance; mitigates effects of recombination; easier data interpretation and comparison than WGS SNP analysis | Requires a pre-defined, validated scheme; dependent on quality of genome assemblies; may miss recent outbreaks in highly clonal populations without accessory genome |
| Pulsed-Field Gel Electrophoresis (PFGE) | Historical gold standard for outbreak investigations; high reproducibility when standardized; high typability; relatively inexpensive | Labor-intensive; results can be subjective and difficult to interpret; does not produce phylogenetically relevant information [1] |
| Multilocus Sequence Typing (MLST) | Used for phylogenetic studies; high repeatability and reproducibility; high typability; portable data | Low to moderate discriminatory power (little use in outbreaks); moderately expensive and labor-intensive; requires adaptation/validation for each species [1] |

The value of genome-wide resolution is evident in a study of Clostridium difficile, where WGS (using an SNV approach) revealed that among patient pairs whose isolates matched by MLST and who were on the same hospital ward, 28% of the pairs were actually highly genetically distinct (>10 SNVs difference). This demonstrates that MLST, which queries only a small fraction of the genome, can group together genetically unrelated isolates, whereas genome-wide methods such as cgMLST provide the resolution needed for accurate transmission mapping [1].

cgMLST in Action: Key Applications and Experimental Findings

Case Study: Resolving the Population Structure of Moraxella catarrhalis

A recent study developed a cgMLST scheme comprising 1,319 core genes to investigate the population structure of nearly 2,000 M. catarrhalis genomes [27]. The scheme confirmed the existence of two divergent lineages, seroresistant (SR) and serosensitive (SS), with distinct evolutionary paths. The SR genomes were more conserved, while the SS genomes showed greater genetic variability. The cgMLST data, combined with a Life Identification Number (LIN) code system, provided a robust framework for characterizing lineages and identifying variations in virulence genes and antimicrobial resistance elements, such as the bro β-lactamase, which was more common in SR lineages [27].

Case Study: Tracking OXA-48-Producing Klebsiella pneumoniae

A study of OXA-48-producing K. pneumoniae compared cgMLST using two different schemes (SeqSphere+ with 2,365 genes and Institut Pasteur's BIGSdb-Kp with 634 genes) with whole-genome MLST (wgMLST) and core-genome SNP (cgSNP) analysis [28]. For the predominant sequence type, ST405, cgMLST using SeqSphere+ found 0–10 allele differences between isolates, while wgMLST found 0–14 differences. The cgSNP analysis showed 6–29 SNPs even in isolates with identical cgMLST profiles. This highlights that while different high-resolution methods may yield slightly different results, they generally lead to the same epidemiological conclusions. The study emphasized that threshold parameters for defining relatedness must be applied cautiously and in conjunction with clinical data [28].

Table 2: Key Research Reagents and Resources for cgMLST

| Item | Function in cgMLST Analysis |
|---|---|
| Seed Genome | A complete, annotated reference genome from a well-characterized strain (e.g., type strain) that serves as the foundation for defining the core gene set. [29] |
| Penetration Query Genomes | A diverse panel of genomes spanning the genetic breadth of the species, used to identify a stable set of core genes present in most isolates. [29] |
| BIGSdb (PubMLST Platform) | A widely used open-source platform for hosting and curating cgMLST schemes and allele databases, enabling standardized global analysis and nomenclature. [26] [30] |
| Ridom SeqSphere+ Software | A commercial software application that facilitates the entire cgMLST workflow, from scheme development and data analysis to visualization and cluster detection. [29] [28] |
| chewBBACA | An open-source bioinformatics tool for pangenome analysis and cgMLST scheme development, used to identify core genes and call alleles. [26] |
| Pathogenwatch | A free, web-based platform that uses cgMLST schemes from PubMLST and other sources to rapidly genotype uploaded genomes and identify close relatives. [31] [32] |

Frequently Asked Questions (FAQs) and Troubleshooting

Q1: My newly developed cgMLST scheme fails to call a large number of targets in my evaluation dataset. What could be wrong? A1: A high rate of failure to call targets often indicates an unstable scheme. This typically occurs when the initial set of penetration query genomes was not diverse enough to capture the full genetic variation of the species. The solution is an iterative process: acquire additional representative isolates, produce high-quality draft genomes, and add them as new query genomes to re-calculate and refine the cgMLST scheme [29].

Q2: How do I determine the threshold for considering two isolates as part of the same outbreak cluster using cgMLST? A2: There is no universal threshold. The number of allele differences that defines a cluster is species-specific and context-dependent. Thresholds should be established based on retrospective analysis of well-defined outbreaks and population genetic studies of the species. For example, a study on K. pneumoniae suggested a genetic distance threshold of 0.0035 for cgMLST to discriminate between related and unrelated isolates. It is critical to use such thresholds in combination with epidemiological data [28].
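Once a species-specific threshold has been chosen, isolates are often grouped by single linkage: two isolates share a cluster if a chain of pairs within the threshold connects them. The sketch below assumes distances expressed as allele differences and uses an arbitrary threshold of 10; it is a generic illustration, not the method of [28]. The same function works with normalized distances, provided the threshold and the pairwise values use the same scale.

```python
def single_linkage_clusters(pairwise_differences, threshold):
    """Group isolates by single linkage at an allele-difference threshold.

    pairwise_differences: {(isolate_a, isolate_b): allele differences} for all pairs
    threshold: hypothetical cutoff; pairs with differences <= threshold are linked
    """
    parent = {}

    def find(x):
        # Union-find root lookup with path halving.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for (a, b), d in pairwise_differences.items():
        root_a, root_b = find(a), find(b)
        if d <= threshold:
            parent[root_a] = root_b  # merge the two clusters

    clusters = {}
    for isolate in list(parent):
        clusters.setdefault(find(isolate), []).append(isolate)
    return sorted(sorted(members) for members in clusters.values())


distances = {("A", "B"): 3, ("B", "C"): 5, ("A", "C"): 8,
             ("A", "D"): 40, ("B", "D"): 38, ("C", "D"): 35}
print(single_linkage_clusters(distances, threshold=10))  # prints [['A', 'B', 'C'], ['D']]
```

Single linkage can chain isolates into large clusters, which is another reason to interpret any threshold alongside epidemiological data.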

Q3: What is the difference between a "stable" and an "ad hoc" cgMLST scheme? A3: A stable scheme provides a public, expandable nomenclature and is laborious to define, evaluate, and calibrate. Once approved, it is available for immediate use by the global community. In contrast, an ad hoc scheme provides a local nomenclature and can be established quickly by individual users for specific, limited analyses [29].

Q4: How does cgMLST handle the issue of homologous genes and paralogs? A4: The presence of paralogs (duplicated genes) can mislead genetic relationships. During scheme development, software pipelines (e.g., chewBBACA, Panaroo) include steps to identify and filter out paralogous loci. Furthermore, a robust scheme should be developed and validated using a large dataset (e.g., >500 genomes), which has been identified as a threshold for stable paralogous locus detection [26].

Q5: My assembly quality is good, but the cgMLST analysis flags many missing genes. What should I check? A5: First, verify that the assembly pipeline has not introduced systematic errors. Differences in assembly methods can lead to fragmented genes or missing regions, particularly in repetitive areas. Check the "Stats" table in your analysis platform for quality metrics. If quality is confirmed, investigate whether the missing genes belong to a known difficult-to-assemble gene family (e.g., the PE/PPE gene family in M. tuberculosis). If problems are repeatedly observed for certain targets, they might need to be manually removed from the core scheme and added to the accessory genome [29] [31].

Frequently Asked Questions (FAQs)

1. What is the primary advantage of wgMLST over traditional molecular typing methods?

wgMLST provides significantly higher discriminatory power compared to traditional methods like pulsed-field gel electrophoresis (PFGE) or conventional multilocus sequence typing (MLST). While standard MLST targets only 7-8 housekeeping genes, wgMLST extends this to thousands of core and accessory genes across the entire genome, enabling detection of even minor genetic variations between closely related isolates. This enhanced resolution is particularly valuable for precise outbreak investigations and transmission tracking [33] [34].

2. How does wgMLST differ from cgMLST?

Whole genome MLST (wgMLST) analyzes both the core genome (genes present in all isolates of a species) and the accessory genome (genes variably present among isolates). In contrast, core genome MLST (cgMLST) focuses only on the core genome. The inclusion of accessory genes in wgMLST provides additional discriminatory power, especially for distinguishing closely related bacterial strains that may have acquired or lost specific genetic elements [35] [36].

3. What are typical allele difference thresholds for distinguishing outbreak-related isolates?

The acceptable number of allele differences for considering isolates as part of the same outbreak varies by bacterial species. For Pseudomonas aeruginosa, epidemiologically linked isolates typically show 0-13 allele differences in wgMLST analysis. However, these thresholds should be established for each specific pathogen and validated with epidemiological data [36].

4. What software tools are available for wgMLST analysis?

Several bioinformatics tools support wgMLST analysis, including:

- chewBBACA: For schema creation and allele calling [37]

- BioNumerics: Provides integrated wgMLST and cgMLST schemes [36]

- EnteroBase: Enables accessory genome analysis [35]

- pymlst: A Python-based workflow for wgMLST analysis [38]

5. How should I handle paralogous genes in wgMLST analysis?

Paralogous genes (homologous sequences within the same genome resulting from gene duplication) should be identified and removed from your analysis as they can cause uncertainty in allele assignment. The chewBBACA pipeline includes a paralog detection step that outputs a list of potentially paralogous loci, which should be excluded from the final schema [37].
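Once a list of paralogous loci is available, excluding them amounts to dropping those loci from every profile. The sketch below assumes a simple, hypothetical one-locus-per-line list and the same hypothetical in-memory profile format used elsewhere in this section. chewBBACA's own paralog report may be tabular, so parse it accordingly.

```python
from typing import Dict, Optional, Set

Profiles = Dict[str, Dict[str, Optional[int]]]


def read_locus_list(text: str) -> Set[str]:
    """Parse one locus ID per line; blank lines and '#' comments are ignored."""
    loci = set()
    for line in text.splitlines():
        entry = line.strip()
        if entry and not entry.startswith("#"):
            loci.add(entry)
    return loci


def drop_loci(profiles: Profiles, exclude: Set[str]) -> Profiles:
    """Return a copy of the profiles without the excluded loci."""
    return {
        isolate: {locus: allele for locus, allele in calls.items() if locus not in exclude}
        for isolate, calls in profiles.items()
    }


paralogs = read_locus_list("# flagged paralogs\nlocus_0007\n\nlocus_0042\n")
profiles = {"iso1": {"locus_0001": 1, "locus_0007": 2, "locus_0042": 1}}
print(drop_loci(profiles, paralogs))  # {'iso1': {'locus_0001': 1}}
```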

Troubleshooting Guides

Issue 1: Low Number of Loci in cgMLST Schema

Problem: After running ExtractCgMLST, the number of loci in your core genome is unexpectedly low.

Solutions:

- Check genome assembly quality using metrics like N50 value, number of contigs, and assembly size

- Apply quality filters: exclude genomes with >150 contigs, abnormal genome sizes, or >5% missing loci from the cgMLST

- Recompute cgMLST after excluding low-quality assemblies [37]

Table: Recommended Genome Assembly Quality Thresholds

| Metric | Threshold Value | Rationale |
|---|---|---|
| Number of Contigs | <150 | Ensures sufficient assembly continuity |
| Genome Size | Within expected species range | Identifies anomalous assemblies |
| N50 Value | As high as possible (varies by species) | Indicator of assembly fragmentation |
| Missing Loci | <5% of cgMLST | Ensures adequate gene detection |
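The thresholds in the table can be applied programmatically before recomputing the cgMLST. A minimal sketch, assuming contig lengths and the fraction of missing cgMLST loci are already known for each assembly; the genome-size range used here is a toy value, since the real range is species-specific.

```python
from dataclasses import dataclass
from typing import List, Tuple


def n50(contig_lengths: List[int]) -> int:
    """Length of the contig at which the cumulative length (longest first) reaches half the assembly."""
    total = sum(contig_lengths)
    running = 0
    for length in sorted(contig_lengths, reverse=True):
        running += length
        if 2 * running >= total:
            return length
    return 0  # empty assembly


@dataclass
class AssemblyQC:
    name: str
    contig_lengths: List[int]
    missing_loci_fraction: float  # fraction of cgMLST loci without an allele call


def passes_qc(
    assembly: AssemblyQC,
    size_range: Tuple[int, int],
    max_contigs: int = 150,
    max_missing: float = 0.05,
) -> bool:
    """Apply the contig-count, genome-size and missing-loci thresholds from the table."""
    size = sum(assembly.contig_lengths)
    return (
        len(assembly.contig_lengths) < max_contigs
        and size_range[0] <= size <= size_range[1]
        and assembly.missing_loci_fraction < max_missing
    )


good = AssemblyQC("good", [400, 300, 200, 100], missing_loci_fraction=0.01)
fragmented = AssemblyQC("fragmented", [10] * 200, missing_loci_fraction=0.01)
print(n50(good.contig_lengths))                        # 300
print(passes_qc(good, size_range=(900, 1100)))         # True
print(passes_qc(fragmented, size_range=(900, 1100)))   # False
```

N50 is reported rather than thresholded here because the table gives no fixed cutoff for it; a species-specific minimum could be added as a fourth condition.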

Issue 2: Discrepancies Between wgMLST and SNP-Based Phylogenies

Problem: Your wgMLST analysis produces clustering results that conflict with SNP-based phylogenies.

Solutions:

- Investigate potential homologous recombination events, which can affect tree topology

- For highly recombinant species like Pseudomonas aeruginosa, consider using cgMLST instead of wgMLST

- Exclude identified recombinant regions from your analysis [36]

Issue 3: Poor Schema Creation or Allele Calling Performance

Problem: chewBBACA schema creation or allele calling fails or produces suboptimal results.

Solutions:

- Ensure you're using the appropriate Prodigal training file for your species

- Verify that input genomes meet quality requirements

- For allele calling, use the correct BLAST Score Ratio (BSR) value (default is 0.6)

- Check that the schema seed and input files are properly formatted [37]
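The BSR compares how well a candidate sequence aligns to a locus representative relative to how well the representative aligns to itself. The arithmetic is sketched below; the bit scores are assumed to come from a separate BLAST search (not shown), and this sketch treats a BSR equal to the threshold as a match, so check the tool's documentation for its exact boundary handling.

```python
def blast_score_ratio(query_bitscore: float, self_bitscore: float) -> float:
    """BSR = bit score of query vs. locus representative / bit score of representative vs. itself."""
    if self_bitscore <= 0:
        raise ValueError("self-alignment bit score must be positive")
    return query_bitscore / self_bitscore


def matches_locus(query_bitscore: float, self_bitscore: float, bsr_threshold: float = 0.6) -> bool:
    """Treat the query as an allele of the locus when its BSR reaches the threshold."""
    return blast_score_ratio(query_bitscore, self_bitscore) >= bsr_threshold


print(matches_locus(450.0, 600.0))  # True  (BSR = 0.75)
print(matches_locus(300.0, 600.0))  # False (BSR = 0.50)
```

Raising the threshold makes allele assignment stricter (fewer divergent sequences accepted); lowering it risks merging distinct but homologous loci.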

Experimental Protocols

Protocol 1: Creating a wgMLST Schema with chewBBACA

Purpose: Develop a novel wgMLST schema for a bacterial species.

Materials:

- Representative genome assemblies (complete genomes preferred)

- Species-specific Prodigal training file

- Computational resources (minimum 6 CPU cores recommended)

Methodology:

- Install chewBBACA and download necessary datasets

- Run schema creation command:

- Perform allele calling on the same genomes:

- Identify and remove paralogous loci:

- Determine core genome using ExtractCgMLST module with desired threshold (e.g., 95%) [37]
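The core-genome step selects loci that received an allele call in at least a given fraction of genomes. The sketch below illustrates that idea on a hypothetical in-memory profile format; it is a conceptual illustration, not ExtractCgMLST's implementation, which works on chewBBACA's allele-call output and its own missing-data classes.

```python
from typing import Dict, List, Optional

Profiles = Dict[str, Dict[str, Optional[int]]]


def core_loci(profiles: Profiles, loci: List[str], threshold: float = 0.95) -> List[str]:
    """Return loci with an allele call in at least `threshold` of the genomes."""
    n_genomes = len(profiles)
    core = []
    for locus in loci:
        present = sum(1 for calls in profiles.values() if calls.get(locus) is not None)
        if present / n_genomes >= threshold:
            core.append(locus)
    return core


# 20 toy genomes: locus A is always called, B is missing once, C twice.
profiles = {
    f"g{i}": {"A": 1, "B": None if i == 0 else 2, "C": None if i < 2 else 3}
    for i in range(20)
}
print(core_loci(profiles, ["A", "B", "C"]))  # ['A', 'B']
```

Because each low-quality genome can push borderline loci below the threshold, the core set shrinks as poor assemblies are added, which matches the effect of quality control on schema size reported in this section.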

Protocol 2: Accessory Genome Analysis in EnteroBase

Purpose: Identify and visualize accessory genome elements across a set of bacterial isolates.

Materials:

- Assembled genomes with pre-called wgMLST loci

- EnteroBase account with appropriate permissions

Methodology:

- Navigate to "Tasks > Accessory Genome" in EnteroBase

- Click "View Accessory Genome" and select your workspace/tree

- Analyze the visualization interface, which has three main sections:

  - Phylogenetic tree (left panel)

  - Presence/absence heatmap of accessory loci (middle panel)

  - Strain labels (right panel)

- Use controls to zoom, scroll, and explore specific loci

- Click on loci of interest to view detailed information in genome browser

- Export data as allele matrix or create custom sub-schemes for specific research questions [35]
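An exported allele matrix can also be summarized offline. The sketch below rebuilds a presence/absence matrix and lists accessory loci (called in some isolates but below a core threshold). It assumes a hypothetical in-memory profile format and a 95% core cutoff chosen for illustration; it is not how EnteroBase computes its view.

```python
from typing import Dict, List, Optional

Profiles = Dict[str, Dict[str, Optional[int]]]


def presence_absence(profiles: Profiles, loci: List[str]) -> Dict[str, List[int]]:
    """1/0 matrix (isolate -> row over loci): 1 if the locus has an allele call."""
    return {
        isolate: [0 if calls.get(locus) is None else 1 for locus in loci]
        for isolate, calls in profiles.items()
    }


def accessory_loci(profiles: Profiles, loci: List[str], core_threshold: float = 0.95) -> List[str]:
    """Loci present in at least one isolate but below the core threshold."""
    n = len(profiles)
    result = []
    for locus in loci:
        fraction = sum(calls.get(locus) is not None for calls in profiles.values()) / n
        if 0 < fraction < core_threshold:
            result.append(locus)
    return result


profiles = {
    "s1": {"core_1": 1, "plasmid_x": 3, "phage_y": None},
    "s2": {"core_1": 1, "plasmid_x": None, "phage_y": None},
    "s3": {"core_1": 2, "plasmid_x": 3, "phage_y": None},
    "s4": {"core_1": 1, "plasmid_x": None, "phage_y": None},
}
loci = ["core_1", "plasmid_x", "phage_y"]
print(accessory_loci(profiles, loci))          # ['plasmid_x']
print(presence_absence(profiles, loci)["s1"])  # [1, 1, 0]
```

Sorting the rows of this matrix by the order of leaves in a phylogeny gives the same kind of tree-plus-heatmap layout described in the steps above.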

Table: Comparison of Typing Methods for Pseudomonas aeruginosa Outbreak Investigation [36]

| Typing Method | Range for Epidemiologically Linked Isolates | Coefficient of Determination vs. SNP Distances (R²) | Discriminatory Power |
|---|---|---|---|
| wgMLST | 0-13 allele differences | 0.78-0.99 | High |
| cgMLST | Not specified | 0.92-0.99 | High |
| SNP calling | 0-26 SNPs | Reference method | Highest |
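Agreement between an allele-based method and SNP calling is typically assessed by pairing the distances each method assigns to the same isolate pairs. The sketch below computes R² as the squared Pearson correlation, which equals the coefficient of determination of a simple least-squares line; the cited study's exact regression setup may differ, and the distances are toy values.

```python
from typing import Sequence


def r_squared(x: Sequence[float], y: Sequence[float]) -> float:
    """Squared Pearson correlation, i.e. R^2 of a least-squares line y ~ a + b*x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return (sxy * sxy) / (sxx * syy)  # undefined if either input is constant


# Toy pairwise distances for the same isolate pairs under two methods.
allele_diffs = [0, 1, 2, 3]
snp_diffs = [0, 2, 1, 3]
print(r_squared(allele_diffs, snp_diffs))  # 0.64
```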

Table: Effect of Quality Control on cgMLST Schema Size [37]

| Analysis Scenario | Number of Genomes | cgMLST Loci (95% threshold) |
|---|---|---|
| Initial 32 complete genomes | 32 | 1,271 |
| All 712 assemblies (no QC) | 712 | 1,194 |
| After quality filtering | 645 | 1,248 |

Workflow Visualization

Figure: wgMLST Analysis Workflow

Figure: Core vs. Accessory Genome Analysis

Research Reagent Solutions

Table: Essential Materials for wgMLST Analysis

| Reagent/Resource | Function/Application | Example Sources |
|---|---|---|
| Prodigal Training Files | Gene prediction parameters optimized for specific species | chewBBACA included files [37] |
| wgMLST Schemes | Predefined locus sets for specific genera/species | BioNumerics, EnteroBase [35] [36] |
| Assembly and Quality Control Tools | Assemble reads and assess genome assembly quality before analysis | CLC Genomics Workbench, SPAdes [33] [39] |
| Reference Genomes | Basis for gene presence/absence calls and schema creation | NCBI RefSeq, GenBank [33] [39] |
| Allele Calling Algorithms | Identify known and novel alleles in sequenced genomes | chewBBACA, BioNumerics [37] [36] |

Whole-genome sequencing (WGS) has revolutionized microbial infectious disease surveillance by providing nucleotide-level resolution for investigating outbreaks. Single nucleotide variant (SNV)-based phylogenomic methods have emerged as a powerful approach for classifying microbial samples, offering superior discriminatory power compared to traditional subtyping methods like pulsed-field gel electrophoresis (PFGE) or multilocus sequence typing (MLST) [40] [1]. High-quality core genome SNV (hqSNV) analysis represents a refined methodology that focuses on identifying high-quality variants present in the core genome across a population of isolates, providing a robust foundation for phylogenetic inference. This technical support document details the implementation of hqSNV analysis using the SNVPhyl pipeline, a bioinformatics tool specifically designed for identifying high-quality SNVs and constructing whole-genome phylogenies within the user-friendly Galaxy framework [40].

Framed within broader research to improve discriminatory power in genomic subtyping, hqSNV analysis addresses a critical need in public health microbiology. While traditional MLST methods might classify distantly related isolates as the same sequence type, studies have demonstrated that WGS can reveal significant genetic divergence (e.g., >10 SNVs) within these same MLST-matched pairs, highlighting previously undetected transmission chains and diverse infection sources [1]. The SNVPhyl pipeline operationalizes this enhanced discriminatory capability through an integrated, validated workflow that combines reference mapping, sophisticated variant filtering, and phylogeny construction, making high-resolution outbreak investigation accessible to researchers and public health laboratories [40].
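The SNV counts used in such comparisons are pairwise differences between aligned SNV sequences, like the alignment that hqSNV pipelines emit before tree building. A minimal sketch, assuming sequences are already aligned to equal length and that "N" and "-" mark positions to ignore; the sequences are toy data.

```python
from itertools import combinations
from typing import Dict, Tuple


def snv_distance(seq_a: str, seq_b: str, ignore: str = "N-") -> int:
    """Count aligned positions where the bases differ, skipping ambiguous/gap characters."""
    if len(seq_a) != len(seq_b):
        raise ValueError("sequences must be aligned to the same length")
    return sum(
        1
        for a, b in zip(seq_a.upper(), seq_b.upper())
        if a != b and a not in ignore and b not in ignore
    )


def pairwise_snv_distances(alignment: Dict[str, str]) -> Dict[Tuple[str, str], int]:
    """Distance for every unordered pair of sequences, keyed by sorted names."""
    return {
        (a, b): snv_distance(alignment[a], alignment[b])
        for a, b in combinations(sorted(alignment), 2)
    }


alignment = {
    "ref": "ACGTACGTAC",
    "iso1": "ACGTACGTAC",
    "iso2": "ACGAACGTNC",
    "iso3": "TCGAACCTAG",
}
for pair, d in pairwise_snv_distances(alignment).items():
    print(pair, d)
```

Pairs that share an MLST sequence type but exceed a chosen SNV cutoff (for example, the >10 SNVs mentioned above) are the ones that WGS would separate.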

Pipeline Architecture and Key Stages

The SNVPhyl pipeline integrates both pre-existing and custom-developed bioinformatics tools into a cohesive workflow within the Galaxy platform [40]. This integration provides a scalable environment that can be deployed on everything from a local server to a high-performance computing cluster. The workflow proceeds through several critical stages:

- Data Preparation and Quality Control: Input data, consisting of sequence reads and a reference genome, are prepared and validated.

- Sequence Mapping: Reads are mapped to the reference genome using the SMALT aligner.

- Variant Calling: Candidate variants are identified with FreeBayes and verified against calls made in parallel with SAMtools mpileup/BCFtools.

- Variant Filtering: A multi-stage filtering process removes low-quality variants, including those in repetitive regions, recombinant regions, or positions with insufficient coverage.

- Phylogenetic Tree Construction: A maximum-likelihood phylogeny is generated from the filtered SNV alignment using PhyML.

The entire workflow is designed with quality assurance in mind, generating extensive diagnostic outputs that allow researchers to verify each analysis step and troubleshoot potential issues [40] [41].

Workflow Visualization

Figure: Logical flow and key stages of the SNVPhyl pipeline.

Essential Research Reagents and Computational Tools

Successful implementation of hqSNV analysis requires specific computational tools and resources. The following table details the essential components of the "research reagent kit" for SNVPhyl-based phylogenomics:

Table 1: Essential Research Reagents and Computational Tools for hqSNV Analysis

| Item Name | Type/Format | Primary Function in Workflow |
|---|---|---|
| Whole-Genome Sequence Reads [42] | FASTQ format (fastqsanger) | Input data containing sequencing reads from microbial isolates for analysis. |
| Reference Genome [42] [43] | FASTA format | Reference sequence for read mapping and coordinate-based variant identification. |
| Invalid Positions Masking File [42] | BED-like format (tab-delimited) | Optional file to exclude problematic regions (e.g., phage, plasmids) from analysis. |
| SNVPhyl Pipeline [40] [42] | Galaxy Workflow / Docker Image | Core bioinformatics pipeline for hqSNV discovery and phylogeny construction. |
| PHASTER [43] | Web Service / Tool | Used for pre-analysis to identify phage regions in the reference genome for masking. |

Critical Parameters for High-Quality SNV Calling

The discriminatory power of SNVPhyl analysis depends on appropriate parameter settings. These parameters control the stringency for variant calling and filtering, directly impacting the quality and epidemiological relevance of the resulting phylogeny.

Table 2: Key SNVPhyl Parameters for Optimizing hqSNV Detection

| Parameter | Default/Recommended Value | Impact on Analysis |
|---|---|---|
| min_coverage [42] [43] | 10-15× | Lower values may admit false-positive variants; higher values may exclude valid variants in low-coverage regions. |
| min_mean_mapping [42] | 30 | Minimum mean mapping quality; ensures only reads mapping with high confidence to unique genomic regions are considered. |
| relative_snv_abundance (also snv_abundance_ratio, alternative_allele_proportion) [42] [43] | 0.75 | Requires at least 75% of reads at a position to support the variant, reducing false positives; critical for mixed infections. |
| min_percent_coverage [44] | Varies (e.g., 90) | Minimum percentage of the reference genome that must meet min_coverage for a sample to be included. |
| Density Threshold & Window Size [44] | Varies (e.g., 5 SNVs in a 100 bp window) | Identifies and rejects hypervariable regions indicative of recombination or horizontal gene transfer. |
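The per-position and density filters in the table can be illustrated on toy data. The sketch below is not SNVPhyl's code (which operates on variant-caller output); the PositionCall record is a hypothetical structure, the coverage default uses the lower bound of the 10-15× range, and the density rule (flag every SNV in any window of window_size bp containing at least density_threshold SNVs) is one plausible reading of the parameter pair, using the example values from the table.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass
class PositionCall:  # hypothetical per-position summary
    pos: int           # 1-based reference coordinate
    depth: int         # reads covering the position
    mean_mapq: float   # mean mapping quality of those reads
    alt_reads: int     # reads supporting the alternative base


def passes_position_filters(call: PositionCall, min_coverage: int = 10,
                            min_mean_mapping: float = 30,
                            min_alt_fraction: float = 0.75) -> bool:
    """Coverage, mapping-quality and allele-proportion checks for one position."""
    if call.depth < min_coverage or call.mean_mapq < min_mean_mapping:
        return False
    return call.alt_reads / call.depth >= min_alt_fraction


def dense_positions(positions: Iterable[int], window_size: int = 100,
                    density_threshold: int = 5) -> Set[int]:
    """SNV positions inside any window_size-bp window holding >= density_threshold SNVs."""
    pos = sorted(set(positions))
    flagged: Set[int] = set()
    j = 0
    for i in range(len(pos)):
        j = max(j, i)
        # Extend j to the last SNV within window_size bp of pos[i].
        while j + 1 < len(pos) and pos[j + 1] - pos[i] < window_size:
            j += 1
        if j - i + 1 >= density_threshold:
            flagged.update(pos[i:j + 1])
    return flagged


calls = [PositionCall(1000, 25, 45.0, 24), PositionCall(2000, 8, 50.0, 8),
         PositionCall(3000, 40, 55.0, 20), PositionCall(4000, 30, 20.0, 30)]
print([c.pos for c in calls if passes_position_filters(c)])  # [1000]
print(sorted(dense_positions([10, 20, 30, 40, 50, 500, 900])))  # [10, 20, 30, 40, 50]
```

In this toy run, position 2000 fails on depth, 3000 on allele proportion (50%), and 4000 on mapping quality, while the cluster of five SNVs within 50 bp is flagged as a likely recombinant or hypervariable region.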

Frequently Asked Questions (FAQs) and Troubleshooting Guides

Common Error Messages and Resolutions

Error: "No valid phylip alignment" / SNV Alignment file is empty [42]

- Explanation: This indicates that no valid SNVs passed all filtering criteria. The most common cause is that one or more samples failed the mapping or coverage requirements.

- Solution:

  - Check the filterStats.txt output file to see how many SNVs were filtered at each stage.

  - Examine the mappingQuality.txt file to verify that all samples met the minimum coverage and percent coverage thresholds. A sample with very low coverage or poor mapping will cause positions to be filtered out as "filtered-coverage" [41].

  - Ensure the reference genome is appropriately related to your samples. A distantly related reference will yield too few mapped reads for reliable variant calling.
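To find which sample is dragging positions into "filtered-coverage", you can compute, per sample, the percentage of reference positions that reach min_coverage and compare it with min_percent_coverage. A minimal sketch, assuming per-position depth values are already available for each sample (for example, parsed from `samtools depth -a` output); the sample names, depths and thresholds below are illustrative.

```python
from typing import Dict, List


def percent_covered(depths: List[int], min_coverage: int = 10) -> float:
    """Percentage of reference positions with depth >= min_coverage."""
    if not depths:
        return 0.0
    return 100.0 * sum(1 for d in depths if d >= min_coverage) / len(depths)


def failing_samples(sample_depths: Dict[str, List[int]], min_coverage: int = 10,
                    min_percent_coverage: float = 90.0) -> List[str]:
    """Samples whose covered fraction falls below min_percent_coverage."""
    return sorted(
        sample for sample, depths in sample_depths.items()
        if percent_covered(depths, min_coverage) < min_percent_coverage
    )


# Toy 10-position "reference" for three samples.
sample_depths = {
    "sampleA": [30] * 10,           # 100% covered
    "sampleB": [30] * 8 + [2] * 2,  # 80% covered
    "sampleC": [12] * 9 + [0],      # 90% covered, exactly at the cutoff
}
print(failing_samples(sample_depths))  # ['sampleB']
```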

Error: "Tool [...] missing" in Galaxy [45]

- Explanation: The Galaxy instance is missing a specific tool or tool version required by the SNVPhyl workflow.

- Solution:

  - A system administrator must log into Galaxy and install the missing tool from the Galaxy ToolShed.

  - Ensure the tool version specified in the error message matches the version required by the SNVPhyl workflow.

Error: "Timeout while uploading" to Galaxy [45]

- Explanation: File transfer from the analysis client (e.g., IRIDA) to the Galaxy instance took longer than the configured time limit.

- Solution: A system administrator must increase the timeout limit (e.g., galaxy.library.upload.timeout in the configuration file) and restart the service.

Interpretation of Output Files

How do I interpret the different statuses in the