Automating NGS Workflows: A Strategic Guide to Unlocking Reproducible Chemogenomic Research

Abstract

Next-generation sequencing (NGS) is revolutionizing drug discovery and biomedical research, but its potential is often limited by manual workflow inconsistencies. This article provides researchers, scientists, and drug development professionals with a comprehensive guide to automating NGS workflows to achieve superior chemogenomic reproducibility. We explore the foundational drivers, including market growth and strategic partnerships, detail methodological implementations from library prep to data analysis, offer best practices for troubleshooting and optimization, and establish a framework for rigorous validation and quality control to ensure compliance with clinical standards.

The Imperative for Automation: Foundations of Reproducible NGS in Chemogenomics

Technical Support Center

Troubleshooting Guides

Guide 1: Troubleshooting Common Automated NGS Library Preparation Failures

Problem: Low Library Yield

Low library yield is a frequent and frustrating outcome that can compromise entire sequencing runs. The table below outlines the primary causes and their respective corrective actions [1].

| Cause of Failure | Mechanism of Yield Loss | Corrective Action |
|---|---|---|
| Poor Input Quality / Contaminants | Enzyme inhibition from residual salts, phenol, EDTA, or polysaccharides [1]. | Re-purify input sample; ensure wash buffers are fresh; target high purity (260/230 > 1.8, 260/280 ~1.8) [1] [2]. |
| Inaccurate Quantification / Pipetting | Suboptimal enzyme stoichiometry due to concentration errors [1]. | Use fluorometric methods (Qubit) over UV absorbance; calibrate pipettes; use master mixes [1] [2]. |
| Fragmentation/Tagmentation Inefficiency | Over- or under-fragmentation reduces adapter ligation efficiency [1]. | Optimize fragmentation parameters (time, energy); verify fragmentation profile before proceeding [1]. |
| Suboptimal Adapter Ligation | Poor ligase performance, an incorrect molar ratio, or suboptimal reaction conditions reduce adapter incorporation [1]. | Titrate adapter-to-insert molar ratios; ensure fresh ligase and buffer; maintain optimal temperature [1]. |
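The purity targets in the first row of the table can be checked automatically before samples are loaded onto a liquid handler. The sketch below is a minimal illustration, assuming absorbance readings are already available as plain numbers; the ±0.2 tolerance around an A260/A280 of 1.8 is an illustrative assumption, not a value from the cited sources.

```python
from dataclasses import dataclass


@dataclass
class AbsorbanceReading:
    sample_id: str
    a260: float
    a280: float
    a230: float


def purity_flags(r: AbsorbanceReading, min_260_230: float = 1.8,
                 target_260_280: float = 1.8, tolerance: float = 0.2) -> list[str]:
    """Return a list of purity problems; an empty list means the sample passes."""
    if r.a230 <= 0 or r.a280 <= 0:
        return ["invalid absorbance reading"]
    flags = []
    ratio_230 = r.a260 / r.a230
    ratio_280 = r.a260 / r.a280
    # A low A260/A230 suggests carryover of contaminants that absorb near 230 nm.
    if ratio_230 <= min_260_230:
        flags.append(f"A260/A230 = {ratio_230:.2f} (at or below {min_260_230})")
    # An A260/A280 far from ~1.8 suggests protein or other contamination.
    if abs(ratio_280 - target_260_280) > tolerance:
        flags.append(f"A260/A280 = {ratio_280:.2f} (outside {target_260_280} +/- {tolerance})")
    return flags


print(purity_flags(AbsorbanceReading("S1", a260=1.0, a280=0.54, a230=0.48)))
print(purity_flags(AbsorbanceReading("S2", a260=1.0, a280=0.55, a230=0.80)))
```

The first reading passes both checks; the second is flagged for a low A260/A230 ratio. Absorbance ratios screen for contaminants, while concentration itself is still best measured fluorometrically, as the table recommends.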

Problem: High Duplicate Read Rate and Amplification Bias

Over-amplification during library preparation is a major source of bias and artifacts, leading to inaccurate data [1].

| Cause of Failure | Mechanism of Bias | Corrective Action |
|---|---|---|
| Too Many PCR Cycles | Overcycling introduces size bias and duplicate reads and flattens the fragment size distribution [1]. | Optimize and minimize the number of PCR cycles; repeat amplification from leftover ligation product rather than overamplifying a weak product [1]. |
| Carryover of Enzyme Inhibitors | Residual salts or phenol can inhibit polymerases mid-amplification [1]. | Re-purify input sample using clean columns or beads to remove inhibitors [1]. |
| Primer Exhaustion or Mispriming | Primers may run out prematurely or misprime under suboptimal conditions [1]. | Optimize primer design and annealing conditions; ensure adequate primer concentration [1]. |
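To see how few cycles are usually needed, an idealized exponential model estimates the smallest cycle number that reaches a target yield. The sketch below assumes a constant per-cycle efficiency (90% by default, a hypothetical value) and ignores the plateau phase, so its output is a planning lower bound rather than a validated cycle number.

```python
import math


def min_pcr_cycles(input_ng: float, target_ng: float, efficiency: float = 0.9) -> int:
    """Fewest cycles n such that input_ng * (1 + efficiency) ** n >= target_ng.

    Idealized model: every cycle has the same efficiency (0 < E <= 1) and
    there is no plateau, so real reactions may need slightly more cycles.
    """
    if input_ng <= 0 or target_ng <= 0:
        raise ValueError("amounts must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    if target_ng <= input_ng:
        return 0
    exact = math.log(target_ng / input_ng) / math.log(1 + efficiency)
    # Subtract a tiny epsilon so exact powers (e.g. 8-fold at E = 1) are not
    # rounded up an extra cycle by floating-point error.
    return math.ceil(exact - 1e-9)


print(min_pcr_cycles(5, 100))        # 20-fold amplification at 90% efficiency
print(min_pcr_cycles(5, 100, 1.0))   # same target with perfect doubling
```

In this model a 20-fold increase needs five cycles at either efficiency. Running well past that number is the overcycling the table warns against, and re-amplifying leftover ligation product is preferable to pushing a weak reaction further.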

Problem: Contamination and Batch Effects

Batch effects, where technical variables systematically influence data, can confound results and lead to false conclusions [3] [4].

| Cause of Failure | Impact on Data | Corrective Action |
|---|---|---|
| Researcher-to-Researcher Variation | Differences in manual pipetting technique can cause batch effects, masking true biological differences [4] [5]. | Implement automated liquid handling to standardize protocols and eliminate user-based variation [4] [5]. |
| Cross-Contamination | Improper sample handling leads to contamination, resulting in inaccurate results and data misinterpretation [5] [6]. | Use automated, closed systems; sterilize workstations; handle one sample at a time; include DNA-free controls [5] [6]. |
| Reagent Degradation | Ethanol wash solutions losing concentration over time can lead to suboptimal washes and failures [1]. | Enforce reagent quality control logs; track lot numbers and expiry dates [1]. |

Guide 2: Diagnosing Systemic NGS Data Biases

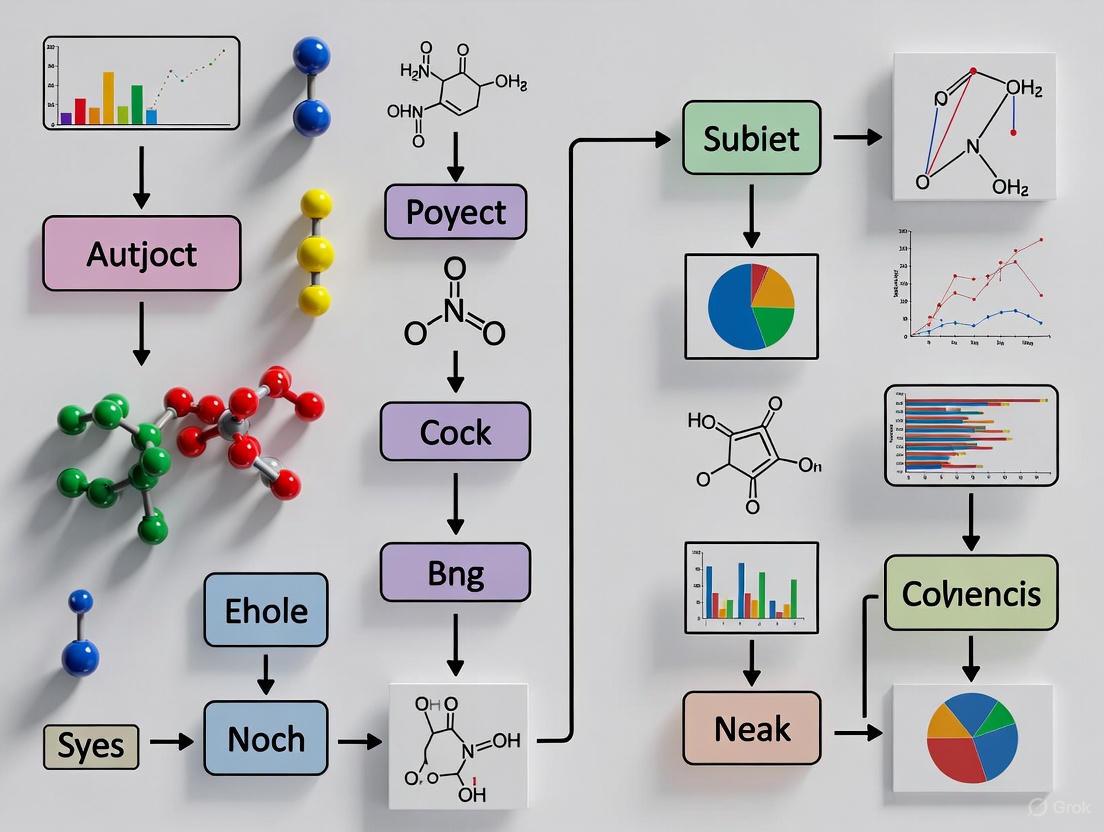

Systemic biases can be introduced at various stages of the automated NGS workflow. The following diagram illustrates the logical flow for diagnosing the root cause of common data biases.

The table below details these specific biases and their solutions.

| Bias Type | Description & Impact | Solution |
|---|---|---|
| GC Coverage Bias | Strong, reproducible effect of local GC content on sequencing read coverage. Problematic for RNA-Seq, ChIP-Seq, and copy number detection [3]. | Adjust signal for GC content in bioinformatic analysis to improve precision [3]. |
| Base-Call Error Bias | Base-call errors are not random and can cluster by cycle position on the sequencer. Impacts alignment and can cause false-positive variant calls [3]. | Employ alternative base-calling methods or post-hoc error correction algorithms [3]. |
| Batch Effects | Technical variability (e.g., processing date, technician, reagent lot) correlates with and confounds experimental outcomes [3]. | Use careful experimental design with randomization; apply batch effect correction methods (e.g., surrogate variable analysis) during data analysis [3]. |
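The GC-correction row can be illustrated with a deliberately simple approach: group genomic bins by GC content and rescale each bin so that every GC stratum has the same median coverage. This is a sketch for illustration only, assuming per-bin read counts and GC fractions are already computed; the 20-stratum default is arbitrary, and published GC-correction methods model the relationship more carefully.

```python
from collections import defaultdict
from statistics import median


def gc_fraction(seq: str) -> float:
    """Fraction of G and C among unambiguous A/C/G/T bases (N is ignored)."""
    seq = seq.upper()
    acgt = sum(seq.count(base) for base in "ACGT")
    if acgt == 0:
        raise ValueError("sequence has no A/C/G/T bases")
    return (seq.count("G") + seq.count("C")) / acgt


def gc_normalize(counts: list[float], gc: list[float], n_strata: int = 20) -> list[float]:
    """Rescale counts by (global median / median of the bin's GC stratum)."""
    # Assign each bin to one of n_strata equal-width GC intervals on [0, 1].
    strata = [min(int(g * n_strata), n_strata - 1) for g in gc]
    grouped = defaultdict(list)
    for stratum, count in zip(strata, counts):
        grouped[stratum].append(count)
    stratum_median = {s: median(v) for s, v in grouped.items()}
    global_median = median(counts)
    return [
        count * global_median / stratum_median[s] if stratum_median[s] > 0 else count
        for s, count in zip(strata, counts)
    ]


counts = [100, 110, 50, 55]      # hypothetical reads per bin
gc = [0.40, 0.41, 0.60, 0.61]    # GC fraction of each bin
print(gc_normalize(counts, gc, n_strata=10))
```

After correction, the under-covered GC-rich bins are brought to the same median as the other stratum, so remaining differences between bins are no longer explained by GC content alone.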

Frequently Asked Questions (FAQs)

Q1: What are the most significant challenges when first automating an NGS workflow, and how can we overcome them?

A1: Labs new to automation often face three core challenges [7]:

- Lack of Software Knowledge: Building and modifying custom protocols can require coding expertise. Solution: Choose platforms with modular, user-friendly software (e.g., Tecan's FluentControl) that separates method development from daily operation, enabling protocol customization without programming [7].

- Difficulty Designing a Worktable: The vast array of hardware and accessories can be confusing. Solution: Invest in a flexible, universal worktable configuration and ensure the software GUI clearly displays reagent and sample loading requirements [7].

- Long Workflow Optimization Times: It can take months to optimize a new automated script. Solution: Select platforms with pre-developed, extensively tested routines for common tasks and commercial kits (e.g., the collaboration between Zymo Research and Tecan) to eliminate lengthy optimization [7].

Q2: Our automated preps are inconsistent. What are the common hidden sources of variation?

A2: Inconsistency in automated runs often stems from these hidden factors:

- Liquid Handler Calibration: Improperly calibrated pipetting axes (x, y, z) can lead to inaccurate liquid transfers. Regularly maintain and calibrate instruments [7].

- Reagent Degradation: Enzymes (e.g., ligases, polymerases) and buffers lose activity over time. Strictly monitor reagent lot numbers and expiry dates, and avoid using expired reagents [1].

- Human Procedural Errors: Even on automated systems, manual pre- and post-steps can introduce variation. Implement standardized operating procedures (SOPs), checklists, and master mixes to reduce pipetting errors and improve consistency across technicians [1].
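Monitoring lot numbers and expiry dates is easy to automate as a pre-run check. The sketch below assumes a hypothetical in-house reagent log held as simple records; in practice this information would typically come from a LIMS or inventory system.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ReagentLot:
    name: str
    lot: str
    expiry: date


def expired_reagents(lots: list[ReagentLot], run_date: date) -> list[str]:
    """Return 'name (lot)' for each reagent whose expiry date falls before run_date."""
    return [f"{r.name} ({r.lot})" for r in lots if r.expiry < run_date]


inventory = [
    ReagentLot("Ligase buffer", "LB-0421", date(2025, 1, 31)),   # hypothetical lots
    ReagentLot("Polymerase master mix", "PM-1187", date(2026, 6, 30)),
]
blocked = expired_reagents(inventory, run_date=date(2025, 3, 1))
if blocked:
    print("Do not start the run; expired:", ", ".join(blocked))
```

Storing the lot numbers used in each run alongside its sequencing data also makes it possible to check later whether a shift in results coincides with a change of lot.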

Q3: How can we reduce the cost of our automated NGS workflows without sacrificing quality?

A3: Several strategies can lead to significant cost savings:

- Reagent Miniaturization: Automated liquid handlers can accurately dispense low-volume reactions. Miniaturizing reactions to 1/10th of the manufacturer's recommended volume can drastically reduce reagent consumption and cost [8] [5].

- Reduce Repeat Experiments: Automation enhances precision and reduces human error, which minimizes failed runs and the need for costly reagent-wasting repeats [4] [5].

- Efficient Normalization: Use library prep kits with high levels of auto-normalization to achieve consistent read depths across samples without the need for individual sample normalization, saving time and reagents [6].
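The savings from miniaturization are easy to estimate, but fixed overheads such as reagent-trough dead volume mean the saving is somewhat less than the nominal 90% at 1/10 scale. The sketch below uses hypothetical prices and volumes purely for illustration.

```python
def run_reagent_cost(n_samples: int, reaction_volume_ul: float, cost_per_ul: float,
                     scale: float = 1.0, dead_volume_ul: float = 0.0) -> float:
    """Reagent cost of one run: scaled per-sample volume plus a fixed dead volume."""
    if not 0 < scale <= 1:
        raise ValueError("scale must be in (0, 1]")
    used_ul = n_samples * reaction_volume_ul * scale + dead_volume_ul
    return used_ul * cost_per_ul


# Hypothetical figures: 96 samples, 50 uL reactions, $2 per uL, 100 uL dead volume.
full = run_reagent_cost(96, 50, 2.0, scale=1.0, dead_volume_ul=100)
tenth = run_reagent_cost(96, 50, 2.0, scale=0.1, dead_volume_ul=100)
print(f"full: ${full:,.0f}  1/10 scale: ${tenth:,.0f}  saving: {1 - tenth / full:.0%}")
```

With these assumed numbers the run cost falls by roughly 88% rather than 90%, because the dead volume does not shrink with the reaction volume.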

The Scientist's Toolkit: Key Research Reagent Solutions

The following table details essential materials and their functions for robust automated NGS workflows.

| Item | Function in Automated NGS |
|---|---|
| High-Fidelity DNA Polymerase | Enzymes with proofreading capabilities minimize errors during PCR amplification, ensuring accurate representation of the template DNA [9]. |
| Fluorometric Quantitation Kits (e.g., Qubit) | Provide highly accurate quantification of DNA/RNA concentration using dyes that bind specifically to nucleic acids, unlike UV absorbance, which can be skewed by contaminants [1] [2]. |
| Magnetic Beads (SPRI) | Used for automated purification and size selection of DNA fragments, enabling efficient cleanup and removal of unwanted reagents like adapter dimers [1]. |
| Multiplexed Sequencing Adapters | Short, double-stranded DNA sequences carrying sample-specific index (barcode) sequences; ligated to fragments, they allow multiple samples to be pooled and sequenced in a single run [10]. |
| Automated NGS Library Prep Kit | Integrated kits (e.g., seqWell's ExpressPlex, Tecan/Zymo's DreamPrep) provide pre-optimized, ready-to-use reagents formatted for automated liquid handlers, streamlining the entire process [7] [6]. |
| Internal Control Spikes | Known DNA sequences added to the sample to monitor the efficiency and accuracy of the entire workflow, from library prep to sequencing [8]. |

Automated NGS Workflow Diagram

The diagram below outlines the key stages of a typical automated NGS workflow, highlighting where automation and critical quality control (QC) steps are integrated.

Strategic industry partnerships are revolutionizing next-generation sequencing (NGS) by integrating specialized expertise to overcome critical bottlenecks in automated workflows. In chemogenomic reproducibility research, where consistent, high-throughput genetic data are paramount for evaluating compound effects, these collaborations are not merely beneficial but essential. They combine advanced library preparation chemistries with sophisticated automation platforms, directly addressing longstanding challenges in manual NGS protocols, such as pipetting variability, cross-contamination, and workflow inefficiencies, that compromise data integrity. This technical support center provides targeted guidance for scientists leveraging these collaborative tools to achieve robust, reproducible results in their drug discovery pipelines.

Frequently Asked Questions (FAQs)

How do strategic partnerships specifically improve the quality of my NGS library prep? Partnerships merge distinct areas of expertise, such as a reagent company's specialized enzymes with an automation firm's precision liquid handling. This synergy creates optimized, validated, and standardized protocols. For example, a study comparing manual and automated library prep for a 22-gene solid tumour panel showed that the automated workflow, developed through a partnership, achieved on-target rates exceeding 90% and higher reproducibility, significantly improving data quality for clinical analysis [11].

My lab is new to automation. What is the biggest challenge we should anticipate? The most common initial challenge is a lack of software knowledge and the complexity of designing a functional worktable [7]. Building custom scripts for your specific protocols and selecting the correct hardware from hundreds of configurations can delay projects for months. The solution is to seek partnerships that offer platforms with pre-developed, optimized routines for common NGS tasks and intuitive software that separates complex method development from daily operation [7].

Are collaborative automation solutions compatible with the regulatory standards required for drug development? Yes, a key driver behind these partnerships is to ensure compliance with stringent regulatory frameworks like IVDR and ISO 13485 [12]. Automated systems enhance compliance by providing standardized, traceable processes, integrated quality control checks, and thorough documentation—features that are critical for gaining regulatory approval for diagnostics and therapies [12].

What is the return on investment (ROI) for implementing a partnered automation solution? The ROI is realized through significant long-term savings from reduced reagent waste (via miniaturized dispensing), decreased hands-on time, and fewer failed experiments due to human error [5] [12]. Automation can reduce hands-on time in library preparation by over 75%, from hours to just 45 minutes in some cases, freeing highly skilled personnel for data analysis and other value-added tasks [11].

Troubleshooting Guides

Problem 1: Inconsistent Library Yields and Quality

Potential Causes and Solutions:

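- Cause: Manual pipetting inaccuracies during reagent dispensing. Even minor volumetric errors are amplified during PCR.

- Solution: Replace manual dispensing with an automated liquid handling system so that every sample receives the same reagent volumes.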

- Cause: Inefficient or variable bead-based clean-up steps in manual protocols.

- Solution: Integrate an automated clean-up device like the G.PURE into your workflow. These devices perform rapid, consistent magnetic bead-based purification in plate formats, standardizing this critical step across all samples [13].

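- Cause: User-to-user and batch-to-batch variation.

- Solution: Run the validated protocol on an automated platform so that every batch is processed with identical steps, volumes, and timings regardless of operator.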

Problem 2: Difficulty Scaling from Low- to High-Throughput NGS

Potential Causes and Solutions:

- Cause: Laboratory Information Management System (LIMS) integration failures create data silos and tracking issues.

- Solution: Prior to purchasing, verify the automation platform's compatibility with your existing LIMS. Choose systems designed for seamless integration to ensure smooth sample and data tracking from sample-in to result-out [12].

- Cause: Inability to efficiently process large sample batches due to hardware limitations.

- Cause: Protocol optimization for high-throughput takes too long.

- Solution: Leverage partnership-based solutions that offer pre-developed and pre-optimized scripts for common high-throughput kits, such as the DreamPrep NAP solution from Tecan and Zymo Research, which can save months of optimization time [7].

Problem 3: High Contamination Rates and False Positives

Potential Causes and Solutions:

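- Cause: Sample cross-contamination from manual pipetting and handling.

- Solution: Move sample handling onto an automated, closed system using filter tips, and include negative (no-template) controls to detect any carryover.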

- Cause: Inadequate real-time quality control, allowing low-quality samples to proceed.

- Solution: Implement automated QC tools like omnomicsQ that monitor sample quality in real-time against pre-set thresholds, flagging failing samples before they consume valuable sequencing resources [12].
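Threshold-based QC gating of the kind described above can be sketched in a few lines. This is a generic illustration, not the interface of omnomicsQ or any other product; the metric names and limits are hypothetical and would come from a laboratory's own validation.

```python
from typing import Optional

# Hypothetical limits: metric -> (minimum, maximum); None means no bound on that side.
THRESHOLDS: dict[str, tuple[Optional[float], Optional[float]]] = {
    "concentration_ng_per_ul": (2.0, None),
    "mean_fragment_bp": (250.0, 550.0),
}


def flag_samples(metrics: dict[str, dict[str, float]],
                 thresholds: dict[str, tuple[Optional[float], Optional[float]]] = THRESHOLDS,
                 ) -> dict[str, list[str]]:
    """Return {sample_id: [reasons]} for every sample failing at least one check."""
    failures: dict[str, list[str]] = {}
    for sample_id, values in metrics.items():
        reasons = []
        for name, (low, high) in thresholds.items():
            value = values.get(name)
            if value is None:
                reasons.append(f"{name} missing")
            elif low is not None and value < low:
                reasons.append(f"{name}={value} below {low}")
            elif high is not None and value > high:
                reasons.append(f"{name}={value} above {high}")
        if reasons:
            failures[sample_id] = reasons
    return failures


batch = {
    "S1": {"concentration_ng_per_ul": 12.0, "mean_fragment_bp": 380},
    "S2": {"concentration_ng_per_ul": 0.8, "mean_fragment_bp": 410},
    "S3": {"concentration_ng_per_ul": 9.5},
}
for sample_id, reasons in flag_samples(batch).items():
    print(sample_id, "held back:", "; ".join(reasons))
```

A missing measurement is treated as a failure here, so a sample with incomplete QC cannot silently proceed to sequencing.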

Workflow Visualization

The following diagram illustrates how strategic partnerships integrate different technological components to create a seamless, automated NGS workflow, directly addressing common manual challenges.

Automated NGS Workflow Integration

Market Data and Performance Metrics

The tables below summarize key market data on the growing NGS library preparation market and quantitative performance gains from automation.

| Metric | Value | Source / Note |
|---|---|---|
| Global Market (2025) | USD 2.07 Billion | [14] |
| Projected Market (2034) | USD 6.44 Billion | [14] |
| CAGR (2025-2034) | 13.47% | [14] |
| Dominant Region (2024) | North America (44% share) | [14] |
| Fastest Growing Region | Asia Pacific (CAGR ~15%) | [14] |
| Fastest Growing Segment | Automated/High-Throughput Prep (CAGR 14%) | [14] |
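The growth figures in the table are linked by the compound annual growth rate, CAGR = (end / start)^(1 / years) - 1. A short check using only the table's values shows they are mutually consistent.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


rate = cagr(2.07, 6.44, 2034 - 2025)      # USD billions, from the table
print(f"implied CAGR: {rate:.2%}")
print(f"2034 value at 13.47%: {project(2.07, 0.1347, 9):.2f} B")
```

The implied rate comes out at about 13.4%, within rounding of the reported 13.47%, and compounding the 2025 value at 13.47% for nine years lands close to USD 6.44 billion.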

Documented Benefits of Automated NGS Workflows

| Performance Metric | Manual Workflow | Automated Workflow | Improvement & Source |
|---|---|---|---|
| Hands-on Time (per run) | ~23 hours [11] | ~6 hours [11] | ~74% reduction |
| Total Runtime | 42.5 hours [11] | 24 hours [11] | ~44% shorter |
| Aligned Reads | ~85% [11] | ~90% [11] | ~5 percentage point increase |
| Single-Cell Prep Hands-on Time | 4 hours [11] | 45 minutes [11] | Over 81% reduction |
| Inter-User Variation | High [5] | Eliminated [5] [12] | Essential for reproducibility |
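The improvement column can be recomputed directly from the manual and automated values, which is a useful sanity check when quoting such figures. Relative reduction is (manual - automated) / manual; the aligned-reads row is deliberately left out because it is already a difference in percentage points.

```python
def percent_reduction(manual: float, automated: float) -> float:
    """Relative reduction (%) when moving from the manual to the automated value."""
    if manual <= 0:
        raise ValueError("manual value must be positive")
    return 100 * (manual - automated) / manual


# (manual, automated) pairs from the table, all expressed in hours.
rows = {
    "hands-on time per run": (23.0, 6.0),
    "total runtime": (42.5, 24.0),
    "single-cell prep hands-on time": (4.0, 45 / 60),
}
for name, (manual, automated) in rows.items():
    print(f"{name}: {percent_reduction(manual, automated):.1f}% reduction")
```

The hands-on time row works out to about 74%, the runtime row to about 44% less elapsed time, and the single-cell row to about 81%.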

The Scientist's Toolkit: Key Research Reagent Solutions

This table details essential components and platforms, often developed through industry partnerships, that are critical for establishing robust, automated NGS workflows.

| Item | Function in Automated NGS Workflow |
|---|---|
| Library Preparation Kits | Designed for compatibility with specific sequencers (e.g., Illumina, Oxford Nanopore) and applications (e.g., whole genome, targeted). Partnerships create kits optimized for automated liquid handlers [14] [11]. |
| Automated Liquid Handling Systems | Precisely dispense reagents and samples in nanoliter-to-microliter volumes, eliminating pipetting error and enabling high-throughput processing. Examples include Tecan Fluent and Beckman Biomek i-Series [12] [7]. |
| Magnetic Bead-Based Clean-Up Modules | Integrated automated systems for purifying and size-selecting nucleic acid fragments post-amplification, replacing manual and variable centrifugation steps. The G.PURE device is an example [13]. |
| Real-Time QC Software | Tools like omnomicsQ automatically monitor sample quality metrics (e.g., concentration, fragment size) against defined thresholds, flagging failures before sequencing [12]. |
| Integrated Workflow Software | Software (e.g., FluentControl) that allows users to build, run, and monitor automated protocols without needing advanced programming skills, streamlining operations [7]. |

In modern drug discovery, chemogenomics—the study of the interaction of chemical compounds with biological systems on a genome-wide scale—relies on generating consistent, reliable data. Reproducibility is the cornerstone that ensures scientific findings are valid, trustworthy, and translatable to clinical applications. The adoption of Automated Next-Generation Sequencing (NGS) workflows is pivotal for achieving the high-throughput and precision required for reproducible chemogenomic research. This guide addresses common challenges and provides actionable protocols to help researchers fortify the reproducibility of their chemogenomic studies.

Troubleshooting Guides for Automated NGS Workflows

FAQ 1: How can I minimize variability in automated NGS library preparation?

Issue: Inconsistent library yields and quality between automated runs.

Solution:

- Regular Calibration: Perform daily calibration of robotic liquid handlers. Use fluorescent dye-based volume verification kits to ensure pipetting accuracy [15].

- Process Lockdown: Once a method is validated, "lock down" the entire automated workflow. Document all parameters, including reagent brands, lot numbers, and incubation times [16].

- Environmental Controls: Monitor and record ambient temperature and humidity in the automation workspace, as these can affect enzymatic steps in library prep [15].

FAQ 2: What are the best practices for integrating automated sample tracking to ensure data integrity?

Issue: Sample misidentification or lost chain-of-custody in high-throughput screens.

Solution:

- Barcode Integration: Implement a system where sample plates are labeled with 2D barcodes. Configure your automated liquid handler to scan and verify each barcode at critical transfer points [17].

- LIMS Connection: Integrate your automation platform with a Laboratory Information Management System (LIMS). This enables real-time data capture and traceability for every sample from receipt to sequencing [15].

- Verification Steps: Program the automation software to include verification steps, such as checking for successful tip attachment and confirming liquid presence in source wells before aspiration [18].
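The barcode checks described above amount to comparing what the scanner reads at each position against what the LIMS expects there. The sketch below assumes both are available as simple well-to-barcode mappings; these structures are hypothetical, since real liquid-handler and LIMS software expose their own interfaces.

```python
from collections import Counter


def verify_plate_scan(scanned: dict[str, str], manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare scanned well -> barcode readings against the LIMS manifest."""
    duplicated = sorted(bc for bc, n in Counter(scanned.values()).items() if n > 1)
    return {
        "missing": sorted(w for w in manifest if w not in scanned),
        "unexpected": sorted(w for w in scanned if w not in manifest),
        "mismatched": sorted(w for w in manifest if w in scanned and scanned[w] != manifest[w]),
        "duplicated_barcodes": duplicated,
    }


manifest = {"A1": "BC001", "A2": "BC002", "A3": "BC003"}
scanned = {"A1": "BC001", "A2": "BC003", "B1": "BC009"}
report = verify_plate_scan(scanned, manifest)
if any(report.values()):
    print("Halt transfer:", {k: v for k, v in report.items() if v})
```

The transfer would proceed only when every category is empty; otherwise the run pauses for an operator, which preserves the chain of custody instead of silently continuing.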

FAQ 3: How do I validate a new automated NGS workflow for chemogenomic applications?

Issue: Uncertainty about validation criteria and metrics when transitioning from manual to automated processes.

Solution: Adhere to a structured validation plan. The NGS Quality Initiative (NGS QI) provides frameworks specifically for this purpose [16]. Key metrics to evaluate are summarized in the table below.

Table 1: Key Performance Indicators (KPIs) for Automated NGS Workflow Validation

| Metric | Target | Measurement Method |
|---|---|---|
| Sample-to-Sample Contamination | < 0.1% | Quantification of negative controls via qPCR or bioanalyzer [16] |
| Library Prep Success Rate | > 95% | Fraction of samples passing QC thresholds (e.g., DV200 > 50%) [15] |
| Inter-Run Reproducibility | CV < 10% | Coefficient of Variation (CV) of on-target rate or unique reads across multiple runs [16] |
| Variant Calling Concordance | > 99.5% | Comparison of variant calls (SNPs, Indels) between automated and validated manual methods [18] |
| Hands-on Time Reduction | 50-65% | Comparison of active technician time pre- and post-automation [15] |
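Two of the KPIs in Table 1 are straightforward to compute from run outputs. The sketch below calculates inter-run CV as sample standard deviation divided by the mean, and variant concordance as shared calls over the union of both call sets. That concordance definition is one common choice and an assumption here; a validation plan may instead define it relative to the manual reference calls. All data are hypothetical.

```python
from statistics import mean, stdev

Variant = tuple[str, int, str, str]  # (chromosome, position, ref, alt)


def coefficient_of_variation(values: list[float]) -> float:
    """Sample CV in percent: stdev / mean * 100 (needs at least two runs)."""
    return 100 * stdev(values) / mean(values)


def variant_concordance(calls_a: set[Variant], calls_b: set[Variant]) -> float:
    """Percent of calls shared by both sets, relative to their union."""
    union = calls_a | calls_b
    if not union:
        return 100.0
    return 100 * len(calls_a & calls_b) / len(union)


on_target = [91.2, 90.5, 92.0]  # on-target rate (%) in three runs
manual = {("chr1", 1000, "A", "G"), ("chr2", 2000, "C", "T"), ("chr3", 3000, "G", "A")}
automated = {("chr1", 1000, "A", "G"), ("chr2", 2000, "C", "T"), ("chr3", 3000, "G", "A")}

cv = coefficient_of_variation(on_target)
conc = variant_concordance(manual, automated)
print(f"inter-run CV {cv:.2f}% -> {'pass' if cv < 10 else 'fail'} (target < 10%)")
print(f"concordance {conc:.2f}% -> {'pass' if conc > 99.5 else 'fail'} (target > 99.5%)")
```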

FAQ 4: How can I manage the high computational cost and data complexity of automated NGS?

Issue: Bioinformatics bottlenecks and data storage challenges.

Solution:

- AI-Enhanced Pipelines: Utilize AI-driven tools like DeepVariant for more accurate and efficient variant calling, which can reduce manual review time [18].

- Cloud Computing: Leverage cloud-based analysis platforms (e.g., DNAnexus, Illumina BaseSpace) that offer scalable computing power and pre-configured, validated bioinformatics pipelines [18].

- Data Management Plan: Establish a formal data lifecycle policy outlining retention periods for raw sequence data, processed files, and final reports to optimize storage costs [15].

Essential Experimental Protocols for Reproducibility

Protocol 1: Validating an Automated NGS Method for Chemogenomic Screening

This protocol outlines the core steps for ensuring your automated NGS method produces reproducible and reliable data, based on guidelines from the NGS Quality Initiative [16].

1. Define Objectives and Criteria:

- Clearly state the assay's purpose (e.g., "Identify genetic variants in a cell pool after treatment with a chemogenomic library").

- Establish acceptance criteria for all Key Performance Indicators (KPIs) prior to starting, referencing targets like those in Table 1.

2. Design the Validation Study:

- Sample Selection: Include a range of sample types (e.g., high/low quality DNA, positive/negative controls) that reflect real-world conditions.

- Replication: Perform a minimum of three independent runs on different days to assess inter-run precision.

3. Execute the Locked-Down Workflow:

- Use the automated system with standardized reagents and the "locked" protocol for all validation runs.

- Integrate with LIMS for full sample tracking.

4. Data Analysis and Performance Assessment:

- Process all sequencing data through a single, version-controlled bioinformatics pipeline.

- Calculate all pre-defined KPIs and compare them against the acceptance criteria.

5. Documentation and Reporting:

- Compile a validation report detailing the protocol, raw data, results for all KPIs, and a statement of pass/fail against each acceptance criterion.

Protocol 2: Implementing a Quality Management System (QMS) for NGS

A robust QMS is non-negotiable for reproducible science. The NGS QI provides tools to build this system [16].

1. Personnel Management:

- Standardized Training: Use SOPs like the "Bioinformatics Employee Training SOP" for consistent onboarding [16].

- Competency Assessment: Regularly assess staff proficiency using tools like the "Bioinformatician Competency Assessment SOP" [16].

2. Equipment Management:

- Preventive Maintenance: Adhere to a strict schedule for all automated equipment, including liquid handlers and sequencers.

- Documentation: Maintain logs for all maintenance, calibration, and performance verification activities.

3. Process Management:

- Standard Operating Procedures (SOPs): Develop and use detailed SOPs for every stage of the workflow, from sample accessioning to data reporting. The NGS QI's "NGS Method Validation SOP" is an excellent template [16].

- Continuous Monitoring: Use the "Identifying and Monitoring NGS Key Performance Indicators SOP" to track metrics like read quality and contamination rates over time, enabling proactive process corrections [16].
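Continuous KPI monitoring is often implemented as a control chart: limits are set from a baseline period, and later runs that fall outside them trigger review. The sketch below assumes simple mean ± 3 SD (Shewhart-style) limits and hypothetical percent-≥Q30 values; the cited SOP may specify different rules.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float]:
    """Lower and upper limits at mean +/- k sample standard deviations."""
    centre, spread = mean(baseline), stdev(baseline)
    return centre - k * spread, centre + k * spread


def out_of_control(values: list[float], limits: tuple[float, float]) -> list[int]:
    """Indices of runs whose KPI falls outside the control limits."""
    low, high = limits
    return [i for i, v in enumerate(values) if v < low or v > high]


baseline_q30 = [92.1, 91.8, 92.4, 92.0, 91.9, 92.2]  # % bases >= Q30, validation runs
limits = control_limits(baseline_q30)
new_runs = [92.0, 90.7, 92.3]
for i in out_of_control(new_runs, limits):
    print(f"run {i}: Q30 {new_runs[i]}% outside {limits[0]:.2f}-{limits[1]:.2f}%")
```

Flagging a drifting metric this way prompts investigation before the trend turns into failed runs.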

Essential Visualizations for Reproducible Workflows

NGS QMS Framework

Automated NGS Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Reproducible Chemogenomic Research

| Item | Function | Example / Key Feature |
|---|---|---|
| Chemogenomic (CG) Compound Library | Collections of small molecules with defined activity profiles used for high-throughput screening and target deconvolution [19]. | The EUbOPEN library covers one-third of the druggable proteome and is openly available [19]. |
| Validated Chemical Probes | The gold standard for modulating specific protein targets; highly characterized, potent, and selective small molecules [19]. | EUbOPEN probes are peer-reviewed and released with a structurally similar inactive control compound [19]. |
| Automated Liquid Handling Systems | Robots that perform precise and reproducible liquid transfers for NGS library preparation and assay setup [15] [18]. | Tecan Fluent systems automate PCR setup, NGS library prep, and nucleic acid extractions, integrating with AI for error detection [18]. |
| NGS Method Validation Plan Template | A structured document to guide the validation of NGS assays, ensuring they meet regulatory and quality standards [16]. | A template from the NGS Quality Initiative helps labs generate standardized validation documents, reducing development burden [16]. |
| AI-Enhanced Bioinformatics Tools | Software that uses machine learning to improve the accuracy and speed of NGS data analysis, such as variant calling [18]. | Tools like DeepVariant use deep neural networks to call genetic variants more accurately than traditional methods [18]. |

Within chemogenomic reproducibility research, the push to automate Next-Generation Sequencing (NGS) workflows is driven by two powerful, interconnected forces: the dramatic decline in sequencing costs and the escalating demand for high-throughput data. As sequencing becomes more affordable, larger and more robust experiments are possible, placing immense pressure on laboratories to maintain precision and consistency across thousands of samples. This technical support center addresses the specific challenges researchers and drug development professionals face when implementing automation to meet these demands, providing targeted troubleshooting and foundational protocols to ensure data integrity and reproducibility.

Troubleshooting Guides and FAQs

FAQ: My automated liquid handler is causing inconsistent library yields. What should I check?

- Problem: Variability in NGS library preparation yields on an automated platform.

- Solution:

- Verify Liquid Handler Calibration: Regularly calibrate the robotic pipettors to ensure volume dispensing accuracy. Improper calibration is a primary source of yield inconsistency [12].

- Inspect Reagent Quality and Storage: Ensure all reagents are fresh, thoroughly mixed, and have not expired. Pre-aliquot reagents to minimize freeze-thaw cycles [2].

- Check for Tip-Seating Issues: Confirm that disposable tips are consistently and firmly seated on the pipetting head to prevent volume loss [12].

- Audit Sample Quality: Before automation, quantify DNA input samples using a fluorometric method (e.g., Qubit) to ensure accurate starting concentrations and purity, as contaminants like salts or solvents can impair reactions [2].

FAQ: My sequencing run failed during instrument initialization with a "Chip Not Detected" error.

- Problem: The sequencer fails to recognize the flow cell or sequencing chip.

- Solution:

- Re-seat the Chip: Open the chip clamp, remove the chip, and carefully re-install it, ensuring it is properly seated [20].

- Inspect for Damage: Visually inspect the chip for any signs of physical damage or liquid where it shouldn't be. Replace if damaged [20].

- Wait for Green Checkmark: After inserting a new chip, wait for a green check mark to appear on the instrument screen indicating successful detection before proceeding [20].

- Reboot and Retry: Power cycle the instrument and its associated server. If the problem persists, contact technical support, as the chip socket may be faulty [20].

FAQ: How can I reduce cross-contamination in my high-throughput automated workflow?

- Problem: Suspected sample-to-sample contamination in an automated NGS pipeline.

- Solution:

- Use Filter Tips: Always use filter tips to prevent aerosol contamination and sample carryover [12].

- Implement Wash Protocols: Utilize and optimize the liquid handler's wash routines for the robotic pipetting arms between reagent additions or sample transfers.

- Validate with Blanks: Incorporate negative control samples (e.g., water blanks) at regular intervals in your sample plates to monitor for contamination.

- Maintain Instrument Cleanliness: Adhere to a strict schedule for decontaminating and cleaning the instrument's work surface, grippers, and other components [12].
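Regularly spaced blanks can be generated together with randomized sample placement (the randomization recommended in the batch-effects table). The sketch below assumes a 96-well plate filled column by column, a water blank after every 12 samples, and a fixed random seed so the layout can be reproduced and recorded; all of these are hypothetical choices.

```python
import random

ROWS = "ABCDEFGH"
COLUMNS = range(1, 13)


def plate_layout(sample_ids: list[str], blank_every: int = 12, seed: int = 0) -> dict[str, str]:
    """Map wells to samples in shuffled order, adding a blank after every `blank_every` samples."""
    wells = [f"{row}{col}" for col in COLUMNS for row in ROWS]  # A1, B1, ..., H1, A2, ...
    order = list(sample_ids)
    random.Random(seed).shuffle(order)  # seeded so the layout is reproducible
    contents = []
    for i, sample in enumerate(order, start=1):
        contents.append(sample)
        if i % blank_every == 0:
            contents.append(f"BLANK_{i // blank_every}")
    if len(contents) > len(wells):
        raise ValueError(f"{len(contents)} positions needed, plate has {len(wells)} wells")
    return dict(zip(wells, contents))


samples = [f"S{i:02d}" for i in range(40)]
layout = plate_layout(samples, seed=42)
print({well: item for well, item in layout.items() if item.startswith("BLANK")})
```

Recording the seed with the run makes the exact plate map recoverable later, and any signal in a blank points to the neighbouring wells processed just before it.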

Data Presentation: NGS Platforms and Costs

The following tables summarize key quantitative data relevant to automated NGS workflows, aiding in platform selection and cost-benefit analysis.

Table 1: Comparison of Common NGS Sequencing Technologies

| Platform | Sequencing Technology | Read Length | Key Limitations |
|---|---|---|---|
| Illumina [21] | Sequencing-by-Synthesis (Bridge PCR) | Short (36-300 bp) | Overcrowding can spike error rate to ~1% [21] |
| Ion Torrent [21] | Sequencing-by-Synthesis (Semiconductor) | Short (200-400 bp) | Inefficient homopolymer sequencing causes signal loss [21] |
| PacBio SMRT [21] | Sequencing-by-Synthesis (Single Molecule) | Long (avg. 10,000-25,000 bp) | Higher cost per run [21] |
| Oxford Nanopore [21] | Electrical Impedance Detection (Single Molecule) | Long (avg. 10,000-30,000 bp) | Error rates can be high (up to 15%) [21] |

Table 2: Evolution of Whole Genome Sequencing (WGS) Cost

This cost reduction is a fundamental driver for scaling up chemogenomic studies through automation [22].

| Year | Approximate Cost per Human Genome | Key Driver |
|---|---|---|
| 2007 [22] | ~$1 Million | Early NGS commercialization |
| 2024 [22] | ~$600 | Established high-throughput platforms (e.g., Illumina) |
| Projected [22] | ~$200 | Next-generation platforms (e.g., Illumina NovaSeq X) |

Experimental Protocols

Detailed Methodology: Automated NGS Library Preparation for Reproducibility

This protocol is designed for a robotic liquid handling system integrated with a Laboratory Information Management System (LIMS) for traceability.

Sample Quality Control and Normalization:

- Quantify DNA samples using a fluorometer (e.g., Qubit) for high accuracy [2].

- Using the liquid handler, normalize all samples to a uniform concentration (e.g., 70 ng/μL) in nuclease-free water in a 96-well or 384-well plate [2].

- Barcode each sample well and register the plate in the LIMS to establish a chain of custody [15].
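The normalization step is a C1V1 = C2V2 dilution calculated for every well. A minimal sketch follows, assuming a hypothetical 1 µL minimum reliable transfer volume for the liquid handler; samples that are too dilute, or so concentrated that the transfer would fall below that minimum, are rejected rather than silently pipetted.

```python
def normalization_volumes(stock_ng_per_ul: float, target_ng_per_ul: float,
                          final_volume_ul: float, min_transfer_ul: float = 1.0) -> tuple[float, float]:
    """Return (sample_ul, water_ul) so that C1 * V1 = C2 * V2 for one well."""
    if stock_ng_per_ul < target_ng_per_ul:
        raise ValueError("stock is below target concentration; it cannot be diluted up")
    sample_ul = target_ng_per_ul * final_volume_ul / stock_ng_per_ul
    if sample_ul < min_transfer_ul:
        # A pre-dilution step would be needed before the liquid handler can transfer it.
        raise ValueError(f"{sample_ul:.2f} uL is below the {min_transfer_ul} uL minimum transfer")
    return sample_ul, final_volume_ul - sample_ul


print(normalization_volumes(140.0, 70.0, 20.0))  # (10.0, 10.0)
```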

Automated Library Construction:

- DNA Shearing: Transfer normalized samples to a microplate designated for acoustic shearing (e.g., Covaris) to fragment DNA to the desired size.

- End Repair & A-Tailing: The robot adds master mix for end-repair and A-tailing to the sheared DNA fragments. The plate is sealed, mixed, and transferred to a thermocycler for incubation.

- Adapter Ligation: The system adds a unique dual-indexed adapter to each sample well. This step is critical for sample multiplexing and must be highly precise to avoid index swapping [12].

- Library Clean-Up: The robot performs solid-phase reversible immobilization (SPRI) using magnetic beads to purify the ligated library fragments, removing short fragments and excess adapters [2].
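Because each well receives its own unique dual index, the index assignment itself can be checked before the run. The sketch below flags any two samples whose i7 (or i5) indexes differ at fewer than a minimum number of positions. The minimum of 3 is an assumption for illustration (a distance of 3 lets a single-base read error be corrected without ambiguity), and the sequences are made up. A reused index has distance 0 and is therefore also caught.

```python
from itertools import combinations


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("index sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def check_dual_indexes(pairs: dict[str, tuple[str, str]], min_distance: int = 3) -> list[str]:
    """Report sample pairs whose i7 or i5 indexes are closer than min_distance."""
    problems = []
    for position, label in ((0, "i7"), (1, "i5")):
        seqs = [(sample, pair[position]) for sample, pair in pairs.items()]
        for (s1, a), (s2, b) in combinations(seqs, 2):
            d = hamming(a, b)
            if d < min_distance:
                problems.append(f"{label} of {s1} and {s2} differ at only {d} position(s)")
    return problems


assignment = {  # hypothetical sample -> (i7, i5) assignment
    "S1": ("ACGTACGT", "TTGGCCAA"),
    "S2": ("TGCATGCA", "AACCGGTT"),
    "S3": ("ACGTACGA", "GGTTAACC"),
}
for problem in check_dual_indexes(assignment):
    print(problem)
```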

Library QC and Normalization:

- Quality Check: The system transfers an aliquot of each purified library for QC, typically on a fragment analyzer (e.g., Agilent TapeStation) [2].

- Library Quantification: Quantify the library using a fluorometric method or qPCR.

- Pooling: Based on quantification data, the liquid handler precisely combines equimolar amounts of each indexed library into a single pooling tube for sequencing.
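Equimolar pooling requires converting each library's mass concentration into molarity. A minimal sketch, assuming an average of ~660 g/mol per base pair of dsDNA and a mean fragment size taken from the fragment analyzer; the 20 fmol per library target and the example values are hypothetical. If libraries are quantified by qPCR against standards of known molarity, the conversion step may not be needed.

```python
def ng_per_ul_to_nm(conc_ng_per_ul: float, mean_fragment_bp: float) -> float:
    """Convert dsDNA mass concentration (ng/uL) to molarity (nM), assuming ~660 g/mol per bp."""
    return conc_ng_per_ul * 1e6 / (660.0 * mean_fragment_bp)


def pooling_volumes(libraries: dict, fmol_per_library: float = 20.0) -> dict:
    """Volume (uL) of each library so that all libraries contribute the same molar amount.

    `libraries` maps library ID -> (concentration in ng/uL, mean fragment size in bp).
    Since 1 nM = 1 fmol/uL, volume_uL = fmol / nM.
    """
    volumes = {}
    for lib_id, (conc, size_bp) in libraries.items():
        molarity_nm = ng_per_ul_to_nm(conc, size_bp)
        volumes[lib_id] = round(fmol_per_library / molarity_nm, 2)
    return volumes


# Hypothetical fluorometer and fragment-analyzer results
libs = {"LIB01": (3.3, 500), "LIB02": (1.32, 400), "LIB03": (6.6, 500)}
print(pooling_volumes(libs))
```

Very small calculated volumes may fall below the liquid handler's reliable dispensing range, in which case concentrated libraries are usually pre-diluted first.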

Workflow Visualization: Automated NGS Pipeline

The following diagram illustrates the logical workflow and integration points in an automated NGS pipeline for chemogenomic research.

The Scientist's Toolkit: Research Reagent Solutions

This table details essential materials and their functions in a typical automated NGS workflow.

| Item | Function | Brief Explanation |
|---|---|---|
| Fluorometer (e.g., Qubit) [2] | Nucleic Acid Quantification | Provides highly accurate concentration measurements of dsDNA or RNA, crucial for normalizing input material before automation. |
| Magnetic Beads (e.g., AMPure XP) [2] | Library Clean-up | Bind DNA fragments above a size cutoff set by the bead-to-sample ratio, so that enzymes, salts, and short fragments can be washed away after reaction steps. |
| Fragmentation Kit [2] | DNA Shearing | Prepares genomic DNA for sequencing by breaking it into smaller, random fragments (e.g., via acoustic shearing or enzymatic digestion). |
| Library Prep Kit with Indexed Adapters [12] | Library Construction | Contains all enzymes and buffers for end-repair, A-tailing, and adapter ligation. Unique indexes allow sample multiplexing. |
| Fragment Analyzer [2] | Library Quality Control | Assesses the size distribution and integrity of the final sequencing library, ensuring it meets the specifications for the sequencer. |

Building Your Automated NGS Workflow: From Library Prep to Data Generation

Troubleshooting Guides

Troubleshooting Liquid Handling Errors

Precise liquid handling is critical for NGS library preparation. Inaccurate dispensing can lead to failed runs, inconsistent coverage, and compromised data integrity.

Problem: Inconsistent NGS Library Yields

- Symptoms: Low final library concentration, high duplicate rates in sequencing data, or uneven coverage.

- Potential Causes & Solutions:

- Cause: Pipetting inaccuracy, especially with small volumes. Manual pipetting of microliter volumes is a common source of error [1].

- Solution: Regularly maintain and calibrate automated liquid handlers. For manual steps, use master mixes to reduce pipetting steps and enforce the use of calibrated pipettes [1].

- Cause: Incorrect bead-based cleanup ratios (e.g., during library purification) leading to unintended size selection or sample loss [1].

- Solution: Strictly adhere to recommended bead-to-sample ratios. Standardize mixing and incubation times across all users [1].
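Bead-to-sample ratios are always relative to the sample volume in the well, which is easy to get wrong when a ratio is applied in two steps. The sketch below computes bead volumes for a single-sided cleanup and for a double-sided size selection. The ratios shown are illustrative assumptions; the values specified by your kit take precedence.

```python
def single_sided_bead_ul(sample_ul: float, ratio: float) -> float:
    """Bead volume for a single-sided cleanup at a bead:sample ratio such as 1.8x."""
    return round(sample_ul * ratio, 2)


def double_sided_bead_ul(sample_ul: float, first_ratio: float, second_ratio: float):
    """Bead volumes for a double-sided size selection, both relative to the ORIGINAL sample volume.

    Step 1: add first_ratio x beads; large fragments bind and are discarded with the beads,
            and the supernatant is kept.
    Step 2: add beads to that supernatant so the cumulative ratio reaches second_ratio;
            target fragments bind and are later eluted, while small fragments stay in solution.
    """
    if second_ratio <= first_ratio:
        raise ValueError("second_ratio must be larger than first_ratio")
    return round(sample_ul * first_ratio, 2), round(sample_ul * (second_ratio - first_ratio), 2)


print(single_sided_bead_ul(50, 1.8))       # cleanup of a 50 uL reaction
print(double_sided_bead_ul(50, 0.6, 0.8))  # illustrative 0.6x / 0.8x selection
```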

Problem: Contamination in Sequencing Data

- Symptoms: High levels of adapter-dimers (sharp peak at ~70-90 bp on electropherogram) or foreign sequence reads [1].

- Potential Causes & Solutions:

- Cause: Carryover contamination from previous runs or cross-contamination between samples on the automated deck.

- Solution: Utilize liquid handlers with integrated contamination control features, such as HEPA filters, UV decontamination lights, and enclosed waste containers [23]. Implement rigorous deck cleaning protocols between runs.
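The adapter-dimer symptom described above can be screened for automatically before libraries are pooled. This sketch assumes the fragment analyzer trace has been exported as (size in bp, signal) pairs with marker peaks already removed; the 70-90 bp window follows the text, while the 5% flagging threshold is a hypothetical value that each lab would need to validate.

```python
def adapter_dimer_fraction(trace, window=(70, 90)):
    """Fraction of total signal falling in the adapter-dimer size window.

    `trace` is a list of (size_bp, signal) pairs with marker peaks already removed.
    """
    low, high = window
    total = sum(signal for _, signal in trace)
    if total <= 0:
        raise ValueError("Trace contains no signal")
    in_window = sum(signal for size, signal in trace if low <= size <= high)
    return in_window / total


def flag_adapter_dimers(trace, max_fraction=0.05):
    """Return (flagged, fraction); the 5% default is a hypothetical threshold."""
    fraction = adapter_dimer_fraction(trace)
    return fraction > max_fraction, round(fraction, 3)


clean = [(80, 1.0), (320, 60.0), (400, 39.0)]
dimer_heavy = [(80, 12.0), (320, 50.0), (400, 38.0)]
print(flag_adapter_dimers(clean), flag_adapter_dimers(dimer_heavy))
```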

Problem: Low-Quality Sequencing Libraries

- Symptoms: Broad or multi-peaked fragment size distributions in quality control checks.

- Potential Causes & Solutions:

- Cause: Inefficient fragmentation or enzymatic steps due to reagent or enzyme inhibitors [1].

- Solution: Ensure input nucleic acid is pure and free of contaminants. Use liquid handlers with real-time liquid-level sensing and pressure monitoring to confirm that reagents are fully aspirated and dispensed, preventing skipped steps [23].

The following diagram outlines a systematic diagnostic strategy for resolving NGS library preparation failures.

Troubleshooting Robotic System Failures

Robotic components are subject to mechanical wear and require systematic maintenance to prevent downtime.

Problem: Robot Arm Movement Errors

- Symptoms: The robotic arm fails to move, is out of sync, or cannot reach its intended positions, leading to misaligned pipetting or plate handling.

- Potential Causes & Solutions:

- Cause: Mechanical misalignment or worn-out components in joints and gears [24].

- Solution: Perform regular mechanical inspections and lubrication of moving parts as per the manufacturer's schedule [24].

- Cause: Incorrect calibration of the motion and axis systems [24].

- Solution: Recalibrate the robotic arm and all deck positions. Use systems with integrated high-precision cameras to simplify and standardize the calibration process [23].

Problem: System Generates Fault Codes

- Symptoms: The automation software displays error or fault codes, halting the workflow.

- Potential Causes & Solutions:

Troubleshooting Workflow Software Integration

Seamless integration between software systems is essential for a fully automated NGS workflow.

Problem: Incompatibility with Existing Systems

- Symptoms: Inability to transfer sample data from a Laboratory Information Management System (LIMS) to the liquid handler, or failure to export run files for downstream analysis.

- Potential Causes & Solutions:

- Cause: The automated platform lacks compatibility with existing laboratory systems and data formats [12] [25].

- Solution: Prior to purchase, verify that the automation platform supports integration with your specific LIMS and NGS analysis pipelines. Look for systems that offer API access and compatibility with common file formats like CSV for sample lists [12] [26].
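CSV sample lists are the simplest integration point between a LIMS and a liquid handler. The sketch below converts a LIMS export into a worklist and rejects exports with missing columns or duplicate sample IDs. All column names and the destination plate name are hypothetical; real LIMS export and liquid-handler worklist formats are vendor-specific.

```python
import csv
import io

# Hypothetical column names for the LIMS export; adjust to your LIMS.
REQUIRED_COLUMNS = {"sample_id", "source_well", "concentration_ng_per_ul"}
# Hypothetical worklist columns expected by the liquid-handler software.
WORKLIST_FIELDS = ["SampleID", "SourceWell", "DestPlate", "DestWell", "ConcNgPerUl"]


def lims_export_to_worklist(csv_text: str, dest_plate: str = "LIBPLATE1") -> str:
    """Convert a LIMS sample export (CSV text) into a liquid-handler worklist (CSV text)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"LIMS export is missing columns: {sorted(missing)}")

    rows, seen = [], set()
    for row in reader:
        sample_id = row["sample_id"].strip()
        if sample_id in seen:
            # A duplicated ID would break the chain of custody downstream.
            raise ValueError(f"Duplicate sample ID {sample_id} in LIMS export")
        seen.add(sample_id)
        well = row["source_well"].strip()
        rows.append({
            "SampleID": sample_id,
            "SourceWell": well,
            "DestPlate": dest_plate,
            "DestWell": well,  # one-to-one plate stamp, assumed for simplicity
            "ConcNgPerUl": row["concentration_ng_per_ul"].strip(),
        })

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=WORKLIST_FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


export = "sample_id,source_well,concentration_ng_per_ul\nS001,A1,42.0\nS002,B1,37.5\n"
print(lims_export_to_worklist(export))
```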

Problem: Failure in Sample Tracking

- Symptoms: Lost sample metadata or inability to trace results back to original samples.

- Potential Causes & Solutions:

- Cause: Poor integration between workflow software, robotics, and LIMS, breaking the chain of custody [12].

- Solution: Ensure the automated workflow software is fully integrated with LIMS for real-time tracking of samples, reagents, and process steps. This is critical for meeting regulatory compliance requirements like IVDR [12].

Frequently Asked Questions (FAQs)

1. What are the key benefits of automating NGS library preparation?

Automation significantly enhances reproducibility by standardizing protocols and eliminating human variability in pipetting [12]. It improves efficiency by increasing throughput and freeing up researcher time, and boosts accuracy by precisely dispensing small volumes, which is crucial for miniaturized reactions and cost savings [26] [25].

2. How do I choose the right automated liquid handler for my chemogenomics research?

When selecting a system, consider your required throughput (number of samples per run), the volume range (especially for low-volume dispensing), and precision needs (look for CVs <5% at microliter volumes) [26] [25]. Ensure it has features to prevent contamination and can integrate seamlessly with your existing LIMS and bioinformatics pipelines [12] [25].
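The CV criterion can be checked in-house with a simple gravimetric test: dispense the same nominal volume repeatedly onto a balance and compare the spread with the mean. A minimal sketch, assuming water at roughly 1 mg per µL; a formal gravimetric calibration would also correct for temperature and evaporation. The replicate masses are illustrative.

```python
import statistics


def dispense_cv_percent(masses_mg, density_mg_per_ul=1.0):
    """Coefficient of variation (%) of replicate dispenses measured gravimetrically."""
    volumes_ul = [m / density_mg_per_ul for m in masses_mg]
    mean_ul = statistics.mean(volumes_ul)
    sd_ul = statistics.stdev(volumes_ul)  # sample standard deviation (n - 1)
    return 100.0 * sd_ul / mean_ul


# Illustrative replicate masses for a nominal 2 uL dispense
replicates_mg = [2.0, 2.1, 1.9, 2.0]
cv = dispense_cv_percent(replicates_mg)
print(f"CV = {cv:.2f}% -> {'PASS' if cv < 5 else 'FAIL'} against a 5% criterion")
```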

3. Our automated NGS runs are showing inconsistencies between operators. How can we fix this?

This is a common issue in manual or semi-automated workflows. The solution is to standardize protocols within the automated system's software [12]. Create locked-down, validated protocols that all operators must use, and implement thorough training programs so that everyone is proficient in operating the systems and performing basic troubleshooting [12] [24].

4. What regular maintenance do automated liquid handlers require?

Regular maintenance includes calibrating pipetting heads for volume accuracy, calibrating deck positions (a built-in camera can simplify this [23]), and performing mechanical inspections of robotic arms and moving parts [24]. Also, follow manufacturer guidelines for replacing consumables like HEPA filters and UV lamps to maintain contamination control [23].

5. How can automation help our lab meet regulatory standards like IVDR or ISO 13485?

Automated systems support compliance by providing complete traceability of samples and reagents, enforcing standardized and validated protocols, and generating the necessary documentation for audits [12]. Integrated quality control tools, which can flag samples that don't meet pre-defined quality thresholds, further ensure the reliability of results in a regulated environment [12].

The Scientist's Toolkit: Key Research Reagent Solutions

The following table details essential components and their functions in an automated NGS workflow for chemogenomic research.

| Item | Function in Automated NGS Workflows |
|---|---|
| Liquid Handling System | Precisely dispenses and transfers liquid reagents and samples for library prep. Key for complex, multi-step protocols and reaction miniaturization [23] [26]. |
| Magnetic Bead Station | Integrated on the deck of liquid handlers for automated purification and size selection of libraries, replacing manual centrifugation columns [23]. |
| Cooling/Heating Blocks | Maintains specific temperatures for enzymatic reactions (e.g., ligation, PCR) during automated runs, ensuring optimal reaction conditions [23]. |
| Laboratory Information Management System (LIMS) | Tracks samples, reagents, and process steps in real-time, ensuring data integrity and traceability for reproducible and compliant workflows [12]. |
| qPCR Instrument | Used for accurate quantification of sequencing libraries pre-pooling. Some systems can be seamlessly operated from the same interface as the liquid handler [23]. |
| Variant Interpretation Software | Tertiary analysis software that links identified variants to biological and clinical annotations, enabling the creation of custom reports for chemogenomic insights [27]. |

Automated NGS Workflow Integration

The diagram below illustrates the logical relationship and data flow between the core components of an automated NGS workflow.

FAQs: Addressing Common Library Preparation Challenges

Q1: What are the primary causes of low library yield and how can they be fixed? Low library yield often stems from poor input DNA/RNA quality, inaccurate quantification, inefficient fragmentation or ligation, or over-aggressive purification steps [1]. To address this:

- Re-purify Input Sample: Ensure nucleic acid purity (260/280 ratio of ~1.8 and 260/230 > 1.8) so that enzyme inhibitors are removed [1].

- Use Fluorometric Quantification: Replace UV absorbance methods with Qubit or PicoGreen for accurate template quantification [1] [28].

- Optimize Ligation Conditions: Titrate adapter-to-insert molar ratios, use fresh ligase, and maintain optimal temperature [29] [1].

- Review Cleanup Parameters: Avoid over-drying magnetic beads and use correct bead-to-sample ratios to prevent sample loss [1].
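Titrating the adapter-to-insert ratio starts from the molar amount of insert DNA. A minimal sketch, assuming ~660 g/mol per base pair and a known mean fragment length; the 100 ng input and the candidate ratios are illustrative, and kit-recommended ratios should take precedence.

```python
def dsdna_pmol(mass_ng: float, length_bp: float) -> float:
    """Picomoles of dsDNA, assuming ~660 g/mol per base pair."""
    return mass_ng * 1000.0 / (length_bp * 660.0)


def adapter_pmol(insert_ng: float, insert_bp: float, adapter_to_insert: float) -> float:
    """Adapter amount (pmol) for a given adapter:insert molar ratio."""
    return adapter_to_insert * dsdna_pmol(insert_ng, insert_bp)


# Illustrative titration: 100 ng of ~300 bp fragments at three candidate ratios
insert_pmol = dsdna_pmol(100, 300)
print(f"Insert: {insert_pmol:.3f} pmol")
for ratio in (5, 10, 20):
    print(f"{ratio}:1 -> {adapter_pmol(100, 300, ratio):.2f} pmol adapter")
```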

Q2: How can I reduce PCR-induced bias in my library? PCR bias, which leads to uneven coverage and high duplicate rates, can be minimized by:

- Reducing PCR Cycles: Use the minimum number of cycles necessary. Kits with high-efficiency end repair, A-tailing, and adapter ligation convert more input into usable library molecules and therefore need fewer amplification cycles [28].

- Choosing Robust Enzymes: Select polymerases known to minimize amplification bias [30].

- Utilizing Unique Molecular Identifiers (UMIs): Tag individual molecules with UMIs to differentiate true variants from PCR errors [28].

- Adopting Hybridization Capture: For targeted sequencing, prefer hybridization-based enrichment over amplicon approaches, as it typically requires fewer PCR cycles and yields better uniformity [28].
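The UMI approach can be illustrated with a toy deduplication step: reads that share an alignment position, strand, and UMI are counted as copies of one original molecule. This sketch assumes exact UMI matching; dedicated tools such as UMI-tools also merge UMIs that differ by a sequencing error, which this example does not attempt.

```python
from collections import Counter


def umi_dedup_stats(reads):
    """Collapse reads into molecules by (chrom, start, strand, UMI) and report duplication.

    `reads` is an iterable of (chrom, start, strand, umi) tuples for aligned reads.
    Exact UMI matching is assumed; no error correction is applied to the UMI itself.
    """
    families = Counter(reads)  # family key -> number of reads (PCR copies)
    total = sum(families.values())
    molecules = len(families)
    return {
        "reads": total,
        "molecules": molecules,
        "duplicate_rate": round(1 - molecules / total, 3),
    }


reads = [
    ("chr1", 1000, "+", "ACGTAC"),
    ("chr1", 1000, "+", "ACGTAC"),  # PCR copy of the read above
    ("chr1", 1000, "+", "TTGCAA"),  # same position, different original molecule
    ("chr2", 500, "-", "ACGTAC"),
]
print(umi_dedup_stats(reads))
```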

Q3: What are the critical steps to prevent sample contamination and cross-contamination? Contamination risk can be significantly reduced through laboratory best practices and automation:

- Dedicate Pre-PCR Areas: Perform sample extraction and PCR setup in separate, dedicated areas to prevent amplicon contamination [30].

- Use Unique Dual Indexes (UDIs): Employ UDIs for multiplexing. Because every sample carries a unique i7/i5 combination, reads affected by index hopping carry an unexpected index pair and can be identified and discarded during demultiplexing [28].

- Automate Liquid Handling: Automated systems use disposable tips and controlled workflows to minimize the chance of carryover between samples [12] [29].

- Follow Basic Lab Hygiene: Aliquot reagents to avoid freeze-thaw cycles, wipe surfaces with appropriate solutions, and prepare fresh 70% ethanol daily for wash steps [28].
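Before a pool is loaded, the index design itself can be checked. This sketch assumes each sample's index pair is available as (i7, i5) sequences of equal length; the sequences shown are illustrative, not real kit indexes. It flags reused indexes, which defeat the purpose of unique dual indexing, and index pairs too similar to demultiplex reliably. The minimum Hamming distance of 3 is an assumption, chosen so that a read with a single sequencing error in its index still matches its own index more closely than any other.

```python
from itertools import combinations


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length index sequences differ."""
    if len(a) != len(b):
        raise ValueError("Index sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def check_udi_pool(index_pairs: dict, min_distance: int = 3) -> list:
    """Check a pool mapping sample -> (i7, i5); return a list of problems (empty if OK)."""
    problems = []
    for read_name, pos in (("i7", 0), ("i5", 1)):
        seqs = [pair[pos] for pair in index_pairs.values()]
        if len(set(seqs)) != len(seqs):
            problems.append(f"{read_name} index reused: pool is not uniquely dual-indexed")
        for (s1, p1), (s2, p2) in combinations(index_pairs.items(), 2):
            distance = hamming(p1[pos], p2[pos])
            if 0 < distance < min_distance:
                problems.append(
                    f"{read_name} indexes of {s1} and {s2} differ at only {distance} position(s)"
                )
    return problems


pool = {"S1": ("ACGTACGT", "TTGGCCAA"), "S2": ("TGCATGCA", "GGAATTCC")}
print(check_udi_pool(pool) or "Index design OK")
```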

Q4: How does automation specifically improve the reproducibility of NGS library prep? Automation enhances reproducibility by standardizing every aspect of the protocol:

- Eliminates Manual Variability: Automated liquid handling systems dispense reagents with precise volumes, removing inconsistencies introduced by manual pipetting [12] [29].

- Enforces Standardized Protocols: Robotic systems execute predefined, validated protocols with consistent timing and incubation conditions, eliminating batch-to-batch variations [12].

- Integrates Quality Control: Some automated platforms can integrate with quality control tools (e.g., omnomicsQ) to monitor sample quality in real-time, flagging deviations before they affect downstream steps [12].

Troubleshooting Guides

Common Library Preparation Failures and Solutions

Table: Troubleshooting Common NGS Library Preparation Issues

| Problem Category | Typical Failure Signals | Common Root Causes | Corrective Actions |
|---|---|---|---|
| Sample Input & Quality | Low yield; smeared electropherogram; low complexity [1] | Degraded DNA/RNA; sample contaminants (phenol, salts); inaccurate quantification [1] | Re-purify input; use fluorometric quantification (Qubit); check purity ratios [1] |
| Fragmentation & Ligation | Unexpected fragment size; sharp ~70-90 bp peak (adapter dimers) [1] | Over-/under-shearing; poor ligase performance; incorrect adapter:insert ratio [1] | Optimize fragmentation parameters; titrate adapter ratios; ensure fresh ligation reagents [29] [1] |
| Amplification & PCR | High duplicate rate; over-amplification artifacts; sequence bias [30] [1] | Too many PCR cycles; inefficient polymerase; primer exhaustion [1] | Reduce PCR cycles; use high-fidelity enzymes; employ UMIs [30] [28] |
| Purification & Cleanup | High adapter-dimer signal; sample loss; carryover of salts [1] | Wrong bead:sample ratio; over-dried beads; inadequate washing [1] | Precisely follow bead cleanup protocols; avoid over-drying beads; use fresh wash buffers [1] [28] |

Quantitative Performance Metrics for Automated Workflows

Automated NGS library preparation directly enhances key performance metrics essential for reproducible chemogenomic research.

Table: Key Performance Metrics from an Automated Targeted Sequencing Workflow [31]

| Performance Measure | Result (at 95% CI) | Significance for Reproducibility |
|---|---|---|
| Sensitivity | 98.23% | High likelihood of detecting true variants, including low-frequency mutations. |
| Specificity | 99.99% | Minimal false positives, ensuring reliable variant calls for downstream analysis. |
| Repeatability (Intra-run Precision) | 99.99% | Exceptional consistency within a single sequencing run. |
| Reproducibility (Inter-run Precision) | 99.98% | High consistency across different runs, operators, and days. |
| Accuracy | 99.99% | Overall reliability of the sequencing data generated by the automated workflow. |
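The table reports point values without the underlying counts. For orientation, the sketch below shows how such metrics are conventionally derived from variant calls compared against a reference set (true/false positives and negatives), with a 95% confidence interval from the Wilson score method. The counts are hypothetical, and [31] may have used a different interval method.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a proportion (z = 1.96 gives ~95% coverage)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z / denom * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width


def concordance_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Sensitivity, specificity and accuracy, each with a Wilson 95% interval."""
    total = tp + fp + tn + fn
    return {
        "sensitivity": (tp / (tp + fn), wilson_interval(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_interval(tn, tn + fp)),
        "accuracy": ((tp + tn) / total, wilson_interval(tp + tn, total)),
    }


# Hypothetical counts from comparing calls with a reference material
for name, (value, (low, high)) in concordance_metrics(tp=98, fp=1, tn=9999, fn=2).items():
    print(f"{name}: {value:.4f} (95% CI {low:.4f} to {high:.4f})")
```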

Workflow Visualization: Automated NGS Library Preparation

The following diagram illustrates a streamlined, automated workflow for NGS library preparation, integrating key steps from nucleic acid extraction to sequencing-ready libraries.

Automated NGS Library Prep Workflow

The Scientist's Toolkit: Essential Research Reagent Solutions

Table: Key Reagents and Kits for Automated NGS Library Preparation

| Item | Function | Application Notes |
|---|---|---|
| Magnetic Beads | Size selection and purification of nucleic acids; used in clean-up steps [30] [1] | Bead-to-sample ratio is critical. Over-drying can lead to inefficient elution and sample loss [1]. |
| Hybridization Capture Kits (e.g., xGen Hybrid Capture) | Target enrichment for sequencing specific genomic regions; used in automated targeted panels [32] | More robust than amplicon-based methods, providing better uniformity and fewer false positives [28]. |
| Unique Dual Indexes (UDIs) | Barcodes for multiplexing samples; allow accurate demultiplexing and identification of reads affected by index hopping [28] | Essential for complex, multi-sample studies. Enables pooling of dozens of libraries in a single run [28]. |
| FFPE DNA Repair Mix | Enzyme mixture to reverse DNA damage from formalin fixation [28] | Crucial for working with degraded clinical FFPE samples to reduce sequencing artifacts and recover original sequence complexity [28]. |
| Library Quantification Kits (qPCR-based) | Accurately measure concentration of amplifiable library fragments [33] | Preferred over fluorometric methods when pooling libraries, because qPCR measures only adapter-ligated, amplifiable molecules, preventing over- or under-loading [28] [33]. |
| Automated Library Prep Kits (e.g., for MGI SP-100RS) | Reagents formulated for compatibility with automated liquid handling systems [31] | Designed for reduced hands-on time and improved reproducibility on platforms like the Biomek i3 or MGISP-100 [31] [32]. |

Frequently Asked Questions (FAQs)

Q1: What are the main benefits of automating NGS sample preparation? Automating NGS sample prep significantly enhances accuracy, reproducibility, and throughput while reducing costs. It eliminates human errors associated with manual pipetting, minimizes the risk of cross-contamination, and standardizes protocols to ensure consistent results across different runs and operators [5] [12]. Furthermore, automation drastically reduces hands-on time, freeing up researchers for more complex tasks [5].

Q2: How does miniaturization of reagent volumes lead to cost savings? Miniaturization involves using nanoliter-scale liquid handling to dispense reagents. This directly conserves expensive reagents and enables the use of smaller, cheaper labware (e.g., 384-well plates). One study demonstrated that a miniaturized, automated approach could process thousands of samples weekly for less than $15 per sample [13].
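The saving follows from simple proportionality: if each reaction uses a fraction of the standard volume, the same kit serves proportionally more samples. The sketch below uses hypothetical kit prices and adds a fixed per-sample cost (plates, tips, QC) that does not shrink with volume; it also ignores dead volume. Both effects explain why real savings are smaller than the volume reduction alone suggests.

```python
def per_sample_cost(kit_price, reactions_per_kit, volume_fraction, fixed_cost_per_sample=0.0):
    """Per-sample cost when each reaction uses `volume_fraction` of the standard volume.

    Reagent cost scales with volume; `fixed_cost_per_sample` (plates, tips, QC) does not.
    Dead volume in reservoirs is ignored in this simplified model.
    """
    reagent_cost = kit_price / reactions_per_kit * volume_fraction
    return round(reagent_cost + fixed_cost_per_sample, 2)


# Hypothetical kit: $2,400 for 96 standard reactions, plus $1.50 fixed cost per sample
for fraction in (1.0, 0.5, 0.1):
    print(f"{fraction:.0%} volume: ${per_sample_cost(2400, 96, fraction, 1.5):.2f} per sample")
```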

Q3: My automated workflow is producing inconsistent library yields. What could be the cause? Inconsistent yields often point to issues with liquid handling or reagent integration. First, verify that your liquid handler is correctly calibrated, as imprecise dispensing will directly affect reaction efficiency [7]. Second, ensure all reagents are thoroughly mixed and at the correct temperature before the run begins. Contamination from previous runs can also be a factor, so implement regular cleaning procedures [20].

Q4: What are the first steps in transitioning from a manual to an automated NGS workflow? A successful transition requires careful planning. Begin by conducting a thorough assessment of your laboratory's specific needs, including sample volume, required throughput, and existing bottlenecks [12]. Next, select an automation platform that integrates seamlessly with your Laboratory Information Management System (LIMS) and downstream analysis pipelines. Finally, invest in comprehensive, hands-on training for personnel to ensure a smooth adoption of the new system and protocols [12].

Q5: How can I ensure my automated NGS workflow is reproducible for chemogenomic research? Reproducibility is achieved through rigorous standardization. Use automated systems to enforce strict adherence to validated protocols, eliminating user-to-user variation [5] [12]. Implement real-time quality control tools to monitor sample quality and flag deviations immediately [12]. Finally, choose automation platforms that provide complete traceability for regulatory compliance, which is crucial for chemogenomic reproducibility research [13] [12].

Troubleshooting Guides

Issue 1: Low or Inconsistent Sequencing Data Output

| Possible Cause | Recommended Action | Prevention Strategy |
|---|---|---|
| Error in library quantification | Re-quantify libraries with a fluorometric method (e.g., Qubit), which is more accurate than spectrophotometry. | Standardize quantification and quality control steps across all automated runs [12]. |
| Pipetting inaccuracies in automation | Check liquid handler calibration; verify nozzle and tip performance for consistent nanoliter dispensing [13]. | Implement regular maintenance and calibration schedules for all automated equipment. |
| Poor template preparation | Verify the quantity and quality of the input library and template preparations before sequencing [20]. | Use integrated systems that automate the entire workflow from sample-in to library-out to minimize variability [13]. |

Issue 2: High Contamination or Background Noise in Data

| Possible Cause | Recommended Action | Prevention Strategy |
|---|---|---|
| Carryover contamination | Perform consumable-free clean-ups or use fresh tips for every sample transfer [5]. | Use closed, automated systems to minimize environmental exposure and human intervention [5]. |
| Contaminated reagents | Prepare fresh reagents and aliquot into single-use volumes. | Use automated quality control to flag samples that do not meet pre-defined quality thresholds before sequencing [12]. |

Issue 3: Automated Liquid Handling Failures

| Possible Cause | Recommended Action | Prevention Strategy |
|---|---|---|
| Clogged nozzles | Execute a line clear procedure and perform routine cleaning with recommended solutions (e.g., isopropanol) [20]. | Implement a regular cleaning and maintenance schedule as per the manufacturer's instructions. |
| Incorrect worktable design | Re-configure the worktable layout to ensure sufficient deck space and correct placement of labware [7]. | Invest in a universal worktable configuration with a user-friendly GUI that visually confirms correct deck setup [7]. |
| Software or connectivity issues | Restart the instrument and connected servers; check for and install any required software updates [20]. | Choose automation software that is modular and does not require extensive programming expertise to operate [7]. |
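A deck-setup check can also be scripted, for example by comparing the labware identified at each position (by barcode scan or camera) with the layout the protocol expects. The layout, position names, and labware names below are hypothetical.

```python
# Hypothetical expected layout: deck position -> labware type
EXPECTED_DECK = {
    "P1": "tips_200ul",
    "P2": "sample_plate_96",
    "P3": "reagent_reservoir",
    "P4": "magnetic_module",
}


def check_deck(scanned: dict) -> list:
    """Compare scanned labware with the expected layout; return human-readable errors."""
    errors = []
    for position, expected in EXPECTED_DECK.items():
        found = scanned.get(position)
        if found is None:
            errors.append(f"{position}: expected {expected}, found nothing")
        elif found != expected:
            errors.append(f"{position}: expected {expected}, found {found}")
    for position in sorted(scanned.keys() - EXPECTED_DECK.keys()):
        errors.append(f"{position}: unexpected labware {scanned[position]}")
    return errors


# Example: plate and reservoir swapped by the operator
scan = dict(EXPECTED_DECK, P2="reagent_reservoir", P3="sample_plate_96")
for problem in check_deck(scan):
    print(problem)
```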

Quantitative Data on Automation and Miniaturization

The following table summarizes key quantitative benefits of implementing automation and miniaturization in NGS workflows, as evidenced by published studies.

Table 1: Impact of Automation and Miniaturization on NGS Workflows

| Metric | Manual Workflow | Automated & Miniaturized Workflow | Source / Protocol |
|---|---|---|---|
| Hands-on Time | ~3 hours | < 15 minutes | Sequencing-ready DNA prep platforms [13] |
| Cost per Sample | High | < $15 per sample | COVseq protocol using I.DOT Liquid Handler [13] |
| Reagent Consumption | High | Reduced via nanoliter dispensing | Non-contact, low-volume dispensing [13] |
| Data Reproducibility | Variable, user-dependent | High, minimal batch effects | Automated library prep systems [5] |

Experimental Protocols

Protocol 1: Automated, Miniaturized Library Preparation for Genomic Surveillance

This protocol, adapted from Simonetti et al. (2021), outlines a cost-effective method for large-scale sequencing, such as viral genomic surveillance [13].

- Sample Input: Use low-input samples (e.g., 5-10 ng of DNA).

- Liquid Handling: Employ a non-contact liquid handler (e.g., I.DOT Liquid Handler) to dispense library construction reagents in nanoliter volumes into a 384-well plate.

- Library Construction: Automate key steps, including enzymatic fragmentation, end-repair, adapter ligation, and PCR amplification, using an integrated workstation.

- Purification: Perform magnetic bead-based clean-up using an integrated device (e.g., G.PURE NGS Clean-Up Device).

- Quality Control: Pool the libraries and quantify using a fluorometric method. The resulting libraries are ready for sequencing.
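Working in 384-well plates makes sample-to-well mapping a common source of tracking errors. The sketch converts a zero-based sample index into a well name on a 16-row (A-P) by 24-column plate. Whether samples are filled column-wise or row-wise is an assumption that must match both the liquid-handler method and the LIMS record.

```python
import string

ROWS = string.ascii_uppercase[:16]  # rows A-P
N_COLS = 24


def index_to_well(index: int, column_major: bool = True) -> str:
    """Map a zero-based sample index to a 384-well name such as 'A1' or 'P24'."""
    if not 0 <= index < len(ROWS) * N_COLS:
        raise ValueError(f"Index {index} is outside a 384-well plate")
    if column_major:  # A1, B1, ..., P1, A2, ...
        row, col = index % len(ROWS), index // len(ROWS)
    else:  # A1, A2, ..., A24, B1, ...
        row, col = divmod(index, N_COLS)
    return f"{ROWS[row]}{col + 1}"


print([index_to_well(i) for i in (0, 15, 16, 383)])
```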

Protocol 2: Open-Source, Automated Multiplexed Imaging (PRISMS)

This protocol, based on Zhang et al. (2025), describes an automated pipeline for spatial omics, demonstrating modularity and cost reduction [34].

- Automated Staining: Use a liquid handling robot with thermal control (e.g., Opentrons OT-2) to perform rapid, cyclic staining of RNA or protein targets on multiple tissue slides or coverslips.

- Modular Mounting: Place samples in 3D-printed or laser-cut acrylic holders compatible with various microscopes.

- Automated Imaging: Execute a custom Python (Jupyter notebook) script that:

- Writes commands for microscope software (e.g., Nikon NIS Elements) for automated acquisition.

- Computes autofocus correction for Z-drift.

- Captures individual fields of view (FOVs), tiled scans, or a combination.

- Post-Processing: The Python script generates a Fiji/ImageJ macro to stitch overlapping images and, if required, perform super-resolved reconstructions (e.g., SRRF).
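The tiled-scan step amounts to computing stage positions for a grid of overlapping fields of view. The sketch below is not the PRISMS code; it is a hypothetical illustration that generates a serpentine (snake) path, which avoids a long return move at the end of each row. FOV dimensions and the 10% overlap are assumptions; the overlap is what later allows neighboring tiles to be stitched in Fiji/ImageJ.

```python
def serpentine_tiles(origin_x_um, origin_y_um, fov_w_um, fov_h_um, n_cols, n_rows, overlap=0.1):
    """Stage (x, y) positions in um for a serpentine tiled scan.

    Adjacent FOVs overlap by the given fraction of the FOV width/height.
    Even rows run left to right, odd rows right to left.
    """
    step_x = fov_w_um * (1 - overlap)
    step_y = fov_h_um * (1 - overlap)
    positions = []
    for r in range(n_rows):
        cols = range(n_cols) if r % 2 == 0 else reversed(range(n_cols))
        for c in cols:
            positions.append((round(origin_x_um + c * step_x, 2), round(origin_y_um + r * step_y, 2)))
    return positions


# Illustrative 3 x 2 grid of 100 um FOVs with 10% overlap
for x, y in serpentine_tiles(0, 0, 100, 100, n_cols=3, n_rows=2):
    print(x, y)
```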

Workflow Diagrams

Automated NGS Library Prep

PRISMS Multiplexed Imaging

The Scientist's Toolkit

Table 2: Key Research Reagent Solutions for Automated NGS

| Item | Function in Automated Workflows |
|---|---|
| Non-Contact Liquid Handler | Precisely dispenses nanoliter volumes of reagents for library prep, enabling miniaturization and reducing costs [13]. |
| Magnetic Bead-Based Clean-Up Kits | Used in automated systems for rapid and consistent purification of nucleic acids during library preparation steps [13]. |
| Sequencing-Ready DNA Prep Kits | Integrated reagent kits designed for fully automated, "sample-in, library-out" workflows, minimizing hands-on time [13]. |
| Open-Source Control Software | Python-based scripts (e.g., PRISMS) that customize and control laboratory instruments for tailored, automated assays [34]. |
| External Quality Assessment (EQA) Panels | Standardized samples used to validate and ensure cross-laboratory consistency and accuracy of automated NGS workflows [12]. |

Troubleshooting Guides

Guide 1: Resolving Data Transfer Failures Between Sequencer and LIMS

Q: The automated data transfer from our NextSeq 550 system to Clarity LIMS has failed. The run is complete, but the data is not appearing in the LIMS. What are the first steps I should take?

A: This is often a disruption in the automation trigger. Follow these steps to diagnose the issue [35]:

- Check the Integration Version: Confirm the version of the installed Illumina NextSeq Integration Package by running the command `rpm -qa | grep -i nextseq` from the Clarity LIMS server console [35].

- Review Log Files: If the error involves data parsing or missing results, check the `NextSeqIntegrator.log` file, typically located at `/opt/gls/clarity/extensions/Illumina_NextSeq/v2/SequencingService/` [35].

- Investigate Automation Worker: Refer to the "Troubleshooting Automation Worker" section in your Clarity LIMS administration documentation for broader issues with automation triggers [35].

Q: For a NovaSeq 6000, the automated run step starts but does not complete. How can I find more details?

A: You can access detailed logging information directly from the Clarity LIMS interface [36]:

- Log in to Clarity LIMS and locate the in-progress step via the "Lab View" in the "Recent Activities" pane or by searching with the Library Tube or Flowcell barcode [36].

- On the "Record Details" screen, find the "Sequencing Log" multiline text field. This log contains specific information about the run process [36].

- If the interface does not provide enough information, review the `sequencer-api.log` file on the server for deeper technical details [36].
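Both log checks above come down to searching a text log for error entries. The sketch below assumes that problem lines carry level keywords such as ERROR or FATAL, or contain Java-style exception names; the actual format of `NextSeqIntegrator.log` and `sequencer-api.log` is not documented here, so the pattern may need adjusting.

```python
import re
from pathlib import Path

# Assumed markers of a problem line; adjust to the real log format.
ERROR_PATTERN = re.compile(r"\b(?:ERROR|FATAL)\b|Exception")


def find_error_lines(log_text: str):
    """Return (line_number, line) pairs for lines that look like errors."""
    return [
        (number, line)
        for number, line in enumerate(log_text.splitlines(), start=1)
        if ERROR_PATTERN.search(line)
    ]


if __name__ == "__main__":
    log_path = Path(
        "/opt/gls/clarity/extensions/Illumina_NextSeq/v2/SequencingService/NextSeqIntegrator.log"
    )
    if log_path.exists():
        for number, line in find_error_lines(log_path.read_text(errors="replace")):
            print(f"{number}: {line}")
```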

Guide 2: Troubleshooting Bioinformatics Pipeline Failures at the LIMS Handoff

Q: Our bioinformatics pipeline has failed with a "Foreign key constraint violation" error. What does this mean and how can it be fixed?

A: This technical error often has a simple, non-technical cause: the sample IDs in the sequencing file do not match any samples registered for the experiment in your LIMS [37]. This is a common sample tracking issue.

- Actionable Solution: Instead of a generic SQL error, a well-designed pipeline would display: "Sample ID ABC123 not found in experiment registry. Check [Your Sample Registry, e.g., Benchling] or contact the data team if this sample should exist." [37]. This message points you directly to the problem—a sample ID mismatch—and tells you exactly what to do next.
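A minimal sketch of that behavior: validate sample IDs against the registry before any database insert, and raise an error whose message names the missing IDs and the next step. The exception class, function name, and default registry name are hypothetical.

```python
class SampleNotRegisteredError(Exception):
    """Raised when sequenced samples are absent from the experiment registry."""


def validate_sample_ids(sequenced_ids, registry_ids, registry_name="your sample registry"):
    """Fail early, with an actionable message, if any sequenced sample is unregistered."""
    missing = sorted(set(sequenced_ids) - set(registry_ids))
    if missing:
        label = "Sample ID" if len(missing) == 1 else "Sample IDs"
        raise SampleNotRegisteredError(
            f"{label} {', '.join(missing)} not found in experiment registry. "
            f"Check {registry_name} or contact the data team if the sample(s) should exist."
        )


try:
    validate_sample_ids(["ABC123", "S1"], ["S1", "S2"], registry_name="Benchling")
except SampleNotRegisteredError as err:
    print(err)
```

Running this check before the insert turns an opaque foreign-key violation into a message a bench scientist can act on.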

Q: The pipeline fails a QC step. What is the most likely cause and what are the next steps?

A: A QC failure usually indicates an issue with the raw sequencing data or sample quality.

- Actionable Solution: Look for a specific error like, "QC failed: 12% of reads below quality threshold (expected <5%). Contact the sequencing core." [37]. This tells you the metric that failed, the observed value, the expected value, and the responsible team to contact. This is preferable to a non-actionable "ValueError" which provides no guidance [37].
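The QC message above can be generated directly from the data. This sketch assumes FASTQ quality strings with Phred+33 encoding, treats a read as low quality when its mean Phred score is below 20, and reuses the 5% limit from the example message; both thresholds are illustrative.

```python
class QCFailure(Exception):
    """QC failure that needs human review rather than an automatic retry."""


def mean_phred(quality: str, offset: int = 33) -> float:
    """Mean Phred score of one read from its FASTQ quality string (Phred+33 assumed)."""
    return sum(ord(ch) - offset for ch in quality) / len(quality)


def check_read_quality(qualities, min_mean_q=20, max_low_fraction=0.05):
    """Raise QCFailure with an actionable message if too many reads are low quality."""
    low = sum(1 for q in qualities if mean_phred(q) < min_mean_q)
    fraction = low / len(qualities)
    if fraction > max_low_fraction:
        raise QCFailure(
            f"QC failed: {fraction:.0%} of reads below quality threshold "
            f"(expected <{max_low_fraction:.0%}). Contact the sequencing core."
        )
    return fraction


try:
    check_read_quality(["####"] * 12 + ["IIII"] * 88)  # '#' = Q2, 'I' = Q40
except QCFailure as err:
    print(err)
```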

Guide 3: Addressing Data Quality and Sample Tracking Errors

Q: I am concerned about the "Garbage In, Garbage Out" (GIGO) principle. What are the key data quality pitfalls in automated NGS workflows?

A: Ensuring data quality is critical, as errors at the start can corrupt all downstream analysis [38]. Common pitfalls include:

- Sample Mislabeling: A survey found up to 5% of samples in clinical sequencing labs had labeling or tracking errors. This can lead to incorrect scientific conclusions or, in clinical settings, misdiagnoses [38].

- Batch Effects: Systematic technical differences between groups of samples processed at different times can mimic biological signals. This requires careful experimental design and statistical correction [38].

- Technical Artifacts: PCR duplicates, adapter contamination, and systematic sequencing errors can be mistaken for genuine biological variation. Tools such as FastQC, Picard, and Trimmomatic are essential for identifying these issues [38].

Proactive Methodologies for Ensuring Data Quality [38]:

- Implement Standard Operating Procedures (SOPs): Use detailed, validated protocols for every step from sample collection to data analysis.

- Automate Where Possible: Use automated sample handling and LIMS tracking to reduce human error in repetitive tasks.

- Establish QC Checkpoints: Monitor metrics like Phred scores, read length distributions, and alignment rates at every stage of the pipeline.

- Perform Data Validation: Check that data makes biological sense (e.g., gene expression profiles match known tissue types) and use cross-validation with alternative methods like qPCR.

Frequently Asked Questions (FAQs)

Q: What are the core benefits of integrating automation with a LIMS for NGS workflows?

A: Seamless integration creates a unified digital backbone for the lab, offering [39] [40]:

- Elimination of Manual Data Entry: Direct instrument integration automatically captures test results and parameters, saving time and preventing transcription errors [40].

- Complete Traceability: Track a sample and all its derivatives (extracted DNA, libraries, etc.) from submission through testing, analysis, and reporting with a full audit trail [39].

- Workflow Automation: Automate repetitive tasks like sample registration, QC checking, and report generation, allowing scientists to focus on complex analysis [40].

- Enhanced Reproducibility: Enforces standardized procedures and captures all data and metadata, which is crucial for chemogenomic reproducibility research [39].

Q: We are planning a new LIMS implementation. What are the best practices to ensure successful integration with our automated systems?

A: A successful implementation hinges on careful planning [41] [40]:

- Define Clear Goals: Start with specific, measurable objectives (e.g., "reduce data entry time by 30%") rather than vague desires to "improve efficiency" [41].

- Create a Detailed Requirements List: Have a complete understanding of your lab's processes and the data types/formats the LIMS must handle. Focus on main requirements first [40].

- Avoid Over-Customization: Stick to the must-have features and use a vendor that offers them. Excessive customizations drive up costs and complexity [41].

- Allocate Proper Resources: Implementation is a two-team effort involving both the vendor's specialists and your own lab staff to ensure the system fits daily workflows [41].

- Plan for Data Migration: Carefully plan and execute the transfer of data from legacy systems to ensure data integrity and compliance [41].

Q: How can we make our bioinformatics pipelines more user-friendly and easier for scientists to debug?

A: The key is to translate technical failures into actionable, scientific context [37]. Build these elements into your pipelines:

- Clear Error Messages: Errors should state what happened and why in scientific terms, and suggest a clear next step. For example, "Missing replicate numbers for 3 samples in plate P2024-089. Replicates required for statistical analysis." [37].

- Comprehensive Logging: Logs should tell a story with timestamps, input files, sample counts, and quality metrics so scientists can reconstruct what happened [37].

- Sensible Retry Logic: Configure the pipeline to retry after transient errors like network glitches, but not for failed QC, which requires human judgment [37].
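The error-message and retry guidance can be combined in one wrapper around each pipeline step. In this sketch, `TransientError`, `QCFailure`, and `run_with_retry` are hypothetical names. Transient failures are retried with exponential backoff, QC failures are logged and re-raised immediately for human review, and any other exception propagates unchanged on the assumption that unknown errors should not be retried blindly.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Recoverable infrastructure problem, e.g. a dropped network connection."""


class QCFailure(Exception):
    """Scientific failure that needs human judgment; never retried automatically."""


def run_with_retry(step: Callable[[], T], step_name: str, max_attempts: int = 3,
                   base_delay_s: float = 2.0,
                   log: Callable[[str], None] = print) -> T:
    """Run one pipeline step, retrying only transient errors with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except QCFailure as exc:
            # Re-running cannot rescue a sample that failed QC: stop and hand it to a person.
            log(f"[{step_name}] QC failure, stopping for review: {exc}")
            raise
        except TransientError as exc:
            if attempt == max_attempts:
                log(f"[{step_name}] giving up after {attempt} attempts: {exc}")
                raise
            delay = base_delay_s * 2 ** (attempt - 1)
            log(f"[{step_name}] attempt {attempt} failed ({exc}); retrying in {delay:.1f} s")
            time.sleep(delay)
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

A step that detects missing replicates would raise `QCFailure("Missing replicate numbers for 3 samples in plate P2024-089. Replicates required for statistical analysis.")`, so the scientist sees the actionable message once instead of three identical retries.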

Workflow and Data Flow Diagrams

[Diagram: NGS Integration Architecture]

[Diagram: Error Resolution Workflow]

Research Reagent Solutions

The following table details key materials and digital solutions essential for robust and reproducible automated NGS workflows.

| Item Name | Type | Function in Automated Workflow |
|---|---|---|
| GLUE Integration Engine [39] | Software/Data Infrastructure | Acts as a data cloud management solution; standardizes data models and enables seamless connectivity between over 200 laboratory instruments, data sources, and bioinformatics tools via API, SFTP, ASTM, and HL7 protocols [39]. |
| LabWare LIMS [42] | Enterprise Software Platform | A highly configurable LIMS designed for complex lab workflows. Provides robust sample lifecycle management, instrument integration, and compliance features (21 CFR Part 11, GLP) for large-scale, reproducible operations [42]. |
| Clarity LIMS [35] [36] | Software Platform | Illumina's web-based LIMS, commonly integrated with NGS platforms like NextSeq and NovaSeq. Manages sample tracking, sequencing runs, and automated data transfer from instrument to analysis [35] [36]. |
| FastQC [38] | Bioinformatics Tool | Provides quality control metrics for raw sequencing data (e.g., Phred scores, GC content). Used as an initial checkpoint to prevent "garbage in, garbage out" by identifying issues in sequencing runs or sample prep [38]. |
| Genome Analysis Toolkit (GATK) [38] | Bioinformatics Toolkit | A widely used toolkit for variant discovery in high-throughput sequencing data. Its Best Practices workflows provide detailed recommendations for variant quality assessment and filtering, which is critical for data integrity in chemogenomic research [38]. |
| Electronic Lab Notebook (ELN) [39] [42] | Software Module | Integrated within modern LIMS platforms to digitally record methods, protocols, and observations. Ensures procedural reproducibility and creates a full audit trail for regulated environments [39] [42]. |
| OncoKB / PharmGKB [39] | Knowledge Database | Curated databases of actionable genomic variants and drug-gene relationships. Integration with the bioinformatics pipeline enables automated therapeutic interpretation of variant data for clinical reporting [39]. |
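FastQC writes a tab-separated `summary.txt` inside each report, with one line per module giving the status, the module name, and the input file name. That makes it straightforward to gate a pipeline on this initial checkpoint. The sketch assumes that format; the set of modules treated as blocking (`CRITICAL_MODULES`) is a hypothetical lab policy, not a FastQC default.

```python
import csv
from typing import Iterable


def parse_fastqc_summary(lines: Iterable[str]) -> dict[str, str]:
    """Map FastQC module name -> status ('PASS', 'WARN' or 'FAIL')."""
    statuses: dict[str, str] = {}
    for row in csv.reader(lines, delimiter="\t"):
        if len(row) >= 2:  # skip blank or malformed lines
            status, module = row[0].strip(), row[1].strip()
            statuses[module] = status
    return statuses


def blocking_failures(statuses: dict[str, str], critical_modules: set[str]) -> list[str]:
    """Modules that FAILed and that the lab has decided must stop the run."""
    return sorted(m for m, s in statuses.items() if s == "FAIL" and m in critical_modules)


# Hypothetical policy: which FAILs halt the pipeline versus only raise a warning.
CRITICAL_MODULES = {"Per base sequence quality", "Adapter Content"}
```

In a pipeline, an open file handle can be passed directly, e.g. `parse_fastqc_summary(open(path))`, and a non-empty result from `blocking_failures` would stop downstream alignment for that sample.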

Troubleshooting Guides & FAQs for Automated NGS Workflows

Oncology Research

FAQ: Our automated variant calling in oncology panels shows inconsistent results between runs. How can we improve reproducibility?

Inconsistent variant calling often stems from pre-analytical variables. Key steps to improve reproducibility include:

- Standardize Input Quality: Ensure all samples meet minimum quality thresholds (e.g., DV200 > 30% for FFPE RNA) before entering the automated workflow. Use fluorometric quantification (Qubit) instead of absorbance to accurately quantify usable nucleic acids [1].

- Automate Library Quantification: Use automated, qPCR-based library quantification instead of manual methods to ensure precise, equimolar pooling of libraries, which is critical for uniform coverage [12] [15].

- Implement Real-Time QC: Integrate a quality control solution like omnomicsQ to monitor samples in real-time and flag those that fall below pre-defined quality thresholds before downstream analysis [12].
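Equimolar pooling depends on working in molar units. The sketch below converts a mass concentration to nM using the common approximation of 660 g/mol per base pair of dsDNA, then computes the volume of each library that contributes the same number of molecules (1 nM = 1 fmol/µL). The function names and the `fmol_per_library` target are illustrative; molarities already obtained by qPCR can be passed straight to `equimolar_volumes`.

```python
def ng_per_ul_to_nm(conc_ng_ul: float, mean_fragment_bp: float) -> float:
    """Convert a dsDNA mass concentration to molarity, assuming 660 g/mol per bp."""
    return conc_ng_ul * 1e6 / (660.0 * mean_fragment_bp)


def equimolar_volumes(molarities_nm: dict[str, float], fmol_per_library: float) -> dict[str, float]:
    """Volume (µL) of each library that contributes the same number of molecules.

    Since 1 nM = 1 fmol/µL, volume = fmol / nM.
    """
    volumes = {}
    for library, nm in molarities_nm.items():
        if nm <= 0:
            raise ValueError(f"{library}: molarity must be positive")
        volumes[library] = fmol_per_library / nm
    return volumes
```

For example, a 3.3 ng/µL library with a 500 bp mean fragment size is 10 nM, so contributing 20 fmol takes 2 µL, while a 4 nM library needs 5 µL.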

FAQ: We are observing high duplicate read rates in our automated hybrid capture workflows for cancer genomics. What is the cause?

High duplication rates often indicate issues early in the workflow, frequently related to insufficient library complexity. Common causes and solutions are summarized below [1]:

| Cause | Mechanism | Corrective Action |
|---|---|---|
| Low Input DNA | Inadequate starting material reduces library complexity, leading to over-amplification of fewer original molecules. | Re-quantify input DNA with a fluorometric method; ensure input mass meets the protocol's minimum requirement. |
| Over-amplification | Too many PCR cycles during library amplification preferentially amplify a subset of fragments. | Optimize and reduce the number of PCR cycles in the automated protocol; use the minimum necessary for detection. |
| Inefficient Purification | Incomplete removal of primers and adapter dimers can lead to their over-representation in the final library. | Review and adjust automated bead-based cleanup parameters on your liquid handler, such as the bead-to-sample ratio [1]. |
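The duplication rate is the fraction of reads that do not represent a new original molecule. The simplified sketch below treats reads that share chromosome, 5' alignment position, and strand as duplicates. Tools such as Picard MarkDuplicates apply more complete criteria (for example, considering both mates of a read pair), so this is an approximation for understanding the metric, not a replacement for them.

```python
from collections import Counter

# (chromosome, 5' alignment position, strand): a simplified duplicate key
ReadKey = tuple[str, int, str]


def duplication_summary(read_keys: list[ReadKey]) -> dict[str, float]:
    """Duplicate fraction (1 - unique / total) and mean copies per unique key."""
    counts = Counter(read_keys)
    total = sum(counts.values())
    unique = len(counts)
    return {
        "total_reads": total,
        "unique_keys": unique,
        "duplicate_fraction": 1.0 - unique / total if total else 0.0,
        # High values here are the signature of low complexity or over-amplification.
        "mean_copies_per_key": total / unique if unique else 0.0,
    }
```

Tracking `mean_copies_per_key` across runs helps distinguish the causes in the table: it rises when input is low or PCR cycles are excessive, because fewer unique molecules are being copied more times.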

Infectious Disease Research

FAQ: How can we improve the detection of low-abundance pathogens in metagenomic sequencing on an automated system?

Sensitivity in metagenomic sequencing is highly dependent on reducing background and maximizing the yield of microbial sequences.

- Minimize Cross-Contamination: Automated liquid handlers with disposable tips are excellent for reducing cross-contamination between samples, which is crucial for avoiding false positives [12].

- Reduce Host Nucleic Acids: Incorporate an automated host depletion step (e.g., using probes to remove human rRNA and DNA) into your workflow to enrich for microbial sequences.

- Optimize Fragmentation: Verify that the automated fragmentation settings (e.g., sonication time, enzymatic digestion) are calibrated to produce the desired insert size for your platform, as over-fragmentation can bias GC-rich microbial genomes [1].

FAQ: Our automated RNA library prep for transcriptomic studies of pathogens yields low. What should we check?

Low yield in RNA library prep can halt a project. Follow this diagnostic flowchart to identify the root cause.

Single-Cell Sequencing

FAQ: Our automated single-cell RNA-seq workflow shows high levels of ambient RNA contamination. How can we mitigate this?

Ambient RNA is a common issue in droplet-based single-cell workflows. Automation can both introduce and help solve this problem.

- Review Reagent Dispensing: Ensure the liquid handler is calibrated to dispense cell suspension and reagents smoothly and accurately. Aggressive pipetting can lyse cells, releasing RNA into the solution [12].

- Incorporate Enzymatic Cleanup: Use an automated platform to integrate enzymatic cleanup steps (e.g., nuclease treatment of the cell suspension) that degrade free-floating RNA without affecting RNA inside intact cells.

- Utilize Software Solutions: Employ bioinformatic tools post-sequencing (e.g., SoupX or CellBender) to estimate the ambient RNA profile and subtract its contribution from the gene-by-cell count matrix.
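Computational ambient RNA correction can be illustrated with a simplified version of the idea behind tools such as SoupX: estimate the ambient ("soup") expression profile from empty droplets, then subtract a contamination fraction's worth of that profile from each cell. In this sketch the contamination fraction is an assumed input, whereas dedicated tools estimate it from the data and handle rounding and edge cases more carefully.

```python
def ambient_profile(empty_droplets: list[list[int]]) -> list[float]:
    """Per-gene share of all counts found in empty droplets (the ambient 'soup')."""
    if not empty_droplets:
        raise ValueError("need at least one empty droplet")
    n_genes = len(empty_droplets[0])
    totals = [sum(droplet[g] for droplet in empty_droplets) for g in range(n_genes)]
    grand_total = sum(totals)
    if grand_total == 0:
        raise ValueError("empty droplets contain no counts")
    return [t / grand_total for t in totals]


def subtract_ambient(cell_counts: list[int], profile: list[float],
                     contamination: float) -> list[float]:
    """Remove the expected ambient counts from one cell's gene counts.

    contamination: assumed fraction (0-1) of this cell's counts that are ambient.
    Results are clipped at zero so no gene ends up with negative counts.
    """
    if not 0.0 <= contamination < 1.0:
        raise ValueError("contamination must be in [0, 1)")
    expected_ambient_total = contamination * sum(cell_counts)
    return [max(0.0, c - expected_ambient_total * p)
            for c, p in zip(cell_counts, profile)]
```

Genes that dominate the soup (often highly expressed transcripts released by lysed cells) lose the most counts, which is exactly where ambient contamination distorts cell-type signatures.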

FAQ: Cell throughput in our automated single-cell sample loading is lower than expected.

This is often a hardware or fluidics issue.

- Check for Clogs: Inspect the fluidics lines and nozzles of the automated dispenser for partial clogs.

- Verify Cell Concentration and Viability: Ensure your input cell suspension is at the optimal concentration and has high viability. Clumps of dead cells can easily clog microfluidic chips.

- Re-calibrate Pressure/Vacuum Settings: The pressure or vacuum settings on the instrument may need re-calibration to ensure smooth and continuous flow of cells.
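Verifying concentration and viability before loading is simple arithmetic that can be automated. The sketch assumes a standard hemocytometer, where each large square holds 0.1 µL (hence the ×10⁴ factor to cells/mL). `MIN_VIABILITY` and `TARGET_CELLS_PER_UL` are placeholder values to be replaced with your instrument vendor's specifications.

```python
MIN_VIABILITY = 0.85                   # placeholder; use your platform's specification
TARGET_CELLS_PER_UL = (700.0, 1200.0)  # placeholder loading window


def concentration_per_ml(counts_per_square: list[int], dilution_factor: float) -> float:
    """Hemocytometer estimate: mean count per large square x dilution x 10^4."""
    return sum(counts_per_square) / len(counts_per_square) * dilution_factor * 1e4


def check_suspension(live: list[int], dead: list[int], dilution_factor: float) -> list[str]:
    """Return the problems found; an empty list means the suspension can be loaded."""
    total_live, total_dead = sum(live), sum(dead)
    if total_live + total_dead == 0:
        return ["no cells counted"]
    issues = []
    viability = total_live / (total_live + total_dead)
    if viability < MIN_VIABILITY:
        issues.append(f"viability {viability:.0%} below {MIN_VIABILITY:.0%}; consider dead-cell removal")
    live_per_ul = concentration_per_ml(live, dilution_factor) / 1000.0
    low, high = TARGET_CELLS_PER_UL
    if not low <= live_per_ul <= high:
        issues.append(f"{live_per_ul:.0f} live cells/µL outside {low:.0f}-{high:.0f}; adjust dilution")
    return issues


def loading_volume_ul(target_cells: int, cells_per_ml: float) -> float:
    """Volume of suspension that contains the target number of cells."""
    return target_cells / cells_per_ml * 1000.0
```

Running this check before every load makes low viability, a common source of clumps and clogs, visible before the chip is used.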

The Scientist's Toolkit: Key Research Reagent Solutions

The following table details essential reagents and their functions in automated NGS workflows for the featured application areas [32].

| Reagent Solution | Function in Automated Workflow |
|---|---|
| Archer FUSIONPlex | Targeted RNA-based assay for gene fusion detection in oncology, automated on platforms like the Biomek i3 [32]. |
| VARIANTPlex | Targeted DNA-based assay for mutation detection in oncology, optimized for automated liquid handling to ensure reproducibility [32]. |
| xGen Hybrid Capture | Solution for enriching specific genomic regions (e.g., for infectious disease pathogen detection or exome sequencing) in an automated, high-throughput format [32]. |
| Automated Library Prep Kits | Formulated for reduced hands-on time and consistent performance with robotic systems, covering applications from DNA-seq to single-cell RNA-seq [12] [15]. |
| Pooled Barcoded Primers | Enable multiplexing of hundreds of samples by adding sample-specific barcodes (and, in some designs, unique molecular identifiers) during automated library construction, crucial for single-cell and high-throughput projects [12]. |

Detailed Experimental Protocol: Automated Targeted NGS for Oncology

This protocol outlines the automated preparation of libraries for targeted sequencing (e.g., using VARIANTPlex) on a benchtop liquid handler like the Biomek i3 [32].

Workflow Overview: The process transforms extracted DNA into a sequencing-ready library through a series of automated steps.

Step-by-Step Methodology:

Input DNA Quality Control (Manual):

- Quantify DNA using a fluorometric method (e.g., Qubit dsDNA HS Assay). Do not rely on absorbance alone [1].

- Assess DNA integrity (e.g., via Genomic DNA ScreenTape or similar). For FFPE samples, note the degree of fragmentation.

- Dilute all samples to the required mass and volume in a 96-well plate using the liquid handler's automated dilution function.
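The normalization step is a C1V1 = C2V2 calculation for each well. This sketch uses hypothetical names, and `MIN_TRANSFER_UL` is an assumed lower limit for accurate transfers on your liquid handler. It computes sample and diluent volumes and flags wells that are too dilute or would require an unreliably small transfer.

```python
from dataclasses import dataclass
from typing import Optional

MIN_TRANSFER_UL = 1.0  # hypothetical lower limit for accurate liquid-handler transfers


@dataclass
class Transfer:
    well: str
    sample_ul: float
    diluent_ul: float
    warning: Optional[str] = None


def normalization_plan(concs_ng_ul: dict[str, float], target_ng: float,
                       final_ul: float) -> list[Transfer]:
    """Per-well sample and diluent volumes to reach target_ng in final_ul."""
    plan = []
    for well, conc in concs_ng_ul.items():
        if conc <= 0:
            plan.append(Transfer(well, 0.0, 0.0, "no measurable DNA"))
            continue
        sample_ul = target_ng / conc  # C1V1 = C2V2 expressed as mass
        if sample_ul > final_ul:
            # Too dilute: use the whole volume budget and report the shortfall.
            plan.append(Transfer(well, final_ul, 0.0,
                                 f"only {conc * final_ul:.1f} ng available"))
        elif sample_ul < MIN_TRANSFER_UL:
            plan.append(Transfer(well, sample_ul, final_ul - sample_ul,
                                 "transfer below reliable volume; pre-dilute sample"))
        else:
            plan.append(Transfer(well, sample_ul, final_ul - sample_ul))
    return plan
```

Flagged wells can be routed to manual review rather than silently loaded with less than the required input mass.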

Automated Library Construction (Hands-off):

- Transfer the normalized DNA plate to the deck of the liquid handler.

- The method automatically adds fragmentation and end-repair enzymes to each sample.

- Following incubation, the system adds ligation buffer and barcoded adapters to each reaction. The adapter-to-insert molar ratio is critical to ligation efficiency and adapter-dimer formation; robotic dispensing keeps it consistent across every well [12] [1].

- The workflow includes a bead-based cleanup step to stop the reaction and remove excess adapters.
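Setting the adapter-to-insert ratio requires converting insert mass to moles. The sketch below uses the 660 g/mol per bp approximation for dsDNA and the identity 1 µM = 1 pmol/µL. The function names are illustrative, and the ratio passed in should come from your kit's protocol or your own titration.

```python
def insert_pmol(mass_ng: float, mean_insert_bp: float) -> float:
    """pmol of dsDNA insert: ng x 1000 / (bp x 660 g/mol per bp)."""
    return mass_ng * 1000.0 / (mean_insert_bp * 660.0)


def adapter_volume_ul(mass_ng: float, mean_insert_bp: float,
                      adapter_to_insert_ratio: float, adapter_stock_um: float) -> float:
    """Volume of adapter stock giving the requested molar ratio (1 µM = 1 pmol/µL)."""
    adapter_pmol = insert_pmol(mass_ng, mean_insert_bp) * adapter_to_insert_ratio
    return adapter_pmol / adapter_stock_um
```

For example, 66 ng of 200 bp insert is 0.5 pmol; at a 10:1 ratio with a 5 µM adapter stock, the robot must dispense 1 µL. Halving the input mass halves the required adapter, which is why adapters are often pre-diluted for low-input samples rather than dispensed in sub-microliter volumes.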

Indexing PCR & Post-PCR Cleanup (Hands-off):

- The liquid handler adds a PCR master mix containing index primers to each sample.

- The plate is transferred by the robot to an integrated on-deck thermocycler for amplification [32].

- After cycling, the plate is retrieved, and a second bead-based cleanup is performed to remove PCR reagents and select for the desired library size.
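Bead cleanups and size selection are controlled by the bead-to-sample volume ratio. The sketch assumes that ratios are expressed relative to the original sample volume, as in common SPRI-style double-sided protocols; confirm this convention against your kit before programming the liquid handler. The example ratios in the usage note are hypothetical.

```python
def cleanup_bead_ul(sample_ul: float, ratio: float) -> float:
    """Bead volume for a single cleanup, e.g. ratio=1.8 for a 1.8x cleanup."""
    return sample_ul * ratio


def double_sided_bead_ul(sample_ul: float, first_ratio: float,
                         final_ratio: float) -> tuple[float, float]:
    """Bead additions for a two-step size selection.

    Step 1 adds first_ratio x sample volume; large fragments bind and are discarded
    with the beads, and the supernatant is kept. Step 2 adds fresh beads to reach
    final_ratio, still relative to the ORIGINAL sample volume, so the target library
    binds while short fragments such as adapter dimers stay in solution.
    """
    if not 0 < first_ratio < final_ratio:
        raise ValueError("need 0 < first_ratio < final_ratio")
    return sample_ul * first_ratio, sample_ul * (final_ratio - first_ratio)
```

With a 50 µL sample and hypothetical ratios of 0.6x then 0.8x, the liquid handler would add 30 µL and then 10 µL of beads; computing the second addition from the running total instead of the original volume is a common programming error.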

Automated Hybrid Capture (Hands-off):

- The normalized libraries are pooled by the liquid handler into a single tube or well.

- The system adds biotinylated probes (e.g., xGen Lockdown Probes) to the pooled library and transfers the mixture to the integrated thermocycler for hybridization.

- Streptavidin-coated magnetic beads are added, and the target-library complexes are bound to the beads. The liquid handler performs a series of wash steps to remove non-specifically bound DNA.

Final Amplification & QC (Hands-off):

- A final PCR amplifies the captured library. The robot performs a last bead-based cleanup.

- The final library is quantified by an automated qPCR assay, with reactions set up by the liquid handler, to determine the concentration of amplifiable molecules [12].

- The library is normalized and pooled with other samples for sequencing.
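qPCR quantification interpolates each library against a dilution series of standards, then corrects for size, because SYBR-based signal scales with fragment length. This sketch assumes standards in pM, a log-linear standard curve, and a size correction of standard length / library length. The standard length is an input you must take from your kit's documentation, and the function names are illustrative.

```python
import math
from statistics import mean


def fit_standard_curve(std_conc_pm: list[float], std_ct: list[float]) -> tuple[float, float]:
    """Least-squares fit of Ct = slope * log10(concentration) + intercept."""
    if len(std_conc_pm) != len(std_ct) or len(std_ct) < 2:
        raise ValueError("need at least two paired standards")
    xs = [math.log10(c) for c in std_conc_pm]
    x_bar, y_bar = mean(xs), mean(std_ct)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, std_ct))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def amplification_efficiency(slope: float) -> float:
    """Efficiency from the curve slope; 1.0 means perfect doubling each cycle."""
    return 10 ** (-1.0 / slope) - 1.0


def library_conc_nm(sample_ct: float, slope: float, intercept: float,
                    dilution_factor: float, standard_bp: float, library_bp: float) -> float:
    """Undiluted library molarity (nM) from one sample Ct."""
    conc_pm = 10 ** ((sample_ct - intercept) / slope)     # interpolate on the curve
    size_corrected = conc_pm * standard_bp / library_bp   # longer fragments give more signal per molecule
    return size_corrected * dilution_factor / 1000.0      # undo dilution; pM -> nM
```

Checking `amplification_efficiency` on every plate before accepting the results catches failed standard dilutions before they propagate into the normalization and pooling step.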

Maximizing Performance: Best Practices for Troubleshooting and Optimizing Automated NGS

FAQs and Troubleshooting Guides

Adapter Ligation Troubleshooting

Q: My sequencing results show a high percentage of adapter-dimer contamination. What are the primary causes and solutions?

Adapter dimers, often visible as a sharp peak near 70-90 bp on an electropherogram, can dominate a library and reduce usable sequencing data [1]. The following table outlines the common causes and corrective actions.

| Cause | Mechanism | Corrective Action |
|---|---|---|
| Suboptimal Adapter-to-Insert Ratio [1] | Excess adapters in the ligation reaction promote adapter-to-adapter ligation instead of adapter-to-insert ligation. | Titrate the adapter:insert molar ratio. Use a lower ratio to minimize dimer formation while maintaining ligation efficiency [1]. |
| Inefficient Ligation [1] | Poor ligase performance or reaction conditions reduce the rate of insert ligation, allowing adapter dimerization. | Ensure fresh ligase and buffer; maintain optimal temperature (~20°C for blunt-end); avoid heated lid interference; optimize incubation time [1]. |